ONTAP Discussions

- expected performance from CIFS (throughput)?


I have a question about CIFS on NetApp filers. When I enable CIFS on my vfilers, I get strangely low throughput from clients. For instance, copying a folder of 20k small files (for a website) from CIFS to local disk on a dedicated Windows 2008 R2 server runs at ~1.5MB/s or even less (it drops to 400KB/s). At first I thought it was because of the small files, but I ran the same test with the same folder between 2 physical servers (Windows 2008 R2) over UNC shares, and throughput was a constant 15MB/s (so 10x more).

OK, the disks are shared with other workloads (14x SATA disks in the aggregate, also used for NFS), but their average load is 60% per disk, so even so it should give much better performance...

I am confused about this speed and about what should be expected from this setup. Someone could say: change to FC disks. But these 2 physical servers also have SATA disks inside, and copying between them performs normally (as it should).

Can anyone point me in the right direction, or even better, tell me what should be expected from CIFS on NetApp? Maybe 1.5MB/s with small files is the most NetApp can push?

BTW, I did a test with a bigger file as well (2GB) and the copy ran at 20MB/s (better, but it still didn't max out the 1Gbit connection)... The same 2GB file went between the 2 servers at 100MB/s.

I hope someone can help with that thing...
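For reference, the rates quoted in this post can be put next to the raw capacity of a 1 Gbit/s link. This is only a rough sketch: it assumes 1 Gbit/s equals 125 MB/s and ignores Ethernet, TCP, and SMB overhead, so the real ceiling is somewhat lower. The labels are just descriptions of the four tests above.

```python
# Rough ceiling for a 1 Gbit/s link, ignoring protocol overhead (assumption).
LINK_BITS_PER_SEC = 1_000_000_000
LINK_MB_PER_SEC = LINK_BITS_PER_SEC / 8 / 1_000_000  # 125.0 MB/s

# Rates quoted in the post, in MB/s.
OBSERVED = {
    "filer, 20k small files": 1.5,
    "server to server, 20k small files": 15.0,
    "filer, 2GB file": 20.0,
    "server to server, 2GB file": 100.0,
}


def utilization(rate_mb_s, link_mb_s=LINK_MB_PER_SEC):
    """Fraction of the raw link capacity used by a given transfer rate."""
    return rate_mb_s / link_mb_s


for label, rate in OBSERVED.items():
    print(f"{label:35s} {rate:6.1f} MB/s  {utilization(rate):6.1%} of link")
```

On these numbers the large-file copy from the filer uses well under a quarter of the link, so the wire itself is unlikely to be the limit.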


Hello there,

I have seen some horrendous performance with CIFS on filers, and I am still trying to nail down an issue where an LACP vif gets 15Mb/s to some servers while a multimode vif works fine; LACP doesn't help load balancing at all. One thing to check (if you can) is changing the mode if you are using LACP. SMB2 is disabled by default on filers; turning it on will give you a boost for 2008 R2. Increasing the CIFS TCP window size can increase performance in some circumstances as well.

NetApp has told me in previous support calls regarding CIFS that NetApp's implementation of CIFS doesn't match Microsoft's and not to expect the same speed, but with SMB2, a chunky R710 with a decent Intel card can pull 100MB/s (on a good day without LACP turned on, or with it if I'm very lucky).


I just read this post now. Are you still having an issue with CIFS performance? If so, please collect a perfstat. 1.5MB/s seems too low.

Thanks,

Wei


Have you done any testing to verify that your NIC is configured properly? Depending on what type of NIC you are using (PCI vs onboard) that could be your issue.


We are having similar issues. The CIFS latency is low, but even when the system isn't heavily used we still can't seem to pass the 30MB/s mark.

I sent a perfstat in the past and they said everything looks good.


Which version of ONTAP are you using? I think a perfstat would still be useful. Thanks, -Wei


Using 7.3.5.1.

I'm discussing with my colleagues enabling SMB2 (we have a mixed Windows environment) and increasing the TCP window size (still reading up on this).

I'm waiting for a colleague to come back from vacation before I do the next perfstat, as he is likely to do a lot of data moves/copies.


Great. These are good steps. There are some parameters in the controller you can tune. Thanks, -Wei


I'm doing a bit of digging regarding TCP window size.

http://media.netapp.com/documents/tr-3869.pdf tells me to set cifs.tcp_window_size to 2096560,

but I can't find anything else on the net about that size. However, I find lots of people using 64240.
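One way to compare the two window sizes: a single TCP connection can have at most one window of unacknowledged data in flight per round trip, so window size divided by round-trip time gives an upper bound on throughput. The sketch below applies that bound to both values. The round-trip times are hypothetical, not measured on any filer, and SMB-level limits (request size, number of outstanding requests) can keep real throughput well below this bound.

```python
def max_throughput_mb_s(window_bytes, rtt_seconds):
    """Upper bound for one TCP connection: one full window per round trip."""
    return window_bytes / rtt_seconds / 1_000_000


WINDOWS = {
    "64240": 64240,
    "2096560 (TR-3869)": 2096560,
}
RTTS_MS = [0.2, 1.0, 5.0]  # hypothetical round-trip times, for illustration only

for name, window in WINDOWS.items():
    for rtt_ms in RTTS_MS:
        cap = max_throughput_mb_s(window, rtt_ms / 1000)
        print(f"window {name:18s} rtt {rtt_ms:4.1f} ms  cap {cap:9.1f} MB/s")
```

On a LAN with sub-millisecond round trips, even the smaller window allows more than a 1 Gbit/s link can carry, so the larger value should matter mostly on higher-latency paths.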


My colleague Chowdary answered the following questions:

- What's the recommendation for tuning cifs.tcp_window_size?

[Chowdary] With SMB2 the size is 2MB (2097152).

- What are the best practices to get good CIFS throughput?

[Chowdary] 1. Enabling SMB 2 on the controller and using SMB 2 enabled clients gives better performance than SMBv1.

2. Make sure there is not much latency between the domain controllers and the controller.

3. Make sure that no stale DCs are listed under the preferred DCs.


So far we've enabled SMB2. On XP nothing has changed (as expected), but on Windows 7 desktops I've noticed a read performance increase, almost double in some cases.

The next step is to change the TCP window size.


That's great! Thanks, -Wei


So I just set up a V3140 with ONTAP 8.1P1.

Config:

The root vol is on its own RG, 2+1 (RAID 5), SAS drives.

aggr1 has 5 LUNs from a 20+2 (RAID 6) SAS RG. Each LUN is 2TB (1952GB for HDS, based on the NetApp doc).

Results:

| client | default | mtu 9000 | tcp 64240 |
| --- | --- | --- | --- |
| xp | 1m19s | 1m5s | 1m10s |
| xp | 1m6s | 1m4s | 1m14s |
| xp | 1m8s | 1m4s | 1m5s |
| w7 | 48s | 42s | 40s |
| w7 | 42s | 41s | 40s |
| w7 | 48s | 40s | 42s |
| w2k8 | 27s | 27s | 31s |
| w2k8 | 29s | 30s | 31s |
| w2k8 | 28s | 26s | 27s |

Notes:

- smb2 enabled
- mpx 1124
- buf 64k
- 472 files, 20 folders
- size on disk 2.39 GB (2,571,423,744 bytes)
- values are copy times; time is not exact (+/- 2s)
- desktops limited to 1g link

Is this a normal performance expectation?

On a Windows 7 machine with an SSD I was able to get 100MB/s (basically maxing out the 1Gb/s link of the switch).

On W2K8 with a RAID 5 (not sure of the # of disks) I would get about the same.

The peak it ever reached was 130MB/s, and that didn't last long (this was tested on a single 8GB file).
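To make the timings easier to compare with the MB/s figures quoted elsewhere in the thread, they can be converted to approximate throughput. This is a sketch only: it assumes the "size on disk" figure from the notes (2,571,423,744 bytes) is close to the amount of data actually transferred, and the timings themselves are only accurate to about 2 seconds.

```python
import re
from statistics import mean

SIZE_BYTES = 2_571_423_744  # "size on disk" from the notes; the payload may differ slightly
COLUMNS = ("default", "mtu 9000", "tcp 64240")

# Copy times from the results table: one tuple per run, one entry per column.
RUNS = {
    "xp": [("1m19s", "1m5s", "1m10s"), ("1m6s", "1m4s", "1m14s"), ("1m8s", "1m4s", "1m5s")],
    "w7": [("48s", "42s", "40s"), ("42s", "41s", "40s"), ("48s", "40s", "42s")],
    "w2k8": [("27s", "27s", "31s"), ("29s", "30s", "31s"), ("28s", "26s", "27s")],
}


def to_seconds(text):
    """Parse durations written like '1m19s' or '48s'."""
    match = re.fullmatch(r"(?:(\d+)m)?(\d+)s", text)
    if match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    return int(match.group(1) or 0) * 60 + int(match.group(2))


def mb_per_s(seconds, size_bytes=SIZE_BYTES):
    """Average throughput in MB/s (1 MB = 1,000,000 bytes)."""
    return size_bytes / seconds / 1_000_000


for client, rows in RUNS.items():
    for index, column in enumerate(COLUMNS):
        avg_s = mean(to_seconds(row[index]) for row in rows)
        print(f"{client:5s} {column:10s} avg {avg_s:5.1f}s  ~{mb_per_s(avg_s):5.1f} MB/s")
```

Under that assumption, a 27 second copy works out to roughly 95 MB/s, which is near what a 1 Gbit/s link can deliver.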


Hi Wei

It's over a year since you made the following statement:

with SMB2 the size is 2MB (2097152)

In TR-3869 the value is 2096560. Which one is correct, or doesn't it matter which value I take?

Thanks

Thomas


Hi Thomas,

We are talking about a small detail here, but I believe TR-3869 is correct. Please refer to the Microsoft KB on TCP window size (http://support.microsoft.com/?id=224829). Let's say the intention is to set tcp_window_size to 2MB, or 2097152 bytes. According to Q224829, the scale factor is 5 and the scaled window is 2097120 (65535 × 2^5). However, the TCP segment payload is 1460 bytes and we want tcp_window_size to be an even multiple of that size, thus 2096560 (1436 × 1460).
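The arithmetic behind that value can be checked with a few lines of Python. This is just a sketch of the calculation described here, taking the scale factor of 5 as given and assuming the usual 1460-byte segment payload for a 1500-byte MTU; the constant and function names are made up for the example.

```python
MAX_UNSCALED_WINDOW = 65535  # largest value of the 16-bit TCP window field
SCALE_FACTOR = 5             # scale factor quoted from Q224829 for a ~2MB window
MSS = 1460                   # TCP payload per segment, assuming a 1500-byte MTU


def scaled_window(scale_factor=SCALE_FACTOR):
    """Largest window that can be advertised with the given scale factor."""
    return MAX_UNSCALED_WINDOW << scale_factor


def align_down(window_bytes, mss=MSS):
    """Round a window down to a whole number of segments."""
    return (window_bytes // mss) * mss


window = scaled_window()
aligned = align_down(window)
print(f"scaled window : {window}")
print(f"aligned window: {aligned} ({aligned // MSS} segments of {MSS} bytes)")
print(f"64240 is {64240 // MSS} segments, remainder {64240 % MSS}")
```

The same alignment also explains the commonly seen 64240, which is 44 full segments of 1460 bytes.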

Thanks,

Wei


Hi Wei

Thanks for your quick answer and your explanations. I know it's a small detail, but sometimes such things can have a significant impact.

That's why I want to be sure I set the right values.

Regards, and have a nice weekend

Thomas


Good point. I agree. Have a nice weekend, -Wei


This might be of some help. I found it over here:

Checklist for troubleshooting CIFS issues

• Use "sysstat -x 1" to determine how many CIFS ops/s and how much CPU is being utilized

• Check /etc/messages for any abnormal messages, especially for oplock break timeouts

• Use "perfstat" to gather data and analyze (note information from "ifstat", "statit", "cifs stat", and "smb_hist", messages, general cifs info)

• "pktt" may be necessary to determine what is being sent/received over the network

• "sio" should / could be used to determine how fast data can be written/read from the filer

• Client troubleshooting may include review of event logs, ping of filer, test using a different filer or Windows server

• If it is a network issue, check "ifstat -a", "netstat -in" for any I/O errors or collisions

• If it is a gigabit issue, check to see if the flow control is set to FULL on the filer and the switch

• On the filer, if it is one volume having an issue, do "df" to see if the volume is full

• Do "df -i" to see if the filer is running out of inodes

• From "statit" output, if it is one volume that is having an issue, check for disk fragmentation

• Try the "netdiag -dv" command to test filer side duplex mismatch

• It is important to find out what the benchmark is and if it's a reasonable one

• If the problem is poor performance, try a simple file copy using Explorer and compare it with the application's performance. If they both are the same, the issue probably is not the application. Rule out client problems and make sure it is tested on multiple clients. If it is an application performance issue, get all the details about:

  ◦ The version of the application

  ◦ What specifics of the application are slow, if any

  ◦ How the application works

  ◦ Is this equally slow while using another Windows server over the network?

  ◦ The recipe for reproducing the problem in a NetApp lab

• If the slowness only happens at certain times of the day, check if the times coincide with other heavy activity like SnapMirror, Snapshots, dump, etc. on the filer

• If normal file reads/writes are slow:

  ◦ Check duplex mismatch (both client side and filer side)

  ◦ Check if oplocks are used (assuming they are turned off)

  ◦ Check if there is an anti-virus application running on the client. This can cause performance issues, especially when copying multiple small files

  ◦ Check "cifs stat" to see if the Max Multiplex value is near the cifs.max_mpx option value. Common situations where this may need to be increased are when the filer is being used by a Windows Terminal Server or any other kind of server that might have many users opening new connections to the filer

  ◦ Check the value of OpLkBkNoBreakAck in "cifs stat". Non-zero numbers indicate oplock break timeouts, which cause performance problems
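The last two checks are easy to automate once the "cifs stat" and "options cifs" output has been saved to text. The sketch below is one possible way to do it. The sample lines only mimic the format those commands print, and the 90% threshold for "near" is an arbitrary assumption, not a NetApp recommendation; the function names are made up for the example.

```python
import re

# Sample lines in the format printed by "cifs stat" and "options cifs".
SAMPLE_CIFS_STAT = """\
OpLkBkNoBreakAck 2
OpLkBkNoBreakAck95 0
Max Multiplex = 47, Max pBlk Exhaust = 0, Max pBlk Reserve Exhaust = 0
"""
SAMPLE_OPTIONS = "cifs.max_mpx 50\n"

NEAR_RATIO = 0.9  # assumption: treat 90% of cifs.max_mpx as "near"


def find_int(pattern, text):
    """Return the first captured integer for pattern, or None if absent."""
    match = re.search(pattern, text, re.MULTILINE)
    return int(match.group(1)) if match else None


def check_cifs_stat(stat_text, options_text, near_ratio=NEAR_RATIO):
    """Apply the Max Multiplex and OpLkBkNoBreakAck checks from the checklist."""
    findings = []
    max_multiplex = find_int(r"Max Multiplex\s*=\s*(\d+)", stat_text)
    max_mpx = find_int(r"^cifs\.max_mpx\s+(\d+)", options_text)
    if max_multiplex is not None and max_mpx:
        if max_multiplex >= near_ratio * max_mpx:
            findings.append(
                f"Max Multiplex {max_multiplex} is close to cifs.max_mpx {max_mpx}"
            )
    no_ack = find_int(r"^OpLkBkNoBreakAck\s+(\d+)", stat_text)
    if no_ack:
        findings.append(f"OpLkBkNoBreakAck is {no_ack}: oplock break timeouts seen")
    return findings


for finding in check_cifs_stat(SAMPLE_CIFS_STAT, SAMPLE_OPTIONS):
    print(finding)
```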

Message was edited by: Dave Greenfield


That's a good checklist, but based on what I have seen, CIFS in general isn't a fast protocol.


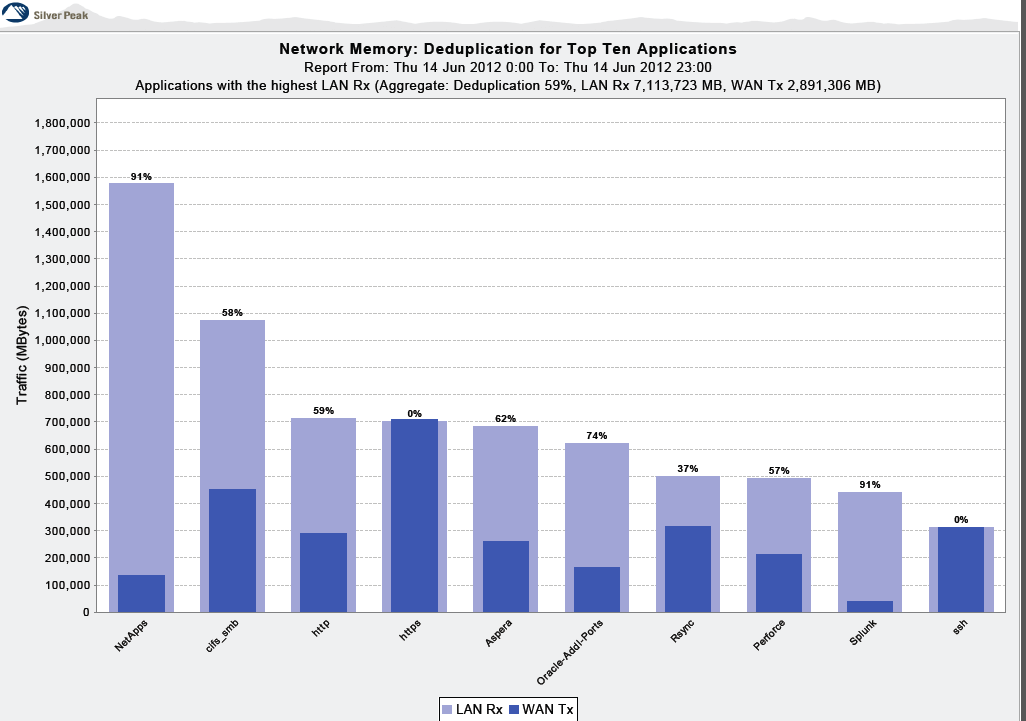

CIFS has a number of problems, but chattiness has to be the biggest issue by far. By using read-ahead and write-behind along with metadata caching, CIFS performance can be more than quintupled. Here's a performance graph from one network I just happened to have. You'll see NetApp / CIFS gets the highest reduction of any application, and these numbers are probably low, actually. I've seen CIFS reductions of over 96%.


Hello to all,

I'm new to NetApp; I recently bought a FAS2020 with 12x1GB SATA HDD.

I have several CIFS performance problems, even though I have a very small network (80-100 clients and about 20 servers).

I've seen the poor performance in some Excel sheets where calculations are made across nested files (an Excel file that refers to another Excel file, etc.).

On my previous Windows file server the calculation took about 15 sec, for example, and now it takes up to 1 min or more!

Also, when I use a CIFS share through a Win2003 terminal server, it takes too long to open it and read the files (sometimes up to 30 sec just to open the shared folder!).

In my previous Windows environment, that action was immediate...

Also, sometimes when multiple users access a file, I see "ghost files" in that folder with random alphanumeric names, like A02350 (no extension), which make the file inaccessible to the 2nd user (I had to make a copy and use the copied file instead).

The above situations happen at all times of the day and have nothing to do with, e.g., snapshot hours.

(I tried stopping the snapshots, but nothing changed...)

Can anyone suggest anything for these problems?

I saw some hints in the forum, but I don't know what options/values to edit.

I don't know what value to set for the window size, or whether it is OK to enable/disable oplocks...

My clients are 90% Windows XP, and I'm using a Windows 2003 Active Directory.

Any help would be greatly appreciated,

Regards,

Panagiotis

PS. I enclose my cifs stat and options cifs output below, in case it helps.

My CIFS stats are as follows:

**************************************************

nireas1-netapp-up*> cifs stat

reject 0 0%

mkdir 0 0%

rmdir 2957 0%

open 0 0%

create 0 0%

close 25207932 10%

X&close 0 0%

flush 253214 0%

X&flush 0 0%

delete 69843 0%

rename 149527 0%

NTRename 0 0%

getatr 3505 0%

setatr 0 0%

read 0 0%

X&read 0 0%

write 18559 0%

X&write 0 0%

lock 0 0%

unlock 0 0%

mknew 0 0%

chkpth 3505 0%

exit 0 0%

lseek 0 0%

lockread 0 0%

X&lockread 0 0%

writeunlock 0 0%

readbraw 0 0%

writebraw 0 0%

writec 0 0%

gettattre 0 0%

settattre 0 0%

lockingX 1761438 1%

IPC 1028131 0%

open2 0 0%

find_first2 9340863 4%

find_next2 67292 0%

query_fs_info 4044916 2%

query_path_info 32781575 14%

set_path_info 0 0%

query_file_info 14894300 6%

set_file_info 13129809 5%

create_dir2 0 0%

Dfs_referral 595908 0%

Dfs_report 0 0%

echo 1572431 1%

writeclose 0 0%

openX 0 0%

readX 49309904 20%

writeX 37717047 16%

findclose 0 0%

tcon 0 0%

tdis 196739 0%

negprot 84063 0%

login 266793 0%

logout 164204 0%

tconX 232783 0%

dskattr 0 0%

search 0 0%

fclose 1565 0%

NTCreateX 45017423 19%

NTTransCreate 2 0%

NTTransIoctl 725213 0%

NTTransNotify 683162 0%

NTTransSetSec 2621351 1%

NTTransQuerySec 321274 0%

NTNamedPipeMulti 0 0%

NTCancel CN 147226 0%

NTCancel Other 11 0%

SMB2Echo 0 0%

SMB2Negprot 0 0%

SMB2TreeConnnect 0 0%

SMB2TreeDisconnect 0 0%

SMB2Login 0 0%

SMB2Create 0 0%

SMB2Read 0 0%

SMB2Write 0 0%

SMB2Lock 0 0%

SMB2Unlock 0 0%

SMB2OplkBrkAck 0 0%

SMB2ChgNfy 0 0%

SMB2CLose 0 0%

SMB2Flush 0 0%

SMB2Logout 0 0%

SMB2Cancel 0 0%

SMB2IPCCreate 0 0%

SMB2IPCRead 0 0%

SMB2IPCWrite 0 0%

SMB2QueryDir 0 0%

SMB2QueryFileBasicInfo 0 0%

SMB2QueryFileStndInfo 0 0%

SMB2QueryFileIntInfo 0 0%

SMB2QueryFileEAInfo 0 0%

SMB2QueryFileFEAInfo 0 0%

SMB2QueryFileModeInfo 0 0%

SMB2QueryAltNameInfo 0 0%

SMB2QueryFileStreamInfo 0 0%

SMB2QueryNetOpenInfo 0 0%

SMB2QueryAttrTagInfo 0 0%

SMB2QueryAccessInfo 0 0%

SMB2QueryFileUnsupported 0 0%

SMB2QueryFileInvalid 0 0%

SMB2QueryFSVolInfo 0 0%

SMB2QueryFSSizeInfo 0 0%

SMB2QueryFSDevInfo 0 0%

SMB2QueryFSAttrInfo 0 0%

SMB2QueryFSFullSzInfo 0 0%

SMB2QueryFSObjIdInfo 0 0%

SMB2QueryFSInvalid 0 0%

SMB2QuerySecurityInfo 0 0%

SMB2SetBasicInfo 0 0%

SMB2SetRenameInfo 0 0%

SMB2SetFileLinkInfo 0 0%

SMB2SetFileDispInfo 0 0%

SMB2SetFullEAInfo 0 0%

SMB2SetModeInfo 0 0%

SMB2SetAllocInfo 0 0%

SMB2SetEOFInfo 0 0%

SMB2SetUnsupported 0 0%

SMB2SetInfoInvalid 0 0%

SMB2SetSecurityInfo 0 0%

SMB2FsctlPipeTransceive 0 0%

SMB2FsctlPipePeek 0 0%

SMB2FsctlEnumSnapshots 0 0%

SMB2FsctlDfsReferrals 0 0%

SMB2FsctlSetSparse 0 0%

SMB2FsctlSecureShare 0 0%

SMB2FsctlFileUnsupported 0 0%

SMB2FsctlIpcUnsupported 0 0%

cancel lock 0

wait lock 0

copy to align 167809

alignedSmall 353803

alignedLarge 187596

alignedSmallRel 0

alignedLargeRel 0

FidHashAllocs 657

TidHashAllocs 47

UidHashAllocs 0

mbufWait 0

nbtWait 0

pBlkWait 0

BackToBackCPWait 0

cwaWait 0

short msg prevent 15

multipleVCs 135226

SMB signing 0

mapped null user 0

PDCupcalls 0

nosupport 0

read pipe busy 0

write pipe busy 0

trans pipe busy 0

read pipe broken 0

write pipe broken 0

trans pipe broken 0

queued writeraw 0

nbt disconnect 66326

smb disconnect 17556

dup disconnect 185

OpLkBkXorBatchToL2 383611

OpLkBkXorBatchToNone 10

OpLkBkL2ToNone 44763

OpLkBkNoBreakAck 2

OpLkBkNoBreakAck95 0

OpLkBkNoBreakAckNT 2

OpLkBkIgnoredAck 0

OpLkBkWaiterTimedOut 0

OpLkBkDelayedBreak 0

SharingErrorRetries 4088

FoldAttempts 0

FoldRenames 0

FoldRenameFailures 0

FoldOverflows 0

FoldDuplicates 0

FoldWAFLTooBusy 0

NoAllocCredStat 0

RetryRPCcollision 0

TconCloseTID 0

GetNTAPExtAttrs 0

SetNTAPExtAttrs 0

SearchBusy 0

ChgNfyNoMemory 0

ChgNfyNewWatch 116806

ChgNfyLastWatch 116793

UsedMIDTblCreated 0

UnusedMIDTblCreated 0

InvalidMIDRejects 0

SMB2InvalidSignature 0

SMB2DurableCreateReceived 0

SMB2DurableCreateSucceeded 0

SMB2DurableReclaimReceived 0

SMB2DurableReclaimSucceeded 0

SMB2DurableHandlePreserved 0

SMB2DurableHandlePurged 0

SMB2DurableHandleExpired 0

SMB2FileDirInfo 0

SMB2FileFullDirInfo 0

SMB2FileIdFullDirInfo 0

SMB2FileBothDirInfo 0

SMB2FileIdBothDirInfo 0

SMB2FileNamesInfo 0

SMB2FileDirUnsupported 0

SMB2QueryInfo 0

SMB2SetInfo 0

SMB2Ioctl 0

SMB2RelatedCompRequest 0

SMB2UnRelatedCompRequest 0

SMB2FileRequest 0

SMB2PipeRequest 0

SMB2nosupport 0

Max Multiplex = 47, Max pBlk Exhaust = 0, Max pBlk Reserve Exhaust = 0

Max FIDs = 452, Max FIDs on one tree = 197

Max Searches on one tree = 6, Max Core Searches on one tree = 0

Max sessions = 91

Max trees = 316

Max shares = 157

Max session UIDs = 3, Max session TIDs = 153

Max locks = 809

Max credentials = 245

Max group SIDs per credential = 14

Max pBlks = 896 Current pBlks = 896 Num Logons = 0

Max reserved pBlks = 32 Current reserved pBlks = 32

Max gAuthQueue depth = 3

Max gSMBBlockingQueue depth = 4

Max gSMBTimerQueue depth = 4

Max gSMBAlfQueue depth = 1

Max gSMBRPCWorkerQueue depth = 4

Max gOffloadQueue depth = 2

Local groups: builtins = 6, user-defined = 2, SIDs = 4

RPC group count = 10, RPC group active count = 0

Max Watched Directories = 101, Current Watched Directories = 19

Max Pending ChangeNotify Requests = 102, Current Pending ChangeNotify Requests = 19

Max Pending DeleteOnClose Requests = 2688, Current Pending DeleteOnClose Requests = 0

**************************************************

***************************************************

nireas1-netapp-up*> options cifs

cifs.LMCompatibilityLevel 1

cifs.audit.account_mgmt_events.enable off

cifs.audit.autosave.file.extension

cifs.audit.autosave.file.limit 0

cifs.audit.autosave.onsize.enable off

cifs.audit.autosave.onsize.threshold 75%

cifs.audit.autosave.ontime.enable off

cifs.audit.autosave.ontime.interval 1d

cifs.audit.enable off

cifs.audit.file_access_events.enable on

cifs.audit.liveview.allowed_users

cifs.audit.liveview.enable off

cifs.audit.logon_events.enable on

cifs.audit.logsize 1048576

cifs.audit.nfs.enable off

cifs.audit.nfs.filter.filename

cifs.audit.saveas /etc/log/adtlog.evt

cifs.bypass_traverse_checking on

cifs.client.dup-detection ip-address

cifs.comment

cifs.enable_share_browsing on

cifs.gpo.enable off

cifs.gpo.trace.enable off

cifs.grant_implicit_exe_perms off

cifs.guest_account

cifs.home_dir_namestyle

cifs.home_dirs_public_for_admin on

cifs.idle_timeout 900

cifs.ipv6.enable off

cifs.max_mpx 50

cifs.ms_snapshot_mode xp

cifs.netbios_aliases

cifs.netbios_over_tcp.enable off

cifs.nfs_root_ignore_acl off

cifs.oplocks.enable on

cifs.oplocks.opendelta 0

cifs.per_client_stats.enable off

cifs.perm_check_ro_del_ok off

cifs.perm_check_use_gid on

cifs.preserve_unix_security off

cifs.restrict_anonymous 0

cifs.restrict_anonymous.enable off

cifs.save_case on

cifs.scopeid

cifs.search_domains

cifs.show_dotfiles on

cifs.show_snapshot on

cifs.shutdown_msg_level 2

cifs.sidcache.enable on

cifs.sidcache.lifetime 1440

cifs.signing.enable off

cifs.smb2.client.enable off

cifs.smb2.durable_handle.enable on

cifs.smb2.durable_handle.timeout 16m

cifs.smb2.enable off

cifs.smb2.signing.required off

cifs.snapshot_file_folding.enable off

cifs.symlinks.cycleguard on

cifs.symlinks.enable on

cifs.trace_dc_connection off

cifs.trace_login off

cifs.universal_nested_groups.enable on

cifs.weekly_W2K_password_change off

cifs.widelink.ttl 10m

cifs.wins_servers 10.1.1.10

nireas1-netapp-up*>

****************************************************
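As a rough illustration of the "chattiness" point raised earlier in the thread, the largest counters from the cifs stat output above can be split into data operations (readX, writeX) and everything else. This is only a sketch: it uses the counters that show 1% or more in the listing rather than the full set, and calling all non-read/write operations "metadata or control" is a simplification made for this example.

```python
# Largest counters copied from the "cifs stat" output (those showing 1% or more).
COUNTERS = {
    "close": 25207932,
    "lockingX": 1761438,
    "IPC": 1028131,
    "find_first2": 9340863,
    "query_fs_info": 4044916,
    "query_path_info": 32781575,
    "query_file_info": 14894300,
    "set_file_info": 13129809,
    "echo": 1572431,
    "readX": 49309904,
    "writeX": 37717047,
    "NTCreateX": 45017423,
    "NTTransSetSec": 2621351,
}

DATA_OPS = {"readX", "writeX"}  # simplification: everything else counts as metadata/control


def data_share(counters, data_ops=DATA_OPS):
    """Fraction of the listed operations that actually move file data."""
    total = sum(counters.values())
    data = sum(count for name, count in counters.items() if name in data_ops)
    return data / total


share = data_share(COUNTERS)
print(f"data ops    : {share:.1%} of listed operations")
print(f"non-data ops: {1 - share:.1%} of listed operations")
```

With these counters, well over half of the requests are opens, closes, and attribute queries rather than reads or writes, which fits a workload of many small files and nested Excel references.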