ONTAP Hardware

Network Port Roles for FAS2240 With SFP+ Running cDOT 8.2.1

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

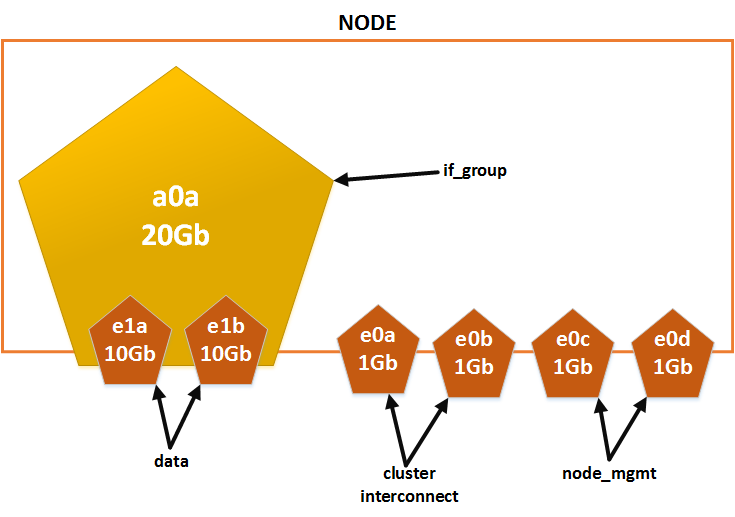

We're in the process of rolling out a FAS2240 running cDOT 8.2.1 that has 2 x SFP+ (e1a, e1b) and 4 x 1Gb (e0a - e0d) onboard. Being new to the cDOT platform, we want to make sure we follow best practices as closely as possible (e.g. redundant links) before we put it into production. Does the per-node physical port layout below look correct?

e1a, e1b - data

e0a, e0b - cluster

e0c, e0d - node_mgmt


You must use 10G ports for the cluster interconnect. Your configuration will technically work, but it probably won't be supported.


So the best we can do while staying within support is the layout below?

e1a, e1b - cluster

e0a, e0b, e0c - data

e0d, e0M - node_mgmt
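The rule from the first reply (cluster interconnect ports must be 10Gb) can be expressed as a quick check against a proposed layout. This is a minimal sketch, not a NetApp tool: the `Port` type and `check_layout` function are hypothetical, and the "fewer than two cluster ports" warning is only a redundancy preference, since a single cluster link is also mentioned later in the thread as supported on FAS22xx. e0M is left out because the check only concerns cluster ports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    name: str
    speed_gbps: int
    role: str  # "cluster", "data" or "node_mgmt", as in the layouts above


def check_layout(ports: list[Port]) -> list[str]:
    """Return human-readable problems with a proposed per-node port layout."""
    problems = []
    cluster = [p for p in ports if p.role == "cluster"]
    # Rule from this thread: cluster interconnect ports must be 10Gb.
    for p in cluster:
        if p.speed_gbps < 10:
            problems.append(f"{p.name}: cluster port is {p.speed_gbps}Gb, needs 10Gb")
    # Redundancy preference only: one cluster link gives no failover path.
    if len(cluster) < 2:
        problems.append("fewer than two cluster ports: no redundant cluster link")
    return problems


original = [
    Port("e1a", 10, "data"), Port("e1b", 10, "data"),
    Port("e0a", 1, "cluster"), Port("e0b", 1, "cluster"),
    Port("e0c", 1, "node_mgmt"), Port("e0d", 1, "node_mgmt"),
]
revised = [
    Port("e1a", 10, "cluster"), Port("e1b", 10, "cluster"),
    Port("e0a", 1, "data"), Port("e0b", 1, "data"), Port("e0c", 1, "data"),
    Port("e0d", 1, "node_mgmt"),
]
print(check_layout(original))  # ['e0a: cluster port is 1Gb, needs 10Gb', 'e0b: cluster port is 1Gb, needs 10Gb']
print(check_layout(revised))   # []
```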


Correct. A single 10Gb cluster connection per controller is also supported (on FAS22xx only), so you could use the other 10Gb port for data, but I don't like that for a production system.


In your experience, is the performance difference for NFS ESX datastores (20-30 moderate-usage VMs) negligible between 1 x 10Gb and 4 x 1Gb LACP? The VMs in question are fairly mundane (e.g. no SQL or high-precision timing VMs).

I realize the single-cluster-link layout below is a bit of a kludge in production, but we're trying to get the best NFS performance possible for our 20-30 ESXi VMs to the FAS2240 (all 4TB SATA with a 200GB Flash Pool).

e1a, (e0d) - cluster (cluster failover-group)

e1b, (e0a, e0b) - data (data failover-group)

e0c, e0M - node_mgmt
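The parenthesized ports in this layout are fallbacks within a failover group. The idea of an ordered failover group can be sketched as "use the first port in the list whose link is up". This is a simplified model under that assumption only; `active_port` is a hypothetical helper and does not reproduce ONTAP's actual failover policies or home-port behavior.

```python
from typing import Optional


def active_port(failover_group: list[str], link_up: dict[str, bool]) -> Optional[str]:
    """Return the first port in the ordered failover group whose link is up."""
    for port in failover_group:
        if link_up.get(port, False):
            return port
    return None  # every port in the group is down


cluster_group = ["e1a", "e0d"]      # 10Gb primary, 1Gb fallback
data_group = ["e1b", "e0a", "e0b"]  # 10Gb primary, 1Gb fallbacks

print(active_port(cluster_group, {"e1a": True, "e0d": True}))              # e1a
print(active_port(cluster_group, {"e1a": False, "e0d": True}))             # e0d
print(active_port(data_group, {"e1b": False, "e0a": False, "e0b": True}))  # e0b
```

Note that in this layout the fallback case for the cluster group is exactly when cluster traffic would run on a 1Gb port.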


It doesn't look like you will be doing a lot of sequential reads/writes, so it is highly unlikely you will ever be able to saturate a 4x1GbE link.

However, if you opt for a 4x1GbE ifgroup, remember that a single NFS datastore will only ever use a single 1GbE link, so to really increase bandwidth you need to split VMs across multiple datastores.

Regards,

Radek
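The "one datastore, one link" behavior comes from the ifgroup choosing a member port by hashing the traffic's addresses, so every packet between the same pair of addresses takes the same link. The sketch below assumes an IP-based distribution function and that each datastore is mounted through its own LIF IP address; the XOR hash, the addresses, and `pick_member_port` are illustrative assumptions, not ONTAP's actual implementation.

```python
import ipaddress


def pick_member_port(client_ip: str, lif_ip: str, ports: list[str]) -> str:
    """Choose one ifgroup member port for a client/LIF address pair.

    Hypothetical hash (XOR of the two addresses, modulo the port count); the real
    distribution function may differ. What matters is that the result depends
    only on the address pair, so every packet of that pair uses the same link.
    """
    client = int(ipaddress.ip_address(client_ip))
    lif = int(ipaddress.ip_address(lif_ip))
    return ports[(client ^ lif) % len(ports)]


members = ["e0a", "e0b", "e0c", "e0d"]
esxi_host = "10.0.0.11"  # hypothetical ESXi vmkernel address

# One datastore behind one LIF: always the same 1GbE link.
print(pick_member_port(esxi_host, "10.0.0.101", members))  # e0c

# Four datastores, each mounted through its own LIF address.
for lif_ip in ["10.0.0.101", "10.0.0.102", "10.0.0.103", "10.0.0.104"]:
    print(lif_ip, pick_member_port(esxi_host, lif_ip, members))  # e0c, e0b, e0a, e0d
```

With these particular addresses the four datastores happen to land on four different links; with other addresses two can hash to the same link, so spreading VMs over several datastores improves the odds of using all links rather than guaranteeing it.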


Depending on the number of clients and the workload, using individual physical ports with load balancing may lead to better overall utilization.
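One way to read this suggestion is to give each 1Gb port its own data LIF and spread datastores across those LIFs by expected load. The sketch below uses a standard greedy "heaviest item to the least-loaded bin" heuristic; it is an assumption about how one might plan the spread by hand, not an ONTAP feature, and the datastore names and load figures are hypothetical.

```python
import heapq


def place_datastores(loads: dict[str, float], ports: list[str]) -> dict[str, list[str]]:
    """Greedy least-loaded placement: heaviest datastore first, onto the port
    (that port's data LIF) with the smallest total load so far."""
    heap = [(0.0, i, port) for i, port in enumerate(ports)]  # (load, tie-break, port)
    heapq.heapify(heap)
    placement: dict[str, list[str]] = {port: [] for port in ports}
    for name, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        total, i, port = heapq.heappop(heap)
        placement[port].append(name)
        heapq.heappush(heap, (total + load, i, port))
    return placement


# Hypothetical average loads in Mb/s per datastore.
loads = {"ds1": 300, "ds2": 250, "ds3": 200, "ds4": 150, "ds5": 100}
print(place_datastores(loads, ["e0a", "e0b", "e0c", "e0d"]))
# {'e0a': ['ds1'], 'e0b': ['ds2'], 'e0c': ['ds3'], 'e0d': ['ds4', 'ds5']}
```

Unlike a hashed ifgroup, this placement is chosen explicitly, so no two busy datastores end up sharing a link by accident.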


You do not need two node management ports; use all four 1Gb ports for data.


Thanks aborzenkov. Since we have around two dozen moderate-usage ESXi VMs accessing the FAS2240 over NFS, I'm not sure 4 x 1Gb LACP will be better than 1 x 10Gb. I'd hate to roll out with 4 x 1Gb and have it become a performance bottleneck later on.
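Combining the question with Radek's point that one NFS datastore stays on one link gives a simple way to compare the two options on link capacity alone. This is back-of-the-envelope arithmetic under stated assumptions: each datastore is a single flow pinned to one member link, flows land on distinct links while links remain (the best case), and protocol overhead, disk, and CPU limits are ignored. Whether the workload ever approaches these ceilings is the separate question raised in the replies.

```python
def throughput_ceilings(link_gbps: float, link_count: int, datastore_count: int) -> tuple[float, float]:
    """Return (per-datastore ceiling, aggregate ceiling) in Gb/s.

    Assumptions: each datastore is one flow pinned to one member link, flows
    occupy distinct links while links remain (best case), overheads ignored.
    """
    per_datastore = float(link_gbps)
    busy_links = min(datastore_count, link_count)
    return per_datastore, busy_links * float(link_gbps)


print(throughput_ceilings(1, 4, 1))   # (1.0, 1.0)   4 x 1Gb LACP, one datastore
print(throughput_ceilings(1, 4, 4))   # (1.0, 4.0)   4 x 1Gb LACP, four datastores
print(throughput_ceilings(10, 1, 1))  # (10.0, 10.0) 1 x 10Gb, one datastore
```

The difference shows up mainly per datastore: with 4 x 1Gb no single datastore can exceed one link, while the aggregate only reaches 4Gb when the load is spread over at least four datastores.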