ONTAP Hardware

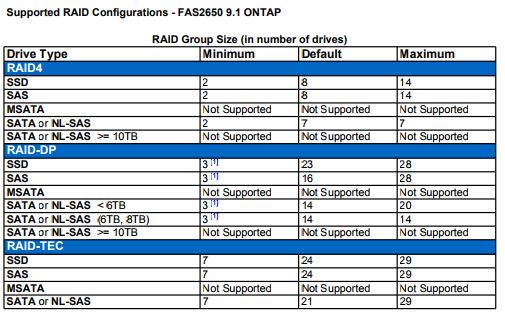

On the FAS2650, raid-dp has a limitation of 14 disks.

The professional installer had one aggregate with 20 disks which belong to two raid groups.

rg0 group has 14 disks

rg1 group has 6 disks.

Would it be better to rebalance the two groups so they are equal, e.g. 10 disks per RAID group? If I made that change, would data be lost?

My other (2nd) aggregate has 13 disks, all in one RAID group.

Thanks,

SVO

Solved! See The Solution


Hi, sorry for the delay in replying.

In terms of performance, there will be a negligible difference between the two RAID types. Larger RAID groups let you write larger stripes, which is always a benefit; however, RAID-TEC was introduced to reduce the risk that comes with the longer reconstruction times of these larger drives. If a third disk were to fail in the same RAID-DP RAID group while the first and second were still being reconstructed, the multi-disk failure would likely take the aggregate offline. This risk can be reduced by making the RAID groups smaller, so there is less chance of a further disk failing in the same group, but fewer data disks means performance may suffer. RAID-TEC allows 3 disks to fail while keeping the aggregate online, and also allows larger RAID groups to be used. RAID-TEC is the default and recommended RAID protection for disks >6TB and mandatory for disks >10TB.
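To make the trade-off concrete, here is a minimal Python sketch comparing the layouts discussed in this thread for a 20-disk aggregate. It only encodes the parity counts implied above (2 parity disks per RAID-DP group, 3 per RAID-TEC group); the function name and the layout labels are illustrative, not anything from ONTAP.

```python
# Parity disks per RAID group, as implied by the failure tolerance described above.
PARITY_DISKS = {"raid_dp": 2, "raid_tec": 3}


def data_disks(raid_type, group_sizes):
    """Return the total number of data disks across all RAID groups."""
    parity = PARITY_DISKS[raid_type]
    for size in group_sizes:
        if size <= parity:
            raise ValueError(f"a {raid_type} group of {size} disks has no data disks")
    return sum(size - parity for size in group_sizes)


# Hypothetical labels for the three 20-disk layouts mentioned in the thread.
layouts = {
    "RAID-DP 14 + 6": ("raid_dp", [14, 6]),
    "RAID-DP 10 + 10": ("raid_dp", [10, 10]),
    "RAID-TEC 1 x 20": ("raid_tec", [20]),
}

for name, (raid_type, sizes) in layouts.items():
    print(
        f"{name}: {data_disks(raid_type, sizes)} data disks, "
        f"tolerates {PARITY_DISKS[raid_type]} failed disks per group"
    )
```

Both RAID-DP layouts give 16 data disks, while the single RAID-TEC group gives 17 and tolerates one more failure in the group.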

It is also best practice to have 2 spare drives for each disk type (excluding SSDs) to ensure Maintenance Centre (MC) is in operation. MC will proactively fail a drive if errors are being reported and put it through the manufacturer's diagnostics to confirm whether it is indeed about to fail. If it passes, it is put back into the spares pool; otherwise it is failed. Without MC, the drive would be failed immediately and you would need to go through the reconstruct process, which will adversely affect performance because disk blocks need to be copied as quickly as possible. Therefore, with these large drives, I would suggest you ensure you have 2 spares to help minimise disk failures.

Bearing these points in mind, for your 36*8TB drives, I would actually aim for 2 RAID-TEC groups of 17 disks each, leaving 2 spares: either one large aggregate on one system, or 2 smaller aggregates, one per system, to maximise system resources.

Assuming you go for the one large aggregate (otherwise you will require additional spares on the partner system), I would use only the first 17 disks, then wait for the remaining disks from the second aggregate to be released before adding the second RAID group. It is best practice to add disks in complete RAID groups.
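The arithmetic behind that suggestion can be sketched as follows. This is only a back-of-the-envelope helper: `plan_layout` and its parameter names are made up for illustration, and it assumes 3 parity disks per RAID-TEC group and equally sized groups.

```python
def plan_layout(total_disks, spares, group_count, parity_per_group=3):
    """Split the non-spare disks into equal RAID groups and count data disks."""
    usable = total_disks - spares
    if usable <= 0 or usable % group_count != 0:
        raise ValueError("disks do not divide evenly into the requested RAID groups")
    group_size = usable // group_count
    if group_size <= parity_per_group:
        raise ValueError("RAID groups would contain no data disks")
    return {
        "group_size": group_size,
        "parity_disks": group_count * parity_per_group,
        "data_disks": group_count * (group_size - parity_per_group),
        "spares": spares,
    }


# 36 x 8TB drives, 2 spares, 2 RAID-TEC groups
plan = plan_layout(total_disks=36, spares=2, group_count=2)
print(plan)
```

For 36 drives this gives two groups of 17 disks, with 28 data disks, 6 parity disks and 2 spares.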

The only other thought is that, with the movement of the volumes between the aggregates, the first RAID group of 8TB drives will initially contain all your data and the second RAID group will be empty. This will not be much of a problem if you expect a fair amount of data to be written or changed, since all new blocks will be written to the new RAID group. Over time the data will then start to level out between the RAID groups. There are two ways the layout of your data can be measured:

- To view how well the data layout is optimised, use the volume reallocation measure command.

- To view how well the free space is optimised, use the storage aggregate reallocation start command.

Both are detailed in the ONTAP 9 Documentation Command Man Pages (https://docs.netapp.com/ontap-9/index.jsp). Schedules can also be set to automate the optimisation of the layout.

Otherwise your plan would make the best use of the disks, both for capacity and performance.

Hope this helps.

Thanks,

Grant.


Hi,

Please note the following limitations with respect to RAID groups:

You change the size of RAID groups on a per-aggregate basis. You cannot change the size of individual RAID groups. The following list outlines some facts about changing the RAID group size for an aggregate:

- If you increase the RAID group size, more disks or array LUNs will be added to the most recently created RAID group until it reaches the new size.

- All other existing RAID groups in that aggregate remain the same size, unless you explicitly add disks to them.

- You cannot decrease the size of already created RAID groups.

- The new size applies to all subsequently created RAID groups in that aggregate.

Ref: https://library.netapp.com/ecm/ecm_download_file/ECMP1141781 page 116

Since you cannot decrease the size of a RAID group that has already been created, I recommend you continue to use the system as is.

Storage system performance is optimized when all RAID groups are full. You can add disks in the future to grow the rg1 RAID group.

Please refer to "Considerations for sizing RAID groups for disks" on page 107 of the document linked above.
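As a rough illustration of the rules quoted above, the Python sketch below models where newly added disks land. It is a simplified model, not ONTAP behaviour verified against a system: `add_disks` is a hypothetical helper, groups are listed in creation order, and it ignores the minimum size of a new RAID group.

```python
def add_disks(groups, raid_size, new_disks):
    """Return RAID group sizes after adding disks to an aggregate.

    New disks fill the most recently created group up to raid_size;
    any remainder starts new groups. Existing groups never shrink.
    """
    groups = list(groups)  # work on a copy, keep the caller's list intact
    while new_disks > 0:
        room = raid_size - groups[-1] if groups else 0
        if room > 0:
            take = min(room, new_disks)
            groups[-1] += take  # top up the newest group first
        else:
            take = min(raid_size, new_disks)
            groups.append(take)  # newest group is full: start another
        new_disks -= take
    return groups


# The aggregate from the question: rg0 = 14 disks, rg1 = 6 disks, group size 14.
print(add_disks([14, 6], raid_size=14, new_disks=8))   # rg1 grows to 14
# Raising the group size to 16 only affects rg1; rg0 stays at 14.
print(add_disks([14, 6], raid_size=16, new_disks=10))
```

In both cases rg0 is untouched, which is why adding disks to rg1 later is the non-disruptive way to even out the two groups.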


Hi SVO,

I'm guessing, from your statement that the FAS2650 is limited to 14-disk RAID-DP groups, that you are using 6 or 8TB drives...

Why not go for a RAID-TEC aggregate with a single RAID group of 20 disks, giving you 17 data drives? This also falls in line with the best practice of avoiding any RAID group that is less than one half the size of the other RAID groups in the same aggregate.

However, as Sahana states, this is disruptive (i.e. you'll need to destroy the aggregate to reconfigure the RAID groups). All data could first be moved to the second aggregate (assuming you have space), which can be completed nondisruptively using the volume move command (https://library.netapp.com/ecm/ecm_download_file/ECMLP2496251).
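The half-size guideline mentioned above is easy to check for any layout. Here is a minimal Python sketch; `undersized_groups` is an illustrative name, and it interprets the guideline as comparing each group against the largest group in the aggregate.

```python
def undersized_groups(group_sizes):
    """Return the RAID group sizes that are less than half the largest group."""
    largest = max(group_sizes)
    return [size for size in group_sizes if size < largest / 2]


print(undersized_groups([14, 6]))   # current layout: the 6-disk group is flagged
print(undersized_groups([10, 10]))  # balanced layout: nothing flagged
print(undersized_groups([20]))      # single RAID-TEC group: nothing flagged
```

The current 14 + 6 layout is the only one of the three that breaks the guideline, since 6 is less than half of 14.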

Hope this helps.

Cheers,

Grant.


You are correct about the 8TB drives.

Here are the current drives in the NAS:

12 x 900GB, 10K drives (OS for now, 2 aggregates)

36 x 8TB, 7.2K drives (data storage, 2 aggregates)

In terms of performance, is RAID-TEC the same as RAID-DP?

I am leaning towards having one large aggregate (RAID-TEC) instead of two aggregates, for more spindles. I have moved all the volumes to the 2nd aggregate. Here is my plan.

1) already moved volumes to 2nd aggregate (aggr2)

2) wipe out 1st aggregate (aggr1)

3) create new aggregate (aggr1) with raid-tec and adjust the disk to 29 (even though only 22 available at this time).

4) move volumes back to aggr1

5) wipe out 2nd aggregate (aggr2)

6) add more disks to 1st aggregate (aggr1)
