Tech ONTAP Articles

Case Study: The Suncorp Private Cloud

Tech OnTap has devoted a lot of attention recently to cloud computing and related technologies, including virtualization, secure multi-tenancy, the best ways to build and protect shared infrastructure, and much more. This article presents a case study in which all these elements have been brought together into a fully functional, but still rapidly evolving, private cloud.

Just two years ago, only 7% of Suncorp's business was conducted over the Internet; today that figure is over 42%. What enabled us to make this change was a massive IT transformation that has dramatically improved our ability to deploy IT infrastructure and adapt to rapidly changing business needs. Our wholesale effort to standardize IT infrastructure (replacing 80% of existing storage with NetApp®, virtualizing the majority of our applications, and offering streamlined, cloud-based services) has resulted in substantial IT efficiency improvements:

While these achievements are significant, just as important for the company going forward is that we've gained tremendous flexibility and fostered a culture of innovation that reaches beyond IT. In this article, we'll highlight some of the key elements of our private cloud that have allowed us to achieve these savings and improve business agility. Because every IT infrastructure is essentially a work in progress, we hope to give you a candid look at not only where we are today, but also where we're headed in the future.

Standardized Infrastructure

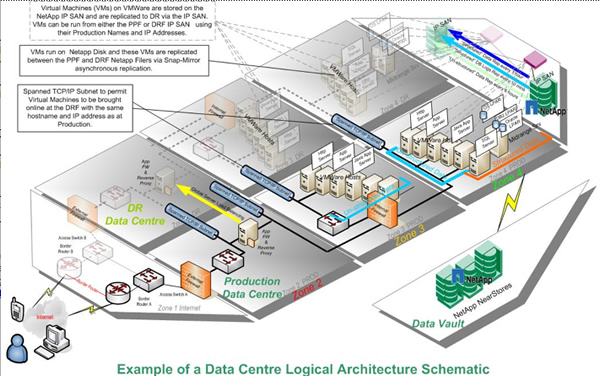

Like many financial services companies, we have grown significantly through mergers and acquisitions. As a result, when we decided to transform four years ago, we were burdened with an IT environment of siloed applications and a vast variety of server, network, and storage hardware. The first step was to begin the ongoing process of standardizing and simplifying our IT infrastructure as much as possible, which to a large degree meant replacing most of our existing infrastructure. Key elements of our current infrastructure, including secure multi-tenancy, are illustrated in Figure 1. (Secure multi-tenancy is described later.)

Figure 1) Key elements of the physical infrastructure, including elements that contribute to secure multi-tenancy.

Servers

Our server environment now consists of two major tiers: an x86 tier running on IBM blade servers and a midrange tier running AIX. Overall, we are 85% virtualized, with a portfolio of 1,500 applications. The x86 environment is 95% virtualized, using 350 VMware® ESX hosts and 4,500 virtual machines. The AIX environment is also highly virtualized, using 80 IBM logical partitions (LPARs).

Networks

All our networks are built using the latest Cisco Nexus® 7000 and 5000 series data center switches. We run our own Multiprotocol Label Switching (MPLS) network between data centers, which allows VLANs to span data centers. This simplifies restarting virtual machines (VMs) at our DR site. Within each data center we use 10GbE, which eliminates the need for Fibre Channel cabling and switch fabric, simplifies our cabling infrastructure, lets us share network resources across both platforms, and therefore saves money. We're currently simplifying our cabling even further by consolidating our LAN and IP SAN traffic.

Storage

At least 80% of our deployed storage is now NetApp. We began by rolling out FAS6070 and FAS6080 systems to support our core infrastructure, and we currently have eight FAS6000 series systems in our production and DR facilities. Within the last 12 months, we've also started deploying the FAS3100 series. In total we have 49 NetApp storage controllers with 3.7PB of usable storage capacity. These include a number of storage systems dedicated as file servers, plus storage systems at hub sites around the country. All our FAS3100 systems use 512GB Flash Cache modules, and because of the performance gains we observed, we're retrofitting our FAS6000 systems as well. Flash Cache provides intelligent caching that automatically adapts to changes in workload to optimize performance.
This will help us support the virtual desktop infrastructure (VDI) environment that we are currently deploying (discussed later), and it also significantly reduces latency for applications with structured data. For instance, I/O-intensive Oracle® applications have seen read latency drop from 11 to 12 milliseconds down to below 2 milliseconds with Flash Cache enabled.

About 350TB of storage on FAS6000 systems is dedicated to VMware ESX. Deploying our entire VMware environment on NFS was a big win for us in terms of speed of deployment and flexibility; we are one of the largest VMware deployments on NFS in the world. The ability to preprovision very large volumes for VMware made the transition to the new environment much simpler. In accordance with NetApp recommendations, we use the NetApp multiprotocol capability to store most application data on separate iSCSI LUNs on the same storage. As we roll out SnapManager® for Virtual Infrastructure (SMVI), we anticipate being able to move to an all-NFS environment by writing that data into VMDK files; updates to SnapDrive® make this strategy feasible. Our IBM AIX LPAR environment also runs on NFS, which makes it one of the largest such installations in the world.

Thin Provisioning, FlexClone, and Storage Efficiency

Initially, we thick provisioned all our storage volumes because of concerns about manageability, but 18 months ago we switched to NetApp thin provisioning across our entire NetApp storage environment. Thin provisioning has allowed us to recover about 1.9PB of storage. That's a huge saving by any measure: in essence, that's 1.9PB that we haven't had to purchase, rack, power, and cool over the last 18 months. NetApp Operations Manager has enabled us to put alerts in place at both the volume and aggregate levels. These alerts are reported up to the Enterprise Management tool in our National Operations Centre.
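
The following is a minimal Python sketch of the thin-provisioning accounting behind these numbers. It uses a simplified model: each volume has a provisioned size (what the host sees) and a used size (data actually written), and all volumes in an aggregate share its physical capacity. With thick provisioning, every volume reserves its full size up front. With thin provisioning, only written data consumes space, so the capacity "recovered" is the difference between the two. The volume names and alert limits (85% per volume, 80% per aggregate) are hypothetical and are not Operations Manager settings. The 70% new-volume cutoff is the policy described in the next paragraph.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    provisioned_tb: float  # size promised to the host or application
    used_tb: float         # data actually written to the volume


@dataclass
class Aggregate:
    name: str
    physical_tb: float  # usable physical capacity shared by all volumes
    volumes: list[Volume] = field(default_factory=list)

    def used_tb(self) -> float:
        # Thin provisioning: only written data consumes physical space.
        return sum(v.used_tb for v in self.volumes)

    def thick_reserved_tb(self) -> float:
        # Thick provisioning: each volume reserves its full size up front.
        return sum(v.provisioned_tb for v in self.volumes)

    def recovered_tb(self) -> float:
        # Space a thick layout would have reserved but that holds no data.
        return self.thick_reserved_tb() - self.used_tb()

    def used_fraction(self) -> float:
        return self.used_tb() / self.physical_tb

    def accepts_new_volumes(self, cutoff: float = 0.70) -> bool:
        # Past the cutoff, remaining space is kept for growth of existing volumes.
        return self.used_fraction() < cutoff


def alerts(aggr: Aggregate, vol_limit: float = 0.85, aggr_limit: float = 0.80) -> list[str]:
    """Return warnings at the volume and aggregate level (limits are hypothetical)."""
    out = []
    for v in aggr.volumes:
        fill = v.used_tb / v.provisioned_tb
        if fill >= vol_limit:
            out.append(f"volume {v.name} at {fill:.0%}")
    if aggr.used_fraction() >= aggr_limit:
        out.append(f"aggregate {aggr.name} at {aggr.used_fraction():.0%}")
    return out


# Hypothetical example: 130TB promised to hosts on 100TB of physical disk.
aggr = Aggregate("aggr1", physical_tb=100.0, volumes=[
    Volume("vmware_nfs01", provisioned_tb=60.0, used_tb=30.0),
    Volume("oracle_lun01", provisioned_tb=50.0, used_tb=25.0),
    Volume("sql_lun01", provisioned_tb=20.0, used_tb=18.0),
])
print(aggr.recovered_tb())         # 57.0
print(aggr.accepts_new_volumes())  # False: 73% used, past the 70% cutoff
print(alerts(aggr))                # ['volume sql_lun01 at 90%']
```

In this toy example, the aggregate is overcommitted (130TB promised on 100TB). That is only safe because alerts fire well before any volume or the aggregate fills up, and new volumes stop landing on the aggregate once it passes the cutoff.
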
We've set the critical levels lower than we would for a thick-provisioned environment. When an aggregate reaches approximately 70% of capacity, we stop adding new volumes to it, leaving the remainder for organic growth of existing volumes. We perform capacity management monthly to make sure that enough storage is preprovisioned on the floor to accommodate growth.

NetApp FlexClone® technology has enabled us to rapidly provision test environments without consuming a lot of additional storage. FlexClone lets us create virtual clones of existing volumes for testing in a matter of seconds. These clones consume additional storage capacity only as changes are made, and when testing is done, we simply release the clones and immediately recover whatever incremental storage space was used.

We're also implementing NetApp deduplication across our entire storage environment and so far have recovered about 120TB of capacity. We expect this number to grow much larger, particularly when we deduplicate our VMware environment. We intend to deduplicate data by default in the VDI environment we are currently rolling out, and we expect deduplication to reduce our storage costs by a further 20% to 30%.

Secure Multi-Tenancy

At Suncorp we've implemented secure multi-tenancy (SMT) at the platform level (Oracle, SQL Server®, MySQL, and so on) rather than at the level of individual applications. We place platform-specific volumes and LUNs into different logical security zones and, using the capabilities of NetApp MultiStore®, implement secure multi-tenancy within those zones as needed to meet our objectives. Key elements of this approach are illustrated in Figure 1. We'll add further SMT capabilities (as described in a recent Tech OnTap® article) as we roll out the Cisco Nexus 1000V Distributed Virtual Switch in our VMware environment over the next six months.

We use a MultiStore virtual storage system (vFiler® unit) for each platform in each zone as needed, with an equivalent vFiler unit in the same zone at our DR site. NetApp SnapMirror® replicates data between the primary data center and the DR site. (We'll explain more about data protection and DR in the following section.) Major applications on both x86 and AIX run in this environment, including VMware, SQL Server, Oracle, SAS, and our Guidewire ClaimCenter claims management system.

For us, SMT provides the ability to roll out multiple applications on the same storage without worrying about security, and it also makes management much simpler. Without the vFiler constructs, we would have to carefully document the location of each volume and LUN. vFiler units logically organize all of that for us, simplifying and accelerating new deployments while letting us apply platform-specific policies. Applying policies will become even more streamlined as we roll out NetApp Protection Manager and NetApp Provisioning Manager in coming months.

Storage Service Catalog

We've always been firm believers in the need for a standardized service catalog as a prerequisite for effective shared infrastructure, virtualization, and cloud deployment. Our previous-generation storage service catalog, however, didn't go far enough in helping us standardize offerings. Our current service catalog contains just four basic offerings:

In each category we offer a bronze, silver, and gold level of service. For example, for our DR service we offer:

These levels are achieved using NetApp Volume SnapMirror with appropriate settings. For instance, for structured data we typically want the gold level of service, which means that we need to replicate logs every 5 minutes to achieve the 10-minute RPO. Our backup service offers NetApp SnapVault® disk-based backup to a separate data vault location for operational backup and recovery, with subcategories that specify performance and retention. All our archiving is currently done to tape to satisfy stringent regulatory requirements. This service catalog works well for us, but we will probably continue to streamline and simplify it, aiming for as few services as possible without jeopardizing operations.

Cloud Services

The elements described in the preceding sections, including a standardized infrastructure with a secure multi-tenancy architecture and a well-defined storage service catalog, provide a baseline from which a private cloud can be constructed. In general, we define eight key elements that serve as the foundation for our cloud environment: automation, standardization of services, self-service, on-demand provisioning, virtualization, location-independent resource pooling, rapid elasticity, and security.

Before we implemented our cloud, we rolled out our service-based operating model, which included a service catalog and service offerings for each infrastructure platform, similar to the storage service catalog described in the previous section. An important part of this was simply continuing to drive standardization across all platforms and service offerings to make them easier to automate.

We didn't buy an orchestration product to implement our private cloud; instead, we developed our own orchestration model, as illustrated in Figure 2. We created Web services robots that serve as wrappers around best-in-class provisioning products from our major partners, including VMware, Cisco, NetApp, IBM, Red Hat, and Microsoft. These robots connect to a common Web services bus. Our orchestration layer sits logically above the Web services bus, and our self-service portal connects through it to the various provisioning services it requires.
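
The layering described here has four parts: robots wrapping vendor tools, a common bus, an orchestration layer above the bus, and a portal on top. It can be sketched in a few lines of Python. This is a hypothetical illustration, not Suncorp's implementation. The article does not describe the bus protocol or the robot interfaces, so `ServiceBus`, the `*_robot` functions, `Orchestrator.provision_vm`, and `portal_request` are invented names. The robots also return dictionaries rather than calling VMware, Cisco, or NetApp tools.

```python
from __future__ import annotations

from typing import Callable

# A "robot" takes request parameters and returns a result record.
Robot = Callable[[dict], dict]


class ServiceBus:
    """Hypothetical common bus: routes a named service call to its registered robot."""

    def __init__(self) -> None:
        self._robots: dict[str, Robot] = {}

    def register(self, service: str, robot: Robot) -> None:
        self._robots[service] = robot

    def call(self, service: str, params: dict) -> dict:
        if service not in self._robots:
            raise KeyError(f"no robot registered for {service!r}")
        return self._robots[service](params)


# Hypothetical robots. A real one would wrap a vendor provisioning tool;
# these only echo what they would have created.
def network_robot(params: dict) -> dict:
    return {"service": "network", "vlan": params["vlan"]}


def storage_robot(params: dict) -> dict:
    return {"service": "storage", "volume": f"{params['name']}_vol",
            "size_gb": params["size_gb"]}


def vm_robot(params: dict) -> dict:
    return {"service": "vm", "vm": params["name"], "os": params["os"]}


class Orchestrator:
    """Sits above the bus; expands one portal request into ordered bus calls."""

    def __init__(self, bus: ServiceBus) -> None:
        self.bus = bus

    def provision_vm(self, name: str, os_name: str, size_gb: int, vlan: int) -> list[dict]:
        steps = [
            ("network", {"vlan": vlan}),
            ("storage", {"name": name, "size_gb": size_gb}),
            ("vm", {"name": name, "os": os_name}),
        ]
        return [self.bus.call(service, params) for service, params in steps]


def portal_request(orch: Orchestrator, form: dict) -> list[dict]:
    """Self-service portal: talks only to the orchestration layer, never to robots."""
    return orch.provision_vm(form["name"], form["os"], form["size_gb"], form["vlan"])


bus = ServiceBus()
bus.register("network", network_robot)
bus.register("storage", storage_robot)
bus.register("vm", vm_robot)

results = portal_request(Orchestrator(bus), {
    "name": "app01", "os": "Red Hat Linux", "size_gb": 100, "vlan": 210,
})
for r in results:
    print(r)
```

In this sketch the portal never calls a vendor tool directly. Swapping one provisioning product for another only means registering a different robot on the bus, while the orchestration steps and the portal stay unchanged.
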

Figure 2) Logical architecture of the Suncorp cloud.

Users of the portal can provision into all of our environments at the push of a button. They can obtain a pool of resources, easily provision and deprovision within that pool, or request additional resources through the cloud interface. Current offerings include infrastructure as a service (IaaS), with VMs provisioned with Windows® 2008 or Red Hat Linux®, and platform as a service (PaaS), with a variety of platforms including Oracle, Microsoft® SQL Server, MySQL, and JBoss.

We're also getting ready to roll out desktop as a service (DaaS). Our goal is to extend the life of existing PCs by converting them to use VDI. Suncorp is working to create smart work environments with an emphasis on the ability to hotel and hot desk inside the organization, and VDI will support these capabilities. Our VDI offering is currently provisioned outside the cloud, but we're working to include it. Users will be able to request virtual desktops for both production and testing use. We'll use the rapid cloning capability within the NetApp Virtual Storage Console (VSC) to deliver this capability, using an approach similar to that described in a previous article. Flash Cache modules installed in our NetApp storage will allow our infrastructure to absorb the boot and login storms that can occur in VDI environments.

Our ultimate goal is to offer architecture as a service (AaaS), in which we can provision simple, standardized architectures where all server components, storage, networking, HA, DR, backup and recovery, and archival are standardized. This will make it easy for cloud users to access resources while making sure the cloud always delivers the necessary levels of data protection and regulatory compliance.

Conclusion

Although this discussion has been broad rather than deep, we hope it has provided a good overview of how Suncorp is approaching cloud deployment and making the transition to the new paradigm. The changes we've already made allow us to respond faster to business changes and market demands. Costs have decreased, and we're delivering three times as many business capabilities as before. You can learn more about Suncorp and the business benefits we've achieved in a recent video and a success story.

Got opinions about private cloud? Ask questions, exchange ideas, and share your thoughts online in NetApp Communities.

About Suncorp

The Suncorp Group is a leading, diversified financial services company (one of Australia's top-25 listed companies) with over 9 million customers and approximately 16,000 employees in Australia and New Zealand. The group provides insurance, banking, and wealth management (including superannuation) products and services, predominantly to retail customers and small to medium-sized businesses. A variety of NetApp resources highlight various aspects of the Suncorp IT transformation.


- Find more articles tagged with:

- cloud

- cloud_based_services

- cloud_orchestration

- consolidation

- data_protection

- dedupe

- Deduplication

- efficiency

- flexvol

- infrastructure_standardization

- it_efficiency

- march_2011

- netapp

- private_cloud

- secure_multi-tenancy

- shared_it_infrastructure

- storage_catalogs

- storage_efficiency

- storage_utilization

- suncorp

- tech_ontap

- techontap

- thin_provisioning

- tot

- tot_newsletter

- vdi

Ross and Jason,

Great stuff! I understand you did not go deep into the details to keep this article manageable. But I have one deeper question: do you employ any quotas on the storage consumed by individuals, applications, or VMs in your cloud implementation?

Anthony, I'm just guessing here, but since they are thin provisioned, I'd guess that at most they're using soft quotas. Putting hard quotas in would be a bit like using a volume guarantee, which is the opposite of thin provisioning.

Mind you, it could be interesting to use hard quotas to control thin provisioning; I haven't tried it, though.