Active IQ Unified Manager Discussions



HOW-TO: consume parameters from OCUM on a PowerShell script and generate a custom email/ticket


Hi all.

A few weeks back someone asked me in a PM for help customizing the OnCommand Unified Manager (OCUM) alerts. My initial response was "you are looking for trouble", as I'd had a bad experience trying something like that before. The reason it is not straightforward is the way OCUM passes parameters to the script: instead of sending them as named parameters with values, it just throws them onto the command line in a somewhat structured way and expects you to pick each one up as $args[x] at the position where you expect the relevant value (you can see examples in TR-4585).

I essentially created two PowerShell lines, which I'm publishing below; I hope they make consuming the parameters from OCUM easier. They also process parameters coming from EMS messages, if you have custom ones configured.

```powershell
# Create a hashtable: get rid of the characters that break the script ("[", "]" and "@"), then add every word that starts with " -" as a hashtable key, with the input following it (up to the next " -") as its value.
$parms=@{};" "+($args -replace "\]|\[|@"," " -join " ") -split " -"|?{$_}|%{$t=$_ -split " ",2;$parms.($t[0])=$t[1]}

# Walk through $parms.eventArgs: each word before a "=" becomes an eventArgs_* key, and the words after it (up to the next word that contains "=") become its value.
($parms.eventArgs -split " "|%{if($_ -match "="){".cut."}$_}) -join " " -split "\.cut\. "|?{$_}|%{$t=$_ -split "=",2;$parms.("eventArgs_"+$t[0])=$t[1]}
```
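To check what the two lines produce without waiting for a real alert, you can wrap the same parsing logic in a function and feed it a made-up argument list. This is only a sketch: the function name ConvertFrom-OcumArgs and the sample arguments are hypothetical, chosen to mimic the "-name value" layout described above; the real parameter names and order are the ones documented in TR-4585. The function escapes the dots in the ".cut." marker (otherwise they are regex wildcards), splits each eventArgs entry on its first "=" only, skips whitespace-only chunks and trims trailing spaces from values.

```powershell
# Hypothetical helper: the same parsing logic as the two one-liners, wrapped in a function
# so it can be tested with any argument array instead of the script's real $args.
function ConvertFrom-OcumArgs {
    param([string[]]$ArgList)

    $parms = @{}
    # Replace "[", "]" and "@" with spaces, join all arguments into one string, split on " -".
    # Whitespace-only chunks are skipped; each chunk is "<name> <value...>".
    ((' ' + (($ArgList -replace '\]|\[|@', ' ') -join ' ')) -split ' -') |
        Where-Object { $_.Trim() } |
        ForEach-Object {
            $t = $_ -split ' ', 2          # parameter name, then everything after it
            $parms[$t[0]] = $t[1]
        }

    if ($parms['eventArgs']) {
        # Put a ".cut." marker before every word containing "=", then split on the marker
        # so that each chunk holds one "key=value ..." pair.
        ((($parms['eventArgs'] -split ' ' | ForEach-Object { if ($_ -match '=') { '.cut.' }; $_ }) -join ' ') -split '\.cut\. ') |
            Where-Object { $_.Trim() } |
            ForEach-Object {
                $t = $_ -split '=', 2      # split on the first "=" only
                $value = $t[1]
                if ($value) { $value = $value.Trim() }
                $parms['eventArgs_' + $t[0].Trim()] = $value
            }
    }
    return $parms
}

# Example with made-up arguments:
$sample = @('-eventID', '42', '-eventName', 'Qtree', 'Soft', 'Limit', 'Breached',
            '-eventState', 'NEW', '-eventArgs', 'volume=Vol1', 'qtree=7')
$p = ConvertFrom-OcumArgs -ArgList $sample
$p.GetEnumerator() | Sort-Object Key | ForEach-Object { '{0} = {1}' -f $_.Key, $_.Value }
```

Inside the alert script itself you would call it as `$parms = ConvertFrom-OcumArgs -ArgList $args`.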

In the attachment you can see a bit more code as an example of how to use the $parms hashtable afterwards to do the following:

- Output $parms to a file (useful for deployment and debugging, as the parameters are not always consistent, especially "EventArgs").

- Acknowledge to OCUM that the alert has been processed by the script.

- Pull quota information from the OCUM DB, since I find the information in one specific alert (a quota soft-limit breach generated by a custom EMS rule) lacking.

- If the event is new, generate custom emails: one for the event mentioned above, and one generic.
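The attachment itself isn't reproduced here, but the first and last items can be sketched roughly as follows. Both function names are hypothetical, and the key "eventState" with the value "NEW" is an assumption about what OCUM sends; a dump from your own system is the way to confirm the actual key names. The OCUM acknowledgement and DB query steps are not sketched, since their interfaces aren't shown in this thread.

```powershell
# Hypothetical helper: dump every parsed parameter to a text file, one "name = value" per line,
# sorted by name so that dumps from different alerts are easy to compare.
function Export-OcumParms {
    param([hashtable]$Parms, [string]$Path)
    $Parms.GetEnumerator() |
        Sort-Object Key |
        ForEach-Object { '{0} = {1}' -f $_.Key, $_.Value } |
        Set-Content -Path $Path
}

# Hypothetical check: act only on events reported as new.
# ASSUMPTION: the state arrives as $parms['eventState'] with the value "NEW".
function Test-OcumEventIsNew {
    param([hashtable]$Parms)
    return ($Parms['eventState'] -eq 'NEW')
}

# Example usage inside the alert script:
# Export-OcumParms -Parms $parms -Path 'C:\Temp\ocum_parms.txt'
# if (Test-OcumEventIsNew -Parms $parms) { <# send the custom email #> }
```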

To implement it as-is, you need to edit the variables $Emailto and $SMTPServer, upload the script to OCUM at https://server_name/admin/scripts, and assign it to an alert at https://server_name/#/alerting.
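As an illustration of where $Emailto and $SMTPServer come in, here is a minimal sketch that builds the parameters for the built-in Send-MailMessage cmdlet and passes them by splatting. The values, the sender variable $EmailFrom, the subject format and the function name New-OcumMailParams are all hypothetical placeholders, not taken from the attached script. Send-MailMessage is available in Windows PowerShell; PowerShell 7 still ships it but marks it obsolete.

```powershell
# Settings to edit for your environment (variable names follow the post; values are placeholders).
$Emailto    = 'storage-team@example.com'
$SMTPServer = 'smtp.example.com'
$EmailFrom  = 'ocum-alerts@example.com'   # hypothetical sender, not mentioned in the post

# Hypothetical helper: build a hashtable of Send-MailMessage parameters from the parsed values.
function New-OcumMailParams {
    param([hashtable]$Parms, [string]$To, [string]$From, [string]$SmtpServer)
    @{
        To         = $To
        From       = $From
        SmtpServer = $SmtpServer
        Subject    = "OCUM alert: $($Parms['eventName'])"
        # Body: every parsed parameter as "name: value", one per line, sorted by name.
        Body       = (($Parms.GetEnumerator() | Sort-Object Key |
                       ForEach-Object { '{0}: {1}' -f $_.Key, $_.Value }) -join "`r`n")
    }
}

# Usage in the alert script (commented out so the snippet runs without a mail server):
# $mail = New-OcumMailParams -Parms $parms -To $Emailto -From $EmailFrom -SmtpServer $SMTPServer
# Send-MailMessage @mail
```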

Note that the very large region "Pulling Quota information from the OCUM DB" is not necessary for the rest of the functionality; it simply won't run if you don't configure this EMS rule. If you don't need it, feel free to delete it.

Gidi


Hello Gidon,

Does this work with the OCUM vApp appliance as well? If yes, how do I implement your script to push customized emails for user quota alerts?

Thanks in advance.

Pranjal Agrawal


I'm afraid it won't, as the vApp is Debian-based and doesn't support PowerShell. Here is a pretty helpful thread with examples that can work for the vApp:

https://community.netapp.com/t5/OnCommand-Storage-Management-Software-Discussions/OCUM-7-2-Powershell-Script-for-Quota-Breach-Custom-Email-to-Specific...


Hello Gidon,

Is there an alternative way to alert quota users about a quota breach that includes the mount point and the filer name?

Regards

Pranjal Agrawal


Hi.

For user- or group-type quotas, OCUM has built-in, configurable email notifications.

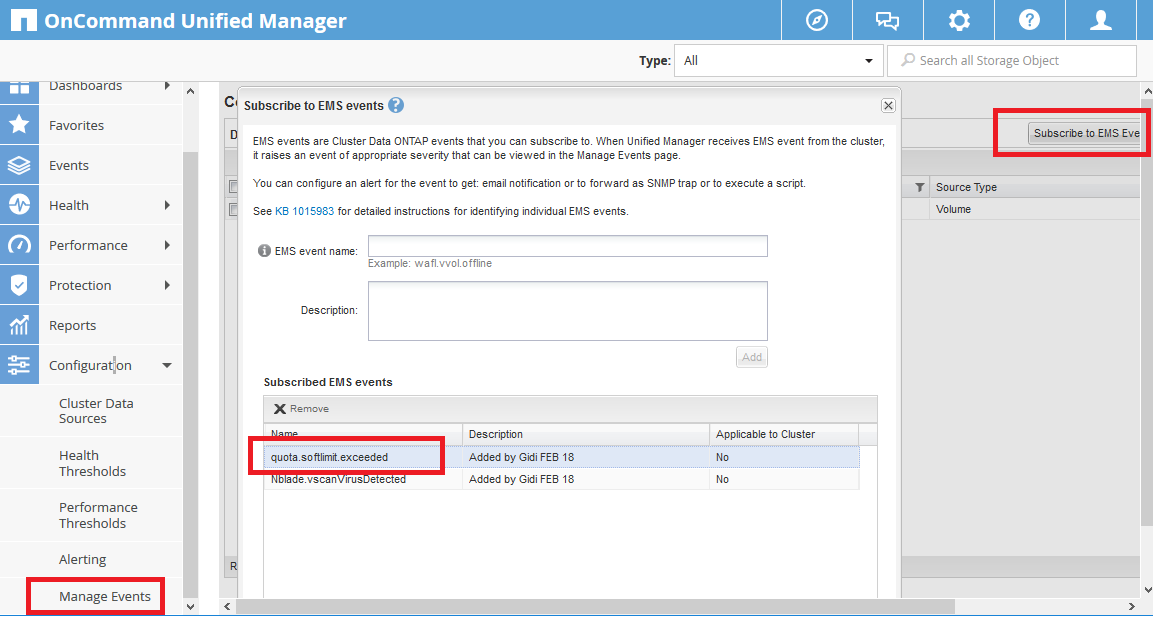

But for qtree-type quotas it doesn't seem to work. OCUM also seems unaware of the first quota breach (the threshold) and doesn't generate an event for it (it does for the soft and hard limits), so I had to create an EMS rule in OCUM (see line 32 in the script).

The next problem, and I guess that's what you're referring to, is that the threshold event generated from EMS looks like this:

Trigger Condition - quota.softlimit.exceeded: Threshold exceeded for tree 7 on volume Vol1@vserver:43243242-34324-14444-92222a-87a987a0a808e0e This message occurs when a user exceeds the soft quota limit (file and/or block limit).

That is not as informative as the built-in event that exists for the soft and hard limits:

Source - SVM1:/VOL1/Tree1 Trigger Condition - The soft limit set at 675.00 GB is breached. 677.35 GB (100.35% of soft limit) is used. The hard limit is set at 750.00 GB.
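Because the built-in event already names the SVM, volume and qtree, those pieces (for example, to put the filer name in a custom email) can be cut out of the message text with a couple of regular expressions. This is a sketch that assumes the message looks exactly like the example above (figures in GB); the function name Get-QuotaEventDetails is hypothetical, and other OCUM versions or units may format the text differently. It also recomputes the percentage from the two GB figures as a sanity check.

```powershell
# Hypothetical helper: split "Source - SVM:/volume/qtree" into its parts and recompute the
# percentage of the soft limit in use. ASSUMPTION: the message format matches the example (GB).
function Get-QuotaEventDetails {
    param([string]$Message)

    $details = @{}
    if ($Message -match 'Source - (?<svm>[^:\s]+):/(?<volume>[^/\s]+)/(?<qtree>\S+)') {
        $details.Svm    = $Matches['svm']
        $details.Volume = $Matches['volume']
        $details.Qtree  = $Matches['qtree']
    }
    if ($Message -match 'soft limit set at (?<soft>[\d.]+) GB.*?(?<used>[\d.]+) GB \(') {
        $soft = [double]$Matches['soft']
        $used = [double]$Matches['used']
        $details.SoftLimitGB   = $soft
        $details.UsedGB        = $used
        $details.PercentOfSoft = [math]::Round($used / $soft * 100, 2)
    }
    return $details
}

$msg = 'Source - SVM1:/VOL1/Tree1 Trigger Condition - The soft limit set at 675.00 GB is breached. 677.35 GB (100.35% of soft limit) is used. The hard limit is set at 750.00 GB.'
Get-QuotaEventDetails -Message $msg
```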

The SQL query in my script (line 79) should work from any scripting language and will give you what you asked for, but you will need to figure out how to extract the tree number and vserver ID from the long string OCUM passes to the script. Every language lets you manipulate the string the way I did in PowerShell, but it will take some trial and error.
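For the EMS threshold event, the tree number, volume name and vserver ID can be picked out of the "Threshold exceeded for tree ... on volume ...@vserver:..." text with one regular expression. This is a sketch based only on the sample message quoted above; the function name Get-EmsQuotaIds is hypothetical. It accepts either "@" or a space before "vserver:", because the argument-parsing one-liners replace "@" with a space. The resulting IDs would then feed the SQL query, which is not reproduced here.

```powershell
# Hypothetical helper: extract qtree ID, volume name and vserver ID from the EMS
# "quota.softlimit.exceeded" text. Returns $null if the text doesn't match.
function Get-EmsQuotaIds {
    param([string]$Message)
    if ($Message -match 'tree (?<tree>\d+) on volume (?<volume>[^@\s]+)[@\s]vserver:(?<vserverId>[\w-]+)') {
        return [pscustomobject]@{
            TreeId    = [int]$Matches['tree']
            Volume    = $Matches['volume']
            VserverId = $Matches['vserverId']
        }
    }
    return $null
}

$ems = 'Trigger Condition - quota.softlimit.exceeded: Threshold exceeded for tree 7 on volume Vol1@vserver:43243242-34324-14444-92222a-87a987a0a808e0e This message occurs when a user exceeds the soft quota limit (file and/or block limit).'
Get-EmsQuotaIds -Message $ems
```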

-----

If you can, I would drop the "threshold" object and stick with the soft and hard limits only, as the whole process I described above is error-prone. I would also avoid using qtrees altogether.

Letting off a bit of steam:

I'm very disappointed by how little integration NetApp did for the "qtree" object in OCUM with cDOT. I've passed that feedback to NetApp before (no growth reporting, no alert on threshold, and quota user email rules that are not flexible enough).

I ended up building all of that myself (plus an internal capacity-reporting table in SQL for qtrees) because, when we moved to cDOT, it looked like a good, scalable idea to create large volumes as buckets, each with a single policy/tier for performance, efficiency, snapshot schedule, SnapMirror, SnapVault, etc., and to put the different workloads in different qtrees placed in the different buckets. That gave us a lot of "standardization" benefits, and it also "solved" some performance issues we had in 7-Mode with hundreds of frequently updated SnapMirror/SnapVault relationships.

But looking now at the operational pros and cons, I would have separated the workloads into different volumes and enforced the standardization with scripts and workflows.