Cloud Volumes ONTAP

- Home

- :

- NetApp Data Services

- :

- Cloud Volumes ONTAP

- :

- volume move in CVO 9.7P5 between tiered aggregates

Cloud Volumes ONTAP

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

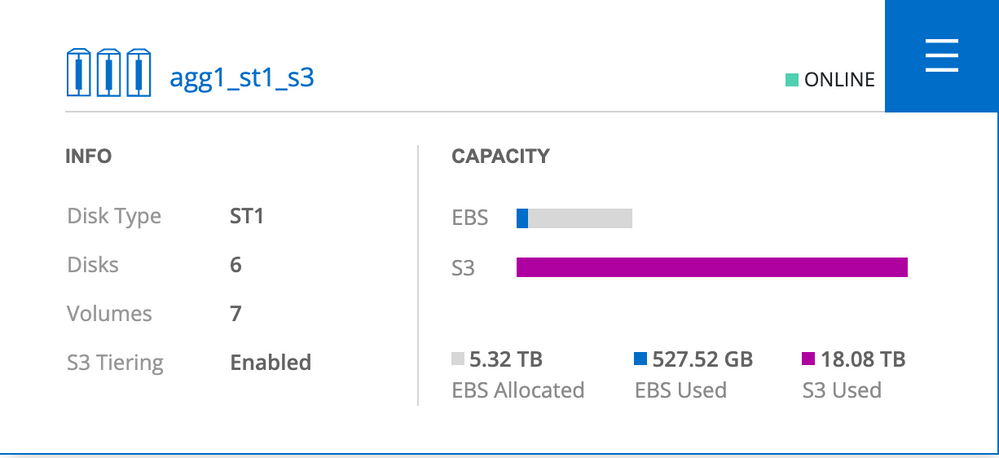

I have a volume that I want to move from one tiered aggregate to another, just created, tiered aggregate. The volume's footprint looks like this:

Total Footprint: 5.45TB

Total Footprint Percent:

Containing Aggregate Size: 5.32TB

Name for bin0: Performance Tier

Volume Footprint for bin0: 143.8GB

Volume Footprint bin0 Percent: 3%

Name for bin1: S3Bucket

Volume Footprint for bin1: 5.27TB

Volume Footprint bin1 Percent: 97%

I was under the impression that I could move this volume from one tiered aggregate to another, and the only thing that would move would be the 143.8GB in the Performance Tier, but that is not what is happening. The volume move is moving the entire volume. Shouldn't the move just be copying the 143.8GB in bin0?

--Carl
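
As a quick sanity check on the footprint figures above, the per-tier percentages can be recomputed from the two bin sizes. This is only a sketch: it assumes ONTAP reports sizes in binary units (1TB = 1024GB), and the helper names `parse_size` and `tier_shares` are made up for illustration.

```python
# Binary multipliers are an assumption about how the sizes are reported.
UNITS = {"KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def parse_size(text):
    """Convert a size such as '143.8GB' into bytes."""
    number, unit = text[:-2], text[-2:].upper()
    return float(number) * UNITS[unit]


def tier_shares(bin0, bin1):
    """Return the rounded percentage of the data held in each tier."""
    perf = parse_size(bin0)
    cloud = parse_size(bin1)
    total = perf + cloud
    return round(100 * perf / total), round(100 * cloud / total)


# Values copied from the footprint output in the post.
perf_pct, cloud_pct = tier_shares("143.8GB", "5.27TB")
print(f"performance tier: {perf_pct}%, cloud tier: {cloud_pct}%")
```

If only the performance tier were copied, the move would transfer roughly the bin0 amount rather than the total footprint.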

Hi Carl!

Unless a tiering policy of "all" is specified in the vol move command, the data moves into the local (performance) tier first and only goes to the S3 tier after it cools per the policy. So my understanding is that you should move the volume with the "all" policy, then change it back to "auto" afterwards to re-enable re-warming and gradual cooling of new data: https://docs.netapp.com/us-en/occm/concept_data_tiering.html#volume-tiering-policies

Please let us know how it goes!
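
The two-step approach suggested above (move with "all", then switch back to "auto") might look like the following. This is a sketch only: the helper `tiered_move_commands` is hypothetical and just builds command strings, the names are taken from later in this thread, and the exact options should be checked against the CLI reference for your ONTAP release.

```python
def tiered_move_commands(vserver, volume, dest_aggr, final_policy="auto"):
    """Build the two ONTAP CLI commands for a move that keeps data tiered.

    Hypothetical helper: it only formats strings and does not talk to a cluster.
    """
    # Step 1: start the move with the "all" tiering policy.
    move = (
        f"volume move start -vserver {vserver} -volume {volume} "
        f"-destination-aggregate {dest_aggr} -tiering-policy all"
    )
    # Step 2: once the move has completed, restore the normal policy.
    restore = (
        f"volume modify -vserver {vserver} -volume {volume} "
        f"-tiering-policy {final_policy}"
    )
    return [move, restore]


for command in tiered_move_commands("awssvm1", "erpauxdb1_u02", "agg1_gp2_s3"):
    print(command)
```

The second command should only be run after the move has finished; otherwise the policy change could take effect while data is still being transferred.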

Unfortunately, this still doesn't appear to be working. I'm testing a move of another volume between the same source and destination aggregates using the -tiering-policy all option, and it still appears to be moving all of the data:

*******************

volume move show -vserver awssvm1 -volume erpauxdb1_u02

Vserver Name: awssvm1

Volume Name: erpauxdb1_u02

Actual Completion Time: -

Bytes Remaining: 8.30TB

Destination Aggregate: agg1_gp2_s3

Detailed Status: Transferring data: 246.0GB sent.

Estimated Time of Completion: Mon Jul 20 10:38:02 2020

Managing Node: awsclu1-01

Percentage Complete: 2%

Move Phase: replicating

Estimated Remaining Duration: 00:17:32

Replication Throughput: 8.07GB/s

Duration of Move: 01:27:08

Source Aggregate: agg1_st1_s3

Start Time of Move: Mon Jul 20 08:53:31 2020

Move State: healthy

Is Source Volume Encrypted: false

Encryption Key ID of Source Volume:

Is Destination Volume Encrypted: false

Encryption Key ID of Destination Volume:

*******************

From volume show-footprint:

Total Footprint: 8.10TB

Total Footprint Percent:

Containing Aggregate Size: 5.32TB

Name for bin0: Performance Tier

Volume Footprint for bin0: 170.2GB

Volume Footprint bin0 Percent: 2%

Name for bin1: S3Bucket

Volume Footprint for bin1: 7.88TB

Volume Footprint bin1 Percent: 98%
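
One way to confirm the symptom from the two outputs above is to compare the amount already sent with the performance-tier footprint. The following is a rough sketch, assuming the `Key: Value` layout shown in this post and binary size units; the function names are illustrative, not part of any NetApp tool.

```python
import re

UNITS = {"KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def parse_size(text):
    """Convert a size such as '246.0GB' into bytes (binary units assumed)."""
    match = re.fullmatch(r"([\d.]+)\s*([KMGT]B)", text.strip())
    if match is None:
        raise ValueError(f"unrecognized size: {text!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def parse_fields(output):
    """Split 'Key: Value' lines into a dict, cutting at the first colon only."""
    fields = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def sent_exceeds_performance_tier(move_output, footprint_output):
    """True if the move has already sent more than the bin0 footprint."""
    move = parse_fields(move_output)
    footprint = parse_fields(footprint_output)
    sent = re.search(r"([\d.]+\s*[KMGT]B) sent", move.get("Detailed Status", ""))
    if sent is None:
        return False
    bin0 = parse_size(footprint["Volume Footprint for bin0"])
    return parse_size(sent.group(1)) > bin0


move_text = "Detailed Status: Transferring data: 246.0GB sent."
footprint_text = "Volume Footprint for bin0: 170.2GB"
print(sent_exceeds_performance_tier(move_text, footprint_text))
```

With the figures from this post, 246.0GB sent is already more than the 170.2GB in the performance tier, which is consistent with cloud-tier data being copied as well.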

It looks like this volume (erpauxdb1_u02) was created when the cluster was running ONTAP 9.4. Volumes created before ONTAP 9.5 cannot take advantage of the optimized volume move feature, which stops the data from being pulled back to the performance tier during the move. Only volumes created on ONTAP 9.5 or later can use this feature.
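
The rule described above can be written as a small check. This is a sketch under the assumption that the release a volume was created on is already known as a string such as "9.4" or "9.7P5"; it does not show how to obtain that release from the cluster, and the function names are hypothetical.

```python
import re


def parse_release(release):
    """Extract (major, minor) from a release string such as '9.7P5'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def eligible_for_optimized_move(created_on):
    """True if the volume was created on ONTAP 9.5 or later."""
    # Tuples compare numerically, so (9, 10) correctly sorts after (9, 5).
    return parse_release(created_on) >= (9, 5)


for release in ("9.4", "9.5", "9.7P5"):
    print(release, eligible_for_optimized_move(release))
```

Note that the check uses the release at volume creation time, not the release the cluster is running now: a cluster on 9.7P5 can still host volumes created on 9.4.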

That's great you found that! I'm sorry we weren't able to find it quicker.

Thanks for updating - I hope this helps someone in the future.

Please advise: how can I find out whether a volume (erpauxdb1_u02 in this case) was created when the cluster was running ONTAP 9.4 or earlier?