ONTAP Discussions


Re: Paired array name mismatch using SRA 2 and SRM 5


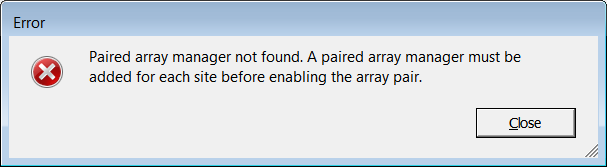

Hello, I am using VMware SRM 5 and SRA 2.0 with three FAS2040 filers running ONTAP 8.0.1-7: two filers in the primary DC and one in the DR DC. I am trying to pair the arrays, but I keep getting the VMware error below each time I click the Enable action.

The DR site has two remote array entries, while each of the primary filers has one entry, but I don't have the option to enable the pair from the primary filers (it's grayed out).

One of the primary arrays:

DR:

The problem is that the DR array wants to connect to a different name (FQDN) while the primary arrays are only recognized as HOSTNAME. Below is an excerpt from the SRM log file:

```
2011-12-28T14:55:36.627-05:00 [05624 verbose 'PropertyProvider' ctxID=7ea28e87 opID=34BFBF3D-00000193] RecordOp REMOVE: taskInProgress["dr.storage.ArrayManager.addArrayPair23"], storage-arraymanager-13192
2011-12-28T14:55:36.627-05:00 [05624 info 'DrTask' ctxID=7ea28e87 opID=34BFBF3D-00000193] Task 'dr.storage.ArrayManager.addArrayPair23' failed with error: (dr.storage.fault.ArrayNotFound) {
--> dynamicType = <unset>,
--> faultCause = (vmodl.MethodFault) null,
--> id = "'HOSTNAME1A.FQDN.local",
--> siteUuid = "aab9fd9f-ead3-45d6-9f42-a90ef02c5120",
--> siteName = "Site Recovery for MIA",
--> msg = "Array 'HOSTNAME1A.FQDN.local' not found at site 'Site Recovery for MIA'.",
```

I have tried to find where the DR array is getting those names from, but I have been unsuccessful so far. They don't come from domain DNS or from the local hosts files (on either vCenter or the filers).

Has anyone seen this error before?


The problem was/is with Operations Manager / OnCommand Core and how it creates the SnapMirror relationships. If I create the SnapMirror relationship manually on the filer, the problem goes away and each filer sees the other as HOSTNAME:/VOL. I still have a ticket open with NetApp for OnCommand, but the pairing of the arrays in SRM is now working.


Hi cserpadss,

I am configuring this for a customer and ran into the same problem: I SnapMirrored the volumes using OnCommand and now can't add the arrays in SRM. Has NetApp ever responded to your issue with an alternate fix? Or is my best bet to remove the OnCommand SnapMirror relationships and redo them via the command line or System Manager?


Create the SnapMirror relationship via OnCommand, but edit snapmirror.conf manually.

In my case, when the SnapMirror relationship was created, it would write the source filer name as HOSTNAME.DOMAIN:/VOL/ on the destination filer. I manually changed it to HOSTNAME:/VOL, and once replication was working with those names in the snapmirror.conf file, I re-added the filers in SRM.

Example snapmirror.conf snippet:

```
XXXXXXNFASSXX=multi(10.X.XX.27,10.X.XX.126)(10.X.XXX.27,10.X.XXX.26)
XXXXXXNFASSXX:vol1_XXX_XX_XXD YYYYYYNFASSYY:SnapMirrorxXXXxXXX_mirror_XXXXXXNFASSXX_vol1_XXX_XX_XXD - - - - -
XXXXXXNFASSXX:vol2_XXX_XX_XXXXD YYYYYYNFASSYY:SnapMirrorxXXXxXXX_mirror_XXXXXXNFASSXX_vol2_XXX_XX_XXXXD - - - - -
```
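The manual edit described above amounts to stripping the domain suffix from the source filer name on each relationship line. Below is a minimal Python sketch of that edit, assuming the whitespace-separated snapmirror.conf layout used in this thread (source, destination, arguments, schedule). The function name `shorten_source_names` is hypothetical; you would still review the output before putting it back on the filer.

```python
import ipaddress


def _is_ip(host: str) -> bool:
    """True if host is a literal IPv4/IPv6 address rather than a name."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def shorten_source_names(conf_text: str) -> str:
    """Rewrite 'host.domain:vol' sources to 'host:vol' (hypothetical helper).

    Blank lines, comments, connection definitions such as
    'name=multi(...)(...)', and IP-address sources are left untouched.
    Rewritten lines have their fields re-joined with single spaces.
    """
    out = []
    for line in conf_text.splitlines():
        fields = line.split()
        if not fields or fields[0].startswith("#") or "=" in fields[0]:
            out.append(line)
            continue
        host, sep, vol = fields[0].partition(":")
        if sep and not _is_ip(host):
            # Keep only the first DNS label, e.g. "vmcoe-fas3070-01".
            fields[0] = host.split(".")[0] + sep + vol
            out.append(" ".join(fields))
        else:
            out.append(line)
    return "\n".join(out)


conf = "vmcoe-fas3070-01.netapp.local:nfs01 vmcoe-fas2020-01:nfs01 - 0 22 * *"
print(shorten_source_names(conf))
# vmcoe-fas3070-01:nfs01 vmcoe-fas2020-01:nfs01 - 0 22 * *
```

Only the source field is touched, because that is the side whose name differs between the destination's view and the source's own snapmirror status output.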


This issue is caused by a mismatch between the way the SnapMirror relationship is defined at the destination (in the case above, by a connection name or by an FQDN used as the source name) and the way the relationship appears at the source controller, where the source name shows simply as the short hostname in snapmirror status output.

The admin guide for the NetApp SRA 2.0 describes an SRA configuration file feature for use when a SnapMirror relationship is defined on the destination by the IP address of the source rather than by the source's hostname. Using an IP address as the source name also causes the mismatch. The feature, the ip_hostname_mapping.txt file in the SRA directory, is a way to tell the SRA about this mismatch so it can still detect the relationship.

The ip_hostname_mapping.txt file can also be used to resolve the mismatch between FQDN name and source name.

Please review the admin guide for details if you want to use this method, but the basic difference is this:

The admin guide gives an example where each filer hostname is mapped to the IP address used in the replication relationship:

```
hostA = 10.10.0.1
hostB = 10.10.0.2
```

Here, hostA and hostB would be replaced with your source and destination controller hostnames.

To use the feature for FQDNs, you would put this:

```
hostA = <hostA FQDN>
hostB = <hostB FQDN>
```

where <hostA FQDN> is the FQDN of that controller.

You should put the same entries in the ip_hostname_mapping.txt file on both SRM servers, as the entries will be needed later anyway if you reverse replication and fail back. Please review this in the admin guide, as there is also a config file option you have to turn on to enable the use of the ip_hostname_mapping.txt file.
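To make the idea concrete, here is a hypothetical Python model of what a mapping like this lets a tool do: treat the name reported at the destination (an FQDN or IP) as the same array as the short hostname reported at the source. It is not the SRA's actual code. It assumes one `hostname = value` pair per line, as in the examples above; skipping lines that start with `#` as comments is an added assumption.

```python
def parse_mapping(text: str) -> dict[str, str]:
    """Parse 'hostname = value' lines into {hostname: value}."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, assumed comments, and malformed lines
        host, value = line.split("=", 1)
        mapping[host.strip()] = value.strip()
    return mapping


def canonical_name(name: str, mapping: dict[str, str]) -> str:
    """Map an FQDN or IP back to its short hostname; other names pass through."""
    for host, value in mapping.items():
        if name.lower() == value.lower():
            return host
    return name


mapping = parse_mapping("""
vmcoe-fas3070-01 = vmcoe-fas3070-01.netapp.local
vmcoe-fas2020-01 = vmcoe-fas2020-01.netapp.local
""")

# The destination reports the FQDN and the source reports the short name;
# after mapping, both refer to the same array.
print(canonical_name("vmcoe-fas3070-01.netapp.local", mapping))  # vmcoe-fas3070-01
```

The same lookup covers the IP-address case from the admin guide: with `hostA = 10.10.0.1`, a relationship sourced from `10.10.0.1` would resolve to `hostA`.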

Larry

BTW, I'm working on getting the documentation updated to mention this.

EDIT: Adding some more information for clarity. This is the FQDN use case reproduced in my lab:

SnapMirror relationships viewed on the destination array (note that the source shows as the FQDN, hostname.netapp.local):

```
vmcoe-fas2020-01> snapmirror status
Snapmirror is on.
Source                                Destination              State         Lag         Status
vmcoe-fas3070-01.netapp.local:nfs01   vmcoe-fas2020-01:nfs01   Snapmirrored  00:07:41    Idle
vmcoe-fas3070-01.netapp.local:nfs02   vmcoe-fas2020-01:nfs02   Snapmirrored  06:32:26    Idle
vmcoe-fas3070-01.netapp.local:nfs03   vmcoe-fas2020-01:nfs03   Snapmirrored  6052:02:50  Idle
vmcoe-fas3070-01.netapp.local:nfs04   vmcoe-fas2020-01:nfs04   Snapmirrored  6052:02:48  Idle
vmcoe-fas3070-01.netapp.local:vmfs01  vmcoe-fas2020-01:vmfs01  Snapmirrored  00:04:39    Idle
vmcoe-fas3070-01.netapp.local:vmfs02  vmcoe-fas2020-01:vmfs02  Snapmirrored  168:52:29   Idle
```

Destination array /etc/snapmirror.conf file:

```
vmcoe-fas3070-01.netapp.local:nfs01 vmcoe-fas2020-01:nfs01 - 0 22 * *
vmcoe-fas3070-01.netapp.local:nfs02 vmcoe-fas2020-01:nfs02 - 0 22 * *
vmcoe-fas3070-01.netapp.local:vmfs01 vmcoe-fas2020-01:vmfs01 - - - - -
vmcoe-fas3070-01.netapp.local:vmfs02 vmcoe-fas2020-01:vmfs02 - - - - -
vmcoe-fas3070-01.netapp.local:nfs03 vmcoe-fas2020-01:nfs03 - - - - -
vmcoe-fas3070-01.netapp.local:nfs04 vmcoe-fas2020-01:nfs04 - - - - -
```

SnapMirror relationships viewed on the source array (note that the source does not show as an FQDN: because it is the local system here, it simply shows the hostname. A relationship always shows just the hostname when viewed at the source):

```
vmcoe-fas3070-01> snapmirror status
Snapmirror is on.
Source                   Destination              State         Lag         Status
vmcoe-fas3070-01:nfs01   vmcoe-fas2020-01:nfs01   Source        00:09:02    Idle
vmcoe-fas3070-01:nfs02   vmcoe-fas2020-01:nfs02   Source        06:33:47    Idle
vmcoe-fas3070-01:nfs03   vmcoe-fas2020-01:nfs03   Source        6052:04:11  Idle
vmcoe-fas3070-01:nfs04   vmcoe-fas2020-01:nfs04   Source        6052:04:09  Idle
vmcoe-fas3070-01:vmfs01  vmcoe-fas2020-01:vmfs01  Source        00:06:00    Idle
vmcoe-fas3070-01:vmfs02  vmcoe-fas2020-01:vmfs02  Source        168:53:50   Idle
```

Source /etc/snapmirror.conf:

Mine is empty because I have no relationships currently replicating to this array.

The source array's /etc/snapmirror.conf should not have entries yet for the reverse direction of the above relationships; if there are any bad entries there, get rid of them.

Only other relationships, currently replicating in the direction of this array, should be listed.

The ip_hostname_mapping.txt file at both sites contains this:

```
vmcoe-fas3070-01 = vmcoe-fas3070-01.netapp.local
vmcoe-fas2020-01 = vmcoe-fas2020-01.netapp.local
```

(EDIT by Larry on Feb 18, 2012: You have to put both controllers in the above list, even if you are not currently replicating in the other direction. Without both of them in there, storage discovery will still have issues. The other controller will be needed there for the SRM reprotect workflow when replication is reversed.)

ontap_config.txt has this option turned on at both sites:

```
use_ip_for_snapmirror_relation = on
```

The use_ip_for_snapmirror_relation option and the entries in the ip_hostname_mapping.txt file are global options: they apply to all relationships between these two arrays (the VMware-used relationships). So you cannot have some relationships between these two arrays defined by short hostname, some by FQDN, and some by IP. All SRM-managed SnapMirror relationships between these two arrays must be defined the same way, not a mix.
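Because the option and the mapping apply to every SRM-managed relationship between the two arrays, a quick pre-check is to classify how each relationship names its source. A hypothetical Python sketch, assuming all the snapmirror.conf lines passed in belong to a single array pair and that a non-IP name containing a dot is an FQDN:

```python
import ipaddress


def name_style(host: str) -> str:
    """Classify a source host field as 'ip', 'fqdn', or 'short'."""
    try:
        ipaddress.ip_address(host)
        return "ip"
    except ValueError:
        return "fqdn" if "." in host else "short"


def source_name_styles(conf_text: str) -> set[str]:
    """Collect the naming styles used by relationship lines in snapmirror.conf."""
    styles = set()
    for line in conf_text.splitlines():
        fields = line.split()
        # Skip blanks, comments, and connection definitions (name=multi(...)).
        if not fields or fields[0].startswith("#") or "=" in fields[0]:
            continue
        styles.add(name_style(fields[0].split(":", 1)[0]))
    return styles


# Hypothetical mixed file: the second line is not from the lab example.
conf = """\
vmcoe-fas3070-01.netapp.local:nfs01 vmcoe-fas2020-01:nfs01 - 0 22 * *
vmcoe-fas3070-01:vmfs01 vmcoe-fas2020-01:vmfs01 - - - - -
"""
styles = source_name_styles(conf)
if len(styles) > 1:
    print("Mixed source naming:", sorted(styles))  # ['fqdn', 'short']
```

A result with more than one style flags exactly the mix the paragraph above warns against.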

After any changes you have to refresh the adapters to re-run storage discovery, by clicking refresh in the array manager devices tab.

Screenshot of the devices discovered above. Note that I have nfs03 and nfs04 datastores on the NetApp controller, and they are replicated, but they are not in use by my ESX hosts. They show up in discovery anyway, hence nothing in the Datastore column for those. If you don't want them listed, you have to use the volume include/exclude fields on the Edit Array Manager screen to exclude them.

Message was edited by: Larry Touchette

Message was edited by: Larry Touchette - added note under ip_hostname_mapping.txt example


Hello Larry,

I'm having a different issue with SRM 5/SRA2. In SRM 5 Array Managers, the array pairs are detected and enabled just fine but I'm getting the following errors for some reason.

Device '/vol/NFS_datastore_name' cannot be matched to a remote peer device.

Device '/vol/NFS_datastore_name/qtree' cannot be matched to a remote peer device.

Our naming convention is that the NFS datastore is called "nfs_datastore1" in the protected site but it's named "nfs_datastore1_mirror" in the DR site (snapmirror works fine). Does this create pairing issues in SRM 5?

Thanks,

Michael


That error means that those volumes aren't mounted as datastores. When adding a filer in SRM you can choose to either select what volumes to pair or what volumes to omit from the volume search.

Regards,

Carlos Serpa

Systems Engineer

DSS, Inc.

This email was sent from a handheld device, please excuse any typographical errors.


Thanks for your quick reply, Carlos. Actually the NFS datastore in question is indeed mounted on 2 of 4 ESX hosts in the protected site and there are VMs currently running off that datastore. Do you have any other ideas?

Thanks,

Michael


Hello Michael,

Are you using qtrees as datastores or volumes as datastores?

Do you have the whole volume exported or each qtree exported?

If you are using qtrees, then you have to have an /etc/exports line for each qtree separately.

You can use one volume snapmirror relationship to replicate a volume that has multiple qtrees, but with SRM when using qtrees it's best to have a qtree snapmirror relationship for each qtree.

Since you say the SRA is reporting /vol/volume_name and /vol/volume_name/qtree, I think this means you are using qtrees as different datastores but have an export only for the volume.

Larry


Thanks for your quick reply, Larry. This is what our current NFS datastore setup looks like on the ESX hosts in the protected site (this was initially done for us by a third-party vendor when we purchased the NetApp filers). We'd first create an NFS volume called "nfs_datastore1" on the filer, and then create a qtree (say qt1) in this volume. On each ESX host, we would mount the NFS datastore as:

/vol/nfs_datastore1/qt1

and all VMs are saved to the qt1 folder. On the storage filer in the DR site, we would create the NFS volume as "nfs_datastore1_mirror" and set up a SnapMirror relationship to replicate this whole volume (not the qtree) from the protected site to the DR site.

On the storage filer in the protected site, I have setup the NFS exports as follows:

```
/vol/nfs_datastore1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24
```

where x.x.x.0/24 is the NFS IP subnet.

So in our case, do I have to setup NFS exports for the single qtree in each of our NFS datastores for SRM 5? Your help on this is greatly appreciated.

Thanks,

Michael


Having volume names different on source and destination is not a problem.

In the VMware environment, the path shown for the datastore is /vol/nfs_datastore1/qt1, correct?

The SRM server inventories the storage and VMs through vCenter and the ESX hosts. You are having a mismatch in SRM storage discovery because the ESX hosts say they are mounting /vol/nfs_datastore1/qt1, while the SRA reports to SRM what is in the /etc/exports file. When SRM compares what the SRA reports with the vCenter/ESX inventory, it does not find a match, because one is reporting /vol/nfs_datastore1/qt1 and the other is reporting /vol/nfs_datastore1. SRM believes they are different.

Because you have only one qtree in the volume then doing volume snapmirror is just fine.

You will have to change your export line from:

```
/vol/nfs_datastore1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24
```

To:

```
/vol/nfs_datastore1/qt1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24
```

So that what's in the export file matches what's shown in vcenter as the NFS path.
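The comparison described above can be modeled as an exact path match between /etc/exports entries and the NFS paths vCenter shows for each datastore. A hypothetical Python sketch (not SRM's or the SRA's code), assuming the first whitespace-separated field of each non-comment exports line is the exported path:

```python
def exported_paths(exports_text: str) -> set[str]:
    """Return the path (first field) of every non-comment /etc/exports line."""
    paths = set()
    for raw in exports_text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#"):
            paths.add(line.split()[0].rstrip("/"))
    return paths


def unmatched_datastores(exports_text: str, datastore_paths: list[str]) -> list[str]:
    """Datastore mount paths with no identical export line (exact match only)."""
    exported = exported_paths(exports_text)
    return sorted(p for p in datastore_paths if p.rstrip("/") not in exported)


mounted = ["/vol/nfs_datastore1/qt1"]  # NFS path shown in vCenter
before = "/vol/nfs_datastore1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24"
after = "/vol/nfs_datastore1/qt1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24"
print(unmatched_datastores(before, mounted))  # ['/vol/nfs_datastore1/qt1']
print(unmatched_datastores(after, mounted))   # []
```

The point of the exact match is that a volume-level export does not count as a match for a qtree-level mount, even though NFS itself will happily serve the qtree through it.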

Just changing the text in the /etc/exports file might be enough to make SRM storage discovery work. However, if you change the exports file and then make ONTAP re-read it, for example by using the exportfs -r command, you will cause the existing mounts to go stale. Even though the path is the same, exportfs -r would unexport the existing path and then export the new path.


You're a genius, Larry! After modifying the /etc/exports file to include the qtree name, the array pairs succeeded with no more errors. Thank you very much for your help! I have two more questions regarding NFS exports. Is this how it's supposed to be set up in the first place (meaning the NFS exports should match how the NFS datastores are mounted on the ESX hosts)? I modified the /etc/exports file using wrfile without running the exportfs -r command; will there be any issues with the existing NFS volumes mounted on the ESX hosts if I need to reboot the NetApp filer later? Thanks again for all your help on this.

Thanks,

Michael


This is really good stuff. This post will help many people in the future I'm sure.

Your filers will read the /etc/exports file upon reboot, which is the same thing as doing an exportfs -r. If what's in /etc/exports matches the output of the exportfs command, then you're good to go.

Now I am having a different issue with trying to protect VMs between datacenters, but at least the arrays are paired nicely.
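That check can be sketched as a set comparison between the paths in /etc/exports (what a reboot or exportfs -r would load) and the paths listed by a bare exportfs (what is exported in memory right now). A hypothetical Python sketch, assuming each line of both texts starts with the exported path:

```python
def _paths(text: str) -> set[str]:
    """First whitespace-separated field of each non-blank, non-comment line."""
    return {
        line.split()[0]
        for line in text.splitlines()
        if line.strip() and not line.strip().startswith("#")
    }


def exports_drift(exports_file: str, exportfs_output: str) -> tuple[set[str], set[str]]:
    """Return (paths only in the file, paths only in memory)."""
    on_disk, in_memory = _paths(exports_file), _paths(exportfs_output)
    return on_disk - in_memory, in_memory - on_disk


file_text = "/vol/nfs_datastore1/qt1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24"
memory = "/vol/nfs_datastore1 -sec=sys,rw=x.x.x.0/24,root=x.x.x.0/24"
only_file, only_memory = exports_drift(file_text, memory)
print(only_file)    # {'/vol/nfs_datastore1/qt1'}
print(only_memory)  # {'/vol/nfs_datastore1'}
```

Any non-empty difference means the next reboot or exportfs -r will change what is exported, and clients mounted through a path that disappears from memory can be affected.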


Thanks, Carlos. I ran the exportfs command but it still showed the old NFS export (in our case it's /vol/nfs_datastore1) instead of /vol/nfs_datastore1/qt1. Will I have problems when I reboot the filers?

After creating the protection groups in SRM, I'm also having an iSCSI RDM LUN issue when trying to protect a VM running SQL, similar to what this guy experienced (https://communities.netapp.com/thread/15537). The SRM requirement states that any VM with RDM LUNs must reside on the same filer, which is what we have.

In our case two of the five RDM LUNs are showing as non-replicated although they are indeed replicated/SnapMirrored to DR site. Not sure what's going on here. If I can't find a resolution to this, I may have to manually attach those RDM LUNs after a failover.

Thanks,

Michael


Sorry for the delay, I was in China last week...

To try to answer a couple of your questions Michael:

Yes, the values in the /etc/exports file should match each datastore that is mounted. There are lots of tools that look at what's exported and align that with what ESX shows for the mount path. If you export just the volume but mount each qtree separately, I believe this also causes problems for OnCommand and the VSC; here's an example of that: https://communities.netapp.com/message/75022#75022

The exportfs command alone shows what's currently exported in memory. When your filer reboots, the /vol/nfs_datastore1 path will no longer be exported, but each /vol/nfs_datastore1/qtree path will be exported if you have those lines in the file now. However, I'm not 100% sure you won't have a problem when your filer reboots.

My VMware lab is not accessible right now to test, but a colleague and I did try something in another lab with a Linux client NFS-mounting a qtree where the volume is what is exported. While the Linux client was copying data into the export, we changed the export path by updating the exports file to add the qtree name to the end of the export path, then ran exportfs -r (-r means re-read: it unexports anything not in the exports file, like exportfs -u, and then exports everything in the exports file). This made the filer immediately start complaining that the Linux client was trying to access a nonexistent export path. In essence, even though the Linux client was mounting /vol/volname/qtree, the export path from the NFS server really was just /vol/volname. Unexporting the /vol/volname path did affect the client, even though the /vol/volname/qtree path immediately took its place. And a filer reboot would be the same as exportfs -r.

I would recommend you remount your datastores. I think you could do this by vMotioning your VMs off an ESX host, remounting the affected datastores on that host (which is no longer using them) using the exact same path that was used before, then vMotioning back and repeating on the other hosts. I think this would work unless vSphere complains, thinking these are different datastores now. If it won't let you vMotion back, complaining that the target ESX host does not have a connection to the correct datastore, then that is the case, and unfortunately I think you'd have to shut down, unregister, remount, and re-register the VMs.

I'm not sure what to make of your RDM issue. That is supposed to work. In the thread you linked to there was one user reporting that his simply detected ok on a later attempt. If yours is consistently not working I think you'd have to get a case open with netapp about that one.

Larry


Hi Larry,

Hope you had a good time in China, and thank you for the detailed reply. I fully understood what you wrote, so I tested this on our filers in the DR site (FAS3210 single-enclosure HA) last night. Guess what happened? Just like the Linux client in your lab, once I issued the cf takeover command from the HA partner filer, the ESX hosts immediately flagged the NFS datastore (which was mounted as /vol/nfs_datastore/qt) and any VMs residing on that datastore as inaccessible. (Good thing I already had a feeling this might happen from your explanation, so I shut all running VMs down prior to the filer reboot.) Unfortunately, the NFS datastore could not be unmounted from the ESX hosts; an error popped up saying it was in use, since there were still VMs on that datastore. It seemed the only way to fix this was to 1) manually remove each affected VM from the inventory, 2) unmount the NFS datastore from the ESX hosts, 3) re-mount the same NFS datastore with the qtree path, and 4) manually re-add each VM back into the inventory.

Now this presents a major problem for us in the protected site. If the filer (where the current NFS datastores reside) were to go down for whatever reason, our virtual infrastructure would be totally screwed! I guess I have two options here:

1) Modify the /etc/exports file to include both NFS exports for the time being:

/vol/nfs_datastore & /vol/nfs_datastore/qt

Would this work and resolve our problem?

2) Create new NFS FlexVols (with NFS exports properly set up with the qtree name) and mount them on the ESX hosts. Use SVMotion to migrate all VMs from the existing NFS datastores to the new ones, and unmount each old NFS datastore once done. This means all existing SnapMirror relationships (for the NFS datastores) must be rebuilt and everything has to be re-replicated to our DR site.

Do I have any other options?

Anyway, thanks again for all your expert insights.

Thanks,

Michael


Hi Michael,

Regarding item 1), I would put the original /vol/nfs_datastore line back in the exports file. I'm not sure whether having all the lines (the volume-only line and each volume/qtree line) would interfere with SRM storage discovery. There's no harm in doing that temporarily to try storage discovery, but it might cause problems later during failover back to the primary after the reprotect process. When failing over to a site that already has export lines for the datastores (which is the case when failing back), the SRA needs to find and modify the existing exports, and having that extra /vol/volume line in there along with the qtree lines might cause an issue during that failback. I might be able to try this in the next couple of days in my lab.

I don't like the idea of making new datastores and re-replicating either. I want to try a couple of things in my lab.

Larry


I don't have access to my vmware lab for a couple days to test this end to end (in the middle of a sort of lab restructuring). But I did just check a couple things...

Right now on one of my filers I have both of these paths as active exports in memory:

/vol/larryt

/vol/larryt/qtree1

Data ONTAP lets you export /vol/volumename and also export /vol/volumename/qtree out of the same volume. Both these exports are currently active in memory:

```
fas6080c*> exportfs
/vol/larryt -sec=sys,rw,nosuid
/vol/larryt/qtree1 -sec=sys,rw,nosuid
```

The exportfs man page in the Data ONTAP admin guide says this (this is ONTAP 8.0.1; I checked the man page for ONTAP 7.3.5.1 too, and it says the same):

When an NFS client mounts a subpath of an exported file system path, Data ONTAP applies the export options of the exported file system path with the longest matching prefix. For example, suppose the only exported file system paths are /vol/vol0 and /vol/vol0/home. If an NFS client mounts /vol/vol0/home/user1, Data ONTAP applies the export options for /vol/vol0/home, not /vol/vol0, because /vol/vol0/home has the longest matching prefix.
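The longest-matching-prefix rule quoted above can be sketched in Python as follows. This is an illustration of the rule, not ONTAP code; it assumes matching is done on whole path components, so /vol/vol0 does not match /vol/vol01.

```python
from typing import Optional


def _components(path: str) -> list[str]:
    return [part for part in path.split("/") if part]


def effective_export(mount_path: str, exports: list[str]) -> Optional[str]:
    """Return the export whose path is the longest component-wise prefix of mount_path."""
    target = _components(mount_path)
    best, best_len = None, -1
    for export in exports:
        parts = _components(export)
        if target[: len(parts)] == parts and len(parts) > best_len:
            best, best_len = export, len(parts)
    return best


# Example from the man page text quoted above
print(effective_export("/vol/vol0/home/user1", ["/vol/vol0", "/vol/vol0/home"]))
# /vol/vol0/home
```

With both /vol/nfs_datastore and /vol/nfs_datastore/qt exported, a fresh mount of /vol/nfs_datastore/qt resolves to the qtree export under this rule, which is the behavior the remount approach relies on.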

Because ontap allows multiple exports from the same tree path, and because new mounts will use the longest matching path, then I think you could do this:

Leave the /vol/nfs_datastore export line in exports file.

Add export lines for /vol/nfs_datastore/qt (have them occur later in the file)

Run the exportfs -a command. I just tested this: -a reads the exports file and creates all new exports, but it won't change the existing export of /vol/nfs_datastore. After this command, run exportfs alone and it should show output like my example above, with both paths exported.

vMotion all VMs using /vol/nfs_datastore/qt (regular vMotion, not Storage vMotion) to a different ESX host. When you vMotion a VM to a different host, it is effectively removed from the inventory of the evacuated host, so once they are all moved you will be able to unmount the datastore from just that host (through the Configuration tab for that specific host in vCenter, not through the vCenter Datastores icon, which wants to unmount on all hosts).

On the evacuated ESX host, unmount the /vol/nfs_datastore/qt datastore and remount it as /vol/nfs_datastore/qt. At the time of the remount, this host will connect to the export defined by the full /vol/nfs_datastore/qt line, as the ONTAP man page describes.

vMotion the VMs back to the evacuated ESX host. I believe this will work; the only doubt I have is whether vCenter would complain about the return vMotion operation if it somehow thinks this is a different datastore. I hope it would not, because the paths are exactly the same.

Repeat process for other vms and datastores.

If that all goes well remove the /vol/nfs_datastore line from the exports file, so you can configure SRM.

If I get my lab back tomorrow I might have time for a test of this myself.

Larry


Hello Larry,

I never responded to your last reply. Sorry about that (I've been busy with other projects lately). Anyway, I just want to follow up with the NFS exports issue.

My original plan on fixing our NFS exports issue was to follow what you suggested but one thing I just wasn't sure about was this:

"Vmotion the vms back to the evacuated ESX host. I believe this will work, only doubt i have is if vcenter would complain about the return vmotion operation, if it somehow thinks this is a different datastore, I hope it would not because the paths are exactly the same."

Since I didn't want to risk it, I ended up fixing the problem the hard way (which I knew would work). That is, I 1) shut all VMs down and manually removed them from the vCenter inventory; 2) unmounted each affected NFS datastore from the ESX hosts in the cluster; 3) fixed the NFS exports file and rebooted the filers; 4) re-mounted the NFS datastores (with the qtree path) on the ESX hosts; and 5) manually re-added each VM back into the inventory.

BTW, our SRM project is moving along well and it's almost complete. The only issue we are experiencing now is that certain VMs w/ RDM LUNs (Exchange/SQL servers) wouldn't power on during the test run. Depending on which priority group I put the VMs in, the most frequent error we get is this:

Error - Failed to recover RDM '02000f000060a9800064666a41676f6a4b516456674c554e202020'. Failed to connect to NFC service at ESX host. Connection terminated by server

However, I noticed that if I put those VMs in priority group 4 or 5 instead, the chance of successful test run is much higher. So right now I need to find the right order to power on those VMs.

Anyway, many thanks again for all your help on this!

Take care,

Michael


So is the use of the multi directive supported by the NetApp SRA or not?

I have tried everything, and keep getting

vCenter also throws this error: "Array 'hosm1' not found at site 'vcenter.domain.com'"

This is what I have in /etc/snapmirror.conf, where hosm1 is a connection name and not a hostname:

```
#----/etc/snapmirror.con
hosm1=multi(GHoNetApp01,GDrNetApp01)(GHoNetApp01-vif2-70,GDrNetApp01-vif2-70)
hosm1:GHoLLIdxVol01 GDrNetApp01:GHoLLIdxVol01 compression=enable 0 0,2,4,6,8,10,12,14,16,18,20,22 * *
hosm1:GHoVSphereCluster01VolU01 GDrNetApp01:GHoVSphereCluster01VolU01 compression=enable 0 0,2,4,6,8,10,12,14,16,18,20,22 * *
hosm1:GHoVSphereCluster01Vol01 GDrNetApp01:GHoVSphereCluster01Vol01 compression=enable 0 0,2,4,6,8,10,12,14,16,18,20,22 * *
hosm1:GHoDoclinks GDrNetApp01:GHoDoclinks compression=enable 0,5,10,15,20,25,30,35,40,45,50,55 * * *
hosm1:Ghodoclinks1 GDrNetApp01:Ghodoclinks1 compression=enable 0,5,10,15,20,25,30,35,40,45,50,55 * * *
```


mghanawi1, SRM is complaining because the connection name you created, "hosm1", is not a real system name that exists on the network, and it cannot be resolved. Connection names are used only internally by SnapMirror. You can make SRM work with connection names by making the connection name match the hostname of the source system. In your case, that means changing all occurrences of "hosm1" to "GHoNetApp01" (if GHoNetApp01 is the resolvable name of your array) in your snapmirror.conf file. SnapMirror will still use the multi connection, and SRM will recognize the name of the array properly. This is described in this tech report, in the section about SnapMirror connection names: http://media.netapp.com/documents/tr-4064.pdf
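The fix above can be checked mechanically: a relationship whose source is a connection name that is not also a resolvable hostname will not be recognized by SRM. A hypothetical Python sketch, where `known_hosts` stands in for whatever names actually resolve in your environment (an assumption; the sketch does no DNS lookups):

```python
def unresolvable_connection_sources(conf_text: str, known_hosts: set[str]) -> set[str]:
    """Connection names used as relationship sources that are not known hostnames."""
    connections, sources = set(), set()
    for line in conf_text.splitlines():
        fields = line.split()
        if not fields or fields[0].startswith("#"):
            continue
        if "=" in fields[0]:
            # Connection definition, e.g. hosm1=multi(a,b)(c,d)
            connections.add(fields[0].split("=", 1)[0])
        else:
            sources.add(fields[0].split(":", 1)[0])
    return {name for name in sources & connections if name not in known_hosts}


hosts = {"GHoNetApp01", "GDrNetApp01"}  # assumed resolvable names
bad = """\
hosm1=multi(GHoNetApp01,GDrNetApp01)(GHoNetApp01-vif2-70,GDrNetApp01-vif2-70)
hosm1:GHoDoclinks GDrNetApp01:GHoDoclinks compression=enable 0,5,10,15,20,25,30,35,40,45,50,55 * * *
"""
good = bad.replace("hosm1", "GHoNetApp01")  # connection name = source hostname
print(unresolvable_connection_sources(bad, hosts))   # {'hosm1'}
print(unresolvable_connection_sources(good, hosts))  # set()
```

Renaming the connection to the source's hostname keeps the multi() path definitions intact while giving SRM a name it can match.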

Larry