Tech ONTAP Blogs

Consistency Groups in ONTAP

There’s a good reason you should care about CGs – it’s about manageability.

If you have an important application like a database, it probably involves multiple LUNs or multiple filesystems. How do you want to manage this data? Do you want to manage 20 LUNs on an individual basis, or would you prefer just to manage the dataset as a single unit?

This post is part 1 of 2. First, I will explain what we mean when we talk about consistency groups (CGs) within ONTAP.

Part II covers the performance aspect of consistency groups, including real numbers on how your volume and LUN layout affects (and does not affect) performance. It will also answer the universal database storage question, “How many LUNs do I need?” Part II will be of particular interest to long-time NetApp users who might still be adhering to out-of-date best practices surrounding performance.

Volumes vs LUNs

If you’re relatively new to NetApp, there’s a key concept worth emphasizing – volumes are not LUNs.

Other vendors use those two terms synonymously. We don’t. A Flexible Volume, also known as a FlexVol, or usually just a “volume,” is just a management container. It’s not a LUN. You put data, including NFS/SMB files, LUNs, and even S3 objects, inside of a volume. Yes, it does have attributes such as size, but that’s really just accounting. For example, if you create a 1TB volume, you’ve set an upper limit on whatever data you choose to put inside that volume, but you haven’t actually allocated space on the drives.

This sometimes leads to confusion. When we talk about creating 5 volumes, we don’t mean 5 LUNs. Sometimes customers think that they create one volume and then one LUN within that volume. You can certainly do that if you want, but there’s no requirement for a 1:1 mapping of volume to LUN. The result of this confusion is that we sometimes see administrators and architects designing unnecessarily complicated storage layouts. A volume is not a LUN.

Okay then, what is a volume?

If you go back about eighteen years, an ONTAP volume mapped to specific drives in a storage controller, but that’s ancient history now.

Today, volumes are there mostly for your administrative convenience. For example, if you have a database with a set of 10 LUNs, and you want to limit the performance for the database using a specific quality of service (QoS) policy, you can place those 10 LUNs in a single volume and slap that QoS policy on the volume. No need to do math to figure out per-LUN QoS limits. No need to apply QoS policies to each LUN individually. You could choose to do that, but if you want the database to have a 100K IOPS QoS limit, why not just apply the QoS limit to the volume itself? Then you can create however many LUNs the workload requires.

Volume-level management

Volumes are also related to fundamental ONTAP operations, such as snapshots, cloning, and replication. You don’t selectively decide which LUN to snapshot or replicate, you just place those LUNs into a single volume and create a snapshot of the volume, or you set a replication policy for the volume. You’re managing volumes, irrespective of what data is in those volumes.

It also simplifies how you expand the storage footprint of an application. For example, if you add LUNs to that application in the future, just create the new LUNs within the same volume. They will automatically be included in the next replication update, the snapshot schedule will apply to all the LUNs, including the new ones, and the volume-level QoS policy will now apply to IO on all the LUNs, including the new ones.

You can selectively clone individual LUNs if you like, but most cloning workflows operate on datasets, not individual LUNs. If you have an LVM volume group with 20 LUNs, wouldn’t you rather just clone them as a single unit than perform 20 individual cloning operations? Why not put the 20 LUNs in a single volume and then clone the whole volume in a single step?

Conceptually, this makes ONTAP more complicated, because you need to understand that volume abstraction layer, but if you look at real-world needs, volumes make life easier. ONTAP customers don’t buy arrays for just a single LUN; they use them for multiple workloads with LUN counts going into the tens of thousands.

There’s also another important term for a “volume” that you don’t often hear from NetApp. The term is “consistency group,” and you need to understand it if you want maximum manageability of your data.

What’s a Consistency Group?

In the storage world, a consistency group (CG) refers to the management of multiple storage objects as a single unit. For example, if you have a database, you might provision 8 LUNs, configure them as a single logical volume, and create the database. (The term CG is most often used when discussing SAN architectures, but it can apply to files as well.)

What if you want to use array-level replication to protect that database? You can’t just set up 8 individual LUN replication relationships. That won’t work, because the replicated data won’t be internally consistent across LUNs. You need to ensure that all 8 replicas of the source LUNs are consistent with one another, or the database will be corrupt.
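To make the problem concrete, here is a toy Python model. It is a sketch under stated assumptions, not how any real array replicates data: the `Lun` class, the `commit` helper, and the log-before-data write ordering are all hypothetical, and the example uses two LUNs instead of eight to keep it short. It compares snapshots taken per LUN at different moments with a snapshot of both LUNs at the same point in time.

```python
from dataclasses import dataclass, field


@dataclass
class Lun:
    """Toy LUN: an append-only list of transaction IDs written to it."""
    name: str
    blocks: list = field(default_factory=list)

    def snapshot(self) -> tuple:
        # A snapshot is a frozen copy of the LUN contents at this instant.
        return tuple(self.blocks)


def commit(txn_id: int, log: Lun, data: Lun) -> None:
    # Assumed dependent write order: the log record lands before the data.
    log.blocks.append(txn_id)
    data.blocks.append(txn_id)


def is_consistent(log_snap: tuple, data_snap: tuple) -> bool:
    # Every transaction visible in the data must also be present in the log.
    return set(data_snap) <= set(log_snap)


log, data = Lun("log"), Lun("data")
commit(1, log, data)

# Per-LUN snapshots taken at different moments, with a write in between.
log_snap_early = log.snapshot()
commit(2, log, data)
data_snap_late = data.snapshot()

# Group snapshot: both LUNs captured at the same point in time.
group_snap = (log.snapshot(), data.snapshot())

print(is_consistent(log_snap_early, data_snap_late))  # False
print(is_consistent(*group_snap))                     # True
```

The independent snapshots fail the check because the data LUN copy contains transaction 2 while the log LUN copy does not. That mismatch is what a consistency group is meant to rule out.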

This is only one aspect of CG data management. CGs are implemented in ONTAP in multiple ways. This shouldn’t be surprising – an ONTAP system can do a lot of different things. The need to manage datasets in a consistent manner requires different approaches depending on the chosen NetApp storage system architecture and which ONTAP feature we’re talking about.

Consistency Groups – ONTAP Volumes

The most basic consistency group is a volume. A volume hosting multiple LUNs is intrinsically a consistency group. I can’t tell you how many times I’ve had to explain this important concept to customers as well as NetApp colleagues simply because we’ve historically never used the term “consistency group.”

Here’s why a volume is a consistency group:

If you have a dataset and you put the dataset components (LUNs or files) into a single ONTAP volume, you can then create snapshots and clones, perform restorations, and replicate the data in that volume as a single consistent unit. A volume is a consistency group. I wish we could update every reference to volumes across all the ONTAP documentation in order to explain this concept, because if you understand it, it dramatically simplifies storage management.

Now, there are times where you can’t put the entire dataset in a single volume. For example, most databases use at least two volumes, one for datafiles and one for logs. You need to be able to restore the datafiles to an earlier point in time without affecting the logs. You might need some of that log data to roll the database forward to the desired point in time. Furthermore, the retention times for datafile backups might differ from log backups.

We have a solution for that, too, but first let’s talk about MetroCluster.

Consistency Groups & MetroCluster

While regular ol’ ONTAP volumes are indeed consistency groups, they’re not the only implementation of CGs in ONTAP. The need for data consistency appears in many forms. SyncMirrored aggregates are another type of CG that applies to MetroCluster.

MetroCluster is a screaming fast architecture, providing RPO=0 synchronous mirroring, mostly used for large-scale replication projects. If you have a single dataset that needs to be replicated to another site, MetroCluster probably isn’t the right choice. There would probably be simpler options.

If, however, you’re building an RPO=0 data center infrastructure, MetroCluster is awesome, because you’re essentially doing RPO=0 at the storage system layer. Since we’re replicating everything, we can do replication at the lowest level – right down at the RAID layer. The storage system doesn’t know or care about where changes are coming from, it just replicates each little write-to-drives to two different locations. It’s very streamlined, which means it’s faster and makes failovers easier to execute and manage, because you’re failing over “the storage system” in its entirety, not individual LUNs.

Here's a question, though. What if I have 20 interdependent applications and databases and datasets? If a backhoe cuts the connection between sites, is all that data at the remote site still consistent and usable? I don’t want one database to be ahead in time from another. I need all the data to be consistent.

As mentioned before, the individual volumes are all CGs unto themselves, but there’s another layer of CG, too – the SyncMirror aggregate itself. All the data on a single replicated MetroCluster aggregate makes up a CG. The constituent volumes are consistent with one another. That’s a key requirement to ensure that some of the disaster edge cases, such as rolling disasters, still yield a surviving site that has usable, consistent data and can be used for rapid data center failover. In other words, a MetroCluster aggregate is a consistency group, with respect to all the data on that aggregate, which guarantees data consistency in the event of sudden site loss.

Consistency Groups & APIs

Let’s go back to the idea of a volume as a consistency group. It works well for many situations, but what if you need to place your data in more than one volume? For example, what if you have four ONTAP controllers and want to load up all of them evenly with IO? You’ll have four volumes. You need consistent management of all four volumes.

We can handle that, too. We have yet another consistency group capability that we implement at the API level. We did this about 20 years ago, originally for Oracle ASM diskgroups. Those were the days of spinning drives, and we had some customers with huge Oracle databases that were both capacity-hungry and IOPS-hungry to the point they required multiple storage systems.

How do you get a snapshot of a set of 1000 LUNs spread across 12 different storage systems? The answer is “quite easily,” and this was literally my second project as a NetApp employee. You use our consistency group APIs. Specifically, you’d make an API call for “cg-start” targeting all volumes across various systems, then call “cg-commit” on all those storage systems. If all those cg-commit API calls report a success, you know you have a consistent set of snapshots that could be used for cloning, replication, or restoration.
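The start-everywhere-then-commit-everywhere pattern can be sketched in a few lines of Python. This is only an in-memory simulation: `FakeStorageSystem` and `consistent_snapshot` are hypothetical names, no real ONTAP client library is used, and the model assumes that the start call holds writes on the listed volumes until the commit call releases them.

```python
class FakeStorageSystem:
    """In-memory stand-in for one storage system; not a real ONTAP client."""

    def __init__(self, name, volumes, healthy=True):
        self.name = name
        self.volumes = volumes
        self.healthy = healthy      # lets the demo simulate a failed commit
        self.fenced = False
        self.pending = None
        self.snapshots = []

    def cg_start(self, snapshot_name):
        # Phase 1 (assumed behavior): hold writes and stage the snapshot.
        self.fenced = True
        self.pending = snapshot_name

    def cg_commit(self):
        # Phase 2: release the write hold and report success or failure.
        self.fenced = False
        if self.healthy:
            self.snapshots.append(self.pending)
        self.pending = None
        return self.healthy


def consistent_snapshot(systems, snapshot_name):
    # Start on every system before committing on any of them.
    for system in systems:
        system.cg_start(snapshot_name)
    results = [system.cg_commit() for system in systems]
    # The snapshot set is usable only if every commit succeeded.
    return all(results)


systems = [FakeStorageSystem(f"array{i}", [f"vol{i}"]) for i in range(1, 4)]
ok = consistent_snapshot(systems, "nightly")
print(ok)
```

The important design point is the ordering: no system commits until every system has started, so all the snapshots describe the same moment. If any commit reports a failure, the whole set should be treated as unusable.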

You can do this with a few lines of scripting, and we have multiple management products, including SnapCenter, that make use of those APIs to perform data consistent operations.

These APIs are also part of the reason everyone, including NetApp personnel, often forgets that an ONTAP volume is a consistency group. We had those APIs that had the letters “CG” in them, and everyone subconsciously started to think that this must be the ONLY way to work with consistency groups within ONTAP. That’s incorrect; the cg-start/cg-commit API calls are merely one way ONTAP delivers consistency group-based management.

Consistency Groups & SM-BC

SnapMirror Business Continuity (SM-BC) is similar to MetroCluster but provides more granularity. MetroCluster is probably the best solution if you need to replicate all or nearly all the data on your storage system, but sometimes you only want to replicate a small subset of total data.

SM-BC almost didn’t need to support any sort of “consistency group” feature. We could have scoped that feature to just single volumes. Each individual volume could have been replicated and able to be failed over as a single entity.

However, what if you needed a business continuity plan for three databases, one application server, and all four boot LUNs? Sure, you might be able to put all that data into a single volume, but it’s likely that your overall data protection, performance, monitoring, and management needs would require the use of more than one volume.

Here’s how that affects data consistency with SM-BC. Say you’ve provisioned four volumes. The key is that a business continuity plan requires all 4 of those volumes to enter and exit a consistent replication state as a single unit.

We don’t want to have a situation where the storage system is recovering from an interruption in site-to-site connectivity with one volume in an RPO=0 state, while the other three volumes are still synchronizing. A failure at that moment would leave you with mismatched volumes at the destination site. One of them would be later in time than the others. That’s why we base your SM-BC relationships on CGs. ONTAP ensures those included volumes enter and exit an RPO=0 state as a single unit.
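The "single unit" rule can be expressed as a tiny Python model. The `State` enum, its values, and `group_state` are hypothetical names chosen for illustration and do not correspond to actual ONTAP state names. The sketch only shows the logic: a group counts as RPO=0 when every member volume is in sync, and not before.

```python
from enum import Enum


class State(Enum):
    IN_SYNC = "in_sync"          # RPO=0: destination matches the source
    OUT_OF_SYNC = "out_of_sync"  # still resynchronizing


def group_state(volume_states: dict) -> State:
    # The group is in sync only when every member volume is in sync.
    if all(s is State.IN_SYNC for s in volume_states.values()):
        return State.IN_SYNC
    return State.OUT_OF_SYNC


# One volume has caught up after a link interruption; three have not.
states = {
    "vol1": State.IN_SYNC,
    "vol2": State.OUT_OF_SYNC,
    "vol3": State.OUT_OF_SYNC,
    "vol4": State.OUT_OF_SYNC,
}
print(group_state(states).value)  # out_of_sync
```

In this model, the one volume that has already caught up does not change the answer. The group as a whole is still treated as out of sync until the last volume finishes.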

Native ONTAP Consistency Groups

Finally, ONTAP also allows you to configure advanced consistency groups within ONTAP itself. The results are similar to what you’d get with the API calls I mentioned above, except now you don’t have to install extra software like SnapCenter or write a script.

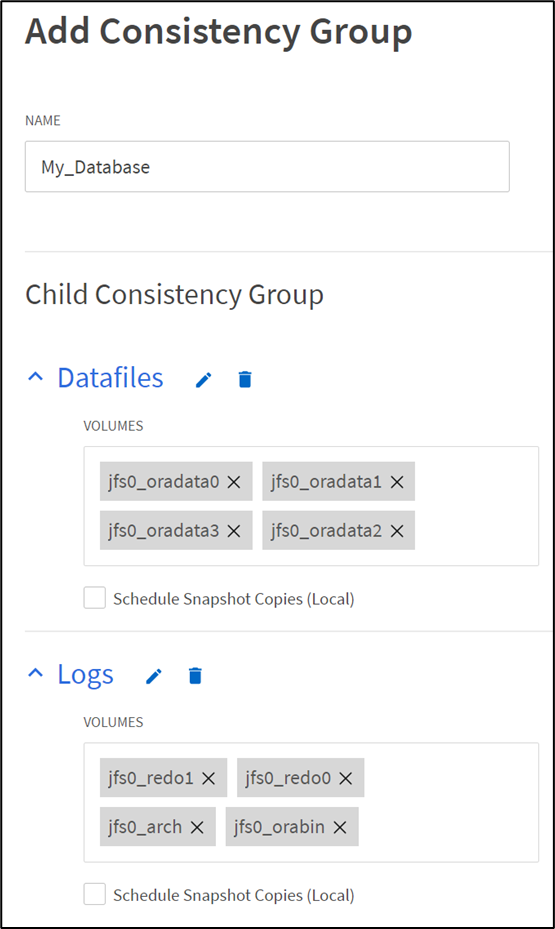

Here’s an example of how you might use ONTAP Consistency Groups:

In this example, I have an Oracle database with datafiles distributed across 4 volumes located on 4 different controllers. I often do that to ensure my IO load is guaranteed to be evenly distributed across all controllers in the entire cluster. I also have my logs in 3 different volumes, plus I have a volume for my Oracle binaries.

The point of the ONTAP Consistency Group feature is to enable users to manage applications and application components, and not worry about LUNs and individual volumes. Once I add this CG (which is composed of two child CGs), I can do things like schedule snapshots for the application itself. The result is a CG snapshot of the entire application. I can now use those snapshots for cloning, restoration or replication.

I can also work at a more granular level. For example, I could do a traditional Oracle hot backup procedure as follows:

- “alter database begin backup;”
- POST /application/consistency-groups/(Datafiles)/snapshots
- “alter database end backup;”
- “alter database archive log current;”
- POST /application/consistency-groups/(Logs)/snapshots

The result of that is a set of volume snapshots, one of the datafiles and one of the logs, which are recoverable using a standard Oracle backup procedure.

Specifically, the datafiles were in backup mode when a snapshot of the first CG was taken. That’s the starting point for a restoration. I then removed the database from backup mode and forced a log switch before making the API call to create a snapshot of the log CG. The snapshot of the log CG now contains the required logs for making that datafile snapshot consistent.
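The ordering of those five steps can be sketched as a small Python function. It is a minimal sketch with several assumptions: `hot_backup`, `run_sql`, and `post` are hypothetical names, the two callables stand in for whatever database driver and HTTP client you actually use, and the CG identifiers are placeholders. The endpoint path is copied from the steps above. The trailing semicolons are dropped because many database drivers expect statements without them.

```python
from typing import Callable


def hot_backup(run_sql: Callable[[str], None],
               post: Callable[[str], None],
               datafiles_cg: str,
               logs_cg: str) -> None:
    """Run the hot backup steps in order, using caller-supplied helpers."""
    base = "/application/consistency-groups"
    run_sql("alter database begin backup")
    try:
        # Snapshot the datafile CG while the database is in backup mode.
        post(f"{base}/{datafiles_cg}/snapshots")
    finally:
        # Always leave backup mode, even if the snapshot call fails.
        run_sql("alter database end backup")
    # Force a log switch so the required redo is archived, then capture it.
    run_sql("alter database archive log current")
    post(f"{base}/{logs_cg}/snapshots")


# Demo with recording stubs instead of a real database or storage system.
calls = []
hot_backup(lambda stmt: calls.append(("sql", stmt)),
           lambda path: calls.append(("post", path)),
           "datafiles-cg-id", "logs-cg-id")
for kind, detail in calls:
    print(kind, detail)
```

Passing the SQL and HTTP helpers in as arguments keeps the sequencing logic separate from connection details, so the same function can be exercised with stubs like the ones above before it is pointed at a real system.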

(Note: You don’t really have to place an Oracle database in backup mode since 12cR1, but most DBAs are more comfortable with that additional step.)

Those two sets of snapshots constitute a restorable, clonable, usable backup. I’m not operating on LUNs or filesystems; I’m making API calls against CGs. It’s application-centric management. There’s no need to change my automation strategy as the application evolves over time and I add new volumes or LUNs, because I’m just operating on named CGs. It even works the same with SAN and file-based storage.

We’ve got all sorts of ideas about how to keep expanding this vision of application-centric storage management, so keep checking in with us.