Intelligent Caching and NetApp Flash Cache


The intelligent use of caching decouples storage performance from the number of disks in the underlying disk array, which can substantially reduce cost while also decreasing the administrative burden of performance tuning. NetApp has been a pioneer in the development of innovative read and write caching technologies. For example, NetApp storage systems use NVRAM as a journal of incoming write requests, allowing the system to commit write requests to nonvolatile memory and respond to writing hosts without delay. This is quite different from the approach of other vendors, who typically place write caching far down in the software stack. For read caching, NetApp employs a multilevel approach.

The technical details of all of these read and write caching technologies, along with the environments and applications where they work best, are discussed in a recent white paper. This article focuses on our second-level read cache, Flash Cache. Flash Cache can cut your storage costs by reducing the number of spindles needed for a given level of performance by as much as 75% and by allowing you to replace high-performance disks with more economical options. A cache amplification effect can occur when you use Flash Cache in conjunction with NetApp deduplication or FlexClone® technologies, significantly increasing the number of cache hits and reducing average latency.

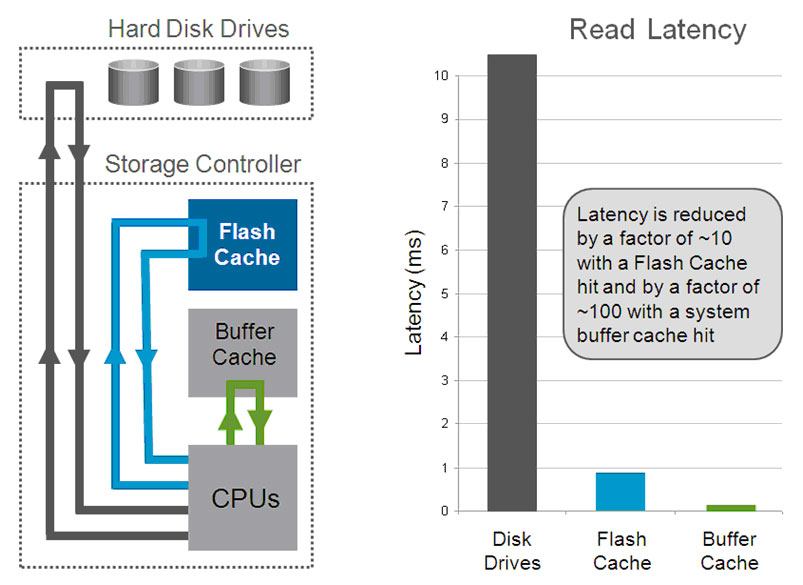

Figure 1) A 512GB Flash Cache module.

Understanding Flash Cache

The most important thing to understand about Flash Cache, and read caching in general, is the dramatic difference in latency for reads from memory versus reads from disk. Compared to a disk read, latency is reduced by a factor of 10 for a Flash Cache hit and by a factor of 100 for a system buffer cache hit.
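To see how these ratios translate into average read latency, the sketch below weights each tier by its share of reads. Only the 10x and 100x ratios come from the text above; the function name, the 10 ms disk latency, and the hit rates are illustrative assumptions, not measured values.

```python
def average_read_latency(disk_latency_ms, buffer_hit_rate, flash_hit_rate):
    """Weighted average read latency across buffer cache, Flash Cache, and disk.

    Both hit rates are fractions of all reads and must sum to at most 1.
    """
    if buffer_hit_rate < 0 or flash_hit_rate < 0:
        raise ValueError("hit rates must be non-negative")
    if buffer_hit_rate + flash_hit_rate > 1:
        raise ValueError("hit rates must sum to at most 1")

    buffer_latency_ms = disk_latency_ms / 100  # ratio quoted in the article
    flash_latency_ms = disk_latency_ms / 10    # ratio quoted in the article
    disk_rate = 1 - buffer_hit_rate - flash_hit_rate

    return (buffer_hit_rate * buffer_latency_ms
            + flash_hit_rate * flash_latency_ms
            + disk_rate * disk_latency_ms)


# Assumed workload: 10 ms disk reads, 20% of reads served from the buffer cache.
without_flash = average_read_latency(10.0, 0.2, 0.0)
with_flash = average_read_latency(10.0, 0.2, 0.5)  # assume 50% Flash Cache hits
print(f"{without_flash:.2f} ms without Flash Cache")  # 8.02 ms
print(f"{with_flash:.2f} ms with Flash Cache")        # 3.52 ms
```

Under these assumed numbers, moving half of all reads from disk to Flash Cache cuts the average read latency by more than half, even though a Flash Cache hit is slower than a buffer cache hit.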

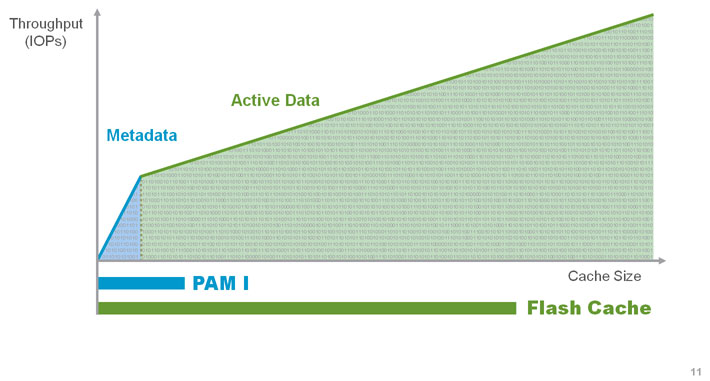

Figure 2) Impact of the system buffer cache and Flash Cache on read latency.

In principle, Flash Cache is very similar to PAM I, the NetApp first-generation performance acceleration module. The most significant difference is that, owing to the economics and density of flash memory, Flash Cache modules have much larger capacity than the previous-generation, DRAM-based PAM I modules. Flash Cache is available in 256GB and 512GB modules. Depending on your NetApp storage system model, the maximum configuration supports up to 4TB of cache (versus 80GB for PAM I). In practice, this translates into a huge difference in the amount of data that can be cached, enhancing the impact that caching has on applications of all types.

Flash Cache provides a high level of interoperability, so it works with whatever you already have in your environment.

How Flash Cache Works

Data ONTAP® uses Flash Cache to hold blocks evicted from the system buffer cache. This allows the Flash Cache software to work seamlessly with the first-level read cache. As data flows from the system buffer cache, the priorities and categorization already performed on the data allow Flash Cache to decide what is or isn't accepted into the cache.

With Flash Cache, a storage system first checks whether a requested block has been cached in one of its installed modules before issuing a disk read. Data ONTAP maintains a set of cache tags in system memory and can determine whether Flash Cache contains the desired block without accessing the cards, speeding access to the Flash Cache and reducing latency.

The key to success lies in the algorithms used to decide what goes into the cache. By default, Flash Cache algorithms try to distinguish high-value, randomly read data from sequential and/or low-value data and keep the high-value data in cache to avoid time-consuming disk reads. NetApp provides the ability to change the behavior of the cache to meet unique requirements. The three modes of operation are default, metadata, and low-priority.
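As a rough illustration of the read path described in this section (buffer cache first, then an in-memory tag check, then disk, with evicted blocks flowing into the second level), here is a minimal sketch. It is not Data ONTAP code: the class name `TwoLevelReadCache`, the LRU eviction policy, and the accept-everything admission rule are all assumptions made for the example, and the real admission algorithms are not modeled.

```python
from collections import OrderedDict


class TwoLevelReadCache:
    """Toy model: an LRU buffer cache whose evicted blocks feed a second-level cache."""

    def __init__(self, buffer_blocks, flash_blocks):
        self.buffer_blocks = buffer_blocks
        self.flash_blocks = flash_blocks
        self.buffer = OrderedDict()      # first-level cache: block id -> data
        self.flash_tags = OrderedDict()  # tags held in memory: which blocks are on flash
        self.flash_store = {}            # stands in for the flash modules themselves

    def read(self, block_id, read_from_disk):
        """Return (data, source), where source is 'buffer', 'flash', or 'disk'."""
        if block_id in self.buffer:
            self.buffer.move_to_end(block_id)
            return self.buffer[block_id], "buffer"
        if block_id in self.flash_tags:  # tag lookup only; a miss never touches flash
            data, source = self.flash_store[block_id], "flash"
        else:
            data, source = read_from_disk(block_id), "disk"
        self._insert_into_buffer(block_id, data)
        return data, source

    def _insert_into_buffer(self, block_id, data):
        self.buffer[block_id] = data
        if len(self.buffer) > self.buffer_blocks:
            victim_id, victim_data = self.buffer.popitem(last=False)
            self._accept_into_flash(victim_id, victim_data)

    def _accept_into_flash(self, block_id, data):
        # Assumption: every evicted block is accepted; a real policy would filter here.
        self.flash_tags[block_id] = True
        self.flash_tags.move_to_end(block_id)
        self.flash_store[block_id] = data
        if len(self.flash_tags) > self.flash_blocks:
            old_id, _ = self.flash_tags.popitem(last=False)
            del self.flash_store[old_id]


cache = TwoLevelReadCache(buffer_blocks=2, flash_blocks=4)
fake_disk = lambda block_id: f"data-{block_id}"  # hypothetical disk read
sources = [cache.read(b, fake_disk)[1] for b in (1, 2, 3, 1, 1)]
print(sources)  # ['disk', 'disk', 'disk', 'flash', 'buffer']
```

In the usage example, block 1 is pushed out of the two-block buffer cache by block 3, so the next read of block 1 is served from the second level rather than from disk.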

Figure 3) Impact of cache size and the type of data cached on throughput.

Using NetApp Predictive Cache Statistics (PCS), a feature of Data ONTAP 7.3 and later, you can determine whether Flash Cache will improve performance for your workloads and decide how much additional cache you need. PCS also allows you to test the different modes of operation to determine whether the default, metadata, or low-priority mode is best.

Full details of NetApp Flash Cache, including PCS, are provided in TR-3832: Flash Cache and PAM Best Practices Guide.

Flash Cache and Storage Efficiency

NetApp Flash Cache improves storage efficiency in two important ways:

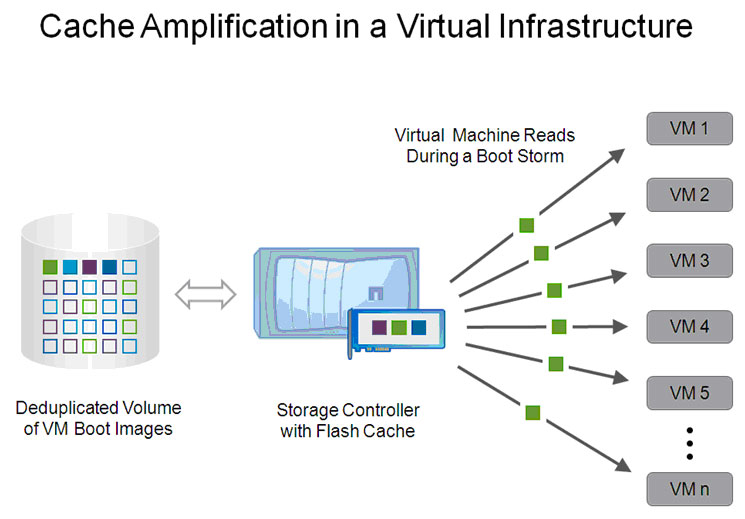

Figure 4) Cache amplification in a virtual infrastructure environment showing the advantage of having deduplicated blocks in cache.

Many applications have high levels of block duplication. As a result, you not only waste storage space storing identical blocks, you also waste cache space by caching identical blocks in the system buffer cache and Flash Cache. NetApp deduplication and NetApp FlexClone technology enhance the value of caching by eliminating block duplication and increasing the likelihood that a cache hit will occur. Deduplication identifies duplicate blocks in your primary storage and replaces them with pointers to a single block. FlexClone allows you to avoid the duplication that typically results from copying volumes, LUNs, or individual files (for example, for development and test operations). In both cases, the end result is that a single block can have many pointers to it. When such a block is in cache, the probability that it will be requested again is therefore much higher.

Cache amplification is particularly advantageous in conjunction with server and desktop virtualization. In that context, cache amplification has also been referred to as Transparent Storage Cache Sharing (TSCS), by analogy with the transparent page sharing (TPS) of VMware.

The use of Flash Cache can significantly decrease the cost of your disk purchases and make your storage environment more efficient. Testing in a Windows® file services environment showed:

Flash Cache in the Real World

A wide range of IT environments and applications benefit from Flash Cache and other NetApp intelligent caching technologies.

Table 1) Applicability of intelligent caching to various environments and applications. (Hyperlinks are related to references for each environment/application.)

SERVER AND DESKTOP VIRTUALIZATION

Both server virtualization and virtual desktop infrastructure (VDI) create some unique storage performance requirements that caching can help to address. Any time you need to boot a large number of virtual machines at one time (for instance, during daily desktop startup or, in the case of server virtualization, after a failure or restart), you can create a significant storage load. Large numbers of logins and virus scanning can also create heavy I/O load.

For example, a regional bank had over 1,000 VMware View desktops and was seeing significant storage performance problems with its previous environment despite having 300 disk spindles. When that environment was replaced with a NetApp solution using just 56 disks plus Flash Cache, outages due to reboot operations dropped from between 4 and 5 hours to just 10 minutes. Problems with nonresponsive VDI servers simply went away, and logins, which previously had to be staggered, can now be completed in just four seconds. The addition of NetApp intelligent caching gave the bank more performance at lower cost.

These results are in large part due to cache amplification. Because of the high degree of duplication in virtual environments (which results from having many nearly identical copies of the same operating systems and applications), they can experience an extremely high rate of cache amplification with shared data blocks. You can eliminate duplication either by applying NetApp deduplication to your existing virtual environment or, if you are setting up a new virtual environment, by using the NetApp Virtual Storage Console v2.0 provisioning and cloning capability to efficiently clone your virtual machines such that each virtual machine with the same guest operating system shares the same blocks. Either way, once the set of shared blocks has been read into cache, read access is accelerated for all virtual machines.
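The effect of shared blocks on cache hits can be illustrated with a small simulation. This is a sketch under stated assumptions, not a model of Data ONTAP: the function names, the LRU cache, the sequential boot pattern, and the sizes (10 virtual machines, 100 blocks per image, a 200-block cache) are all hypothetical.

```python
from collections import OrderedDict


def boot_reads(num_vms, blocks_per_image, shared):
    """Physical blocks read when each VM reads its whole OS image in turn.

    With shared=True every VM's logical block maps to the same physical block
    (as after deduplication or cloning); otherwise each VM has private copies.
    """
    reads = []
    for vm in range(num_vms):
        for logical_block in range(blocks_per_image):
            owner = "shared" if shared else vm
            reads.append((owner, logical_block))
    return reads


def count_hits(physical_reads, cache_blocks):
    """Count hits in an LRU cache keyed by physical block."""
    cache = OrderedDict()
    hits = 0
    for block in physical_reads:
        if block in cache:
            hits += 1
            cache.move_to_end(block)
        else:
            cache[block] = True
            if len(cache) > cache_blocks:
                cache.popitem(last=False)  # evict the least recently used block
    return hits


private_hits = count_hits(boot_reads(10, 100, shared=False), cache_blocks=200)
shared_hits = count_hits(boot_reads(10, 100, shared=True), cache_blocks=200)
print(private_hits)  # 0: every one of the 1,000 reads touches a distinct block
print(shared_hits)   # 900: only the first VM's 100 reads miss
```

With private copies, the cache never sees the same physical block twice, so its contents are never reused. With shared blocks, the first virtual machine warms the cache for all the others.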
CLOUD COMPUTING

Since most cloud infrastructure is built on top of server virtualization, cloud environments will experience many of the same benefits from intelligent caching. In addition, the combination of intelligent caching and FlexShare lets you fully define classes of service for different tenants of shared storage in a multi-tenant cloud environment. This can significantly expand your ability to deliver IT as a service.

DATABASE

Intelligent caching provides significant benefits in online transaction processing environments as well. A recent NetApp white paper examined two methods of improving performance in an I/O-bound OLTP environment: adding additional disks or adding Flash Cache. Both approaches were effective at boosting overall system throughput. The Flash Cache configuration:

E-mail environments with large numbers of users quickly become extremely data intensive. As with database environments, the addition of Flash Cache can significantly boost performance at a fraction of the cost of adding more disks. For example, in recent NetApp benchmarking with Microsoft® Exchange 2010, the addition of Flash Cache doubled the number of IOPS achieved and increased the supported number of mailboxes by 67%. These results will be described in TR-3865: "Using Flash Cache for Exchange 2010," scheduled for publication in September 2010.

OIL AND GAS EXPLORATION

A variety of scientific and technical applications also benefit significantly from Flash Cache. For example, a large intelligent cache can significantly accelerate processing and eliminate bottlenecks during analysis of the seismic data sets necessary for oil and gas exploration.

One successful independent energy company recently installed Schlumberger Petrel 2009 software and NetApp storage to aid in evaluating potential drilling locations. (A recent joint white paper describes the advantages of NetApp storage in conjunction with Petrel.) The company uses multiple 512GB NetApp Flash Cache cards in five FAS6080 nonblocking storage systems with SATA disk drives. Its shared seismic working environment is experiencing a 70% hit rate, meaning that, 70% of the time, the requested data is already in the cache. Applications that used to take 20 minutes just to open and load now do so in just 5 minutes. You can read more details in a recent success story.

Conclusion

NetApp Flash Cache serves as an optional second-level read cache that accelerates performance for a wide range of common applications and can reduce cost by decreasing the number of disk spindles you need and/or by allowing you to use capacity-optimized disks rather than performance-optimized ones.
Using fewer, larger disk drives with Flash Cache can reduce the purchase price of a storage system and provide ongoing savings for rack space, electricity, and cooling. The effectiveness of read caching is amplified when used in conjunction with NetApp deduplication or FlexClone technologies because the probability of a cache hit increases significantly when data blocks are shared. To learn more about all of the NetApp intelligent caching technologies, see our recent white paper.

Got opinions about Flash Cache?

Ask questions, exchange ideas, and share your thoughts online in NetApp Communities.

Explore

What's the Best Way to Deploy Flash?

The flash memory technology used by Flash Cache has many advantages, particularly in comparison to DRAM. Advantages include higher density, lower power consumption, and lower cost per gigabyte. Because of these unique characteristics, NetApp is focusing on the targeted use of flash memory in storage systems and within your storage infrastructure in ways that can deliver the most performance acceleration for a minimum investment. You can learn more about the NetApp flash strategy at the following links:

Architecting Desktop Infrastructure with Flash Cache

Virtual desktop infrastructure (VDI) places significant burdens on virtual servers because of the large number of VMs per host as well as events such as simultaneous logins, boot storms, and virus scans. Find out how you can use Flash Cache to avoid these problems with VMware® View, the VMware VDI solution, in a recent tech report that covers best practices for design, deployment, and management. (The guide refers to Flash Cache by its older name: PAM II.)

Join NetApp at VMworld 2010

If you are interested in learning about new solutions and technologies to streamline your business, VMworld 2010 is the place to be. Join NetApp at this landmark conference from August 30 to September 2 in San Francisco, California. We’ll feature a number of new storage technologies specifically targeted for:

We’ll also highlight our joint solutions with Cisco and VMware. You can even request a private session with a vExpert.
