ONTAP Hardware

Alert: vifmgr.lifs.noredundancy: No redundancy in the failover configuration for 1 LIFs assigned to

Hello!

On NetApp FAS2750, the following alert is recorded in the logs:

Event:

vifmgr.lifs.noredundancy: No redundancy in the failover configuration for 1 LIFs assigned to node "netapp03-02". LIFs: netapp03: netapp03-02_mgmt1

Message Name:

vifmgr.lifs.noredundancy

Sequence Number:

112381

Description:

This message occurs when one or more logical interfaces (LIFs) are configured to use a failover policy that implies failover to one or more ports but have no failover targets beyond their home ports. If any affected home port or home node is offline or unavailable, the corresponding LIFs will be operationally down and unable to serve data.

Action:

Add additional ports to the broadcast domains or failover groups used by the affected LIFs, or modify each LIF's failover policy to include one or more nodes with available failover targets. For example, the "broadcast-domain-wide" failover policy will consider all failover targets in a LIF's failover group. Use the "network interface show -failover" command to review the currently assigned failover targets for each LIF. If the intent is to never fail over the LIF to any other failover targets, set the failover-policy for the LIF to 'disabled' to prevent this alert in the future. For additional information about configuring LIF failover targets, search the NetApp (R) support site for TR-4182.

---------------------------------------------------------------------------------------------------------------------------------------

Node netapp03-02 has one management interface. When this node is rebooted, communication through the management interface is lost for some time until the node boots up again.

What is the right way to handle this?

1. Disable failover on this interface to get rid of the alert:

network interface modify -vserver <vserver> -lif <lif_name> -failover-policy disabled

or

2. Provide failover for this interface? If so, how can this be done in my case?

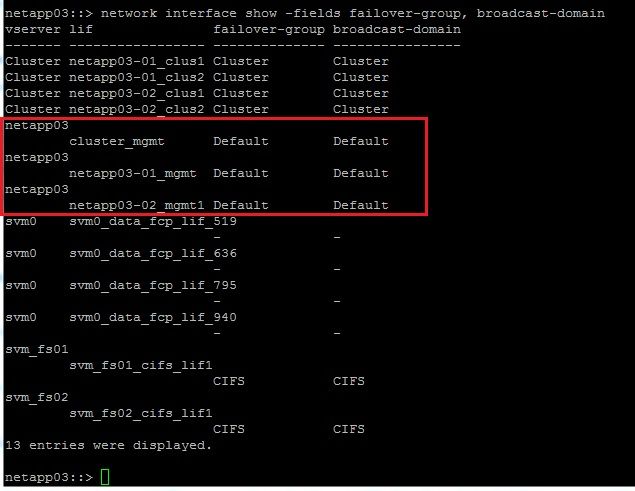

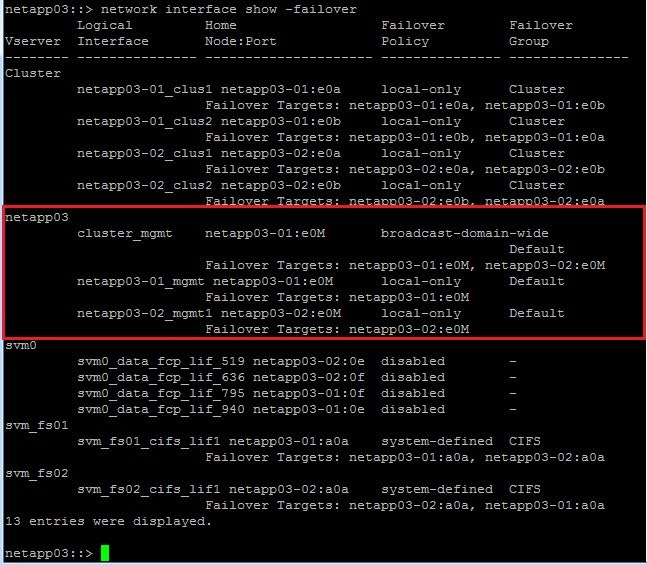

First, check the failover group of that LIF:

network interface show -fields failover-group,broadcast-domain

Ideally, the failover group is identical to the broadcast domain of the LIF's home port.

If this is not the case, ask yourself why, and if there is no reason, fix it.

If the only reason is that it was never cleaned up after an upgrade from 9.x to 9.y, fix it too.

If there is a reason, you know what to do; otherwise, do not hesitate to ask here.

Then add another port from the node that owns the home port to the broadcast domain. You would then no longer have to set the failover policy to disabled and could use local-only instead (which means any other port within the same broadcast domain on the same node).

As an example, this looks like a node management LIF. It had a default failover policy of broadcast-domain-wide.

Still, it is a node management LIF, so it will never leave the node.

If you only have e0M of each node in the corresponding broadcast domain, what is the only failover a node-bound LIF can do?

None: it is no failover, or failover-policy = disabled.

What would you lose if the failover policy is disabled and the home port fails?

It depends.

For a node-mgmt LIF you would lose AutoSupport delivery for that node, but data would still be served.

For an intercluster LIF you would lose SnapMirror/peering.

Other node-bound LIFs like "Cluster" require redundancy, or you might lose data access on a port failure there.

SAN LIFs never fail over.

NAS LIFs are almost never node-bound, apart from very special situations you would be aware of.

Some customers simply accept that they have no redundancy on the node-management LIFs, for example, and only put e0M of each node in that "management" broadcast domain. The cluster-mgmt LIF would still be able to fail over broadcast-domain-wide, so SSH, API, etc. would still work.

Other customers add the management VLAN to the same VLAN trunk that serves the data VLANs (or any other VLAN trunk port) and then add that VLAN port to the broadcast domain that contains, e.g., e0M. That, however, currently introduces another warning you cannot get rid of: it tells you that the broadcast domain has ports of different VLANs, because it considers e0M (an access port, with VLAN 100 as the management VLAN in this example) and a0a-100 (VLAN trunk) to be different, although you know they are identical, just one tagged and the other untagged. So technically there is no issue; cosmetically you get another warning. That warning is of little relevance, because the cluster additionally sends periodic broadcast frames to each port within a configured broadcast domain, and if such a frame did not arrive at any other port configured in the same broadcast domain, it would issue the event vifmgr.bcastDomainPartition (see event catalog show -message-name vifmgr.bcastDomainPartition).

Others require another 1G port next to e0M to be available on each node and cabled identically to e0M. Especially when you run the BMC/SP OOB network in a different physical broadcast domain or a different VLAN than node-mgmt, you could not use e0M at all, as it is still physically shared with the BMC/SP on most platforms; there are platforms where this is not the case, e.g. the A800.
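To make the reasoning above easier to follow, here is a minimal Python sketch of how failover targets could be derived from a LIF's policy and its broadcast domain. It is a simplified model, not ONTAP's actual implementation: the names `Port`, `Lif`, `failover_targets` and `has_no_redundancy` are hypothetical, only the policies disabled, local-only and broadcast-domain-wide are modelled, the home port of the cluster management LIF is assumed, and the extra port e0c is an invented example.

```python
from dataclasses import dataclass

# Roles described in this thread as node-bound (they never migrate to another node).
NODE_BOUND_ROLES = {"node-mgmt", "intercluster", "cluster"}


@dataclass(frozen=True)
class Port:
    node: str
    name: str


@dataclass(frozen=True)
class Lif:
    name: str
    role: str
    home: Port
    failover_policy: str  # "disabled", "local-only" or "broadcast-domain-wide"


def failover_targets(lif, broadcast_domain):
    """Ports the LIF could move to, excluding its home port (simplified model)."""
    if lif.failover_policy == "disabled":
        return []
    candidates = [p for p in broadcast_domain if p != lif.home]
    # local-only and node-bound LIFs may only use ports on the home node.
    if lif.failover_policy == "local-only" or lif.role in NODE_BOUND_ROLES:
        candidates = [p for p in candidates if p.node == lif.home.node]
    return candidates


def has_no_redundancy(lif, broadcast_domain):
    """True when the policy implies failover but no target beyond the home port exists."""
    return lif.failover_policy != "disabled" and not failover_targets(lif, broadcast_domain)


# Broadcast domain "Default" as described in the thread: only e0M of each node.
default_domain = [Port("netapp03-01", "e0M"), Port("netapp03-02", "e0M")]
node_mgmt = Lif("netapp03-02_mgmt1", "node-mgmt", Port("netapp03-02", "e0M"), "local-only")
# Assumption: the cluster management LIF is currently homed on netapp03-01:e0M.
cluster_mgmt = Lif("cluster_mgmt", "cluster-mgmt", Port("netapp03-01", "e0M"), "broadcast-domain-wide")

print(has_no_redundancy(node_mgmt, default_domain))     # True
print(has_no_redundancy(cluster_mgmt, default_domain))  # False
# Hypothetical second port e0c on the same node added to the broadcast domain.
print(has_no_redundancy(node_mgmt, default_domain + [Port("netapp03-02", "e0c")]))  # False
```

In this model the alert condition disappears either when a second port on the same node joins the broadcast domain or when the policy is set to disabled, which matches the options listed above.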

Hello. Thank you for the reply.

If we look at the results of the commands network interface show ... (see pictures), we will see that the management ports of node 1 (netapp03-01) and node 2 (netapp03-02) are part of the Default broadcast-domain in the Default failover-group and they are assigned failover policy: local-only.

netapp03-01 and netapp03-02 each have only themselves as failover targets.

The cluster management port cluster-mgmt has the broadcast-domain-wide policy and the failover targets netapp03-01:e0M and netapp03-02:e0M.

If I assign management ports netapp03-01_mgmt and netapp03-02_mgmt to the same policy broadcast-domain-wide, is that correct?

Will they then be failover targets for each other?

@areti wrote:

If I assign management ports netapp03-01_mgmt and netapp03-02_mgmt to the same policy broadcast-domain-wide, is that correct? Will they then be failover targets for each other?

No, sorry, there is still a misunderstanding.

Both LIFs (as shown by network interface show: logical interfaces, IP aliases, or whatever you call them; you call them "management ports") are node management LIFs. Node management LIFs have a special purpose: the node uses them to access the management network, e.g. to send out AutoSupport messages or for other special purposes.

The thing is, it makes no sense for a node management LIF to leave (migrate off) the node it is managing at any time; it always has to stay with the node. The same applies to intercluster LIFs or SAN LIFs, for example.

Your broadcast domain "Default" (or the failover group "Default", which is identical to the broadcast domain here, as it always should be) contains only one physical port per node, e0M: netapp03-01:e0M and netapp03-02:e0M. This means each node has one physical port, e0M, placed in the broadcast domain as a possible failover target.

So both LIFs, netapp03-01_mgmt for node netapp03-01 and netapp03-02_mgmt for node netapp03-02, have no option to fail over, as each node has only one port, e0M, in broadcast domain "Default". Please read my previous reply again: I explained how other customers handle this and presented three options at the end of that reply that you can choose from.

You have currently chosen none of the options I explained: the failover policy is local-only, but the LIF's broadcast domain contains only a single port local to the node, and this is why you see the warning.

Failover policy "cluster-wide" is not an option for these two LIFs or, more specifically, for any LIF with role "node-mgmt" or role "intercluster" or role "data" with "data-protocol" being "iscsi" or "fcp" or "fc-nvme". For the cluster_mgmt LIF this is different: you can place it on any node, as any node can manage the cluster. But again, a node management LIF can never leave its node.

You can check the man page of network interface create with the command "man network interface create":

-role {cluster|data|node-mgmt|intercluster|cluster-mgmt} - (DEPRECATED)-Role

Note: This parameter has been deprecated and may be removed in a future version of ONTAP. Use the -service-policy parameter instead.

Use this parameter to specify the role of the LIF. LIFs can have one of five roles:

o Cluster LIFs, which provide communication among the nodes in a cluster

o Intercluster LIFs, which provide communication among peered clusters

o Data LIFs, which provide data access to NAS and SAN clients

o Node-management LIFs, which provide access to cluster management functionality

o Cluster-management LIFs, which provide access to cluster management functionality

LIFs with the cluster-management role behave as LIFs with the node-management role except that cluster-management LIFs can failover between nodes.
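The rule stated in this reply, which LIFs may ever move to another node, can be written down as a small Python check. This is only an illustrative sketch of the rule as described here, not anything ONTAP exposes: `can_leave_node` and both constants are hypothetical names, and the "cluster" role is included because the earlier reply also describes it as node-bound.

```python
# Roles described in the thread as node-bound.
NODE_BOUND_ROLES = {"node-mgmt", "intercluster", "cluster"}
# Data protocols whose LIFs stay on their node, as listed in the reply above.
SAN_PROTOCOLS = {"iscsi", "fcp", "fc-nvme"}


def can_leave_node(role, data_protocols=()):
    """Return True if a LIF with this role may fail over to another node (simplified)."""
    if role in NODE_BOUND_ROLES:
        return False
    if role == "data" and SAN_PROTOCOLS & set(data_protocols):
        return False
    return True


print(can_leave_node("node-mgmt"))        # False
print(can_leave_node("data", ["iscsi"]))  # False
print(can_leave_node("data", ["nfs"]))    # True
print(can_leave_node("cluster-mgmt"))     # True
```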

Thanks for clarifying. I am now convinced that my idea was wrong. Your previous answer was exhaustive and gave all possible solutions to this issue.