Today, electronic design automation (EDA) faces exponential growth. Semiconductor (or EDA) firms care most about time to market (TTM), which often depends on how long workloads such as chip design validation and pre-foundry work like tape-out take to complete. Faster workloads also help keep EDA licensing costs down: the less time each job holds a license, the sooner that license is free for other work. For this reason, the more bandwidth and capacity available to the server farm, the better.

To help your EDA jobs finish faster, Google Cloud NetApp Volumes introduced large volumes, which offer the capacity and performance scalability that modern EDA design processes require.

Design process

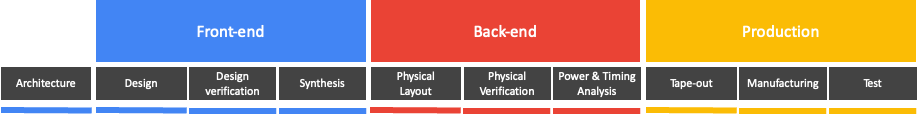

As shown in Figure 1, modern chip design is composed of multiple phases with different performance requirements.

Figure 1) Phases of chip design. Source: Google, Inc. 2024.

During the front-end phase, thousands of single-threaded compute jobs work on millions of small files. This phase is characterized by metadata-intensive data access: massive random access to small files requires high I/O rates at low latency.

During the back-end verification phase, a large number of CPU cores work on long-running compute jobs. This phase is characterized by large sequential data access, which requires high throughput.

Reference architecture

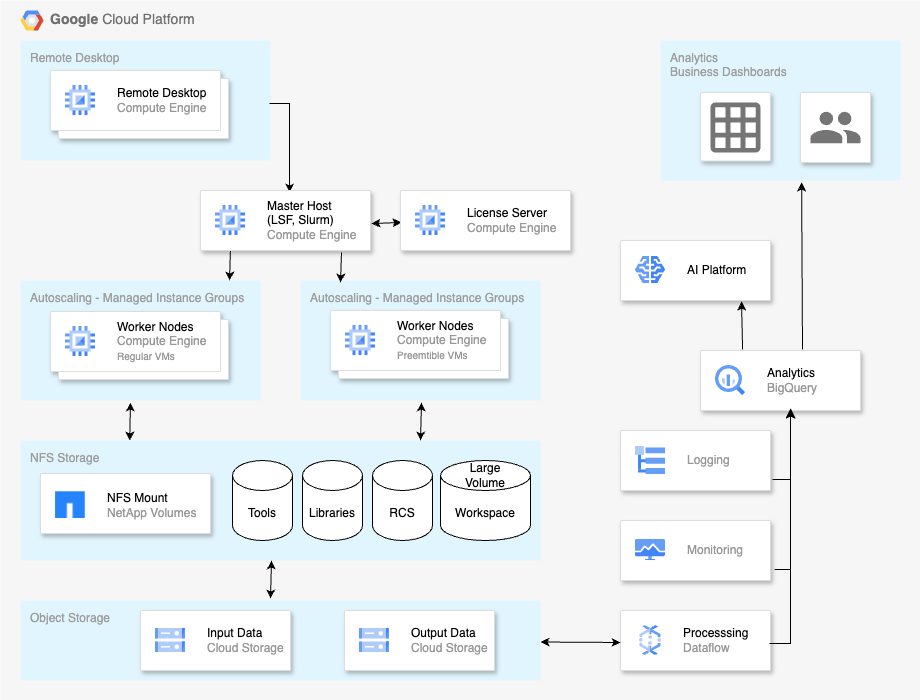

A modern EDA pipeline in Google Cloud combines scalable compute performance with cost-efficient storage and can add data insight capabilities through analytics and AI services.

Figure 2 shows a typical reference architecture in Google Cloud.

Figure 2) Typical reference architecture example. Source: Google, Inc. 2024.

The central compute farm is built from autoscaling instance groups. The machine type can be selected to optimize price/performance for the given requirements, and preemptible virtual machines can optionally be added for cost-efficient bursting. All nodes have access to a common shared file system that hosts the data.

Input data and results can be stored in an object store for archiving. The storage system can also tier cold data to an object store to reduce costs. The analytics and AI components on the right side of Figure 2 are optional.

Storage requirements

EDA workflows rely heavily on sharing data among hundreds of compute nodes, which requires a shared POSIX-compliant file system that can scale in size and performance.

Google Cloud NetApp Volumes (NetApp Volumes) is an ideal choice. It offers NFSv3, NFSv4.1, and SMB file shares, provided as highly available, reliable volumes that clients can mount.

For EDA in particular, NetApp Volumes offers large volumes, which can scale seamlessly up or down anywhere between 15TiB and 1PiB of capacity.

In the front-end phase of the design process, the data access pattern is metadata-I/O intensive. On the SPEC SFS 2020 EDA_blended workload, a single large volume can deliver up to 819,200 IOPS at 4ms latency.
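Little's law (average requests in flight = request rate × average latency) gives a rough feel for the concurrency behind that number. The sketch below assumes steady state and treats 4ms as the average latency, which the benchmark figure may not represent exactly; `outstanding_ops` is a hypothetical helper name.

```python
def outstanding_ops(iops: float, latency_ms: float) -> float:
    """Little's law: average requests in flight = request rate x average latency."""
    return iops * latency_ms / 1000.0  # convert milliseconds to seconds


# Figures quoted above for one large volume running SPEC SFS 2020 EDA_blended.
in_flight = outstanding_ops(819_200, 4)
print(f"about {in_flight:,.0f} requests in flight")  # about 3,277 requests in flight
```

Because front-end jobs are largely single-threaded, reaching that rate takes roughly a few thousand concurrent requests spread across many jobs and clients; a handful of clients is unlikely to drive the volume to its limit.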

The back-end phase demands high sequential read/write throughput, with a mix of 50% large sequential reads and 50% large sequential writes. A large volume can deliver up to 12.5GiB/s of throughput.

Auto-tiering is another important feature for reducing storage costs. Data that is rarely or never read after it has been written to a volume is called cold data. Auto-tiering can be enabled per volume. When it is enabled, NetApp Volumes identifies infrequently used data and transparently moves it from the primary hot tier to a cheaper but slower cold tier.
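The idea of "infrequently used" data can be illustrated with a toy model that flags files whose last access is older than a cooling period. This is a sketch only: it works on whole files, the 30-day `COOLING_PERIOD` and the timestamps are hypothetical, and the service's own granularity and criteria are not described here.

```python
from datetime import datetime, timedelta

# Hypothetical cooling period; not the service's actual setting.
COOLING_PERIOD = timedelta(days=30)


def find_cold(last_access: dict[str, datetime], now: datetime,
              cooling: timedelta = COOLING_PERIOD) -> list[str]:
    """Return the paths not accessed within the cooling period, sorted for stable output."""
    return sorted(path for path, ts in last_access.items() if now - ts > cooling)


# Hypothetical last-access times for a few files in a design tree.
last_access = {
    "rtl/top.v": datetime(2024, 5, 30),
    "runs/2023q4/sim.log": datetime(2024, 1, 15),
    "libs/stdcell.lib": datetime(2024, 5, 20),
}
print(find_cold(last_access, now=datetime(2024, 6, 1)))  # ['runs/2023q4/sim.log']
```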

NetApp Volumes determines whether to move cold data to the hot tier based on the access pattern. Reading the cold data with sequential reads, such as those associated with data copy, file-based backups, indexing, and antivirus scans, leaves the data on the cold tier. Reading the cold data with random reads moves the data back to the hot tier, where it will stay until it cools off again.
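The promotion rule above can be illustrated with a toy model. It assumes data is addressed as numbered blocks and that a read counts as sequential when it is adjacent to the read before or after it in a trace; NetApp Volumes' actual detection heuristics aren't described here, the model does not simulate data cooling off again, and the function names are hypothetical.

```python
def classify_reads(trace: list[int]) -> list[bool]:
    """Flag each read as sequential if it is adjacent to the read before or after it."""
    flags = []
    for i, block in enumerate(trace):
        follows_prev = i > 0 and trace[i - 1] == block - 1
        precedes_next = i + 1 < len(trace) and trace[i + 1] == block + 1
        flags.append(follows_prev or precedes_next)
    return flags


def apply_reads(trace: list[int], cold: set[int]) -> tuple[set[int], list[int]]:
    """Replay a read trace; return (blocks still cold, blocks promoted to hot)."""
    still_cold = set(cold)
    promoted = []
    for block, sequential in zip(trace, classify_reads(trace)):
        if block in still_cold and not sequential:
            # Random read of cold data: move it back to the hot tier.
            still_cold.remove(block)
            promoted.append(block)
        # Sequential reads (copy, backup, scan) leave cold data where it is.
    return still_cold, promoted


cold = set(range(100))                 # hypothetical: blocks 0-99 start on the cold tier
trace = list(range(10)) + [42, 7, 91]  # a sequential scan, then three random reads
still_cold, promoted = apply_reads(trace, cold)
print(promoted)         # [42, 7, 91]
print(len(still_cold))  # 97
```

The scan of blocks 0 through 9 leaves them cold, while the later random read of block 7 promotes it, matching the behavior described above.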

Data on the hot tier exhibits the same performance as a nontiered volume. Data on the cold tier exhibits higher read latencies and reduced read performance. All data is still visible to clients and can be accessed transparently.

By using auto-tiering, NetApp Volumes transparently self-optimizes cost, so that users don’t have to manually place data into different storage silos to optimize cost.

Best practices

To optimize the performance of a large volume, follow these recommendations:

- Use NFSv3 over TCP. NetApp Volumes also supports NFSv4.1 and SMB, but NFSv3 achieves the highest performance.

- Size your large volume to meet your performance requirements, not only your capacity needs. Each TiB of volume size adds 64MiB/s of throughput at the Premium service level or 128MiB/s at the Extreme service level, until the volume's limits are reached.

- Data tiered to the cold tier contributes only 2MiB/s per TiB to the volume's performance, so a volume with a lot of cold data delivers less throughput. Reading the data randomly moves it back to the hot tier, and subsequent access is fast again.

- Each large volume has six IP addresses. Distribute your clients evenly over these addresses.
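The sizing rules in the bullets above can be turned into a small estimator. This sketch assumes that hot data earns the service-level rate, that tiered data earns 2MiB/s per TiB, and that the 12.5GiB/s figure quoted earlier is the per-volume ceiling; the helper names are hypothetical, and published limits may change, so treat the constants as placeholders to check against current documentation.

```python
import math

# Per-TiB throughput from the best practices above, in MiB/s per TiB.
HOT_RATE = {"Premium": 64, "Extreme": 128}
COLD_RATE = 2
# Assumed ceiling: the 12.5GiB/s large-volume figure quoted earlier, in MiB/s.
MAX_MIBPS = 12.5 * 1024
MIN_TIB = 15  # smallest large-volume size quoted earlier


def throughput_mibps(size_tib: float, level: str, cold_tib: float = 0.0) -> float:
    """Estimate volume throughput: hot TiB at the service-level rate, cold TiB at 2MiB/s."""
    if not 0 <= cold_tib <= size_tib:
        raise ValueError("cold_tib must be between 0 and size_tib")
    raw = (size_tib - cold_tib) * HOT_RATE[level] + cold_tib * COLD_RATE
    return float(min(raw, MAX_MIBPS))


def size_for_target(target_mibps: float, level: str) -> int:
    """Smallest whole-TiB size whose all-hot throughput meets the target."""
    if target_mibps > MAX_MIBPS:
        raise ValueError("target exceeds the assumed per-volume ceiling")
    return max(MIN_TIB, math.ceil(target_mibps / HOT_RATE[level]))


print(throughput_mibps(50, "Premium"))                # 3200.0
print(throughput_mibps(100, "Extreme"))               # 12800.0
print(throughput_mibps(100, "Extreme", cold_tib=60))  # 5240.0
print(size_for_target(6 * 1024, "Extreme"))           # 48
```

With 60TiB of a 100TiB Extreme volume tiered, the estimate drops from 12,800MiB/s to 5,240MiB/s, which is why the amount of cold data matters when sizing for performance.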
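The NFSv3 and six-address recommendations can be combined into a small helper that spreads clients round-robin across a volume's addresses and builds an NFSv3-over-TCP mount command for each. The IP addresses, export path, mount point, host names, and `plan_mounts` are hypothetical; `vers=3` and `proto=tcp` are standard Linux NFS mount options, and any other options your environment needs are left out.

```python
from collections import Counter
from itertools import cycle


def plan_mounts(clients: list[str], volume_ips: list[str],
                export_path: str, mount_point: str) -> dict[str, str]:
    """Assign clients to volume IPs round-robin; return an NFSv3-over-TCP mount command per client."""
    ips = cycle(volume_ips)
    return {
        client: f"mount -t nfs -o vers=3,proto=tcp {ip}:{export_path} {mount_point}"
        for client, ip in zip(clients, ips)
    }


# Hypothetical values: substitute the six addresses and export path of your volume.
volume_ips = [f"10.0.0.{n}" for n in range(11, 17)]
clients = [f"eda-node-{i:02d}" for i in range(12)]
plan = plan_mounts(clients, volume_ips, "/eda-vol", "/mnt/eda")

print(plan["eda-node-00"])  # mount -t nfs -o vers=3,proto=tcp 10.0.0.11:/eda-vol /mnt/eda
# Count clients per address: each of the six IPs serves two clients here.
print(Counter(cmd.split()[-2].split(":")[0] for cmd in plan.values()))
```

Round-robin over a fixed client list keeps the per-address counts within one of each other; if nodes come and go with autoscaling, the assignment needs to be recomputed or replaced with a scheme that stays balanced as the group changes.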

Summary

EDA workloads require file storage that can handle high file counts, large capacity, and many parallel operations across potentially thousands of client workstations. EDA workloads also need enough performance to shorten testing and validation, which saves money on licenses and speeds time to market for the latest and greatest chipsets. Google Cloud NetApp Volumes is a reliable and scalable shared file system that can meet demanding metadata-intensive and throughput-intensive requirements.