Tech ONTAP Blogs

Doing More with Less: The Quiet Revolution Powering AI Through IoT and Edge

Edge AI is transforming how we deploy machine learning. Instead of confining intelligence to cloud servers or data centers, models are now being pushed to the farthest corners of our networks… to IoT sensors, industrial gateways, and even microcontrollers. We are talking about drones like the Skydio X10 surveying land, Rockwell Automation using vision models for factory quality control inspections, or Edge Impulse bringing voice control to earbuds. This isn't about shrinking a large model for fun. It's about making AI practical in places where bandwidth is scarce, latency matters, and power is at a premium.

That's what makes this year's All Things Open session, "TinyML Meets PyTorch: Deploying AI at the Edge with Python Using ExecuTorch and TorchScript", so timely. The talk explores how to build and optimize edge-ready models in PyTorch, then deploy them efficiently with ExecuTorch or TorchScript without reinventing your entire MLOps stack. In short, you don't need to build an MLOps snowflake to run AI on IoT or the Edge.

Throughout this discussion, we'll examine why lessons from IoT and Edge AI are relevant far beyond low-power devices. Building models that can perform under tight constraints (limited compute, low memory, and inconsistent connectivity) forces us to think differently about efficiency. Whether you're optimizing inference pipelines in a data center or experimenting with quantized models on a Raspberry Pi, the pursuit is the same: do more with less. The techniques that make AI run on the edge today will define how we make it sustainable, accessible, and adaptable everywhere tomorrow.

Why All Eyes Are On Edge and IoT

If the first wave of AI was about scale, the next is about efficiency. Edge and IoT deployments aren't driven by sheer model size or GPU horsepower… they're powered by ingenuity. The idea is simple: make AI useful where it can't rely on a 400-watt GPU or a persistent cloud connection. In a world obsessed with large language models, it's easy to forget that most devices in the real world run on tiny batteries, slow networks, and modest CPUs. That's where the magic (and the challenge) of Edge AI begins.

This movement isn't fantasy. It's being pushed forward by necessity. Take DeepSeek's R1 model, which made headlines for rivaling GPT-class systems despite being trained on older-generation GPUs because of U.S. export restrictions. Their success wasn't built on cutting-edge hardware; it was built on optimization and squeezing more performance out of less computing power. The announcement was disruptive enough to trigger a roughly 3% sell-off in the tech-heavy Nasdaq and a 17% single-day drop in NVIDIA's share price, because it showed what's possible when efficiency becomes the focus instead of scale.

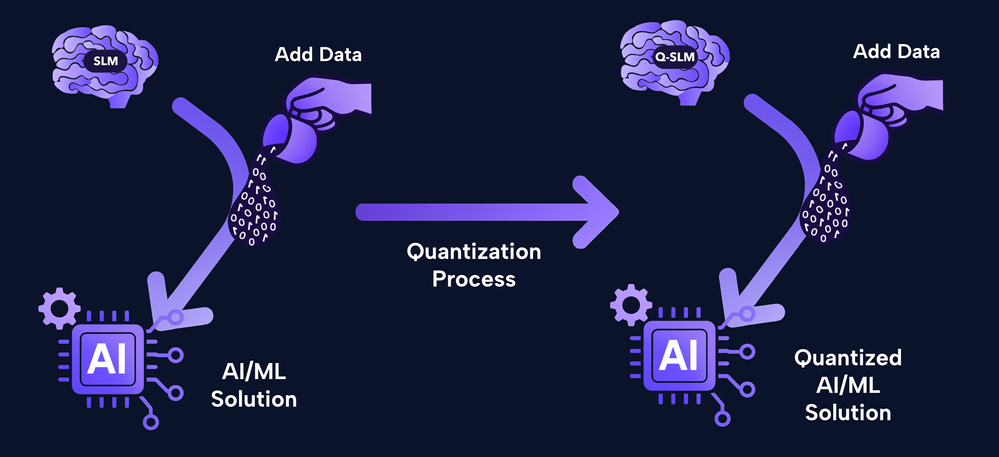

Edge computing follows the same logic. Most real-world deployments don't have racks of GPUs idling in the corner… they have sensors, ARM boards, and local CPUs that you can't afford to waste. If we can make models smaller through quantization, pruning, or distillation, we can unlock a universe of new applications, ranging from factory inspection cameras to health monitors that analyze data locally for privacy and speed. Efficiency doesn't just save cost; it enables possibility.

Ultimately, this is about doing more with less. The question isn't "Can we run AI at the edge?" That's already happening... just look at the iPhone or Android device in your pocket. The real question is how we make it sustainable, repeatable, and accessible without reinventing the wheel for every deployment. ExecuTorch and TorchScript offer a roadmap to achieve exactly that: a single build process, a unified model lineage, and a consistent approach to scaling intelligence from the cloud to the smallest device on the network.

Efficiency Unlocks Use Cases

Efficiency isn't a buzzword. It's the bridge between what's possible in research and what's practical in the real world. Every watt, byte, and millisecond saved through quantization or pruning opens a door to new deployments. If a model can run faster and consume less memory, it can move from the data center to a device, from a server rack to a handheld controller, or from an expensive GPU to a low-power CPU. That's not a compromise… It's a win for accessibility and scalability.
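To put rough numbers on "every byte saved", here is a minimal sketch of the arithmetic behind weight storage at different precisions. The 3-billion-parameter count is a hypothetical round number chosen for illustration, and the function names are made up for this example. The estimate covers weights only; activations, caches, and the small overhead of quantization scales are ignored.

```python
# Approximate storage cost per parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gib(num_params: int, dtype: str) -> float:
    """Return the approximate size of the weights in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / (1024 ** 3)


def footprint_table(num_params: int) -> dict:
    """Map each precision to its rounded weight footprint in GiB."""
    return {
        dtype: round(weight_footprint_gib(num_params, dtype), 2)
        for dtype in BYTES_PER_PARAM
    }


# Hypothetical model size, for illustration only.
sizes = footprint_table(3_000_000_000)
for dtype, gib in sizes.items():
    print(f"{dtype}: {gib} GiB")
```

Going from fp32 to int8 cuts the weight footprint to a quarter, which is often the difference between a model that needs a server and one that fits in the memory of a small board.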

When we apply techniques like dynamic quantization, BF16 optimization, or model distillation, we're effectively democratizing AI. These optimizations reduce model size, cut latency, and preserve accuracy well enough for most real-world scenarios. And while cloud-scale GPUs are still essential for serving millions of users, most edge or IoT systems only need to serve one: the local operator, your iPhone or Android, or the embedded sensor making split-second decisions.
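The core idea behind int8 weight quantization can be sketched in a few lines of plain Python. This is a simplified illustration of symmetric per-tensor quantization, not PyTorch's implementation: real toolchains also deal with per-channel scales, zero points, and quantizing activations at runtime. The weight values and function names are made up for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


# Made-up weights, for illustration only.
weights = [0.42, -1.37, 0.08, 0.91, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest integer bounds the error by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is now stored in one byte instead of four, and the worst-case error per weight is half of `scale`. Whether that error is acceptable depends on the model and the task, which is why accuracy should be re-evaluated after quantizing.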

NetApp's AIPod Mini excels in this field. Built on Intel Xeon processors with Advanced Matrix Extensions (AMX), this platform showcases what's possible when you take the principles of Edge AI and give them enterprise muscle. It's a self-contained system that can deploy quantized ExecuTorch or TorchScript models directly on CPU… no discrete GPU required. Many Small Language Models (SLMs, such as Llama 3.2 3B) already have pre-quantized versions available. When they don't, Data Scientists and AI/ML Engineers should evaluate quantizing the models themselves to reduce resource use. It's the perfect match for AI workloads that need inference at the edge but still demand reliability, performance, and low power draw.

The same approach that enables you to run a GraphRAG pipeline or a DocumentRAG workflow on the AIPod Mini can also be applied to smaller setups, such as a Raspberry Pi or a Jetson Nano. The stack doesn't change, but the mindset does. You train once, export once, and deploy everywhere. Efficiency becomes the multiplier. It expands what AI can do, who can use it, and where it can be deployed.

From Cloud To Edge: The Road Ahead

Efficiency isn't the end of the story… It's the foundation for what's next. As AI moves from the lab to the real world, the ability to deploy models anywhere (on a server, a gateway, or a battery-powered device) will define how accessible and sustainable our systems become. Tools like ExecuTorch or TorchScript make that future tangible. They simplify the complexity of multi-environment deployment into a unified workflow, where the same PyTorch model can run seamlessly on both a GPU cluster and an embedded controller.
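As a rough illustration of the "export once" step, here is a minimal sketch of the TorchScript path using `torch.jit.trace`. The `TinyClassifier` model, its layer sizes, and the helper names are hypothetical stand-ins for a real trained model. ExecuTorch uses a different export flow built on `torch.export`, which is not shown here.

```python
import io

import torch
from torch import nn


class TinyClassifier(nn.Module):
    """Hypothetical stand-in for a trained model."""

    def __init__(self, in_features: int = 16, num_classes: int = 4):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, 32),
            nn.ReLU(),
            nn.Linear(32, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


def export_torchscript(model: nn.Module, example_input: torch.Tensor) -> bytes:
    """Trace the model and serialize it to bytes that can be shipped to a device."""
    model.eval()
    with torch.no_grad():
        traced = torch.jit.trace(model, example_input)
    buffer = io.BytesIO()
    torch.jit.save(traced, buffer)
    return buffer.getvalue()


def load_torchscript(blob: bytes) -> torch.jit.ScriptModule:
    """Load the serialized module on CPU; the Python class is not needed here."""
    return torch.jit.load(io.BytesIO(blob), map_location="cpu")


torch.manual_seed(0)
model = TinyClassifier()
example = torch.randn(1, 16)

blob = export_torchscript(model, example)
restored = load_torchscript(blob)

# The restored module should reproduce the original model's output.
with torch.no_grad():
    max_diff = (model(example) - restored(example)).abs().max().item()
print(f"serialized size: {len(blob)} bytes, max output difference: {max_diff}")
```

In practice the bytes would be written to a file and copied to the target device. Tracing records the operations run for one example input, so models with data-dependent control flow may need a different export approach.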

In my session "TinyML Meets PyTorch: Deploying AI at the Edge with Python Using ExecuTorch and TorchScript", we'll walk through these concepts in detail. We will discuss how quantization, model exporting, and runtime decisions influence both performance and portability. This will be driven by a number of live demos that showcase AI models (yes, plural) built for GPUs, which are then used for inference on constrained hardware, revealing how small optimizations can deliver significant results.

If you're attending All Things Open, join us Monday, October 13, at 11:30 a.m. ET in Room 301 B. You'll leave with a deeper understanding of how to make your AI models leaner, faster, and more deployable without building a bespoke MLOps snowflake. You can find the session details here and the full schedule here.