ONTAP Hardware

Re: Getting the most out of my disks

Hello,

I am somewhat new to the storage game and working for a new company that recently bought a FAS2750 and 1 additional disk shelf.

I am trying to get our system set up in the best way and can't seem to get any use out of a large chunk of our disks.

The FAS2750 has 24 1.64TB disks.

The disk shelf has 12 7.15TB disks.

When I look at my disk summary, it says that I have 85.67TB of spare disk space.

As far as I understand, I would need to place the disk shelf disks into a different aggregate because they are a different size from the ones in the controller unit. I can't seem to figure out how to get the existing aggregates to let go of these disks so that I can add them to their own aggregate. Is that even the best thing to do with the spare disks?

Please let me know if I should elaborate on anything.

Thanks,

Joe
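
A quick arithmetic check, sketched here in Python, suggests that the reported spare space is roughly the 12 shelf disks taken together. The disk counts and sizes are the ones quoted in the post; the variable names are illustrative only.

```python
# Disk counts and sizes as quoted in the post (TB figures are approximate).
shelf_disks = 12
shelf_disk_tb = 7.15

internal_disks = 24
internal_disk_tb = 1.64

# Total raw size of each group of disks.
shelf_total_tb = shelf_disks * shelf_disk_tb
internal_total_tb = internal_disks * internal_disk_tb

print(f"Shelf disks:    {shelf_total_tb:.2f} TB")
print(f"Internal disks: {internal_total_tb:.2f} TB")
```

The shelf total of about 85.8 TB is close to the 85.67TB shown as spare, which would be consistent with none of the shelf disks belonging to an aggregate yet.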

With that config, I typically put all the "internal" (1.64 TB) drives in an aggr on controller 01, and the 1.8 drives in an aggr (+1 spare) on controller 02. It's not a 50/50 split, but it's probably the simplest way to do it.

There are other options, though. You can mix drives inside an aggr, so you could have one RAID group of 1.64 drives and one of 1.8, but that would put it all on one controller.

He said 7 TB drives.

You'll have to use two of those at a minimum with RAID DP for parity, or three with RAID TEC.

I swear that said 1.8. Sorry about that, OP.

Thank you for your input, guys.

I understand that I am going to have to lose the space on two or three of the disks for parity, but how do I remove these 7TB disks from the aggrs that they are in right now to create a new aggregate?

Or am I able to just keep them in the aggregate they are in but use them for data instead of spares? <--- This would be "No", right? Because you can't mix disk sizes in the aggregates?

Thanks,

Joe

You can't remove disks from an aggr. You'll need to delete the aggr.

How many aggrs do you have?

Can you post the output of the following?

row 0

aggr show

storage disk show -partition-ownership

Hello,

Here are the results of the commands you asked about. The last one is pretty long!

BR90::> row 0

(rows)

BR90::> aggr show

Aggregate Size Available Used% State #Vols Nodes RAID Status

--------- -------- --------- ----- ------- ------ ---------------- ------------

aggr0_BR90_01_root 159.9GB 7.73GB 95% online 1 BR90-01 raid_dp, normal

aggr0_BR90_02_root 159.9GB 7.75GB 95% online 1 BR90-02 raid_dp, normal

aggr1_BR90_01 13.05TB 12.95TB 1% online 1 BR90-01 raid_dp, normal

aggr1_BR90_02 13.05TB 13.04TB 0% online 3 BR90-02 raid_dp, normal

4 entries were displayed.

BR90::> storage disk show -partition-ownership

Disk Partition Home Owner Home ID Owner ID

-------- --------- ----------------- ----------------- ----------- -----------

Info: This cluster has partitioned disks. To get a complete list of spare disk capacity use "storage aggregate show-spare-disks".

1.0.0 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.1 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.2 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.3 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.4 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.5 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.6 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.7 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.8 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.9 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.10 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.11 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.12 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.13 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.14 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.15 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.16 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.17 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.18 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.19 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.20 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.21 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.0.22 Container BR90-02 BR90-02 538113064 538113064

Root BR90-02 BR90-02 538113064 538113064

Data BR90-02 BR90-02 538113064 538113064

1.0.23 Container BR90-01 BR90-01 538114752 538114752

Root BR90-01 BR90-01 538114752 538114752

Data BR90-01 BR90-01 538114752 538114752

1.1.0 Container BR90-02 BR90-02 538113064 538113064

1.1.1 Container BR90-01 BR90-01 538114752 538114752

1.1.2 Container BR90-02 BR90-02 538113064 538113064

1.1.3 Container BR90-01 BR90-01 538114752 538114752

1.1.4 Container BR90-02 BR90-02 538113064 538113064

1.1.5 Container BR90-01 BR90-01 538114752 538114752

1.1.6 Container BR90-02 BR90-02 538113064 538113064

1.1.7 Container BR90-01 BR90-01 538114752 538114752

1.1.8 Container BR90-02 BR90-02 538113064 538113064

1.1.9 Container BR90-01 BR90-01 538114752 538114752

1.1.10 Container BR90-02 BR90-02 538113064 538113064

1.1.11 Container BR90-01 BR90-01 538114752 538114752

36 entries were displayed.

@joesmith You replied, but unfortunately it got eaten by the community's overzealous spam filter. I've restored the original response and removed the "duplicate."

Sorry about that!

Thanks for the output.

I would re-assign all the SATA drives to 01 (or 02) and just create a single aggr with a single spare. You'd get the best bang for your buck.

Let me know if you want the commands.
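
To illustrate why a single aggregate tends to give more usable space, here is a rough Python sketch comparing the two layouts. It assumes RAID DP (two parity disks, as mentioned earlier in the thread), one RAID group per aggregate and one spare per node that owns shelf disks; it ignores file system overhead, and the function name `data_disks` is purely illustrative.

```python
def data_disks(total_disks: int, spares: int, parity_per_group: int) -> int:
    """Disks left for data in a single RAID group after spares and parity."""
    remaining = total_disks - spares - parity_per_group
    if remaining < 1:
        raise ValueError("not enough disks for this layout")
    return remaining

RAID_DP_PARITY = 2   # two parity disks per RAID DP group
DISK_TB = 7.14       # usable size reported by "storage disk show"

# Option 1: all 12 shelf disks on one node, one aggregate, one spare.
single = data_disks(12, spares=1, parity_per_group=RAID_DP_PARITY)

# Option 2: 6 disks per node, each node with its own aggregate and spare.
split = 2 * data_disks(6, spares=1, parity_per_group=RAID_DP_PARITY)

print(single, "data disks,", round(single * DISK_TB, 2), "TB raw (single aggr)")
print(split, "data disks,", round(split * DISK_TB, 2), "TB raw (split across nodes)")
```

Under these assumptions the single aggregate keeps nine disks for data, against six when the shelf is split between the two nodes.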

Okay, to do that, would I need to delete both aggr1s and create one new one, or am I able to add the disks in after deleting just one of the aggr1s?

Thanks,

Joe

Leave those as they are. you can't mix sas and sata in the same aggr.

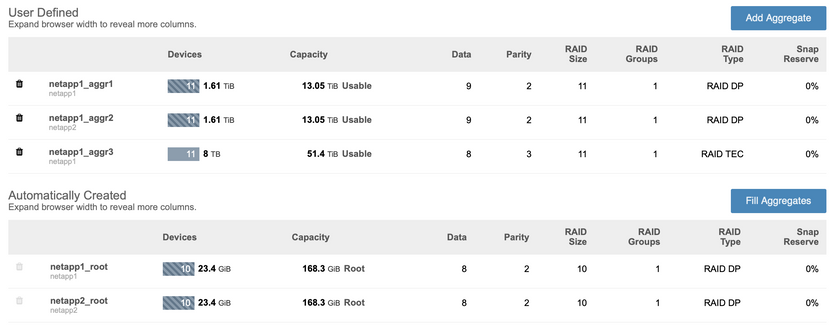

It'll look something like this:

That looks like a great solution. So I guess all I would need to figure out is how to remove the 7TB disks from the current aggr they are assigned to.

I think you mean node? (node-02)

I can provide those, though can you verify something for me first? What's the output of:

row 0

storage disk show

Yeah, sure. Please note that I have changed the aggregate names since the last time I posted, to clean things up a little.

BR90::> row 0

(rows)

BR90::> storage disk show

Disk  Usable Size  Shelf  Bay  Disk Type  Container Type  Container Name  Owner

---------------- ---------- ----- --- ------- ----------- --------- --------

Info: This cluster has partitioned disks. To get a complete list of spare disk capacity use "storage aggregate show-spare-disks".

1.0.0 1.63TB 0 0 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.1 1.63TB 0 1 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.2 1.63TB 0 2 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.3 1.63TB 0 3 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.4 1.63TB 0 4 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.5 1.63TB 0 5 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.6 1.63TB 0 6 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.7 1.63TB 0 7 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.8 1.63TB 0 8 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.9 1.63TB 0 9 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.10 1.63TB 0 10 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.11 1.63TB 0 11 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.12 1.63TB 0 12 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.13 1.63TB 0 13 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.14 1.63TB 0 14 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.15 1.63TB 0 15 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.16 1.63TB 0 16 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.17 1.63TB 0 17 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.18 1.63TB 0 18 SAS shared BR90_aggr2, aggr0_BR90_02_root BR90-02

1.0.19 1.63TB 0 19 SAS shared BR90_aggr1, aggr0_BR90_01_root BR90-01

1.0.20 1.63TB 0 20 SAS shared - BR90-02

1.0.21 1.63TB 0 21 SAS shared - BR90-01

1.0.22 1.63TB 0 22 SAS shared BR90_aggr2 BR90-02

1.0.23 1.63TB 0 23 SAS shared BR90_aggr1 BR90-01

1.1.0 7.14TB 1 0 FSAS spare Pool0 BR90-02

1.1.1 7.14TB 1 1 FSAS spare Pool0 BR90-01

1.1.2 7.14TB 1 2 FSAS spare Pool0 BR90-02

1.1.3 7.14TB 1 3 FSAS spare Pool0 BR90-01

1.1.4 7.14TB 1 4 FSAS spare Pool0 BR90-02

1.1.5 7.14TB 1 5 FSAS spare Pool0 BR90-01

1.1.6 7.14TB 1 6 FSAS spare Pool0 BR90-02

1.1.7 7.14TB 1 7 FSAS spare Pool0 BR90-01

1.1.8 7.14TB 1 8 FSAS spare Pool0 BR90-02

1.1.9 7.14TB 1 9 FSAS spare Pool0 BR90-01

1.1.10 7.14TB 1 10 FSAS spare Pool0 BR90-02

1.1.11 7.14TB 1 11 FSAS spare Pool0 BR90-01

36 entries were displayed.
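
As a rough illustration of how this listing can be read, the Python sketch below counts whole-disk FSAS spares per owning node from a few rows copied from the output. The function name `spare_owners` and the assumed column order (disk, size, shelf, bay, disk type, container type, container name, owner) are illustrative and may not match other ONTAP versions.

```python
from collections import Counter

# A few rows copied from the "storage disk show" output in this thread.
SAMPLE = """\
1.0.20 1.63TB 0 20 SAS shared - BR90-02
1.1.0 7.14TB 1 0 FSAS spare Pool0 BR90-02
1.1.1 7.14TB 1 1 FSAS spare Pool0 BR90-01
1.1.2 7.14TB 1 2 FSAS spare Pool0 BR90-02
1.1.3 7.14TB 1 3 FSAS spare Pool0 BR90-01
"""

def spare_owners(text: str, disk_type: str = "FSAS") -> Counter:
    """Count whole-disk spares of one disk type per owning node."""
    counts = Counter()
    for line in text.splitlines():
        fields = line.split()
        # Assumed columns: disk, size, shelf, bay, type, container type, ..., owner
        if len(fields) >= 7 and fields[4] == disk_type and fields[5] == "spare":
            counts[fields[-1]] += 1
    return counts

owners = spare_owners(SAMPLE)
print(dict(owners))
```

Run against the full listing, the same check would show the 12 shelf disks split evenly between BR90-01 and BR90-02, all of them spares.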

1.1.0 through 1.1.11 are spares, so no need to delete any aggrs.

Have you verified that the new shelf cabling is good using something like Config Advisor?

Nodes (controllers) own disks, and disks get pooled to become aggrs.

For this reconfiguration, you'd want to do something like this:

Note: there are other ways to do this using wildcards (*) and the -force flag, but I figured this is the safest way for a first time doing this.

storage disk option modify -node * -autoassign off

storage disk removeowner -disk 1.1.0

storage disk removeowner -disk 1.1.2

storage disk removeowner -disk 1.1.4

storage disk removeowner -disk 1.1.6

storage disk removeowner -disk 1.1.8

storage disk removeowner -disk 1.1.10

storage disk assign -disk 1.1.0 -owner node-01

storage disk assign -disk 1.1.2 -owner node-01

storage disk assign -disk 1.1.4 -owner node-01

storage disk assign -disk 1.1.6 -owner node-01

storage disk assign -disk 1.1.8 -owner node-01

storage disk assign -disk 1.1.10 -owner node-01

storage disk show

storage disk option modify -node * -autoassign on

At this point all the new 8TB SATA drives will be owned by node-01 (BR90-01 in your output), and you can create a new aggr with 11 drives plus 1 spare.
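
The repetitive removeowner and assign lines above could also be generated rather than typed by hand. The Python sketch below only builds and prints the command strings; it does not talk to the cluster. The helper name `reassign_commands` is hypothetical, and `BR90-01` is the node name taken from the output earlier in the thread, used here in place of the `node-01` placeholder.

```python
def reassign_commands(disks: list[str], new_owner: str) -> list[str]:
    """Return removeowner commands followed by assign commands, one per disk."""
    remove = [f"storage disk removeowner -disk {d}" for d in disks]
    assign = [f"storage disk assign -disk {d} -owner {new_owner}" for d in disks]
    return remove + assign

# Even-numbered bays in shelf 1 are the ones currently owned by BR90-02.
disks_to_move = [f"1.1.{bay}" for bay in range(0, 12, 2)]

for cmd in reassign_commands(disks_to_move, "BR90-01"):
    print(cmd)
```

Reviewing the printed list against the `storage disk show` output before pasting anything into the cluster shell is a sensible extra check.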

You are spot on.

Thank you so much for sticking with me! I really appreciate your help. You have gotten me going.

Thanks,

Joe