Tech ONTAP Blogs

Unleash the Power of JupyterHub with Google Cloud NetApp Volumes

Today’s world is driven by high-tech infrastructure, and organizations are pushing to get the maximum return from their technology investments. The core principles of modern IT have evolved around automated resource allocation, collaborative platforms, scalability, cost-effectiveness, centralized access, and seamless user management. Organizations are constantly looking for platforms and technologies that can meet these needs and support current and next-generation workloads.

One platform that brings all these needs together is JupyterHub, which has emerged as a vital tool for fostering collaboration and enhancing productivity across data-driven teams. JupyterHub is a scalable multiuser platform that enables organizations to deploy and manage Jupyter Notebooks for many kinds of users. Users work in isolated environments while securely and efficiently accessing shared computational resources.

In the world of artificial intelligence and machine learning (AI/ML), JupyterHub has become a cornerstone for data scientists, analysts, researchers, and AI practitioners. It provides a collaborative, interactive environment for exploring data, building models, and sharing insights, all built on the foundational workflow of sharing notebooks, code, and results in a controlled environment.

Additionally, JupyterHub’s ability to integrate with enterprise authentication systems and cloud services ensures that it aligns with organizational security policies and scalability needs. By centralizing notebook management and streamlining workflows, JupyterHub enhances data-driven decision-making and accelerates innovation, making it an indispensable component of contemporary enterprise IT infrastructure.

Simply put, JupyterHub and Jupyter Notebooks have made their impact across a wide spectrum of user personas in the modern IT landscape. However, one of the core challenges in working with JupyterHub is effectively presenting and managing data across different users in a shared environment. Whether it’s loading data for ML experiments, analyzing data, or presenting results, the key to collaboration and efficiency is the way data is ingested, shared, and visualized within JupyterHub.

This is where Google Cloud NetApp Volumes steps in!

Google Cloud NetApp Volumes is a fully managed cloud file storage solution that allows users to easily host and manage their data on an enterprise-grade, high-performance storage system with support for NFS and SMB protocols.

To explore this combination of JupyterHub and NetApp Volumes, this blog covers highlights, integration points, implementation, and typical day-to-day workflows.

Why Google Cloud NetApp Volumes for JupyterHub?

Google Cloud NetApp Volumes is a powerful, scalable, and flexible file storage service offering a range of features that make it well suited as a data plane for JupyterHub.

- Persistent storage. Keep Jupyter Notebooks and data available, even when the user’s server pods are restarted. Because the data lives on a NetApp Volumes-backed persistent volume rather than inside the pod, it stays intact and is automatically reattached to the new pod after a restart, which is crucial for continuity and for preserving the state of the projects running on JupyterHub.

- Scalability and performance. Scale effortlessly to accommodate growing data needs and demanding workloads. The high-performance storage capabilities of NetApp Volumes make data access for Jupyter Notebooks smooth and efficient, even when dealing with large datasets. Users can match storage provisioning for their notebooks to their performance requirements by choosing the appropriate service level.

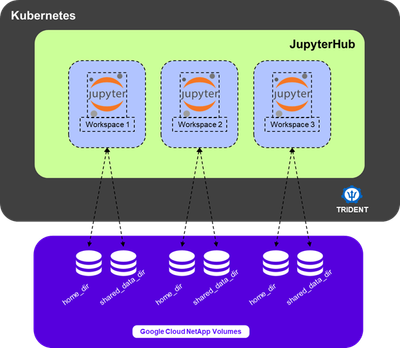

- Integration with Kubernetes. Integrate seamlessly with Kubernetes through the NetApp® Trident CSI driver, simplifying the process of provisioning and managing persistent volumes for the JupyterHub deployment. When a user’s Jupyter Notebook server is spun up, a persistent volume claim (PVC) is created automatically; the Trident CSI driver then provisions a persistent volume on NetApp Volumes and binds it to the PVC. Each user is allocated a dedicated amount of data storage for their private use.

- Security and data protection. Use robust security features, including encryption and access control, to protect sensitive data. With support for NetApp Snapshot™ copies and backups, recover data quickly in case of accidental deletion or system failures. By leveraging , you can protect Jupyter Notebooks and their persistent data by using Snapshot copies and backups, and you can support business-critical notebooks with a business continuity and disaster recovery plan.

- Cost-effectiveness. NetApp Volumes is a cost-effective way to store data in the cloud, reducing the need for additional storage infrastructure and minimizing overall costs. For large datasets, optimize the storage footprint by automatically tiering infrequently accessed data to lower-cost storage while the client continues to see the same file system view.
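On the Kubernetes side, the Snapshot copies mentioned under security and data protection can be requested through the standard CSI snapshot API, which Trident supports. The script below is a minimal sketch under stated assumptions: the cluster has the VolumeSnapshot CRDs and snapshot controller installed, and the names `trident-snapshotclass`, `claim-example-user`, `claim-example-user-snap`, and `gcnv-nfs-sc` are hypothetical placeholders for your snapshot class, a user’s notebook PVC, the snapshot, and your Trident storage class. It writes the manifests to a file so they can be reviewed before they are applied.

```shell
#!/usr/bin/env bash
# Sketch: snapshot a user's notebook PVC and restore it into a new PVC.
# Assumes CSI snapshot CRDs/controller are installed; all names are hypothetical.
set -euo pipefail

cat > notebook-snapshot.yaml <<'EOF'
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: trident-snapshotclass          # hypothetical name
driver: csi.trident.netapp.io          # Trident CSI driver takes the snapshot
deletionPolicy: Delete
---
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: claim-example-user-snap        # hypothetical snapshot name
spec:
  volumeSnapshotClassName: trident-snapshotclass
  source:
    persistentVolumeClaimName: claim-example-user   # hypothetical user PVC
---
# Restore path: a new PVC whose contents come from the snapshot above.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: claim-example-user-restore     # hypothetical name
spec:
  storageClassName: gcnv-nfs-sc        # hypothetical Trident storage class
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi                    # at least the source volume's size
  dataSource:
    apiGroup: snapshot.storage.k8s.io
    kind: VolumeSnapshot
    name: claim-example-user-snap
EOF

# Apply in the JupyterHub namespace (needs kubectl and cluster access):
#   kubectl apply -n <namespace-name> -f notebook-snapshot.yaml
echo "wrote notebook-snapshot.yaml"
```

Keeping the restore as a separate PVC, rather than overwriting the original, lets a user compare the recovered notebooks with the current ones before switching over.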

Implementing Google Cloud NetApp Volumes with JupyterHub

Setting up a JupyterHub environment with Google Cloud NetApp Volumes is a three-step process:

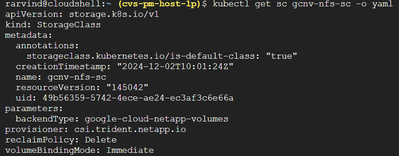

- Configure a Kubernetes cluster with the Trident CSI and Google Cloud NetApp Volumes as a back end.

  For detailed instructions, refer to the NetApp Trident with Google Cloud NetApp Volumes blog.

- Create a storage class in the Kubernetes cluster by using Trident CSI as the provisioner.

- Deploy JupyterHub on the previously created Kubernetes cluster by following the Zero to JupyterHub with Kubernetes approach.

  As part of the deployment, update the config.yaml file for JupyterHub to point to the storage class that was created earlier.
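Step 2, creating the storage class, could look like the following minimal sketch. It assumes Trident’s CSI provisioner name `csi.trident.netapp.io` and selects back ends through the `backendType` parameter set to Trident’s driver for NetApp Volumes, `google-cloud-netapp-volumes`; the class name `gcnv-nfs-sc` is hypothetical. The script only writes the manifest, so it can be reviewed before it is applied.

```shell
#!/usr/bin/env bash
# Sketch: a StorageClass that hands volume provisioning to the Trident CSI driver.
set -euo pipefail

cat > gcnv-nfs-sc.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gcnv-nfs-sc                      # hypothetical name
provisioner: csi.trident.netapp.io       # Trident CSI driver
parameters:
  backendType: "google-cloud-netapp-volumes"   # only NetApp Volumes back ends
allowVolumeExpansion: true               # lets user volumes grow later
EOF

# Apply once the Trident back end from step 1 is online:
#   kubectl apply -f gcnv-nfs-sc.yaml
echo "wrote gcnv-nfs-sc.yaml"
```

Setting `allowVolumeExpansion` is a design choice rather than a requirement: it lets an administrator raise a user’s capacity later by editing the PVC instead of migrating data.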

From here on, all storage needs for JupyterHub are serviced by Google Cloud NetApp Volumes.
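For the config.yaml update in step 3, a minimal sketch follows. It assumes the Zero to JupyterHub chart’s `singleuser.storage` settings, where `type: dynamic` creates one PVC per user and `capacity` defaults to 10Gi, and a Trident storage class named `gcnv-nfs-sc`, which is a hypothetical name; substitute the class you created. The script writes the file only; the install commands are left as comments because they need helm and access to your cluster.

```shell
#!/usr/bin/env bash
# Sketch: a minimal Zero to JupyterHub config.yaml whose per-user storage
# is provisioned dynamically through a Trident storage class.
set -euo pipefail

cat > config.yaml <<'EOF'
singleuser:
  storage:
    type: dynamic                 # one PVC per user, created at server start
    capacity: 10Gi                # per-user size (the chart's default)
    dynamic:
      storageClass: gcnv-nfs-sc   # hypothetical; use your Trident storage class
EOF

# Install or upgrade the chart with this file (needs helm and cluster access):
#   helm repo add jupyterhub https://hub.jupyter.org/helm-chart/
#   helm repo update
#   helm upgrade --cleanup-on-fail --install <helm-release-name> jupyterhub/jupyterhub \
#     --namespace <namespace-name> --create-namespace \
#     --version=<chart-version> --values config.yaml
echo "wrote config.yaml"
```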

A typical implementation for a team of data scientists

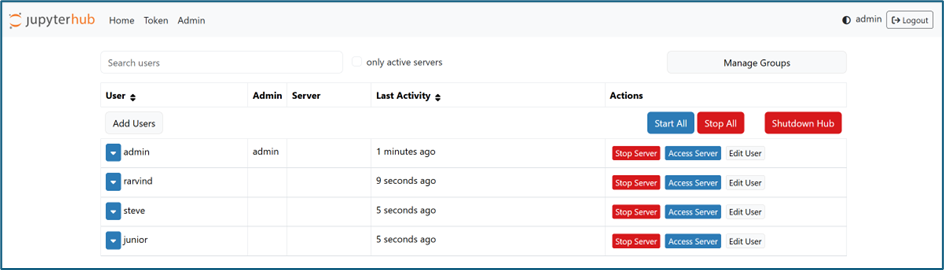

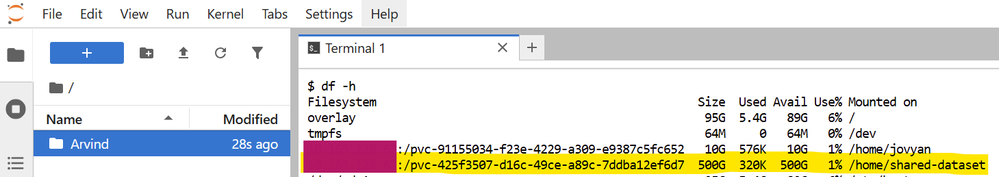

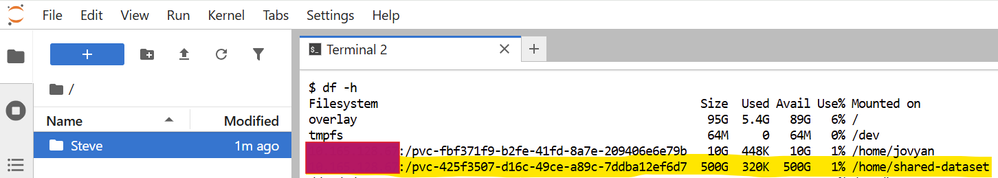

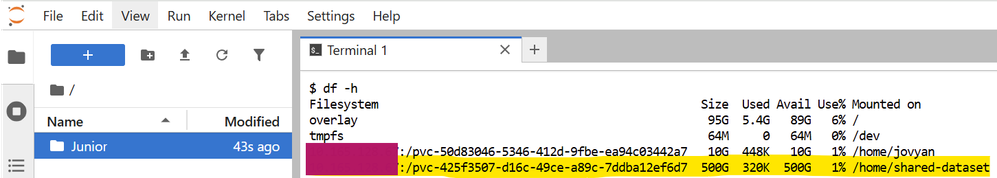

Consider this scenario: Arvind, Steve, and Junior are working on an AI project. Although they each have their own user space, they need to collaborate over a common dataset for their data science operations.

By default, each of them is assigned 10GiB of dedicated personal storage for their workspace.

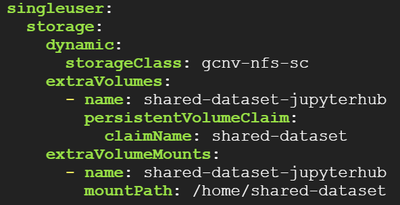

A PVC for 500GiB of shared storage for AI workloads is serviced by NetApp Volumes through the storage class gcnv-nfs-perf-sc, which maps to a high-performance storage tier. This volume hosts the dataset that the team uses for its AI/ML operations.
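One way to express the storage class and the shared claim is sketched below, under assumptions. The class name `gcnv-nfs-perf-sc` comes from this scenario, but how it reaches the high-performance tier is assumed: here it uses a Trident `selector` that matches a hypothetical label `performance=extreme` on a back end or virtual pool configured with a high-performance service level. The PVC name `shared-dataset` is also hypothetical. `ReadWriteMany` is what lets every user’s pod mount the same NFS volume at once.

```shell
#!/usr/bin/env bash
# Sketch: a high-performance storage class and the team's 500GiB shared PVC.
set -euo pipefail

cat > shared-dataset.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gcnv-nfs-perf-sc
provisioner: csi.trident.netapp.io
parameters:
  backendType: "google-cloud-netapp-volumes"
  # Assumption: a Trident back end or virtual pool on a high-performance
  # service level carries the hypothetical label performance=extreme.
  selector: "performance=extreme"
allowVolumeExpansion: true
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-dataset            # hypothetical name
spec:
  storageClassName: gcnv-nfs-perf-sc
  accessModes:
    - ReadWriteMany               # NFS lets all user pods mount it together
  resources:
    requests:
      storage: 500Gi
EOF

# Create it in the same namespace as the JupyterHub user pods:
#   kubectl apply -n <namespace-name> -f shared-dataset.yaml
echo "wrote shared-dataset.yaml"
```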

To present this high-performance shared storage to every user space, the JupyterHub configuration in the config.yaml file is updated to mount the shared PVC into each user’s server.
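A sketch of that config.yaml change, under assumptions: it uses the Zero to JupyterHub keys `singleuser.storage.extraVolumes` and `singleuser.storage.extraVolumeMounts`, a shared PVC named `shared-dataset` (hypothetical; use your claim’s name), and a mount path of `/home/jovyan/shared` (also hypothetical). The script writes the fragment to its own file.

```shell
#!/usr/bin/env bash
# Sketch: Zero to JupyterHub values that mount one shared PVC into every user server.
set -euo pipefail

cat > shared-storage-values.yaml <<'EOF'
singleuser:
  storage:
    extraVolumes:
      - name: shared-dataset
        persistentVolumeClaim:
          claimName: shared-dataset      # hypothetical; must match the shared PVC
    extraVolumeMounts:
      - name: shared-dataset
        mountPath: /home/jovyan/shared   # hypothetical path inside each server
EOF
echo "wrote shared-storage-values.yaml"
```

The fragment can be merged into the `singleuser.storage` section of config.yaml, or passed as a second `--values` file after config.yaml. Helm merges maps across values files but replaces lists, so if config.yaml already defines `extraVolumes` or `extraVolumeMounts`, merge those lists by hand.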

For these changes to take effect, upgrade the JupyterHub deployment by using helm upgrade:

```shell
# Re-apply the updated config.yaml to the existing JupyterHub release
helm upgrade <helm-release-name> jupyterhub/jupyterhub --version=<chart-version> -n <namespace-name> --values config.yaml
```

The shared volume is then available within all the user spaces, facilitating seamless collaboration.
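To confirm the result from the users’ side, a small check can be sourced in a terminal inside any user’s server. This is a hypothetical helper, not part of JupyterHub. It assumes the shared volume is mounted at `/home/jovyan/shared` unless another path is passed, and it relies on the `JUPYTERHUB_USER` variable that JupyterHub sets in single-user servers so that each teammate leaves a distinctly named marker file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm the shared directory is mounted and writable,
# leaving a per-user marker file that teammates can see from their servers.
# Meant to be sourced interactively, so it returns instead of exiting.
check_shared_dir() {
  local dir="${1:-/home/jovyan/shared}"   # assumed default mount path
  local who="${JUPYTERHUB_USER:-${USER:-unknown}}"
  if [ ! -d "$dir" ]; then
    echo "missing: $dir" >&2
    return 1
  fi
  local marker="$dir/.shared-check-$who"
  if ! date -u +%FT%TZ > "$marker"; then
    echo "not writable: $dir" >&2
    return 1
  fi
  echo "ok: $dir is writable"
  # List every user's marker to confirm the directory really is shared.
  ls -a "$dir" | grep '^\.shared-check-' || true
}
```

If Arvind, Steve, and Junior each run `check_shared_dir` from their own servers, the last person to run it should see all three markers listed.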

Conclusion

The integration of Google Cloud NetApp Volumes with JupyterHub offers a powerful and flexible solution for managing data in cloud-based application development, data science, and ML workflows. By combining NetApp Volumes’ robust, scalable storage capabilities with JupyterHub’s collaborative, multiuser environment, teams can efficiently access, store, and work on large datasets while maintaining seamless integration with cloud-native tools. This integration enhances performance, data accessibility, and collaboration, and it allows developers, data scientists, and researchers to focus on their work rather than managing infrastructure. As businesses continue to adopt cloud-first strategies, this integration provides a scalable, reliable, and cost-effective solution for cutting-edge computing and storage needs.