Active IQ Unified Manager Discussions


Create an NFS volume with an export rule by entering multiple IP addresses


Hi all,

I'm new to WFA and have started building my first workflow, which creates an NFS volume from a name, a size, and multiple IP addresses.

The workflow works when I enter a single IP address, but not when I specify several.

I changed the workflow by setting "TABLE" in the "value" field of the ipadress user input, but it still doesn't work: the second and third IP addresses are not set in the workflow.

My second question: how can I call this workflow through the API?

The attached files describe my workflow.

Thanks a lot for your help.

Solved. Accepted solution:


The problems with your workflow:

1. Each export rule accepts only a single client-match value. A comma-separated list doesn't work. So if you want multiple client-match IPs, you need to create one rule for each of them. Do this using the WFA row-looping feature.

2. Values entered in Table inputs do not remain strings. WFA planning can't detect this and lets it pass, but workflow execution throws an error. PowerShell raises the error "Cannot convert 'System.Object[]' to the type 'System.String' required by parameter 'ClientMatch'." So you need to use the WFA function getValueAt2D to obtain the value entered.

The corrected workflow has been sent to your mail ID.

sinhaa
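To make the two points above concrete, here is a minimal Python sketch. It is not WFA code: it only assumes that a table input arrives as rows of cells rather than as one string. The helpers `get_value_at_2d` and `plan_export_rules` are hypothetical, and the 1-based indexing is an assumption made to loosely mirror getValueAt2D.

```python
def get_value_at_2d(table, row, col):
    """Return the cell at 1-based (row, col) from a list-of-rows table.

    Hypothetical stand-in for WFA's getValueAt2D; the index base is assumed.
    """
    return table[row - 1][col - 1]


def plan_export_rules(table):
    """Build one rule per table row, each with exactly one client-match value."""
    return [
        {"rule_index": row, "client_match": get_value_at_2d(table, row, 1)}
        for row in range(1, len(table) + 1)
    ]


# Three rows entered in the table input, one IP address per row.
ip_table = [["10.0.0.1"], ["10.0.0.2"], ["10.0.0.3"]]
rules = plan_export_rules(ip_table)

for rule in rules:
    print(rule["rule_index"], rule["client_match"])
```

Passing `ip_table` itself as the client match would be the Python equivalent of the 'System.Object[]' error: the command expects a single string, so each row has to be unpacked first.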


Which user input are you trying to set to type Table? In your setup.png I don't see any user input declared with type Table. Is it possible to send your workflow?

@ And my second question: how can I call this workflow through the API?

===

You can use the WFA REST and SOAP APIs to execute the workflow. See the WFA Developers Guide for detailed help on this topic.
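As a rough illustration of what a REST call could look like, the Python sketch below only builds the request URL and XML body; it does not contact a server. The endpoint path (`/rest/workflows/<uuid>/jobs`), the XML element names, the host, and the user input names are all assumptions that must be checked against the WFA Developers Guide for your version.

```python
import xml.etree.ElementTree as ET


def build_job_request(base_url, workflow_uuid, user_inputs):
    """Return (url, xml_body) for a hypothetical 'execute workflow' REST call."""
    # Assumed endpoint layout; confirm against the WFA Developers Guide.
    url = f"{base_url.rstrip('/')}/rest/workflows/{workflow_uuid}/jobs"
    # Assumed request body: one entry per user input, as key/value attributes.
    root = ET.Element("workflowInput")
    values = ET.SubElement(root, "userInputValues")
    for key, value in user_inputs.items():
        ET.SubElement(values, "userInputEntry", key=key, value=str(value))
    return url, ET.tostring(root, encoding="unicode")


url, body = build_job_request(
    "https://wfa.example.com",              # placeholder host
    "WORKFLOW-UUID-PLACEHOLDER",            # placeholder; look up the real UUID first
    {"VolumeName": "12345", "SizeGB": 10},  # hypothetical user input names
)
print(url)
print(body)
```

The resulting URL and body would then be sent as an authenticated POST with any HTTP client. How a Table input has to be encoded in the request is not covered here.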


Hi Sinha,

Thanks for your help.

Please find my workflow in the attached file.


I haven't looked at it in depth, but I can say that this error is not due to the user input of type Table. I haven't been able to reproduce the error from the information you have provided. Kindly export the workflow (see the toolbar icon for exporting a workflow) and send the .dar file to my mail ID: sinhaa at netapp dot com

I'll send you the cause of the failure and also the corrected workflow.


Hi Sinha,

The workflow works very well with multiple IP addresses. Thanks a lot for your help.

I only have two things left to do:

1- Include the date in the volume name: for example, entering '12345' as the name should create vol_12345_09072014 (today's date).

I can't find how to retrieve the date (maybe through the API?).

2- Call the workflow through the API: I found the document "WFA Web services primer - REST - rev 0.3" and will read it to find out how it works.


@ 1- Display the date for the volume name > for example by entering '12345' for the name and create vol_12345_09072014 (today's date)

-----

There are multiple ways to do it, depending on the date format you need. The simplest is to append the current time as milliseconds since the Unix epoch (UTC). In the Create Volume command, enter this in the name field: 'vol_' + $VolumeName + System.currentTimeMillis()

If you want a more human-readable format, you may need to clone the Create Volume PowerShell command and modify the clone by adding the following line below the parameter definitions:

$VolumeName = $VolumeName + "_" + [string](get-date -Format ddMMyyyy)

Then use this new command in your workflow.

warm regards,

sinhaa
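For comparison, the two naming approaches can be sketched in Python. This is an illustration only, not WFA or PowerShell code, and the helper names are hypothetical. The first helper appends epoch milliseconds, as System.currentTimeMillis() does; the second appends a ddMMyyyy date like the get-date line.

```python
from datetime import date, datetime, timezone


def name_with_epoch_millis(volume_name, now):
    """Mirror 'vol_' + $VolumeName + System.currentTimeMillis()."""
    millis = int(now.timestamp() * 1000)
    return f"vol_{volume_name}{millis}"


def name_with_date(volume_name, today):
    """Append the date as ddMMyyyy, separated by an underscore."""
    return f"vol_{volume_name}_{today.strftime('%d%m%Y')}"


print(name_with_date("12345", date(2014, 7, 9)))  # -> vol_12345_09072014
print(name_with_epoch_millis("12345", datetime.now(timezone.utc)))
```

Note that the millisecond variant has no separator between the name and the timestamp, so the result is unique but hard to read, which is why the date variant matches the requested vol_12345_09072014 format more closely.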


I used this expression: 'vol_' + $VolumeName + System.currentTimeMillis() but it doesn’t work.

I’ll try cloning the Create Volume PowerShell command and modifying it.

Please see the output:


@

I used this command : : 'vol_' + $VolumeName + System.currentTimeMillis() but it doesn’t work

====

Strange. I tried similar thing on another simpler workflow but that perfectly worked.

Do the following, this will have to work

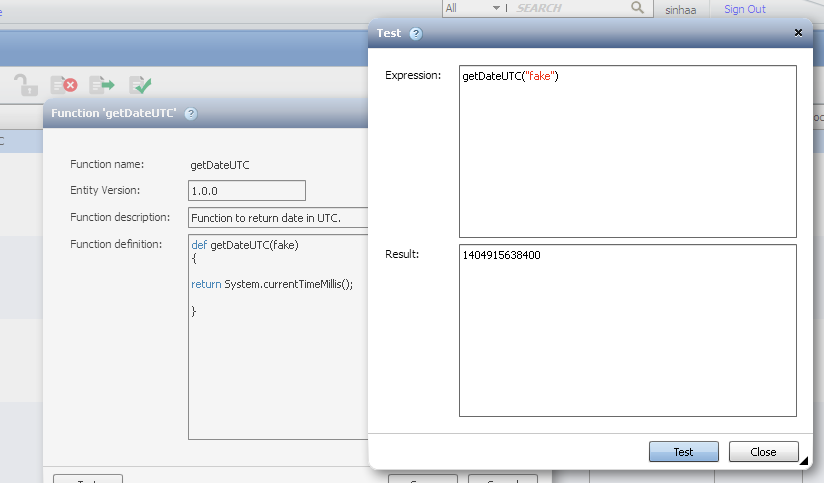

Create a new function with below function definition:

def getDateUTC(fake)

{

return System.currentTimeMillis();

}

Now the function name is getDateUTC.

Now in your workflow, for name parameter use this: 'vol_' + $VolumeName + getDateUTC("")


I tried to create the function as you advised, but I cannot run it.


To test a function, you need to call it like this:

getDateUTC("fake")

fake is the parameter name. When you call the function, the value you pass needs to be in quotes.

See image:

In the volume name, I call the function and pass an empty string.


OK, that works.

But why is the export rule function sometimes disabled for no apparent reason, even though I changed nothing?


@ But why is the export rule function sometimes disabled for no apparent reason, even though I changed nothing?

===

That's how your workflow is designed. You are searching for an existing export rule using the filter, and only if it's not found are you creating one from the attributes given. If it is found, this execution instance is disabled. Also see the "Advanced" tab of the command definition.
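The search-then-create behaviour described above can be sketched in Python as follows. This is only an analogy for the planning logic, not how WFA implements it; `plan_export_rule` and the dictionary layout are hypothetical.

```python
def plan_export_rule(existing_rules, client_match):
    """Decide whether the create step runs for one client-match value.

    Returns ("disabled", rule) when a matching rule already exists,
    otherwise ("create", new_rule) built from the given attributes.
    """
    for rule in existing_rules:
        if rule["client_match"] == client_match:
            return "disabled", rule
    return "create", {"client_match": client_match}


existing = [{"client_match": "10.0.0.1"}]

print(plan_export_rule(existing, "10.0.0.1")[0])  # -> disabled
print(plan_export_rule(existing, "10.0.0.2")[0])  # -> create
```

Running the workflow a second time with the same IP address therefore shows the step as disabled, because the rule created by the first run is now found by the search.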


Yes, you’re right, I forgot this parameter.

Thanks for everything.

I’ll get back to you about the API.
