Active IQ Unified Manager Discussions

Re: Help: Grafana/Harvest with OCUM and OPM

Hello,

I am currently trying to set up Grafana and Harvest with OCUM and OPM. Data is now being piped all the way to Grafana, but the Graphite structure and the Grafana template variables do not match up. I have attached screenshots and my configuration. I had to obfuscate the real hostnames for privacy reasons.

(NetAppHarvest template)

This is my configuration at the moment.

##

## Configuration file for NetApp Harvest

##

## This file is organized into multiple sections, each with a [] header

##

## There are two reserved section names:

## [global] - Global key/value pairs for installation

## [default] - Any key/value pairs specified here will be the default

## value for a poller should it not be listed in a poller section.

##

## Any other section names are for your own pollers:

## [cluster-name] - cDOT cluster (match name from cluster CLI prompt)

## [7-mode-node-name] - 7-mode node name (match name from 7-mode CLI prompt)

## [OCUM-hostname] - OCUM server hostname (match hostname set to system)

## Quick Start Instructions:

## 1. Edit the [global] and [default] sections and replace values in all

## capital letters to match your installation details

## 2. For each system to monitor add a section header and populate with

## key/value parameters for it.

## 3. Start all pollers that are not running: /opt/netapp-harvest/netapp-manager start

##

## Note: Full instructions and list of all available key/value pairs is found in the

## NetApp Harvest Administration Guide

##

#### Global section for installation wide settings

##

[global]

grafana_api_key = MASKEDKEY

grafana_url = https://myhostname

##

#### Default section to set defaults for any user created poller section

##

[default]

graphite_enabled = 1

graphite_server = 127.0.0.1

graphite_port = 2003

## polled hosts defaults

username = MASKEDDUSER

password = MASKEDPASSWORD

## If using ssl_cert (and not password auth)

## uncomment and populate next three lines

# auth_type = ssl_cert

# ssl_cert = netapp-harves.pem

# ssl_key = netapp-harvest.key

##

#### Poller sections; Add one section for each cDOT cluster, 7-mode node, or OCUM server

#### If any keys are different from those in default duplicate them in the poller section to override.

##

[netappcluster01]

hostname = 192.168.0.1

group = netapp_group

# username = MYUSERNAME

# password = MYPASSWORD

# host_enabled = 0

[netappcluster02]

hostname = 192.168.0.2

group = netapp_group

# username = MYUSERNAME

# password = MYPASSWORD

# host_enabled = 0

[netappcluster03]

hostname = 10.10.0.1

group = netapp_group

# username = MYUSERNAME

# password = MYPASSWORD

# host_enabled = 0

# ocum (default for um)

[cd1001-o86]

hostname = ocum-hostname

group = netapp_group

host_type = OCUM

data_update_freq = 900

normalized_xfer = gb_per_sec

template = ocum-opm-hierarchy.conf

graphite_root = netapp-capacity.Clusters.{display_name}

graphite_meta_metrics_root = netapp-capacity-poller.{group}

I have also tried without the following lines, which gave me the same result. These are the settings the manual suggests for OCUM with OPM, though.

template = ocum-opm-hierarchy.conf

graphite_root = netapp-capacity.Clusters.{display_name}

graphite_meta_metrics_root = netapp-capacity-poller.{group}

Hi @markandrewj

Harvest can collect perf info directly from the clusters, and capacity info from OCUM, and send this on to Graphite. It then provides Grafana dashboards to view this data.

OPM with the 'external data provider' feature can send data to Graphite. Capacity info is not sent, and there are no Grafana dashboards provided.

If I understand correctly, your goal is to use OPM for performance information and Harvest for capacity information. To achieve this you would:

1) Add a Graphite storage-schemas.conf entry to set the retention and frequency intervals for the netapp-perf and netapp-capacity metrics hierarchies

-- Did you do this?

2) Set the 'external data provider' feature of OPM to forward metrics.

-- I don't see any metrics in the Graphite view for netapp-perf.* as would be expected?

3) In netapp-harvest.conf add an entry for each ONTAP cluster and then set host_enabled = 0 so that no perf collection will occur

-- You can set all clusters back to host_enabled = 0, or leave as-is and you get perf metrics from Harvest too

4) In netapp-harvest.conf add an entry for the OCUM cluster with a modified graphite_root and graphite_meta_metrics_root

--Looks ok as-is

5) [re]start harvest using 'netapp-manager -restart'

6) Build Grafana dashboards for OPM perf and Harvest/OCUM capacity info

Some things to check if still having issues: (1) Check your disk is not full on your graphite server and (2) Check the /opt/netapp-harvest/logs directory for poller logs to see if there are any errors.
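For the disk-space check, here is a minimal Python sketch. It only wraps the standard library's `shutil.disk_usage`; the function names and the 10% threshold are my own illustrative choices, not anything Harvest or Graphite defines.

```python
import shutil


def free_fraction(path: str) -> float:
    """Return the free share (0.0 to 1.0) of the filesystem that holds `path`."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total


def is_nearly_full(path: str, min_free: float = 0.10) -> bool:
    """True when less than `min_free` of the filesystem is still free.

    The 10% default is an arbitrary illustrative threshold.
    """
    return free_fraction(path) < min_free
```

On a default Graphite install you would probably point this at the whisper storage directory, for example `is_nearly_full("/opt/graphite/storage")`, but adjust the path to wherever your whisper files actually live.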

Most users of Harvest don't configure the OPM integration and just use Harvest for perf and capacity metrics especially because of the out-of-the-box Grafana dashboards. So this could also be an option for you.

Cheers,

Chris Madden

Solution Architect - 3rd Platform - Systems Engineering NetApp EMEA (and author of Harvest)

Blog: It all begins with data

If this post resolved your issue, please help others by selecting ACCEPT AS SOLUTION or adding a KUDO or both!

Hello,

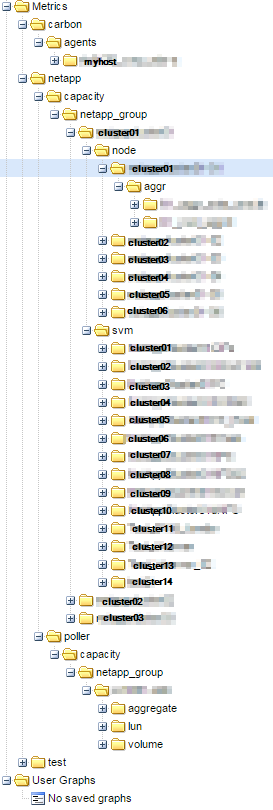

Thanks for the detailed reply. I now have more data in Graphite, but I am still having trouble getting it to display in Grafana. I am working with another team, which typically handles storage. I got that team to forward the metrics as requested (we had created the user, but had not turned on forwarding for external data providers). It is possible something still needs to be set on that end. Below is what is currently being created in Graphite, along with the logs. The logs contained 'without quota metric [files-limit]; skipping further metrics for this instance', and I am not sure what causes it.

Graphite

/opt/graphite/conf/storage-schemas.conf

[carbon]

pattern = ^carbon\.

retentions = 60:90d

[default_1min_for_1day]

pattern = .*

retentions = 60s:1d

[netapp_perf]

pattern = ^netapp(\.poller)?\.perf7?\.

retentions = 1m:35d,5m:100d,15m:395d,1h:5y

[netapp_capacity]

pattern = ^netapp(\.poller)?\.capacity\.

retentions = 15m:100d,1d:5y
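One thing worth checking with a file like this: carbon applies the first section whose pattern matches, in file order. The rough Python sketch below mimics that lookup with the four patterns above so you can see which section a given metric name would land in. The metric names in the usage note are made-up examples, and `first_matching_schema` is a hypothetical helper, not part of carbon.

```python
import re

# (section name, pattern) pairs in the same order as the storage-schemas.conf above.
SCHEMAS_AS_POSTED = [
    ("carbon", r"^carbon\."),
    ("default_1min_for_1day", r".*"),
    ("netapp_perf", r"^netapp(\.poller)?\.perf7?\."),
    ("netapp_capacity", r"^netapp(\.poller)?\.capacity\."),
]

# Same sections with the catch-all moved to the end.
SCHEMAS_DEFAULT_LAST = [
    SCHEMAS_AS_POSTED[0],
    SCHEMAS_AS_POSTED[2],
    SCHEMAS_AS_POSTED[3],
    SCHEMAS_AS_POSTED[1],
]


def first_matching_schema(metric, schemas):
    """Return the name of the first section whose pattern matches `metric`."""
    for name, pattern in schemas:
        if re.search(pattern, metric):
            return name
    return None
```

With the posted order, a hypothetical metric such as `netapp.perf.netapp_group.netappcluster01.cpu_busy` resolves to `default_1min_for_1day`, because the `.*` catch-all sits above the NetApp sections. With the catch-all last it resolves to `netapp_perf`. A name under the custom `netapp-capacity.` root falls through to the catch-all in both orders, since the hyphenated root does not match `^netapp(\.poller)?\.capacity\.`.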

/opt/graphite/conf/carbon.conf

[cache]

# Configure carbon directories.

#

# OS environment variables can be used to tell carbon where graphite is

# installed, where to read configuration from and where to write data.

#

# GRAPHITE_ROOT - Root directory of the graphite installation.

# Defaults to ../

# GRAPHITE_CONF_DIR - Configuration directory (where this file lives).

# Defaults to $GRAPHITE_ROOT/conf/

# GRAPHITE_STORAGE_DIR - Storage directory for whipser/rrd/log/pid files.

# Defaults to $GRAPHITE_ROOT/storage/

#

# To change other directory paths, add settings to this file. The following

# configuration variables are available with these default values:

#

# STORAGE_DIR = $GRAPHITE_STORAGE_DIR

# LOCAL_DATA_DIR = STORAGE_DIR/whisper/

# WHITELISTS_DIR = STORAGE_DIR/lists/

# CONF_DIR = STORAGE_DIR/conf/

# LOG_DIR = STORAGE_DIR/log/

# PID_DIR = STORAGE_DIR/

#

# For FHS style directory structures, use:

#

# STORAGE_DIR = /var/lib/carbon/

# CONF_DIR = /etc/carbon/

# LOG_DIR = /var/log/carbon/

# PID_DIR = /var/run/

#

#LOCAL_DATA_DIR = /opt/graphite/storage/whisper/

# Enable daily log rotation. If disabled, carbon will automatically re-open

# the file if it's rotated out of place (e.g. by logrotate daemon)

ENABLE_LOGROTATION = True

# Specify the user to drop privileges to

# If this is blank carbon runs as the user that invokes it

# This user must have write access to the local data directory

USER =

#

# NOTE: The above settings must be set under [relay] and [aggregator]

# to take effect for those daemons as well

# Limit the size of the cache to avoid swapping or becoming CPU bound.

# Sorts and serving cache queries gets more expensive as the cache grows.

# Use the value "inf" (infinity) for an unlimited cache size.

MAX_CACHE_SIZE = inf

# Limits the number of whisper update_many() calls per second, which effectively

# means the number of write requests sent to the disk. This is intended to

# prevent over-utilizing the disk and thus starving the rest of the system.

# When the rate of required updates exceeds this, then carbon's caching will

# take effect and increase the overall throughput accordingly.

MAX_UPDATES_PER_SECOND = 500

# If defined, this changes the MAX_UPDATES_PER_SECOND in Carbon when a

# stop/shutdown is initiated. This helps when MAX_UPDATES_PER_SECOND is

# relatively low and carbon has cached a lot of updates; it enables the carbon

# daemon to shutdown more quickly.

# MAX_UPDATES_PER_SECOND_ON_SHUTDOWN = 1000

# Softly limits the number of whisper files that get created each minute.

# Setting this value low (like at 50) is a good way to ensure your graphite

# system will not be adversely impacted when a bunch of new metrics are

# sent to it. The trade off is that it will take much longer for those metrics'

# database files to all get created and thus longer until the data becomes usable.

# Setting this value high (like "inf" for infinity) will cause graphite to create

# the files quickly but at the risk of slowing I/O down considerably for a while.

MAX_CREATES_PER_MINUTE = 600

LINE_RECEIVER_INTERFACE = 0.0.0.0

LINE_RECEIVER_PORT = 2003

# Set this to True to enable the UDP listener. By default this is off

# because it is very common to run multiple carbon daemons and managing

# another (rarely used) port for every carbon instance is not fun.

ENABLE_UDP_LISTENER = False

UDP_RECEIVER_INTERFACE = 0.0.0.0

UDP_RECEIVER_PORT = 2003

PICKLE_RECEIVER_INTERFACE = 0.0.0.0

PICKLE_RECEIVER_PORT = 2004

# Set to false to disable logging of successful connections

LOG_LISTENER_CONNECTIONS = True

# Per security concerns outlined in Bug #817247 the pickle receiver

# will use a more secure and slightly less efficient unpickler.

# Set this to True to revert to the old-fashioned insecure unpickler.

USE_INSECURE_UNPICKLER = False

CACHE_QUERY_INTERFACE = 0.0.0.0

CACHE_QUERY_PORT = 7002

# Set this to False to drop datapoints received after the cache

# reaches MAX_CACHE_SIZE. If this is True (the default) then sockets

# over which metrics are received will temporarily stop accepting

# data until the cache size falls below 95% MAX_CACHE_SIZE.

USE_FLOW_CONTROL = True

# By default, carbon-cache will log every whisper update and cache hit. This can be excessive and

# degrade performance if logging on the same volume as the whisper data is stored.

LOG_UPDATES = False

LOG_CACHE_HITS = False

LOG_CACHE_QUEUE_SORTS = True

# The thread that writes metrics to disk can use on of the following strategies

# determining the order in which metrics are removed from cache and flushed to

# disk. The default option preserves the same behavior as has been historically

# available in version 0.9.10.

#

# sorted - All metrics in the cache will be counted and an ordered list of

# them will be sorted according to the number of datapoints in the cache at the

# moment of the list's creation. Metrics will then be flushed from the cache to

# disk in that order.

#

# max - The writer thread will always pop and flush the metric from cache

# that has the most datapoints. This will give a strong flush preference to

# frequently updated metrics and will also reduce random file-io. Infrequently

# updated metrics may only ever be persisted to disk at daemon shutdown if

# there are a large number of metrics which receive very frequent updates OR if

# disk i/o is very slow.

#

# naive - Metrics will be flushed from the cache to disk in an unordered

# fashion. This strategy may be desirable in situations where the storage for

# whisper files is solid state, CPU resources are very limited or deference to

# the OS's i/o scheduler is expected to compensate for the random write

# pattern.

#

CACHE_WRITE_STRATEGY = sorted

# On some systems it is desirable for whisper to write synchronously.

# Set this option to True if you'd like to try this. Basically it will

# shift the onus of buffering writes from the kernel into carbon's cache.

WHISPER_AUTOFLUSH = False

# By default new Whisper files are created pre-allocated with the data region

# filled with zeros to prevent fragmentation and speed up contiguous reads and

# writes (which are common). Enabling this option will cause Whisper to create

# the file sparsely instead. Enabling this option may allow a large increase of

# MAX_CREATES_PER_MINUTE but may have longer term performance implications

# depending on the underlying storage configuration.

# WHISPER_SPARSE_CREATE = False

# Only beneficial on linux filesystems that support the fallocate system call.

# It maintains the benefits of contiguous reads/writes, but with a potentially

# much faster creation speed, by allowing the kernel to handle the block

# allocation and zero-ing. Enabling this option may allow a large increase of

# MAX_CREATES_PER_MINUTE. If enabled on an OS or filesystem that is unsupported

# this option will gracefully fallback to standard POSIX file access methods.

WHISPER_FALLOCATE_CREATE = True

# Enabling this option will cause Whisper to lock each Whisper file it writes

# to with an exclusive lock (LOCK_EX, see: man 2 flock). This is useful when

# multiple carbon-cache daemons are writing to the same files

# WHISPER_LOCK_WRITES = False

# Set this to True to enable whitelisting and blacklisting of metrics in

# CONF_DIR/whitelist and CONF_DIR/blacklist. If the whitelist is missing or

# empty, all metrics will pass through

# USE_WHITELIST = False

# By default, carbon itself will log statistics (such as a count,

# metricsReceived) with the top level prefix of 'carbon' at an interval of 60

# seconds. Set CARBON_METRIC_INTERVAL to 0 to disable instrumentation

# CARBON_METRIC_PREFIX = carbon

# CARBON_METRIC_INTERVAL = 60

# Enable AMQP if you want to receve metrics using an amqp broker

# ENABLE_AMQP = False

# Verbose means a line will be logged for every metric received

# useful for testing

# AMQP_VERBOSE = False

# AMQP_HOST = localhost

# AMQP_PORT = 5672

# AMQP_VHOST = /

# AMQP_USER = guest

# AMQP_PASSWORD = guest

# AMQP_EXCHANGE = graphite

# AMQP_METRIC_NAME_IN_BODY = False

# The manhole interface allows you to SSH into the carbon daemon

# and get a python interpreter. BE CAREFUL WITH THIS! If you do

# something like time.sleep() in the interpreter, the whole process

# will sleep! This is *extremely* helpful in debugging, assuming

# you are familiar with the code. If you are not, please don't

# mess with this, you are asking for trouble :)

#

# ENABLE_MANHOLE = False

# MANHOLE_INTERFACE = 127.0.0.1

# MANHOLE_PORT = 7222

# MANHOLE_USER = admin

# MANHOLE_PUBLIC_KEY = MASKEDKEY

# Patterns for all of the metrics this machine will store. Read more at

# http://en.wikipedia.org/wiki/Advanced_Message_Queuing_Protocol#Bindings

#

# Example: store all sales, linux servers, and utilization metrics

# BIND_PATTERNS = sales.#, servers.linux.#, #.utilization

#

# Example: store everything

# BIND_PATTERNS = #

# To configure special settings for the carbon-cache instance 'b', uncomment this:

#[cache:b]

#LINE_RECEIVER_PORT = 2103

#PICKLE_RECEIVER_PORT = 2104

#CACHE_QUERY_PORT = 7102

# and any other settings you want to customize, defaults are inherited

# from [carbon] section.

# You can then specify the --instance=b option to manage this instance

[relay]

LINE_RECEIVER_INTERFACE = 0.0.0.0

LINE_RECEIVER_PORT = 2013

PICKLE_RECEIVER_INTERFACE = 0.0.0.0

PICKLE_RECEIVER_PORT = 2014

# Set to false to disable logging of successful connections

LOG_LISTENER_CONNECTIONS = True

# Carbon-relay has several options for metric routing controlled by RELAY_METHOD

#

# Use relay-rules.conf to route metrics to destinations based on pattern rules

#RELAY_METHOD = rules

#

# Use consistent-hashing for even distribution of metrics between destinations

#RELAY_METHOD = consistent-hashing

#

# Use consistent-hashing but take into account an aggregation-rules.conf shared

# by downstream carbon-aggregator daemons. This will ensure that all metrics

# that map to a given aggregation rule are sent to the same carbon-aggregator

# instance.

# Enable this for carbon-relays that send to a group of carbon-aggregators

#RELAY_METHOD = aggregated-consistent-hashing

RELAY_METHOD = rules

# If you use consistent-hashing you can add redundancy by replicating every

# datapoint to more than one machine.

REPLICATION_FACTOR = 1

# This is a list of carbon daemons we will send any relayed or

# generated metrics to. The default provided would send to a single

# carbon-cache instance on the default port. However if you

# use multiple carbon-cache instances then it would look like this:

#

# DESTINATIONS = 127.0.0.1:2004:a, 127.0.0.1:2104:b

#

# The general form is IP:PORT:INSTANCE where the :INSTANCE part is

# optional and refers to the "None" instance if omitted.

#

# Note that if the destinations are all carbon-caches then this should

# exactly match the webapp's CARBONLINK_HOSTS setting in terms of

# instances listed (order matters!).

#

# If using RELAY_METHOD = rules, all destinations used in relay-rules.conf

# must be defined in this list

DESTINATIONS = 127.0.0.1:2004

# This defines the maximum "message size" between carbon daemons.

# You shouldn't need to tune this unless you really know what you're doing.

MAX_DATAPOINTS_PER_MESSAGE = 500

MAX_QUEUE_SIZE = 10000

# This is the percentage that the queue must be empty before it will accept

# more messages. For a larger site, if the queue is very large it makes sense

# to tune this to allow for incoming stats. So if you have an average

# flow of 100k stats/minute, and a MAX_QUEUE_SIZE of 3,000,000, it makes sense

# to allow stats to start flowing when you've cleared the queue to 95% since

# you should have space to accommodate the next minute's worth of stats

# even before the relay incrementally clears more of the queue

QUEUE_LOW_WATERMARK_PCT = 0.8

# Set this to False to drop datapoints when any send queue (sending datapoints

# to a downstream carbon daemon) hits MAX_QUEUE_SIZE. If this is True (the

# default) then sockets over which metrics are received will temporarily stop accepting

# data until the send queues fall below QUEUE_LOW_WATERMARK_PCT * MAX_QUEUE_SIZE.

USE_FLOW_CONTROL = True

# Set this to True to enable whitelisting and blacklisting of metrics in

# CONF_DIR/whitelist and CONF_DIR/blacklist. If the whitelist is missing or

# empty, all metrics will pass through

# USE_WHITELIST = False

# By default, carbon itself will log statistics (such as a count,

# metricsReceived) with the top level prefix of 'carbon' at an interval of 60

# seconds. Set CARBON_METRIC_INTERVAL to 0 to disable instrumentation

# CARBON_METRIC_PREFIX = carbon

# CARBON_METRIC_INTERVAL = 60

[aggregator]

LINE_RECEIVER_INTERFACE = 0.0.0.0

LINE_RECEIVER_PORT = 2023

PICKLE_RECEIVER_INTERFACE = 0.0.0.0

PICKLE_RECEIVER_PORT = 2024

# Set to false to disable logging of successful connections

LOG_LISTENER_CONNECTIONS = True

# If set true, metric received will be forwarded to DESTINATIONS in addition to

# the output of the aggregation rules. If set false the carbon-aggregator will

# only ever send the output of aggregation. Default value is set to false and will not forward

FORWARD_ALL = False

# Filenames of the configuration files to use for this instance of aggregator.

# Filenames are relative to CONF_DIR.

#

# AGGREGATION_RULES = aggregation-rules.conf

# REWRITE_RULES = rewrite-rules.conf

# This is a list of carbon daemons we will send any relayed or

# generated metrics to. The default provided would send to a single

# carbon-cache instance on the default port. However if you

# use multiple carbon-cache instances then it would look like this:

#

# DESTINATIONS = 127.0.0.1:2004:a, 127.0.0.1:2104:b

#

# The format is comma-delimited IP:PORT:INSTANCE where the :INSTANCE part is

# optional and refers to the "None" instance if omitted.

#

# Note that if the destinations are all carbon-caches then this should

# exactly match the webapp's CARBONLINK_HOSTS setting in terms of

# instances listed (order matters!).

DESTINATIONS = 127.0.0.1:2004

# If you want to add redundancy to your data by replicating every

# datapoint to more than one machine, increase this.

REPLICATION_FACTOR = 1

# This is the maximum number of datapoints that can be queued up

# for a single destination. Once this limit is hit, we will

# stop accepting new data if USE_FLOW_CONTROL is True, otherwise

# we will drop any subsequently received datapoints.

MAX_QUEUE_SIZE = 10000

# Set this to False to drop datapoints when any send queue (sending datapoints

# to a downstream carbon daemon) hits MAX_QUEUE_SIZE. If this is True (the

# default) then sockets over which metrics are received will temporarily stop accepting

# data until the send queues fall below 80% MAX_QUEUE_SIZE.

USE_FLOW_CONTROL = True

# This defines the maximum "message size" between carbon daemons.

# You shouldn't need to tune this unless you really know what you're doing.

MAX_DATAPOINTS_PER_MESSAGE = 500

# This defines how many datapoints the aggregator remembers for

# each metric. Aggregation only happens for datapoints that fall in

# the past MAX_AGGREGATION_INTERVALS * intervalSize seconds.

MAX_AGGREGATION_INTERVALS = 5

# By default (WRITE_BACK_FREQUENCY = 0), carbon-aggregator will write back

# aggregated data points once every rule.frequency seconds, on a per-rule basis.

# Set this (WRITE_BACK_FREQUENCY = N) to write back all aggregated data points

# every N seconds, independent of rule frequency. This is useful, for example,

# to be able to query partially aggregated metrics from carbon-cache without

# having to first wait rule.frequency seconds.

# WRITE_BACK_FREQUENCY = 0

# Set this to True to enable whitelisting and blacklisting of metrics in

# CONF_DIR/whitelist and CONF_DIR/blacklist. If the whitelist is missing or

# empty, all metrics will pass through

# USE_WHITELIST = False

# By default, carbon itself will log statistics (such as a count,

# metricsReceived) with the top level prefix of 'carbon' at an interval of 60

# seconds. Set CARBON_METRIC_INTERVAL to 0 to disable instrumentation

# CARBON_METRIC_PREFIX = carbon

# CARBON_METRIC_INTERVAL = 60

/opt/netapp-harvest/netapp-manager -status -v

[root@MASKEDHOST log]# /opt/netapp-harvest/netapp-manager -status -v

[OK ] Line [29] is Section [global]

[OK ] Line [30] in Section [global] has Key/Value pair [grafana_api_key]=[**********]

[OK ] Line [31] in Section [global] has Key/Value pair [grafana_url]=[https://MASKEDHOST]

[OK ] Line [36] is Section [default]

[OK ] Line [37] in Section [default] has Key/Value pair [graphite_enabled]=[1]

[OK ] Line [38] in Section [default] has Key/Value pair [graphite_server]=[127.0.0.1]

[OK ] Line [39] in Section [default] has Key/Value pair [graphite_port]=[2003]

[OK ] Line [42] in Section [default] has Key/Value pair [username]=[harvest]

[OK ] Line [43] in Section [default] has Key/Value pair [password]=[**********]

[OK ] Line [55] is Section [netappcluster01]

[OK ] Line [56] in Section [netappcluster01] has Key/Value pair [hostname]=[MASKEDIP]

[OK ] Line [57] in Section [netappcluster01] has Key/Value pair [group]=[netapp_group]

[OK ] Line [62] is Section [netappcluster02]

[OK ] Line [63] in Section [netappcluster02] has Key/Value pair [hostname]=[MASKEDIP]

[OK ] Line [64] in Section [netappcluster02] has Key/Value pair [group]=[netapp_group]

[OK ] Line [69] is Section [netappcluster03]

[OK ] Line [70] in Section [netappcluster03] has Key/Value pair [hostname]=[MAKSEDHOST]

[OK ] Line [71] in Section [netappcluster03] has Key/Value pair [group]=[netapp_group]

[OK ] Line [77] is Section [MAKSEDHOST]

[OK ] Line [78] in Section [MAKSEDHOST] has Key/Value pair [hostname]=[cd1001-o86]

[OK ] Line [79] in Section [MAKSEDHOST] has Key/Value pair [group]=[netapp_group]

[OK ] Line [80] in Section [MAKSEDHOST] has Key/Value pair [host_type]=[OCUM]

[OK ] Line [81] in Section [MAKSEDHOST] has Key/Value pair [data_update_freq]=[900]

[OK ] Line [82] in Section [MAKSEDHOST] has Key/Value pair [normalized_xfer]=[gb_per_sec]

[OK ] Line [83] in Section [MAKSEDHOST] has Key/Value pair [template]=[ocum-opm-hierarchy.conf]

[OK ] Line [84] in Section [MAKSEDHOST] has Key/Value pair [graphite_root]=[netapp-capacity.Clusters.{display_name}]

[OK ] Line [85] in Section [MAKSEDHOST] has Key/Value pair [graphite_meta_metrics_root]=[netapp-capacity-poller.{group}]

/opt/netapp-harvest/log

ocum/opm host (was coming back from the clusters):

without quota metric [files-limit]; skipping further metrics for this instance

the clusters were giving this as expected:

[2017-02-28 09:46:49] [WARNING] [sysinfo] Update of system-info cache DOT Version failed with reason: Authorization failed

[2017-02-28 09:46:49] [WARNING] [main] system-info update failed; will try again in 10 seconds.
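In case it helps anyone sift through larger poller logs, here is a small Python sketch that splits lines of the shape shown above into timestamp, level, module, and message, then counts entries per level. The regular expression is inferred only from these two sample lines, so treat the format as an assumption. `parse_line` and `count_by_level` are illustrative names.

```python
import re
from collections import Counter

# Assumed line shape, inferred from the samples: [timestamp] [LEVEL] [module] message
LOG_LINE = re.compile(
    r"^\[(?P<timestamp>[^\]]+)\]\s+"
    r"\[(?P<level>[A-Z]+)\s*\]\s+"
    r"\[(?P<module>[^\]]+)\]\s+"
    r"(?P<message>.*)$"
)


def parse_line(line):
    """Return a dict of the four fields, or None if the line has another shape."""
    match = LOG_LINE.match(line.strip())
    return match.groupdict() if match else None


def count_by_level(lines):
    """Count parsed log entries per level, ignoring lines that do not parse."""
    parsed = (parse_line(line) for line in lines)
    return Counter(entry["level"] for entry in parsed if entry)
```

Feeding it the lines of a poller log file (for example via `open(path)`) gives a quick view of how many warnings each poller is producing. Lines without the bracketed prefix, such as the quota message quoted earlier, are simply skipped.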

Looking at the graphite screen grab it shows netapp.capacity but no netapp.perf. That shows that Graphite is not getting perf data, but only capacity data. The Grafana screen grab shows that the templates are looking for netapp.perf.xxx.

To use the included dashboards, you need to configure Harvest to pull data from each cluster. It won't get it from OCUM/OPM. Make sure that you use the same name in Harvest for perf gathering from each cluster that OCUM/OPM send to Graphite for netapp.capacity.
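To check that the names line up, something like the following Python sketch could compare the cluster component under the two hierarchies. It assumes the metric paths are laid out as `<root>.<group>.<cluster>...` (for example `netapp.perf.<group>.<cluster>`), which you should confirm against your own Graphite tree. The function name and the sample paths are hypothetical.

```python
def cluster_names(paths, root):
    """Collect the cluster component of paths shaped like <root>.<group>.<cluster>...

    The <root>.<group>.<cluster> layout is an assumption; verify it in your tree.
    """
    prefix = root.split(".")
    names = set()
    for path in paths:
        parts = path.split(".")
        # Need the root, a group, and a cluster component at minimum.
        if parts[: len(prefix)] == prefix and len(parts) >= len(prefix) + 2:
            names.add(parts[len(prefix) + 1])
    return names


def mismatched_names(paths, perf_root="netapp.perf", capacity_root="netapp.capacity"):
    """Return (only_in_perf, only_in_capacity) as two sets of cluster names."""
    perf = cluster_names(paths, perf_root)
    capacity = cluster_names(paths, capacity_root)
    return perf - capacity, capacity - perf
```

If both returned sets are empty, every cluster appears under the same name in both hierarchies. Any name that shows up in only one set is a candidate for renaming the corresponding poller section.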

Hi @markandrewj

I suggest you configure using the default config whereby Harvest collects perf data from the cluster, and capacity data from OCUM. This gives you default dashboards and you then have no need for custom templates, or new storage-schemas.conf entries other than the ones you already have.

I'd implement chapters 4, 5, and 7 from the Harvest admin guide; 6 is already done from the info you provided. This is a more straightforward task and will get you working faster. You could also go for the NAbox, a packaged OVA which gets you up and running fast and offers a GUI to configure Harvest.

Cheers,

Chris Madden

Solution Architect - 3rd Platform - Systems Engineering NetApp EMEA (and author of Harvest)

Blog: It all begins with data

Hey,

I was able to get everything working. I still have the custom template, but I possibly don't need it anymore. I just wanted to let it run a bit before removing the custom template. Sorry for the delay in response. I had an issue where the disk filled, and left a pid file after I attached some extra disk, which caused carbon-cache not to start.
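For anyone who hits the same leftover pid file problem, this is roughly how one could test whether a pid file is stale before removing it. It is a generic POSIX sketch in Python, not something carbon ships: sending signal 0 with `os.kill` only checks whether the process exists. The helper names are my own.

```python
import os


def read_pid(pid_file):
    """Read the integer process id stored in `pid_file`."""
    with open(pid_file) as handle:
        return int(handle.read().strip())


def pid_is_running(pid):
    """Return True if a process with this pid currently exists (POSIX only)."""
    try:
        os.kill(pid, 0)  # signal 0 performs the existence check without signalling
    except ProcessLookupError:
        return False  # no such process
    except PermissionError:
        return True  # process exists but belongs to another user
    return True


def is_stale(pid_file):
    """True when the pid file points at a process that is no longer running."""
    return not pid_is_running(read_pid(pid_file))
```

On a default install the file to test is probably something like `carbon-cache-a.pid` under the Graphite storage directory, but that path is an assumption; check the PID_DIR setting in your carbon.conf. If `is_stale` returns True, the file can be removed before starting carbon-cache again.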

Thanks for the response. I tried the NA Box OVA, but I was having issues getting it to work properly with RHEV. I may try again in the future.

I have a couple of questions.

The first is about Graphite: is there no way to make the web GUI require authentication to view? My solution has been to put it on the loopback device. From what I have read, it looks like this is an open ticket: https://github.com/graphite-project/graphite-web/issues/681.

My other question is about separating the files out from the OS. At the moment I have an lvol made from attached disk that I have moved the files from /opt into. I want to separate all the application and database files from the OS as much as possible. Is it true that all the files installed by default are in /opt and /etc/httpd, besides the Python modules? I am going to be writing an Ansible playbook for internal use, and I would like to separate everything out from the OS disk as much as possible so we can reattach the disks to new hosts if need be.

##

## Configuration file for NetApp Harvest

##

## This file is organized into multiple sections, each with a [] header

##

## There are two reserved section names:

## [global] - Global key/value pairs for installation

## [default] - Any key/value pairs specified here will be the default

## value for a poller should it not be listed in a poller section.

##

## Any other section names are for your own pollers:

## [cluster-name] - cDOT cluster (match name from cluster CLI prompt)

## [7-mode-node-name] - 7-mode node name (match name from 7-mode CLI prompt)

## [OCUM-hostname] - OCUM server hostname (match hostname set to system)

## Quick Start Instructions:

## 1. Edit the [global] and [default] sections and replace values in all

## capital letters to match your installation details

## 2. For each system to monitor add a section header and populate with

## key/value parameters for it.

## 3. Start all pollers that are not running: /opt/netapp-harvest/netapp-manager start

##

## Note: Full instructions and list of all available key/value pairs is found in the

## NetApp Harvest Administration Guide

#### Global section for installation wide settings

##

[global]

grafana_api_key = MASKEDKEY

grafana_url = MASKEDHOST

##

#### Default section to set defaults for any user created poller section

##

[default]

graphite_enabled = 1

graphite_server = 127.0.0.1

graphite_port = 2003

## polled hosts defaults

username = harvest

password = MASKEDPASSWORD

## If using ssl_cert (and not password auth)

## uncomment and populate next three lines

# auth_type = ssl_cert

# ssl_cert = netapp-harves.pem

# ssl_key = netapp-harvest.key

##

#### Poller sections; Add one section for each cDOT cluster, 7-mode node, or OCUM server

#### If any keys are different from those in default duplicate them in the poller section to override.

##

[MASKED]

hostname = MASKEDIP

group = netapp_group

username = MASKEDUSER

password = MASKEDPASSWORD

# host_enabled = 0

[MASKED]

hostname = MASKEDIP

group = netapp_group

username = MASKEDUSER

password = MASKEDPASSWORD

# host_enabled = 0

[MASKED]

hostname = MASKEDIP

group = netapp_group

username = MASKEDUSER

password = MASKEDPASSWORD

# host_enabled = 0

# ocum (default for um)

[MASKED]

hostname = MASKED

group = netapp_group

host_type = OCUM

data_update_freq = 900

normalized_xfer = gb_per_sec

template = ocum-opm-hierarchy.conf

graphite_root = netapp-capacity.Clusters.{display_name}

graphite_meta_metrics_root = netapp-capacity-poller.{group}