Active IQ Unified Manager Discussions

NetApp Harvest v1.3 is available!


I am pleased to announce that Harvest v1.3 is now available on the NetApp Support Toolchest! This feature release includes lots of new counters, dashboard panels, and, of course, bug fixes. If you are looking for support for ONTAP 9 and 9.1, and for OCUM 6.4/7.0/7.1, it's in there too.

More info is in my blog here: Announcing NetApp Harvest v1.3

And the software can be downloaded here: NetApp Harvest v1.3

Cheers,

Chris Madden

Solution Architect - 3rd Platform - Systems Engineering NetApp EMEA (and author of Harvest)

Blog: It all begins with data

If this post resolved your issue, please help others by selecting ACCEPT AS SOLUTION or adding a KUDO or both!


Awesome! - thanks Chris


Looks great. The only issue I'm seeing so far is that there are no Flash Pool stats on my lab 2250 running 9.0 GA.


Hi @michaelll

Thanks for the feedback. Unfortunately I don't have a Flash Pool system with ONTAP 9 in my lab. I will send you a private message to see whether you can collect logs and send them to me so I can diagnose the issue.

Cheers,

Chris Madden


Questions,

Do Harvest/Grafana support RBAC (LDAP)?

Also, does this product allow monitoring of certain NetApp processes like Kahuna?

Thanks,


>>Do Harvest/Grafana support RBAC(LDAP)?

Yes. In the Harvest admin guide are steps to create a least-privilege RBAC read-only role. This role can be applied to a local or remotely authenticated user account.

>>Also does this product allow for monitoring of certain NetApp process like Kahuna?

Yes. Harvest tracks average CPU per node and CPU per processing domain, including Kahuna.

Cheers,

Chris Madden


Hi Chris,

I followed the source upgrade instructions (upgrading from version 1.2.2). When I tried to start the services in step 9, I got the following error:

Can't locate IO/Socket/SSL.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl5 /usr/lib64/perl5 /usr/share/perl5 .) at /opt/netapp-harvest/netapp-manager line 25

BEGIN failed--compilation aborted at /opt/netapp-harvest/netapp-manager line 25

The system is RHEL6 and was previously installed with the source instructions.

From what I can see, "use IO::Socket::SSL;" on line 25 of netapp-manager is new in Harvest 1.3.

Is there a prerequisite needed to get this working?


Hi @jonathon_lanzon,

Here is the list of prerequisites for RHEL/CentOS as found in the Harvest v1.3 admin guide:

sudo yum install -y epel-release unzip perl perl-JSON perl-libwww-perl perl-XML-Parser perl-Net-SSLeay perl-Time-HiRes perl-LWP-Protocol-https

sudo yum install -y perl-Excel-Writer-XLSX

Can you run these and let me know whether that resolves the issue?

If that doesn't work, one more package may be required:

sudo yum install -y perl-IO-Socket-SSL

My test system is CentOS, so the default modules may differ on RHEL. I would appreciate your feedback on the fix.
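For anyone hitting the same kind of error with a different module: the missing module name can be read straight from Perl's "Can't locate ... in @INC" message, and on RHEL/CentOS the package is often named `perl-` plus the module name with `::` replaced by `-` (as with `perl-IO-Socket-SSL` above). The Python sketch below automates that lookup. The naming rule is a heuristic, not a guarantee (LWP, for example, ships in `perl-libwww-perl`), and the function names are illustrative only.

```python
import re
from typing import Optional

# Perl's standard message when a module is missing, e.g.
# "Can't locate IO/Socket/SSL.pm in @INC (@INC contains: ...)"
_MISSING_RE = re.compile(r"Can't locate (\S+)\.pm in @INC")


def missing_perl_module(error_text: str) -> Optional[str]:
    """Return the missing module name (e.g. 'IO::Socket::SSL'), or None."""
    match = _MISSING_RE.search(error_text)
    if match is None:
        return None
    # The error shows a file path with '/'; module names use '::'.
    return match.group(1).replace("/", "::")


def guess_rpm_package(module: str) -> str:
    """Guess the RHEL/CentOS package via the common perl-Foo-Bar convention.

    Heuristic only: some modules live in differently named packages."""
    return "perl-" + module.replace("::", "-")


if __name__ == "__main__":
    err = ("Can't locate IO/Socket/SSL.pm in @INC (@INC contains: /usr/share/perl5 .) "
           "at /opt/netapp-harvest/netapp-manager line 25")
    module = missing_perl_module(err)
    if module is not None:
        print(module)
        print(guess_rpm_package(module))
```

After installing the package, `perl -MIO::Socket::SSL -e 1` exits silently if the module now loads.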

Cheers,

Chris Madden


Awesome, thanks for all the hard work. We use Harvest/Graphite/Grafana every day!


Another question: does Harvest offer a feature to send email alerts based on thresholds, or is it meant more as a dashboard supplement to OCUM? I know OCUM can do some alerting.

Thank you


Harvest is a data source that sends collected data to a time series database, usually Graphite, and then you visualize it somehow, usually Grafana.
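As background on that data path: Graphite's carbon daemon accepts metrics over a simple plaintext protocol, one `<metric path> <value> <unix timestamp>` line per data point, by default on TCP port 2003. The sketch below illustrates that protocol in Python. It is not Harvest's own code, and the metric path in the example is hypothetical.

```python
import socket
import time
from typing import Iterable, Optional


def graphite_line(path: str, value: float, timestamp: Optional[int] = None) -> str:
    """Format one data point in Graphite's plaintext protocol."""
    if " " in path:
        raise ValueError("metric paths must not contain spaces")
    ts = int(time.time()) if timestamp is None else int(timestamp)
    return f"{path} {value} {ts}\n"


def send_to_graphite(lines: Iterable[str], host: str = "localhost", port: int = 2003) -> None:
    """Send pre-formatted lines to a carbon plaintext listener.

    2003 is carbon's default plaintext port; adjust for your setup."""
    payload = "".join(lines).encode("ascii")
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(payload)


if __name__ == "__main__":
    # Hypothetical metric path, for illustration only.
    line = graphite_line("netapp.perf.site1.cluster1.node.node01.cpu_busy", 42.5, 1480000000)
    print(line, end="")
```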

Harvest itself does not have an alerting capability. Graphite doesn't either, but there are a few add-ons you can use, the most interesting of which looks like Moira. Grafana also just added alerting in v4.0, which is currently in beta.

OnCommand Unified Manager has alerting built in natively. It also understands much more about the relationships between instances in a cluster, or between clusters. I would recommend starting with Unified Manager and, if it doesn't meet your needs, then adding Harvest/Graphite/Grafana for a deeper look. The solutions are complementary.
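If you want simple threshold checks on Harvest data in the meantime, one option is a small script that polls Graphite's render API (`/render?target=...&from=-10min&format=json`) and inspects the returned datapoints. A minimal sketch, assuming a reachable Graphite server at a hypothetical URL and a target of your choosing; actually sending the email (for example with Python's `smtplib`) is left out.

```python
import json
import urllib.parse
import urllib.request
from typing import List, Tuple


def fetch_render(base_url: str, target: str, window: str = "-10min") -> str:
    """Fetch a Graphite render API response as JSON text."""
    query = urllib.parse.urlencode({"target": target, "from": window, "format": "json"})
    with urllib.request.urlopen(f"{base_url}/render?{query}", timeout=10) as resp:
        return resp.read().decode("utf-8")


def breaches(render_json: str, threshold: float) -> List[Tuple[str, float, int]]:
    """Return (target, value, timestamp) for each datapoint above threshold."""
    hits = []
    for series in json.loads(render_json):
        for value, ts in series["datapoints"]:
            # Graphite returns null (None here) for intervals with no data.
            if value is not None and value > threshold:
                hits.append((series["target"], value, ts))
    return hits


if __name__ == "__main__":
    # Canned response in the render API's JSON shape. In practice you would call
    # fetch_render("http://graphite.example.com", "<your target>") (hypothetical URL).
    sample = ('[{"target": "node01.cpu_busy", '
              '"datapoints": [[55.0, 1480000000], [null, 1480000060], [91.5, 1480000120]]}]')
    for target, value, ts in breaches(sample, threshold=90):
        print(f"ALERT {target}={value} at {ts}")  # an email could be sent here
```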

Cheers,

Chris Madden


Harvest itself is not responsible for email alerting. It simply grabs time-series metrics and writes them to a database.

However, Grafana (the dashboard software) does have email alerting as of v4.0, which is currently in beta. It looks promising.


Thanks Chris! Been awaiting this release since Insight! Just upgraded, and it looks great. Thanks again


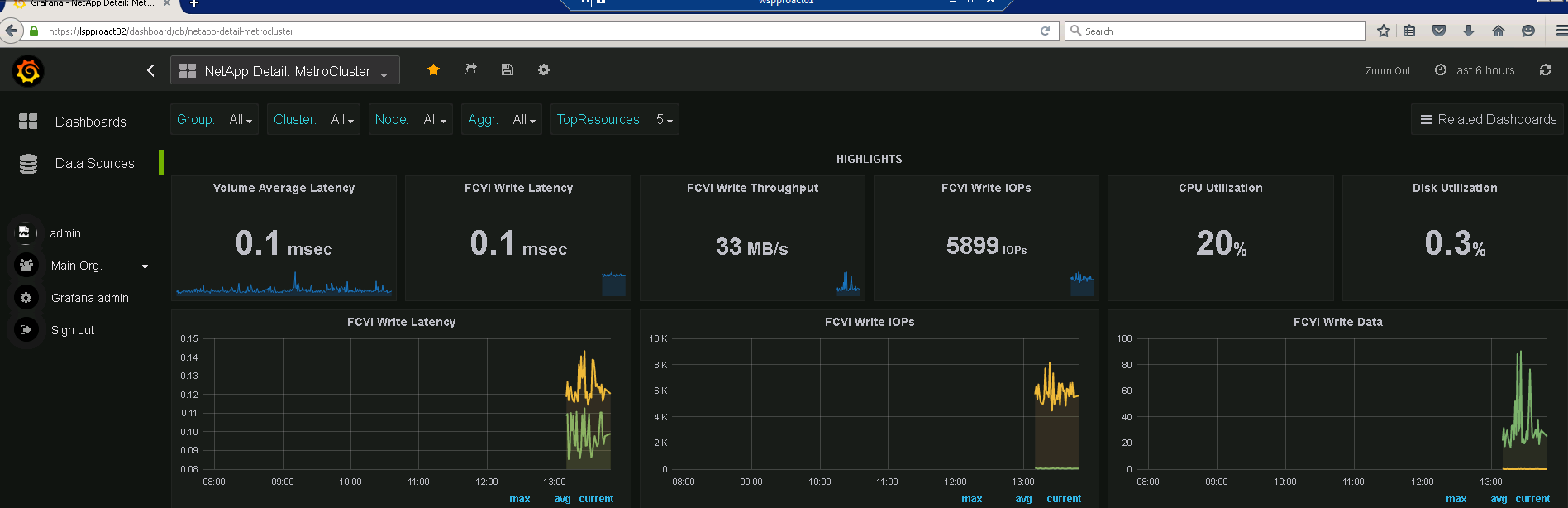

After upgrading, some of my "related dashboards" don't work any more. It looks like it is all the new dashboards, e.g. MetroCluster.

It is a MetroCluster that I am monitoring, so it should work.

If I select the dashboard from the drop-down in the upper left corner, it works most of the time, but the related dashboard link never works.

It is clearly something in the settings that the related dashboard link passes along.

https://lspproact02/dashboard/db/netapp-detail-metrocluster?from=now-6h&to=now&var-Group=site1&var-Cluster=sapdc110&var-Node=scpdc110&var-TopResources=5

If I change that to

https://lspproact02/dashboard/db/netapp-detail-metrocluster

then it works just fine.

So I was wondering whether this is a general issue or whether my dashboard configuration is defective.

My CPU and disk utilization panels still show just the percentage number; there is no fancy speedometer.

But the data is really great, and I like that we are now able to monitor FCVI traffic and so on.

So all in all, a really great upgrade.


Some dashboards have template drop-down boxes that allow only a single item to be selected, while others allow multi-pick or 'All'. If you view a dashboard that allows multi-pick or 'All' and choose one of those options, and then use Related Dashboards to go to a dashboard that does not allow multi-pick or 'All', the dashboard won't populate correctly. To resolve this, choose from the template items again on the related dashboard, or, before you use Related Dashboards, make sure each drop-down has only a single item selected.
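To check whether a related-dashboard link will run into this, look at the `var-*` parameters in its URL. The sketch below flags template variables that carry several values or 'All'. It assumes Grafana encodes a multi-selection as repeated `var-<name>` parameters and the 'All' option as the value `All` or `$__all`; that encoding may vary by Grafana version, so treat it as an assumption.

```python
from typing import Dict, List
from urllib.parse import parse_qs, urlsplit

# Assumed encodings of Grafana's 'All' option in dashboard URLs.
ALL_VALUES = ("All", "$__all")


def risky_template_vars(url: str) -> Dict[str, List[str]]:
    """Return var-* parameters that have more than one value or 'All'."""
    params = parse_qs(urlsplit(url).query)
    risky = {}
    for key, values in params.items():
        if not key.startswith("var-"):
            continue
        if len(values) > 1 or any(v in ALL_VALUES for v in values):
            risky[key[len("var-"):]] = values
    return risky


if __name__ == "__main__":
    # Hypothetical URL with a multi-pick Node and an 'All' Cluster selection.
    url = ("https://grafana.example.com/dashboard/db/some-dashboard"
           "?var-Group=site1&var-Cluster=All&var-Node=n1&var-Node=n2")
    print(risky_template_vars(url))
```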

On the CPU and disk utilization %, these should be speedometers. I just double-checked, and the v1.3 Toolchest posting includes them. If you have a pre-final release of 1.3 (an X release), try updating your dashboards again to resolve this.

Hope this helps. If you still have open questions, please create a new post on Communities with more details (this thread is getting too long).

Cheers,

Chris Madden


Hi, all I did was run the .deb because I'm running Ubuntu, and then restarted. Am I missing something? I don't see anything different. I see a netapp-harvest folder, but I'm not sure what other steps to take according to the upgrade process in the PDF.

Thank you,

Below is what I did...

:/tmp# dpkg -i netapp-harvest_1.3_all.deb

Selecting previously unselected package netapp-harvest.

(Reading database ... 70267 files and directories currently installed.)

Preparing to unpack netapp-harvest_1.3_all.deb ...

### Creating netapp-harvest group ... OK

### Creating netapp-harvest user ... OK

Unpacking netapp-harvest (1.3) ...

Setting up netapp-harvest (1.3) ...

### Autostart NOT configured. Execute the following statements to configure auto start:

sudo update-rc.d netapp-harvest defaults

### Configure your pollers in /opt/netapp-harvest/netapp-harvest.conf.

### After pollers are configured start netapp-harvest by executing:

sudo service netapp-harvest start

Processing triggers for ureadahead (0.100.0-16) ...

ureadahead will be reprofiled on next reboot

root@s1lsnvcp1:/tmp# reboot


OK, it looks amazing, love it. I came in this morning and for some reason it showed up; I guess I had to refresh my browser.

Love seeing the CPU and disk utilization of all cluster nodes at a glance. Great job, Chris.


Hi Chris, I have noticed that the new netapp-harvest 1.3 performance charts have a 10-minute delay in displaying data in the Grafana graphs. If I'm not mistaken, the previous version showed data up to the minute. Is there any way to change this, or is it just the nature of it?


Hi @rcasero

Glad you like the solution!

After installing or upgrading to v1.3 (Linux package installer or source technique), you do need to import the new dashboards. Note that these will overwrite the old Harvest-supplied ones, so if you customized them, make sure you 'save as' to a new name before running the command below, or you will lose your customizations:

/opt/netapp-harvest/netapp-manager -import

Regarding the 10-minute time skew, I suspect the Linux VM where Harvest runs is not properly synced to time. To resolve it, set up NTP on the VM, or NTP on your ESX virtualization hosts (the VMs living on a host pick up its emulated clock). As a best practice, make sure all components (ONTAP, OCUM, Harvest, Graphite) are synced with NTP.
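One way to confirm a skew is to compare the timestamp of the newest non-null datapoint in Graphite with the current time. With clocks in sync, the lag should be roughly one polling interval; a consistent lag of about 600 seconds across series would fit a 10-minute clock offset. A minimal sketch over the Graphite render API's JSON output (`format=json`), with illustrative names; fetching the JSON works the same way as any render API call.

```python
import json
import time
from typing import Dict, Optional


def newest_point_lag(render_json: str, now: Optional[float] = None) -> Dict[str, Optional[float]]:
    """Seconds between `now` and each series' newest non-null datapoint.

    Returns None for a series with no data in the requested window."""
    now = time.time() if now is None else now
    lags: Dict[str, Optional[float]] = {}
    for series in json.loads(render_json):
        stamps = [ts for value, ts in series["datapoints"] if value is not None]
        lags[series["target"]] = (now - max(stamps)) if stamps else None
    return lags


if __name__ == "__main__":
    sample = ('[{"target": "node01.cpu_busy", '
              '"datapoints": [[1.0, 1000], [2.0, 1060], [null, 1120]]}]')
    print(newest_point_lag(sample, now=1700))
```

Run it against a few series on the Graphite host and the Harvest host; if the lag tracks the clock difference reported by `date` on each machine, NTP is the fix.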

If this doesn't solve your issue, please create a new post with more details, because this thread is getting too long with very mixed topics.

Cheers,

Chris Madden


Hi Chris,

Last week I set up our Grafana/Graphite site with NetApp Harvest v1.3 (running on CentOS 7).

Almost everything is working really well! Thanks for putting so much effort into this!

The "almost" is only related to the "Netapp Detail: 7-Mode LUN" dashboard.

When I select it, a red message box shows up in the top right corner of the screen with the following message:

"Dashboard init failed

Template variables could not be initialized: undefined"

I tried to find a similar situation on the forums but could not.

Would you have an idea where I should start troubleshooting? I'm still learning...

Thanks a lot,

Alex


Hi @Alex_W

Great that you like the solution! Regarding your question, I'm not sure what could cause this. On a fresh install the template drop-downs are empty, and Grafana queries Graphite to populate them. Some fields depend on (are nested under) others, so maybe this is causing an issue. I would try picking valid values from left to right in the template drop-downs for a LUN that you know has activity, and see whether the dashboard populates properly. If it does, save the dashboard, which persists your choices to the database. Then navigate to another dashboard, come back to the LUN dashboard, and check the results.
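To see which values Grafana can actually offer at each template level, you can run the same kind of lookup against Graphite's `/metrics/find` endpoint, walking left to right. A sketch, assuming the default tree-JSON response (a list of objects with a `text` field) and that Harvest's 7-Mode metrics live under a `netapp.perf7.` prefix; both are assumptions, so verify the prefix against your own Graphite tree. The Graphite URL is hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import List


def find_url(base_url: str, query: str) -> str:
    """Build a Graphite /metrics/find URL for a glob query."""
    return f"{base_url}/metrics/find?{urllib.parse.urlencode({'query': query})}"


def child_names(find_json: str) -> List[str]:
    """Extract sorted node names from a /metrics/find tree-JSON response."""
    return sorted(node["text"] for node in json.loads(find_json))


def list_children(base_url: str, query: str) -> List[str]:
    """Query Graphite and return the names matching the glob."""
    with urllib.request.urlopen(find_url(base_url, query), timeout=10) as resp:
        return child_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Level 1 (assumed prefix): which groups exist under netapp.perf7?
    print(find_url("http://graphite.example.com", "netapp.perf7.*"))
    # Then append a returned group plus ".*" to list the next level, and so on.
```

An empty list at some level would mean that drop-down has nothing to populate from, which is a good place to start looking.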

If this doesn't answer your question, please create a new post with more details and I'll try to give a more complete answer.

Cheers,

Chris Madden
