Active IQ Unified Manager Discussions

Table data shows in KB instead of MB


It appears that whenever I pull from the qtree.disk_limit_mb column in WFA, the values returned are actually in KB rather than MB (as the column name would suggest). But when I pull the similar column for volumes (volume.size_mb), it does report in MB, as the name says.

I am also curious why a query run as an administrator takes half as long as the exact same query run as an operator. The data comes from the same source either way, so it makes no sense that it would take longer.

Thanks!


Hi Kevin,

> It appears whenever I pull from the qtree.disk_limit_mb table in WFA, the values returned are actually in KB instead of MB (like the table name would suggest). But when I pull a similar table for volumes (volume.size_mb), it does report in MB like the name says.

Can you tell us which version of WFA you are using? Also, I assume this is 7-Mode; correct me if I am wrong.

> Am also curious why a query as an administrator takes half the time as the exact same query as an operator. The data comes from the same source regardless, so makes no sense why it would take longer.

It would help us debug the issue if you could share your query. If possible, also share the execution logs showing the time taken when running as admin and when running as operator.

Regards

adai


Hello Adai,

It's WFA version 2.1.0.70.32, running against cDOT systems (8.2P2 and 8.2P3).

The query is as follows:

```sql
SELECT
    vserver.name
FROM
    cm_storage.cluster cluster,
    cm_storage.vserver vserver
WHERE
    vserver.cluster_id = cluster.id
    AND cluster.name = '${ClusterName}'
    AND vserver.is_repository IS NOT TRUE
    AND (
        vserver.type = 'cluster'
        OR vserver.type = 'data'
    )
ORDER BY
    vserver.name ASC
```

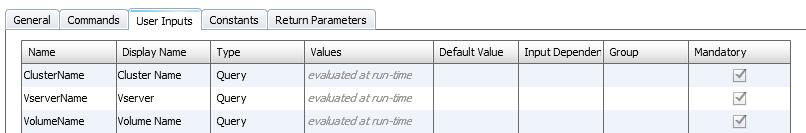

We don't have execution logs, because these SQL queries run only during the user-input steps, before the workflow is ever told to preview or execute. The screenshot shows the value the query evaluated to at run time. ClusterName lists all clusters that OnCommand knows about; based on that selection, the VserverName query updates to show only the vservers owned by that cluster. Likewise, VolumeName shows only the volumes owned by the selected vserver. These are just SQL queries defined in the user inputs, and I don't believe they are logged anywhere, since they are evaluated dynamically.
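To make the cascading behaviour concrete, here is a minimal sketch that emulates the ClusterName, then VserverName, then VolumeName chain against an in-memory SQLite database. It assumes heavily simplified, hypothetical versions of the cm_storage cluster, vserver and volume tables (the real WFA cache lives in MySQL and has many more columns), and it binds the selected value as a query parameter where WFA substitutes ${ClusterName} into the query text. The VolumeName query is illustrative only, since it is not shown in this thread.

```python
import sqlite3

# Hypothetical, heavily simplified stand-ins for the WFA cm_storage cache tables.
SCHEMA = """
CREATE TABLE cluster (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE vserver (id INTEGER PRIMARY KEY, name TEXT, cluster_id INTEGER,
                      type TEXT, is_repository INTEGER);
CREATE TABLE volume (id INTEGER PRIMARY KEY, name TEXT, vserver_id INTEGER);
"""

# Mirrors the VserverName user-input query; ${ClusterName} becomes a bound parameter.
VSERVER_QUERY = """
SELECT vserver.name
FROM cluster, vserver
WHERE vserver.cluster_id = cluster.id
  AND cluster.name = ?
  AND COALESCE(vserver.is_repository, 0) = 0  -- NULL or false passes, like IS NOT TRUE
  AND vserver.type IN ('cluster', 'data')
ORDER BY vserver.name ASC
"""

# Hypothetical VolumeName query that depends on both earlier selections.
VOLUME_QUERY = """
SELECT volume.name
FROM cluster, vserver, volume
WHERE volume.vserver_id = vserver.id
  AND vserver.cluster_id = cluster.id
  AND cluster.name = ?
  AND vserver.name = ?
ORDER BY volume.name ASC
"""


def build_demo_db():
    """Create an in-memory database filled with made-up sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO cluster VALUES (?, ?)",
                     [(1, "clusterA"), (2, "clusterB")])
    conn.executemany("INSERT INTO vserver VALUES (?, ?, ?, ?, ?)", [
        (10, "vs_a1", 1, "data", 0),
        (11, "clusterA-01", 1, "node", 0),  # excluded by the type filter
        (12, "vs_a2", 1, "data", None),     # NULL is_repository is still listed
        (13, "vs_repo", 1, "data", 1),      # repository vserver is excluded
        (20, "vs_b1", 2, "data", 0),
    ])
    conn.executemany("INSERT INTO volume VALUES (?, ?, ?)",
                     [(100, "vol1", 10), (101, "vol2", 10), (102, "vol3", 20)])
    return conn


def vserver_choices(conn, cluster_name):
    """Values the VserverName input would offer once a cluster is picked."""
    return [row[0] for row in conn.execute(VSERVER_QUERY, (cluster_name,))]


def volume_choices(conn, cluster_name, vserver_name):
    """Values the VolumeName input would offer once a vserver is picked."""
    return [row[0] for row in conn.execute(VOLUME_QUERY, (cluster_name, vserver_name))]


if __name__ == "__main__":
    conn = build_demo_db()
    print(vserver_choices(conn, "clusterA"))          # ['vs_a1', 'vs_a2']
    print(volume_choices(conn, "clusterA", "vs_a1"))  # ['vol1', 'vol2']
```

Each selection simply re-runs the next query with the new value, which is why nothing shows up in the workflow execution logs until the workflow itself is previewed or executed.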

Please let me know what other information will help. Thanks!


Kevin,

To answer your first question ("qtree.disk_limit_mb table in WFA, the values returned are actually in KB instead of MB"):

I don't see it. It's returned in MB.

On the cluster:

```
f3270-251-16-18::*> quota policy rule show -fields disk-limit
vserver policy-name volume   type target                qtree disk-limit
------- ----------- -------- ---- --------------------- ----- ----------
dhruvd  default     vol_test tree qtree_21022014_133557 ""    200MB
```

Data from WFA tables, query executed outside of WFA:

```
PS C:\Users\Administrator> Get-MySqlData -Query "SELECT *
FROM
cm_storage.qtree
WHERE qtree.name LIKE '%133557%'"
1

id                 : 160
name               : qtree_21022014_133557
oplock_mode        : 0
path               : /vol_test/qtree_21022014_133557
security_style     : ntfs
volume_id          : 106
disk_limit_mb      : 200
disk_soft_limit_mb : 0
files_limit        : 0
soft_files_limit   : 0
threshold_mb       : 0
disk_used_mb       : 0
```

Data from query execution within WFA:


Kevin,

Is your OCUM 5.x the Cluster-Mode edition? My post above used OCUM 6.1, which is why the results were correct. Thanks to Shailaja, who pointed out that the cache query for OCUM 5.x clustered Data ONTAP doesn't divide qtreeKBytesLimit by 1024, so the data obtained is in KB instead of MB. This is a bug in the WFA cache query for OCUM 5.x clustered Data ONTAP. The same bug also causes disk_soft_limit_mb to be shown in KB instead of MB. A bug will be filed, and the issue should be fixed in a future WFA release.
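The arithmetic behind the bug: a 200 MB quota is 200 × 1024 = 204800 KB, so when the cache query stores qtreeKBytesLimit without dividing by 1024, disk_limit_mb shows 204800 instead of 200. Until a fixed release is installed, anything consuming these rows could convert the two affected columns itself. The sketch below is a hypothetical workaround only; the function names and the from_ocum5_cdot flag are illustrative and not part of WFA.

```python
KB_PER_MB = 1024


def kb_to_mb(kbytes):
    """Convert a kilobyte count (the unit of qtreeKBytesLimit) to megabytes."""
    if kbytes is None:
        return None
    return kbytes / KB_PER_MB


def corrected_qtree_limits(row, from_ocum5_cdot):
    """Return a copy of a qtree row with the affected *_mb columns converted.

    Hypothetical workaround: only rows cached from an OCUM 5.x clustered
    Data ONTAP data source hold KB values in these two columns, so other
    rows are returned unchanged.
    """
    fixed = dict(row)
    if from_ocum5_cdot:
        for column in ("disk_limit_mb", "disk_soft_limit_mb"):
            fixed[column] = kb_to_mb(row[column])
    return fixed


if __name__ == "__main__":
    # Made-up row as the buggy cache would store a 200 MB hard limit.
    cached = {"name": "qtree_example", "disk_limit_mb": 204800, "disk_soft_limit_mb": 0}
    print(corrected_qtree_limits(cached, from_ocum5_cdot=True))
```

Dividing in the consumer is only a stopgap; once the cache query itself divides by 1024, the flag should be dropped, or values would be converted twice.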


Hi Kevin,

Thanks for all the info. Can you also open a case with NetApp for this issue and attach it to bug ID 803424?

To open a case, use a FAS controller serial number and include the WFA System ID and ASUP logs.

Please let us know the case number once it is opened.

Thanks

Regards

adai


Thank you, Sinhaa and Adai. I have relayed the info to the customer so they can open a case and reference the bug. Thanks for your quick support!


Hi all,

I would like to add that the issue has now been fixed for future releases.

Thanks,

Anil