Active IQ Unified Manager Discussions

Re: netapp-harvest ocum capacity metrics

I am not seeing capacity metrics from OCUM in the netapp-harvest database.

Does any other config need to be added or checked for OCUM besides the following, taken from the netapp-harvest documentation?

I updated the entries below with our environment info in our netapp-harvest.conf:

[INSERT_OCUM_HOSTNAME_HERE]

hostname = INSERT_IP_ADDRESS_OR_HOSTNAME_OF_OCUM_HOSTNAME

site = INSERT_SITE_IDENTIFIER_HERE

host_type = OCUM

data_update_freq = 900

normalized_xfer = gb_per_sec

template = ocum-opm-hierarchy.conf

graphite_root = netapp-capacity.Clusters.{display_name}

graphite_meta_metrics_root = netapp-capacity-poller.INSERT_OCUM_HOSTNAME_HERE

From the logs, I see this for OCUM:

[2015-10-02 10:59:08] [WARNING] [sysinfo] Discovered [CDOT-1] on OCUM server with no matching conf section; to collect this cluster please add a section

[2015-10-02 10:59:08] [WARNING] [sysinfo] Discovered [CDOT-2] on OCUM server with no matching conf section; to collect this cluster please add a section


You also need to add a section in the .conf for the cluster you are monitoring. The "site" value ties OCUM to the cluster. Example:

#====== 7DOT (node) or cDOT (cluster LIF) for performance info ================

[my-clst-01]

hostname = 10.0.0.0

site = GCC

#====== OnCommand Unified Manager (OCUM) for cDOT capacity info ===============

[ocum-gcc]

hostname = 10.0.0.1

site = GCC

host_type = OCUM

data_update_freq = 900

normalized_xfer = gb_per_sec

[...]


I had different site names because the clusters are in different locations; I updated the site to the same name for all of them.

After a restart I am still getting "no matching conf section" in the logs.

Did you add each cluster once (for perf), or twice, once for OCUM capacity and once for perf?

[my-clst-01]

hostname = 10.0.


One of the reasons for my issue was that I used the hostname.subdomain.com DNS name instead of just the hostname in the graphite config [hostname].

I fixed this and then it worked.

Thanks for the help.


Hi,

If you use Harvest for both capacity and performance, the metrics hierarchies are adjacent to each other. On my system, from a Linux perspective:

/opt/graphite/storage/whisper/netapp/capacity/nl/sdt-cdot1/node/sdt-cdot1-01/aggr/n01fc01/

/opt/graphite/storage/whisper/netapp/perf/nl/sdt-cdot1/node/sdt-cdot1-01/aggr/n01fc01/

To build that hierarchy Harvest needs to know the clustername and the site for all monitored systems.
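To make the layout concrete, here is a minimal Python sketch of how a dotted metric prefix maps onto Graphite's whisper directories. It relies on Graphite's convention of storing each dot-separated node of a metric name as one directory level. The storage root is taken from the example paths above and will differ on other installs (for example /var/lib/graphite/whisper); `metric_prefix` and `whisper_dir` are hypothetical helpers for illustration, not part of Harvest.

```python
from pathlib import PurePosixPath

# Assumed whisper storage root, taken from the example paths above;
# packaged Graphite installs often use /var/lib/graphite/whisper instead.
WHISPER_ROOT = PurePosixPath("/opt/graphite/storage/whisper")


def metric_prefix(kind: str, site: str, cluster: str, *rest: str) -> str:
    """Build a dotted prefix such as netapp.capacity.<site>.<cluster>.<rest...>.

    Perf and capacity share the same <site>.<cluster> layout, so their
    directory trees end up side by side under netapp/.
    """
    if kind not in ("perf", "capacity"):
        raise ValueError(f"unexpected kind: {kind!r}")
    return ".".join(["netapp", kind, site, cluster, *rest])


def whisper_dir(prefix: str, root: PurePosixPath = WHISPER_ROOT) -> PurePosixPath:
    """Graphite stores each dot-separated node of a metric name as a directory level."""
    return root.joinpath(*prefix.split("."))


# Hypothetical usage with the names from the example paths above.
for kind in ("capacity", "perf"):
    prefix = metric_prefix(kind, "nl", "sdt-cdot1", "node", "sdt-cdot1-01", "aggr", "n01fc01")
    print(whisper_dir(prefix))
```

Both printed directories differ only in the capacity/perf node, which is why site and cluster name must be known for every monitored system.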

For Perf it uses the poller name (the one in the section header []) and the site name.

With the OCUM integration you add the OCUM server itself and Harvest then learns all clusternames monitored by OCUM. Harvest then looks for a poller section that matches the clustername to find the site to post the capacity metrics.

So the recommended way to do it is to have the cDOT poller section name match the clustername. Then when adding an OCUM poller it will be able to find the sites for each cDOT system.

An example of pollers:

[sdt-cdot1]
hostname = 10.64.28.242
site = nl

[sdt-um]
hostname = 10.64.28.77
site = uk
host_type = OCUM
data_update_freq = 900
normalized_xfer = gb_per_sec

So the first poller section is named after the cluster name (from 'cluster identity show', or the default prompt in the cDOT CLI) and lists the cluster's IP address and site. The second poller section is for OCUM and lists its IP address and a site (used for poller metrics only). The OCUM poller then discovers all clusters known to OCUM and looks up a poller section for each one to get the site under which to submit its capacity metrics. So in this case the capacity metrics for cluster sdt-cdot1 will be loaded under site nl, and the metadata metrics for the poller itself will be loaded under site uk.

I hope this makes sense. You aren't the first person to have an issue configuring this OCUM integration so I think I need to update the docs to explain it better.

Cheers,

Chris Madden

Storage Architect, NetApp EMEA (and author of Harvest)

Blog: It all begins with data


If we have one OCUM server monitoring clusters in different locations, how do we define the config for clusters at multiple sites with one OCUM server at one site?

Adding Chris's reply to this:

madden wrote:

Capacity metrics for a cluster are submitted using the site from the cluster’s own poller entry. So the capacity data is in the same site as the performance data because it learns site from the cluster’s poller definition. The site in the OCUM poller is only for metrics from the poller itself like api_time, # metrics submitted, etc.

J_Curl wrote:

The "site" value ties OCUM to the cluster.


Hi,

Here could be an example:

[uk-cluster1]
hostname = 10.64.28.242
site = uk

[nl-cluster1]
hostname = 10.64.28.243
site = nl

[jp-unifiedmanager1]
hostname = 10.64.28.77
site = jp
host_type = OCUM
data_update_freq = 900
normalized_xfer = gb_per_sec

- Harvest will collect capacity info for all clusters defined in jp-unifiedmanager1.

- Capacity metrics for uk-cluster1 will be submitted under site uk.

- Capacity metrics for nl-cluster1 will be submitted under site nl.

- Poller metrics for jp-unifiedmanager1 (like how many metrics it posted, how long it took the UM API to respond, etc) will be submitted under site jp.

- Any other clusters known to jp-unifiedmanager1 but that don't have a poller entry will not submit metrics and will log a message like: "[2015-10-02 10:59:08] [WARNING] [sysinfo] Discovered [CDOT-2] on OCUM server with no matching conf section; to collect this cluster please add a section". If you want capacity metrics for CDOT-2 you need to add a poller entry so that the site can be learned.
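The lookup described in this list can be sketched in Python as follows. This illustrates the described behaviour and is not Harvest's own code. It assumes the conf parses as standard INI via `configparser`, and that a discovered cluster only matches a section whose header is exactly the cluster name (so a header written as hostname.subdomain.com would not match a cluster OCUM reports as hostname). `resolve_sites` is a hypothetical helper.

```python
import configparser


def resolve_sites(conf_text: str, discovered: list[str]):
    """Hypothetical sketch of the OCUM poller's cluster -> site lookup.

    Returns (capacity_sites, meta_sites, warnings):
      capacity_sites: discovered cluster -> site of its own poller section
      meta_sites:     OCUM poller section -> site used for its own poller metrics
      warnings:       one message per discovered cluster without a matching section
    Assumption: the match is on the exact section header (case-sensitive here).
    """
    cp = configparser.ConfigParser(interpolation=None)  # avoid %-interpolation in passwords
    cp.read_string(conf_text)

    # The OCUM poller's own site only applies to its metadata metrics.
    meta_sites = {
        name: cp[name].get("site")
        for name in cp.sections()
        if cp[name].get("host_type", "").upper() == "OCUM"
    }

    capacity_sites, warnings = {}, []
    for cluster in discovered:
        if cp.has_section(cluster) and cluster not in meta_sites:
            # Capacity metrics inherit the site of the cluster's own poller entry.
            capacity_sites[cluster] = cp[cluster].get("site")
        else:
            warnings.append(
                f"Discovered [{cluster}] on OCUM server with no matching conf section; "
                "to collect this cluster please add a section"
            )
    return capacity_sites, meta_sites, warnings


# Example config from this post.
CONF = """
[uk-cluster1]
hostname = 10.64.28.242
site = uk

[nl-cluster1]
hostname = 10.64.28.243
site = nl

[jp-unifiedmanager1]
hostname = 10.64.28.77
site = jp
host_type = OCUM
data_update_freq = 900
normalized_xfer = gb_per_sec
"""

capacity, meta, msgs = resolve_sites(CONF, ["uk-cluster1", "nl-cluster1", "CDOT-2"])
print(capacity)  # capacity metrics: cluster -> site
print(meta)      # OCUM poller metrics: poller -> site
print(msgs)      # clusters that would be skipped
```

With this config, uk-cluster1 and nl-cluster1 resolve to uk and nl, the OCUM poller's own metrics go to jp, and CDOT-2 only produces the warning.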

Hope that helps!

Cheers,

Chris Madden



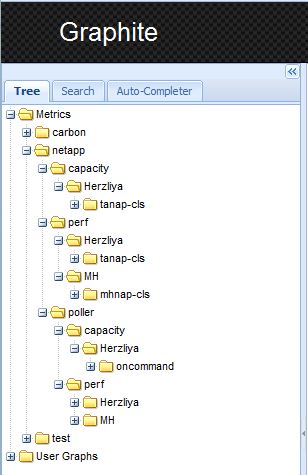

If that's the case, why does my Graphite server only show one site under the "capacity" metrics in the tree?

See the attached screenshot. I would expect to see both sites listed under "capacity", the way they both appear under "perf".

Thanks,

Moshe


Hi Moshe,

Correct, you should see two sites under netapp.capacity. Did you check the oncommand logfile in /opt/netapp-harvest/log for a hint about what could be wrong?

My guess is one of:

(a) mhnap-cls is not added to the oncommand server

(b) it has a different name on the oncommand server

(c) you need to do an F5 refresh in the web browser because the metrics arrived after you loaded the page

(d) you ran out of disk space and those metrics files can't be created

Cheers,

Chris Madden



Thanks Chris, checking the logs provided the answer. I had made a change to the mhnap-cls entry and never restarted the OCUM poller! Once I restarted it, it picked up both entries.

However, I noticed another error in the mhnap-cls log:

[WARNING] [lun] update of data cache failed with reason: For lun object, no instances were found to match the given query.

This cluster has no LUNs. Is that why I am getting these errors? They appear every minute! Is there any way to stop them?

Moshe


Hi Moshe,

Good to hear you found the issue with regards to capacity metrics.

The LUN WARNING message every minute can be encountered if you have a cluster that has LUNs but none of them are online. These LUNs show up in the list of LUNs but have no performance metrics available because they are not active. This bug was in my backlog to fix, and the fix will be in the next Toolchest release.

Cheers,

Chris


Hello,

My problem is similar, but I did not manage to solve it. The OCUM worker exits with an error.

Here is the OCUM worker log file:

cat /opt/netapp-harvest/log/NDC-PS-OCU-CDOT_netapp-harvest.log

[2016-07-06 17:07:28] [NORMAL ] WORKER STARTED [Version: 1.2.2] [Conf: netapp-harvest.conf] [Poller: NDC-PS-OCU-CDOT]

[2016-07-06 17:07:28] [NORMAL ] [main] Poller will monitor a [OCUM] at [10.197.208.166:443]

[2016-07-06 17:07:28] [NORMAL ] [main] Poller will use [password] authentication with username [netapp-harvest] and password [**********]

[2016-07-06 17:07:33] [WARNING] [sysinfo] Discovered [ndc-ps-cluster1] on OCUM server with no matching conf section; to collect this cluster please add a section

[2016-07-06 17:07:33] [WARNING] [sysinfo] Discovered [ndc-ps-cluster2] on OCUM server with no matching conf section; to collect this cluster please add a section

[2016-07-06 17:07:33] [NORMAL ] [main] Collection of system info from [10.197.208.166] running [6.4P1] successful.

[2016-07-06 17:07:33] [ERROR ] [main] No best-fit collection template found (same generation and major release, minor same or less) found in [/opt/netapp-harvest/template/default]. Exiting;

Here is the monitored hosts section from my netapp-harvest.conf

##

## Monitored host examples - Use one section like the below for each monitored host

##

#====== 7DOT (node) or cDOT (cluster LIF) for performance info ================

#

[ndc-ps-fas01]

hostname = 10.197.208.20

site = Grenoble_BTIC

[ndc-ps-fas02]

hostname = 10.197.208.30

site = Grenoble_BTIC

[ndc-ps-fas3240-1]

hostname = 10.197.208.80

site = Grenoble_BTIC

[ndc-ps-fas3240-2]

hostname = 10.197.208.90

site = Grenoble_BTIC

[ntap-energetic]

hostname = 10.197.189.167

site = Grenoble_BTIC

username = netapp-harvest

password = XXXXXXX

[ndc-ps-cluster1]

hostname = 10.197.208.29

site = Grenoble_BTIC

[ndc-ps-cluster2]

hostname = 10.197.208.32

site = Grenoble_BTIC

#====== OnCommand Unified Manager (OCUM) for cDOT capacity info ===============

#

[NDC-PS-OCU-CDOT]

hostname = 10.197.208.166

site = Grenoble_BTIC

host_type = OCUM

data_update_freq = 900

normalized_xfer = gb_per_sec

Thanks for your help.

Best regards.


Did you have this working with OCUM 6.3 or 6.2?

[2016-07-06 17:07:33] [NORMAL ] [main] Collection of system info from [10.197.208.166] running [6.4P1] successful.

[2016-07-06 17:07:33] [ERROR ] [main] No best-fit collection template found (same generation and major release, minor same or less) found in [/opt/netapp-harvest/template/default]. Exiting;

Has netapp-harvest been tested with OCUM 6.4P1 with full OPM integration?


@patrice_dronnier @SrikanthReddy

Harvest v1.2.2 does work with OCUM 6.4 and a default template will be included in the next release.

For now you can simply copy the OCUM 6.3 one to 6.4:

# cp /opt/netapp-harvest/template/default/ocum-6.3.0.conf /opt/netapp-harvest/template/default/ocum-6.4.0.conf

# netapp-manager -start
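The rule quoted in the error message ("same generation and major release, minor same or less") explains why the copy works. Below is a rough Python sketch of that selection, based only on the wording of the message. It assumes templates are named ocum-<generation>.<major>.<minor>.conf and that a patch suffix such as P1 is ignored. `parse_version` and `best_fit_template` are hypothetical and do not reproduce Harvest's own code. Under these assumptions 6.4P1 parses as (6, 4, 0), so ocum-6.3.0.conf never qualifies, while the copied ocum-6.4.0.conf does.

```python
import re


def parse_version(text: str) -> tuple[int, int, int]:
    """Turn '6.4P1' or '6.3.0' into (generation, major, minor).

    Assumptions: a missing minor counts as 0 and a patch suffix like 'P1' is ignored.
    """
    m = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if not m:
        raise ValueError(f"unparseable version: {text!r}")
    gen, major, minor = m.groups()
    return int(gen), int(major), int(minor or 0)


def best_fit_template(version: str, filenames: list[str], prefix: str = "ocum-"):
    """Same generation and major release, highest minor <= running minor; else None."""
    want = parse_version(version)
    best = None
    for name in filenames:
        if not (name.startswith(prefix) and name.endswith(".conf")):
            continue
        have = parse_version(name[len(prefix):-len(".conf")])
        if have[:2] == want[:2] and have[2] <= want[2]:
            if best is None or have > best[0]:
                best = (have, name)
    return best[1] if best else None


print(best_fit_template("6.4P1", ["ocum-6.2.0.conf", "ocum-6.3.0.conf"]))  # None
print(best_fit_template("6.4P1", ["ocum-6.3.0.conf", "ocum-6.4.0.conf"]))  # ocum-6.4.0.conf
```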

Cheers,

Chris Madden


If this post resolved your issue, please help others by selecting ACCEPT AS SOLUTION or adding a KUDO or both!


I asked Chris about multiple sites/clusters on one OCUM server.

Only one OCUM server entry with one site is enough, as Chris confirmed:

"You should only add one poller for each OCUM server. The Poller connects to the OCUM server and discovers all clusters known to it. The poller then checks the conf file for FILER poller entries to learn the cluster -> site mapping for each cluster it discovered. "


Hello Chris,

With some delay, thanks for your answer.

Your solution solved my problem.

Best regards.

Patrice


Hi Chris,

I have the same problem. No error in the log but I get no data.

I have no idea what mhnap-cls is...


Hi @p_w

There should be something in the poller log (/opt/netapp-harvest/log) explaining why data can't be collected or forwarded to Graphite. You could try restarting the poller (/opt/netapp-harvest/netapp-manager -restart) to see if something is logged at startup that would indicate a config error. The "mhnap-cls" mentioned in the earlier post was the hostname from that other user and not something related to Harvest.
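If the poller log is long, a small Python sketch like the one below can pull out the clusters reported with "no matching conf section", that is, the ones that still need a poller section named exactly after the cluster. The regular expression is built only from the WARNING lines quoted in this thread, and the log file naming (<poller>_netapp-harvest.log) is taken from the example earlier in the thread. `unmatched_clusters` and `scan_file` are hypothetical helpers.

```python
import re
from pathlib import Path

# Built from the WARNING lines quoted in this thread (assumed stable format).
NO_MATCH = re.compile(
    r"\[WARNING\] \[sysinfo\] Discovered \[([^\]]+)\] on OCUM server with no matching conf section"
)


def unmatched_clusters(log_text: str) -> list[str]:
    """Cluster names OCUM reported that have no poller section, in first-seen order."""
    names: list[str] = []
    for match in NO_MATCH.finditer(log_text):
        if match.group(1) not in names:
            names.append(match.group(1))
    return names


def scan_file(path: str) -> list[str]:
    """Read one poller log, e.g. /opt/netapp-harvest/log/<poller>_netapp-harvest.log."""
    return unmatched_clusters(Path(path).read_text(errors="replace"))
```

Each returned name would need its own poller section (header equal to the cluster name, plus hostname and site), followed by a poller restart.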

Cheers,

Chris Madden



I hope it's OK to post a reply/question to a thread that is marked closed. I am having a similar problem: I don't see capacity data on the capacity graphs of the NetApp Harvest dashboards, and I am having a hard time working out whether I have things set up correctly.

Here is my OCUM poller entry from /opt/netapp-harvest/netapp-harvest.conf:

[OCUM_CEPINVAP296]

hostname = cepinvap296.centura.org

site = CENTURA

host_type = OCUM

data_update_freq = 300

auth_type = password

username = netapp-harvest

password = XXXXXX

normalized_xfer = gb_per_sec

template = ocum-opm-hierarchy.conf

graphite_root = netapp-capacity.Clusters.{display_name}

graphite_meta_metrics_root = netapp-capacity-poller.{site}

Here is an example poller entry for a CDOT NetApp within the same /opt/netapp-harvest/netapp-harvest.conf file that the OCUM poller entry resides:

[r1ppkrntap01]

hostname = r1ppkrntap01.corp.centura.org

site = CENTURA

host_enabled = 1

- NOTE: All other CDOT entries look the same, except, obviously, the hostname

In our OCUM (6.4) server, all the CDOT NetApp clusters are defined in lower case

On my Harvest linux machine, these are the directories that have been created by Harvest:

/var/lib/graphite/whisper/netapp/perf/CENTURA/

/var/lib/graphite/whisper/netapp/capacity/CENTURA/

/var/lib/graphite/whisper/netapp-performance/Clusters/

/var/lib/graphite/whisper/netapp-capacity/Clusters/

All directories have 34 subdirs, one for each NetApp cluster, except the .../netapp/capacity/... directory, which has only 18 subdirs.

When I do a whisper-dump on any of the files in cluster subdirs of .../netapp/capacity/..., I see one entry, way back in 2016, and all other entries are "0, 0"

When I do whisper-dump on any of the files in cluster subdirs of .../netapp-capacity/... I see up-to-date entries with valid values

Also, the capacity graphs in Grafana all seem to use queries against the .../netapp/capacity/... data:

- Example (the Top SVM Capacity Used Percent graph from the "Cluster Group" dashboard):

- aliasByNode(sortByMaxima(highestAverage(netapp.capacity.$Group.$Cluster.svm.*.vol_summary.afs_used_percent, $TopResources)), 3, 5)

I would think these graphs would need to pull data from netapp-capacity.Clusters.$Cluster.

I think I have everything set up, because I see this in my OCUM Harvest poller log file whenever I start it up:

[2017-04-20 11:58:36] [NORMAL ] WORKER STARTED [Version: 1.2.2] [Conf: netapp-harvest.conf] [Poller: OCUM_CEPINVAP296]

[2017-04-20 11:58:36] [NORMAL ] [main] Poller will monitor a [OCUM] at [cepinvap296.centura.org:443]

[2017-04-20 11:58:36] [NORMAL ] [main] Poller will use [password] authentication with username [netapp-harvest] and password [**********]

[2017-04-20 11:58:39] [WARNING] [sysinfo] Discovered [rclinvntap01] on OCUM server with no matching conf section; to collect this cluster please add a section

[2017-04-20 11:58:39] [WARNING] [sysinfo] Discovered [rclascntap01] on OCUM server with no matching conf section; to collect this cluster please add a section

[2017-04-20 11:58:39] [NORMAL ] [main] Collection of system info from [cepinvap296.centura.org] running [6.4] successful.

[2017-04-20 11:58:39] [NORMAL ] [main] Using specified collection template: [ocum-opm-hierarchy.conf]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1psahntap01] for host [r1psahntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.cepmmcntap01] for host [cepmmcntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.b1pinvntap01] for host [b1pinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.impascntap01] for host [impascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1psmcntap01] for host [r1psmcntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1psfmntap01] for host [r1psfmntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1pluhntap01] for host [r1pluhntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.b1pascntap01] for host [b1pascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1psumntap01] for host [r1psumntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.vnpascntap01] for host [vnpascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1pstmntap01] for host [r1pstmntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.cepascntap01] for host [cepascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.eppascntap01] for host [eppascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.uatinvntap01] for host [uatinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1pcrantap01] for host [r1pcrantap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1psncntap01] for host [r1psncntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.v1pascntap01] for host [v1pascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1ppahntap01] for host [r1ppahntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.impinvntap01] for host [impinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1paahntap01] for host [r1paahntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1pbwhntap01] for host [r1pbwhntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.cepinvntap01] for host [cepinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.vnpinvntap01] for host [vnpinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1ppkrntap01] for host [r1ppkrntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.eppinvntap01] for host [eppinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.v1pinvntap01] for host [v1pinvntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1ppenntap01] for host [r1ppenntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1pmmcntap01] for host [r1pmmcntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1plahntap01] for host [r1plahntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.r1pschntap01] for host [r1pschntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Calculated graphite_root [netapp-capacity.Clusters.g3pascntap01] for host [g3pascntap01]

[2017-04-20 11:58:39] [NORMAL ] [main] Using graphite_meta_metrics_root [netapp-capacity-poller.CENTURA]

[2017-04-20 11:58:39] [NORMAL ] [main] Startup complete. Polling for new data every [300] seconds.


The dashboards imported by Harvest assume you use Harvest to collect both performance and capacity information. By default information is submitted for perf under netapp.perf.<site>.<cluster> and capacity under netapp.capacity.<site>.<cluster>. To use these defaults your OCUM entry should look like:

[OCUM_CEPINVAP296]
hostname = cepinvap296.centura.org
site = CENTURA
host_type = OCUM
data_update_freq = 300
auth_type = password
username = netapp-harvest
password = XXXXXX

With a config like above the default dashboards should show information.

Your current config is customized to align the Harvest-submitted capacity metrics hierarchy (netapp-capacity.Clusters.*) with the metrics submitted by the OPM 'external data provider' (netapp-performance.Clusters.*). Harvest does not provide any default dashboards aligned with the OPM hierarchy, so you'd have to create your own Grafana dashboard panels if you go this route.
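The mismatch can be seen by expanding both graphite_root templates for one cluster and comparing them with the prefix the imported capacity panels query (netapp.capacity.$Group.$Cluster, where $Group plays the role of the site). Below is a minimal Python sketch. The placeholder spelling of the default root is an assumption chosen to mirror the customized entry, and `expand_root` and `dashboard_prefix` are hypothetical helpers.

```python
# Default capacity layout described above (netapp.capacity.<site>.<cluster>);
# ASSUMPTION: this placeholder spelling mirrors the customized entry.
DEFAULT_ROOT = "netapp.capacity.{site}.{display_name}"
# Customized, OPM-aligned root from the poller entry in the question.
OPM_ALIGNED_ROOT = "netapp-capacity.Clusters.{display_name}"


def expand_root(template: str, site: str, cluster: str) -> str:
    """Fill the placeholders, like the 'Calculated graphite_root [...]' log lines."""
    return template.format(site=site, display_name=cluster)


def dashboard_prefix(group: str, cluster: str) -> str:
    """Prefix the imported capacity panels query: netapp.capacity.$Group.$Cluster."""
    return f"netapp.capacity.{group}.{cluster}"


for template in (DEFAULT_ROOT, OPM_ALIGNED_ROOT):
    root = expand_root(template, "CENTURA", "r1ppkrntap01")
    # Only a root that equals the dashboard prefix will populate the default panels.
    print(root, root == dashboard_prefix("CENTURA", "r1ppkrntap01"))
```

Only the default layout lines up with the panels, which is why removing the custom template/graphite_root entries lets the imported dashboards show data.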

Hope this helps!

Cheers,

Chris Madden

Solution Architect - 3rd Platform - Systems Engineering NetApp EMEA (and author of Harvest)



Chris,

Thanks for the quick response! If I understand your response correctly, I need to modify my [OCUM_CEPINVAP296] entry by commenting out (or deleting) the last five entries shown below:

[OCUM_CEPINVAP296]
hostname = cepinvap296.centura.org
site = CENTURA
host_type = OCUM
data_update_freq = 300
auth_type = password
username = netapp-harvest
password = XXXXXX
# normalized_xfer = gb_per_sec
# template = ocum-opm-hierarchy.conf
# graphite_root = netapp-capacity.Clusters.{display_name}
# graphite_meta_metrics_root = netapp-capacity-poller.{site}

If I do that, do I then just need to stop and start all my pollers for Harvest-collected capacity stats to start being collected, or do I need to do anything more drastic, such as purging the /var/lib/graphite/whisper/netapp/capacity/CENTURA/ directory and its subdirs?

Rick