EF & E-Series, SANtricity, and Related Plug-ins

First storage installation - NetApp e-2824 - questions about iSCSI performance

Hi,

My name is Carlos and I'm a System Administrator at a payroll company in Canada.

Recently, we purchased a NetApp E2824 to connect to our 2 VMware servers in order to improve performance and availability.

However, when we finally finished the installation and started testing, we noticed that performance was very low.

The SAN has 2 controllers working in load-balancing mode, and each of them has 2 x 10 Gb/s ports.

We basically connected the SAN to a 24-port 1 Gb/s switch and connected our VMware servers' 1 Gb/s ports to the same switch.

We also isolated this system in a dedicated VLAN, with IP addressing different from the other network connected to the same switch.

Below is a summary of the connections:

SAN Controller A port e0a (10 Gb/s) <------------> Switch port (1 Gb/s) [VLAN 2] Switch port (1 Gb/s) <------------> VMware server 1 eth0

SAN Controller A port e0b (10 Gb/s) <------------> Switch port (1 Gb/s) [VLAN 2] Switch port (1 Gb/s) <------------> VMware server 1 eth1

SAN Controller B port e0a (10 Gb/s) <------------> Switch port (1 Gb/s) [VLAN 2] Switch port (1 Gb/s) <------------> VMware server 2 eth0

SAN Controller B port e0b (10 Gb/s) <------------> Switch port (1 Gb/s) [VLAN 2] Switch port (1 Gb/s) <------------> VMware server 2 eth1

We're using iSCSI and jumbo frames (on all equipment).

I believe the performance is low because we're using 1 Gb/s links between all the equipment, but I'm not familiar with storage installations or with iSCSI.

Does anybody know if there is any way to make this system perform well with the current hardware?

Or will we have to upgrade the switch and the servers' NICs to 10 Gb/s?

Thanks,

Carlos

Hi Carlos,

You are correct: since the switch only does 1 Gbit connectivity, every device, including the E-Series controllers, will only run at 1 Gbit on any connection, which means at most ~90 MByte/s. If throughput is much below 70 MByte/s, you probably need to check and double-check all of your jumbo frame settings.
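The 1 Gbit ceiling is easier to see with the arithmetic written out. The sketch below computes only a best-case ceiling for TCP payload on one link; it assumes IPv4 and TCP headers without options plus the standard 38 bytes of per-frame Ethernet overhead (preamble, header, FCS, inter-frame gap), and it ignores iSCSI headers, ACK traffic and array latency, so practical figures such as the ~90 MByte/s quoted above sit below it. The function name is illustrative.

```python
# Best-case TCP payload throughput on a single Ethernet link.
# Assumptions: IPv4 + TCP headers with no options, and the standard
# per-frame wire overhead. iSCSI PDU headers, ACK traffic and array
# latency are ignored, so real-world throughput will be lower.

ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # preamble/SFD + Ethernet header + FCS + inter-frame gap
IP_TCP_HEADERS = 20 + 20             # IPv4 header + TCP header


def max_payload_mb_per_s(link_gbit: float, mtu: int) -> float:
    """Return the best-case TCP payload rate in MB/s (10**6 bytes per second)."""
    payload = mtu - IP_TCP_HEADERS         # TCP payload bytes carried per frame
    on_wire = mtu + ETH_WIRE_OVERHEAD      # bytes each frame occupies on the wire
    line_rate_bytes = link_gbit * 1e9 / 8  # link speed converted to bytes per second
    return line_rate_bytes * payload / on_wire / 1e6


if __name__ == "__main__":
    for gbit in (1, 10):
        for mtu in (1500, 9000):
            print(f"{gbit:>2} Gbit/s, MTU {mtu}: ~{max_payload_mb_per_s(gbit, mtu):.0f} MB/s")
```

Under these assumptions one 1 Gbit link tops out near 119 MB/s with a 1500-byte MTU and near 124 MB/s with jumbo frames: jumbo frames trim per-frame overhead, but they cannot make a 1 Gbit path behave like a 10 Gbit one.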

If you connect multiple links on the VMware servers, you can use MPIO to balance I/O somewhat across them, but it won't significantly improve throughput. For that, you would need a 10 Gbit switch and 10 Gbit cards for each of your VMware servers.
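The MPIO point can be pictured with a small round-robin model. This is a hypothetical sketch, not VMware's path selection code: it assumes a fixed number of I/Os is sent down one path before rotating to the next, in the spirit of VMware's Round Robin policy (VMW_PSP_RR), whose default is commonly documented as 1,000 I/Os per path switch. The class name and path names are placeholders.

```python
from collections import Counter
from itertools import cycle


class RoundRobinSelector:
    """Hypothetical round-robin path selector: issue `ios_per_switch` I/Os on one
    path, then rotate to the next path in the list."""

    def __init__(self, paths, ios_per_switch=1000):
        if not paths:
            raise ValueError("need at least one path")
        if ios_per_switch < 1:
            raise ValueError("ios_per_switch must be at least 1")
        self._rotation = cycle(paths)
        self._limit = ios_per_switch
        self._current = next(self._rotation)
        self._issued = 0  # I/Os sent on the current path so far

    def next_path(self):
        if self._issued == self._limit:
            self._current = next(self._rotation)  # limit reached: move to the next path
            self._issued = 0
        self._issued += 1
        return self._current


if __name__ == "__main__":
    selector = RoundRobinSelector(["path-A", "path-B"], ios_per_switch=1000)
    print(Counter(selector.next_path() for _ in range(10_000)))
```

Balancing spreads I/Os evenly across the paths, but each I/O still travels over a single 1 Gbit link, so a host with two such links can at best approach twice one link's ceiling, which is still far below a single 10 Gbit link.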

Hope this helps!

Hi Alex,

Thanks for your answer.

Initially, we installed the SAN directly connected to the servers, but we were told that this kind of installation wouldn't work.

It actually worked, but then we decided to connect them to one of our switches to see if performance would be better.

Performance stayed the same with both architectures, but if we go with the second one we'll need to upgrade the switch as well.

My plan is to install 10 Gb/s Ethernet modules in the servers and roll back to the original installation, with the SAN and servers connected directly.

Does anybody know if, by doing that, I'll be able to get them connected at 10 Gb/s? (I think it should work.)

Then, if that works, we'll set up a project over the next 6 months to add 2 x 10 Gb/s switches (for HA) in order to improve the installation.

Hi Carlos,

On the E-Series controllers, we do support direct connect for iSCSI with VMware, meaning you don't need a switch, but having one does allow for better resiliency and multipathing in your storage network. To get 10 Gb connectivity, you will need appropriate cables (optical, Twinax, or 10GBASE-T) supported by both the controller and the server, and ideally by any future switch you get.

Approval of the direct connect configuration is detailed in our Interoperability Matrix Tool (IMT), and more information is given in our VMware Power Guide for E-Series document (page 34).

Hope this helps!

Hi Alex,

Just to check that I understood correctly:

If I want to connect the SAN directly to the VMware servers, I'll need to add a pair of 10 Gb/s ports to each of those servers and also buy the specific cables to interconnect them, right?

Thanks,

Carlos

Correct, although you can also do it with a single card or with single-port cards.

I've had good luck with Intel X520 cards and Cisco Twinax cables.

Regarding performance, I meant the experience in general.

Our servers have local storage, and I also presented the SAN storage to them.

When I power on a VM hosted on local storage, it takes maybe 20 seconds to start.

When I do the same with a VM hosted on the SAN, it probably takes more than a minute.

I configured jumbo frames on all devices to optimize performance, but I think it's a matter of connection speed between the devices.

That sounds more like a latency issue than a throughput issue, as booting a server is unlikely to max out a 1 Gb link.

Take a look at the Delayed ACK configuration.

Beyond that, I think you need to get familiar with the stats counters in VMware rather than relying solely on observing system performance.

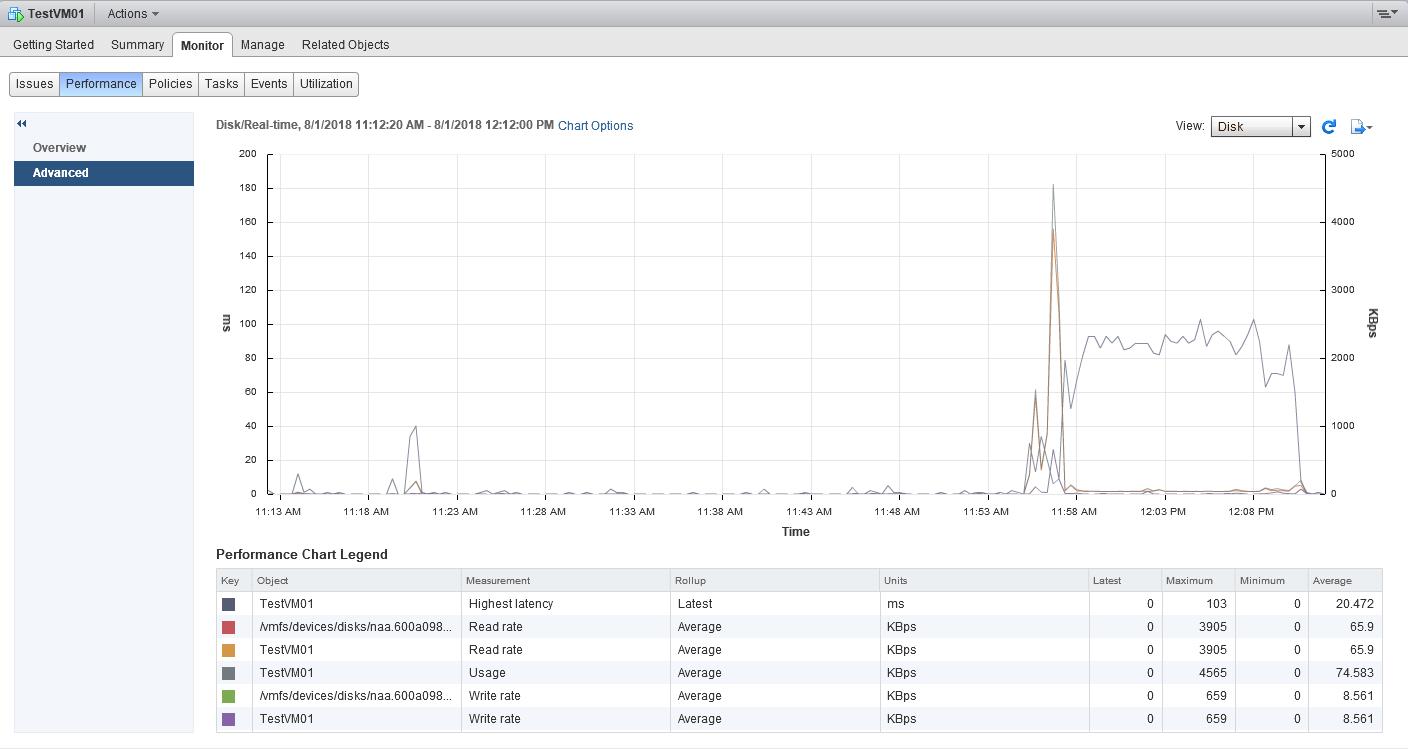

Log in to vCenter, browse to your VM > Monitor > Performance, then select Disk from the drop-down.

By default, this shows you the latency and throughput of your storage.

For flash storage, even over a 1 Gb link, you shouldn't see much over 1 ms of latency. For disk storage with no flash caching, expect values not too different from the internal storage on your host (~20 ms if busy, <10 ms ideally).

I suspect you will see much higher values, in which case disable Delayed ACK.
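The rule-of-thumb ranges above can be applied to a batch of latency readings copied from the performance chart. This is a minimal sketch under stated assumptions: it takes the median of the samples, treats 1 ms as the cut-off for flash and 10 ms / 20 ms as the boundaries for spinning disk, and the function name and verdict labels are hypothetical.

```python
from statistics import median


def assess_latency(samples_ms, flash=False):
    """Return (median latency in ms, verdict) using the rule of thumb above:
    flash around 1 ms; spinning disk under 10 ms ideally, ~20 ms when busy."""
    if not samples_ms:
        raise ValueError("no latency samples given")
    typical = median(samples_ms)  # median is less sensitive to single spikes than the mean
    if flash:
        verdict = "ok" if typical <= 1.0 else "high"  # assumed 1 ms cut-off for flash
    elif typical < 10:
        verdict = "ok"
    elif typical <= 20:
        verdict = "busy"
    else:
        verdict = "high"
    return typical, verdict
```

Feeding it the read and write latency values from the Disk chart during a slow VM boot gives a quick check of whether the numbers are in the "high" range that points at Delayed ACK or another latency problem.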

Handy hint: VMware stats are accurate, but the interface is slow and clunky. Get Veeam One (free), install it on a Windows box and point it at your virtual infrastructure. It will scoop up all your stats and give you a nice, snappy interface to view up to 1 week of data. There is also a host of alarms you can configure if you don't already have monitoring in place.

Hi Fizzer,

I followed your instructions and posted the results on this thread.

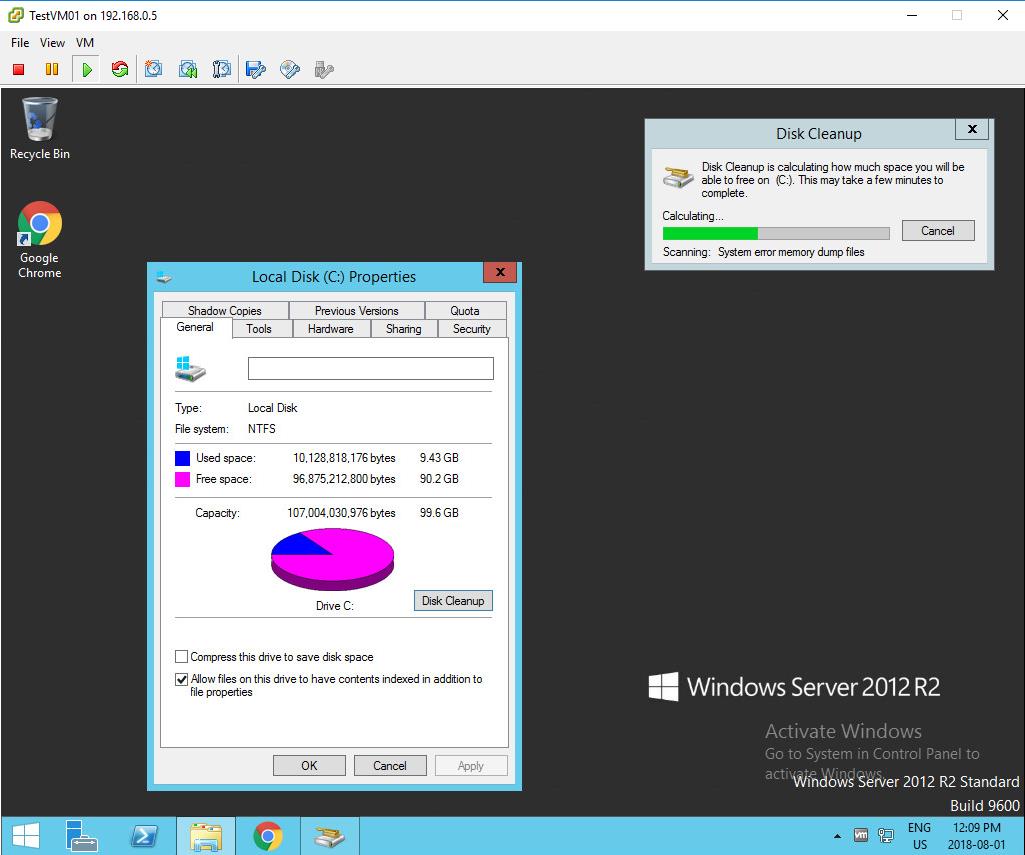

The fist screen shows what I was doing on the VM (below).

Then, looking at the vCenter graphic we see that before and after cleaning C: there was almost no activity, but during that the figures were much higher (below).

I used Veeam One about 1.5 year ago and I had a bad experience with that, but obviously I can take another shot at that.

I'm just not sure on how to fix this. 🙂

Thanks,

Carlos

I would wager someone else's monthly pay packet that this is a Delayed ACK issue.

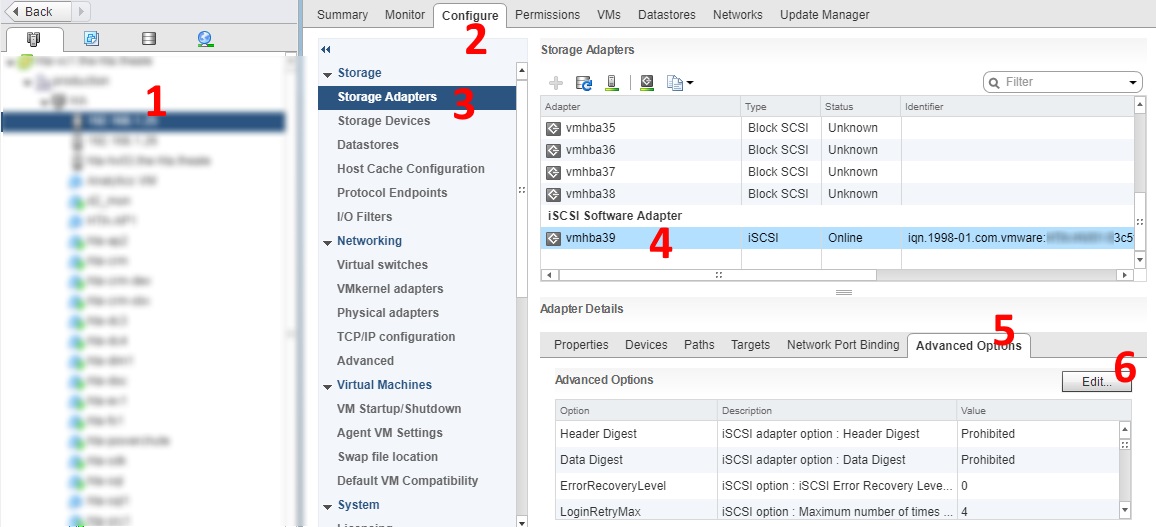

Login to vsphere

- Select your host - put it into maintenance mode

- configure tab

- select storage adapters

- select your iscsi adapter

- advanced options

- edit

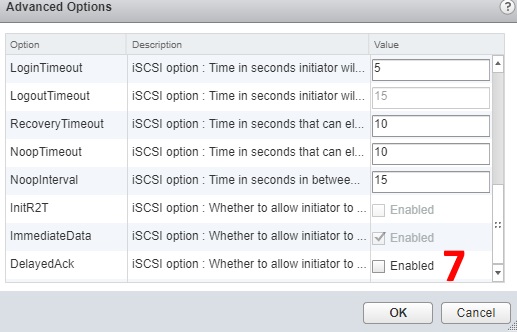

- Scroll down to 'DelayedAck' - uncheck

- restart host, exit maintenance mode

- retry your tests

- repeat for all other hosts connected to the array
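With several hosts, the same change can be scripted rather than clicked through. The sketch below only builds command strings and executes nothing. It assumes the `esxcli iscsi adapter param set` / `get` subcommands with a `DelayedAck` key are available on your ESXi build (check your version's esxcli reference before running anything), `vmhba33` is a hypothetical adapter name, and the maintenance-mode and reboot steps above still apply.

```python
import re

# Assumed naming pattern for ESXi host bus adapters, e.g. "vmhba33" (hypothetical example).
ADAPTER_NAME = re.compile(r"vmhba\d+")


def delayed_ack_commands(adapter):
    """Return esxcli command lines that would disable DelayedAck on one iSCSI
    adapter and then list the adapter's parameters to confirm the change.
    Nothing is executed here; the strings are meant to be reviewed first."""
    if not ADAPTER_NAME.fullmatch(adapter):
        # Refuse anything that does not look like an adapter name, so no
        # unexpected text ends up in the generated shell command.
        raise ValueError(f"unexpected adapter name: {adapter!r}")
    return [
        f"esxcli iscsi adapter param set --adapter={adapter} --key=DelayedAck --value=false",
        f"esxcli iscsi adapter param get --adapter={adapter}",
    ]


if __name__ == "__main__":
    for line in delayed_ack_commands("vmhba33"):  # hypothetical adapter name
        print(line)
```

The second command is there so the change can be verified on each host before retrying the tests.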

Let us know how you get on.

Hi Fizzer,

Thank you very much for the valuable information, and sorry about the late reply.

I needed to schedule a maintenance window in order to shut down all the VMs on one of the hosts and try that configuration.

I did that yesterday and it was a heck of a day.

We're still running ESXi 5.5 on our 2 servers and vCenter 6.0 on the management server.

After applying the configuration on VMware server 2, I had to reboot that host, and that was the beginning of my nightmare.

I wasn't aware of a bug in that version of ESXi, when using iSCSI, that makes restarts really slow.

When I restarted the server it wouldn't come back, so after 45 minutes of waiting I decided to go to the company office.

When I arrived, the ESXi loading progress bar was at around 5%, and I had to wait 5 hours until the system loaded completely and came back online.

Then I turned on all the VMs and everything went back to normal.

This morning I did some testing comparing the VM on the host where I disabled DelayedAck (TestVM02) with the one on the host where it is still enabled (TestVM01).

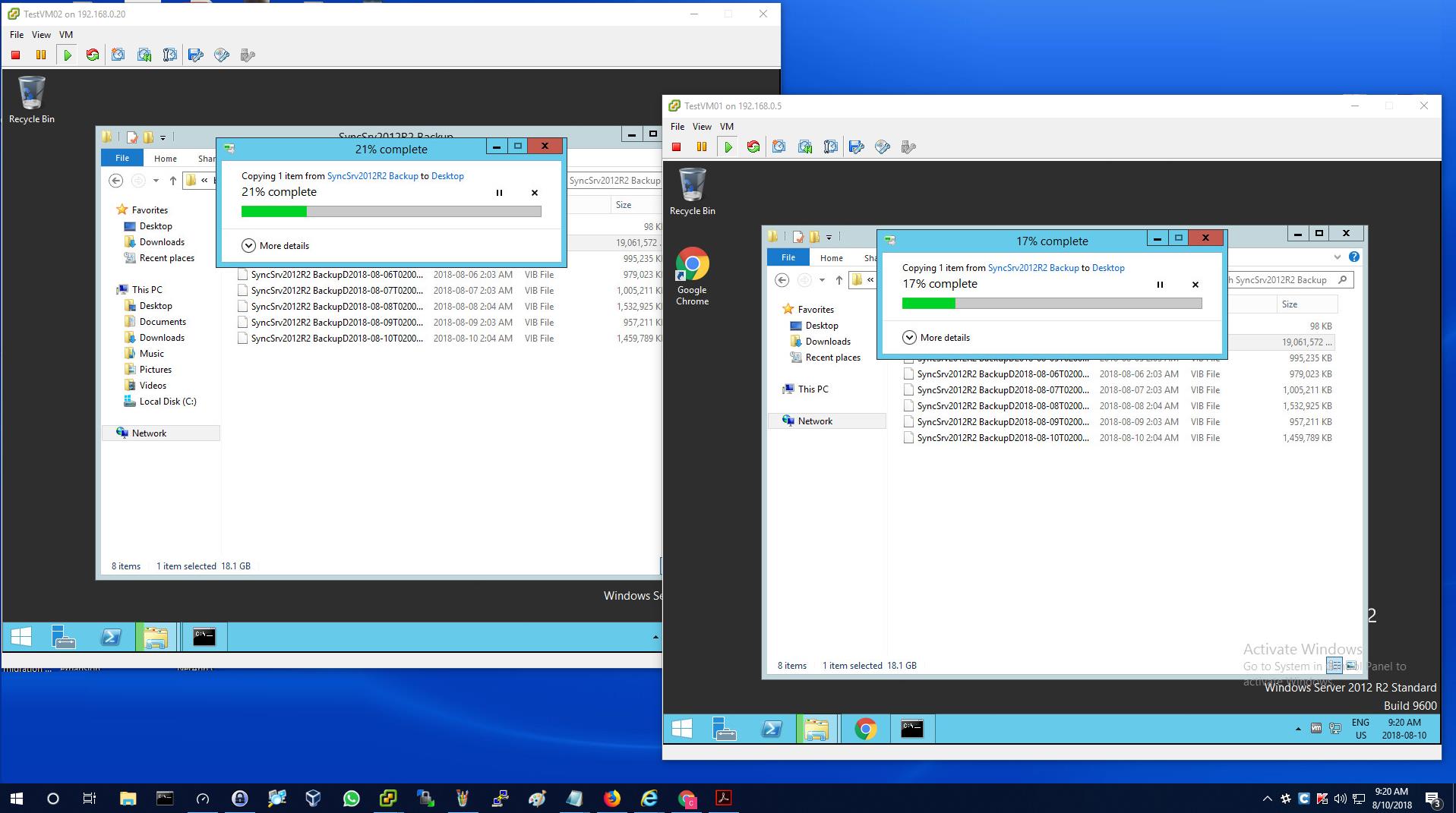

To do that, I downloaded a 20 GB file from a NAS to both VMs at the same time (picture below).

Then I opened vSphere -> Performance for each VM and compared the 2 servers to see whether TestVM02 performed better (picture below).

So far, the results seem inconclusive to me.

Both charts have similar patterns and vary within the same range (note that the axis scale in each chart is slightly different).

However, some numbers (marked with a red rectangle) are very different on each host.

Now I need to understand what exactly those numbers represent, and whether they mean that performance is better on TestVM02.
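Because the two charts use slightly different axis scales, comparing them by eye is hard. One hedged alternative is to export the samples for the same counter and time window from both VMs and compare summary statistics. The sketch assumes the samples are already plain lists of numbers (for example, latency in ms); the export step is not shown, and `summarize` / `compare` are hypothetical names.

```python
from statistics import mean, median, quantiles


def summarize(samples):
    """Mean, median and 95th percentile of one VM's exported counter samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    # method="inclusive" keeps the estimate within the observed min/max.
    p95 = quantiles(samples, n=20, method="inclusive")[-1]
    return {"mean": mean(samples), "median": median(samples), "p95": p95}


def compare(baseline, candidate):
    """Percent change of each statistic from baseline to candidate.
    For latency counters, a negative value means the candidate is faster."""
    before, after = summarize(baseline), summarize(candidate)
    return {
        key: (after[key] - before[key]) / before[key] * 100 if before[key] else float("nan")
        for key in before
    }
```

For example, `compare(testvm01_latency, testvm02_latency)` (hypothetical variable names for the two exported lists) gives one percentage per statistic instead of two charts with different scales. A single run can be noisy, so repeating the copy a few times makes any difference more convincing.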

We're planning to move one of our production VMs from VMware server 2 to the storage and check how it behaves, but I still want to do more testing first.

Please let me know if you have any other ideas that could help improve performance.

Thanks again!!

@AlexDawson wrote:

Correct - you can do it with single cards/single port cards too.

I've had good luck with Intel X520 cards and Cisco TwinAx cables

Thanks for the tip!

I'll look for those.

When you say performance is low, what do you mean?

If you mean throughput is pegged at less than 100 MB/s, then yes, the switch you are using is the bottleneck. You could confirm this by checking the port stats in your switch's web interface or CLI.
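Port counters from the switch can be turned into a utilization figure with a little arithmetic. A sketch, assuming you read the same port's transmitted or received byte counter twice, a known number of seconds apart (how you read it, via web UI, CLI or SNMP, depends on the switch); the function name and parameters are hypothetical.

```python
def port_utilization(start_octets, end_octets, interval_s, link_gbit=1.0, counter_bits=64):
    """Fraction of link capacity used between two readings of a port's byte counter."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    delta = end_octets - start_octets
    if delta < 0:
        # Counter wrapped around once between readings (common with 32-bit counters).
        delta += 2 ** counter_bits
    bits_per_s = delta * 8 / interval_s
    return bits_per_s / (link_gbit * 1e9)
```

A result close to 1.0 during a large copy means the 1 Gbit port is saturated; a low value while the VMs still feel slow points back at latency rather than bandwidth.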

However, if you are seeing high latency, there could be a number of reasons for the issue:

Check and recheck your jumbo frame config!

If you are seeing high read latency only, you may want to disable Delayed ACK on your ESXi hosts:

https://kb.vmware.com/s/article/1002598

You can check the throughput you are getting with a tool like ATTO Disk Benchmark in one of your VMs. It generates lots of storage traffic, which is handy for finding issues and for checking whether changes you have made have had an impact.
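If installing a benchmark tool is not convenient, a crude sequential-write test can be run inside a VM with Python. This is a rough sketch, not a replacement for ATTO: it assumes Python 3 in the guest, measures only large sequential writes at a queue depth of one, and `sequential_write_mb_s` is a hypothetical helper. The `fsync` call is there so the timing includes flushing data to the virtual disk rather than just filling the guest's cache.

```python
import os
import tempfile
import time


def sequential_write_mb_s(directory, total_mb=256, block_kb=1024):
    """Write total_mb of data in block_kb chunks to a temporary file in `directory`
    and return the observed rate in MB/s (10**6 bytes/s). The file is deleted afterwards."""
    block = os.urandom(block_kb * 1024)  # incompressible data, reused for every write
    blocks = (total_mb * 1024) // block_kb
    fd, path = tempfile.mkstemp(dir=directory, suffix=".bench")
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # force the data out of the OS cache to the disk
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # clean up the test file even if a write fails
    return blocks * len(block) / elapsed / 1e6
```

For example, `sequential_write_mb_s("D:\\", total_mb=1024)` in a Windows guest whose D: drive sits on the SAN datastore. Running it before and after a change, such as disabling Delayed ACK, gives a simple like-for-like comparison.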