ONTAP Discussions

Re: AFF300 Problems with ADP shelf disk

Hi all,

I've moved an HA pair to a new site and followed the repurpose procedure:

I was converting from the aggregate container type to shared, but I have a problem with one of the two shelves: all of its disks are in the spare state instead of shared, as I intended.

I've tried options 9a and 9b twice, but I always get the same result. Below is the storage disk show output:

```
AFF-MI::> storage disk show
                     Usable           Disk    Container   Container
Disk                   Size Shelf Bay Type    Type        Name            Owner
---------------- ---------- ----- --- ------- ----------- --------------- --------

Info: This cluster has partitioned disks. To get a complete list of spare disk
      capacity use "storage aggregate show-spare-disks".
1.0.0               894.0GB     0   0 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.1               894.0GB     0   1 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.2               894.0GB     0   2 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.3               894.0GB     0   3 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.4               894.0GB     0   4 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.5               894.0GB     0   5 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.6               894.0GB     0   6 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.7               894.0GB     0   7 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.8               894.0GB     0   8 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.9               894.0GB     0   9 SSD     shared      aggr0_AFF_MI_02 AFF-MI-02
1.0.10              894.0GB     0  10 SSD     shared      -               AFF-MI-02
1.0.11              894.0GB     0  11 SSD     shared      -               AFF-MI-02
1.0.12              894.0GB     0  12 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.13              894.0GB     0  13 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.14              894.0GB     0  14 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.15              894.0GB     0  15 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.16              894.0GB     0  16 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.17              894.0GB     0  17 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.18              894.0GB     0  18 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.19              894.0GB     0  19 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.20              894.0GB     0  20 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.21              894.0GB     0  21 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.0.22              894.0GB     0  22 SSD     shared      -               AFF-MI-01
1.0.23              894.0GB     0  23 SSD     shared      -               AFF-MI-01
1.1.0                3.49TB     1   0 SSD     spare       Pool0           AFF-MI-01
1.1.1                3.49TB     1   1 SSD     spare       Pool0           AFF-MI-01
1.1.2                3.49TB     1   2 SSD     spare       Pool0           AFF-MI-01
1.1.3                3.49TB     1   3 SSD     spare       Pool0           AFF-MI-01
1.1.4                3.49TB     1   4 SSD     spare       Pool0           AFF-MI-01
1.1.5                3.49TB     1   5 SSD     spare       Pool0           AFF-MI-01
1.1.6                3.49TB     1   6 SSD     spare       Pool0           AFF-MI-01
1.1.7                3.49TB     1   7 SSD     spare       Pool0           AFF-MI-01
1.1.8                3.49TB     1   8 SSD     spare       Pool0           AFF-MI-01
1.1.9                3.49TB     1   9 SSD     spare       Pool0           AFF-MI-01
1.1.10               3.49TB     1  10 SSD     spare       Pool0           AFF-MI-01
1.1.11               3.49TB     1  11 SSD     spare       Pool0           AFF-MI-01
36 entries were displayed.
```

How can I convert the spare disks to shared?

Thanks
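To spot the unpartitioned shelf quickly in a storage disk show listing like the one above, here is a minimal Python sketch (my own helper, not a NetApp tool) that counts container types per shelf. It assumes the eight whitespace-separated columns shown above and that no field contains spaces; the function names and the trimmed `SAMPLE` rows are illustrative only.

```python
from collections import defaultdict

# A few rows copied from the storage disk show output above (trimmed for brevity).
SAMPLE = """\
1.0.10              894.0GB     0  10 SSD     shared      -               AFF-MI-02
1.0.12              894.0GB     0  12 SSD     shared      aggr0_AFF_MI_01 AFF-MI-01
1.1.0                3.49TB     1   0 SSD     spare       Pool0           AFF-MI-01
1.1.11               3.49TB     1  11 SSD     spare       Pool0           AFF-MI-01
"""


def container_types_by_shelf(text):
    """Count container types per shelf from 'storage disk show' rows.

    Assumes eight whitespace-separated columns: Disk, Usable Size, Shelf, Bay,
    Disk Type, Container Type, Container Name, Owner. Header, separator and
    info lines are skipped because they either do not have eight fields or
    have non-numeric Shelf/Bay fields.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 8 or not (fields[2].isdigit() and fields[3].isdigit()):
            continue
        shelf, container_type = fields[2], fields[5]
        counts[shelf][container_type] += 1
    return {shelf: dict(types) for shelf, types in counts.items()}


def shelves_without_partitions(counts):
    """Shelves on which no disk has the 'shared' (partitioned) container type."""
    return sorted(shelf for shelf, types in counts.items() if types.get("shared", 0) == 0)


counts = container_types_by_shelf(SAMPLE)
print(counts)                              # {'0': {'shared': 2}, '1': {'spare': 2}}
print(shelves_without_partitions(counts))  # ['1']
```

Run against the full listing, this would flag shelf 1, since all twelve of its 3.49TB disks are spare with container name Pool0.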

Solved! See The Solution

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

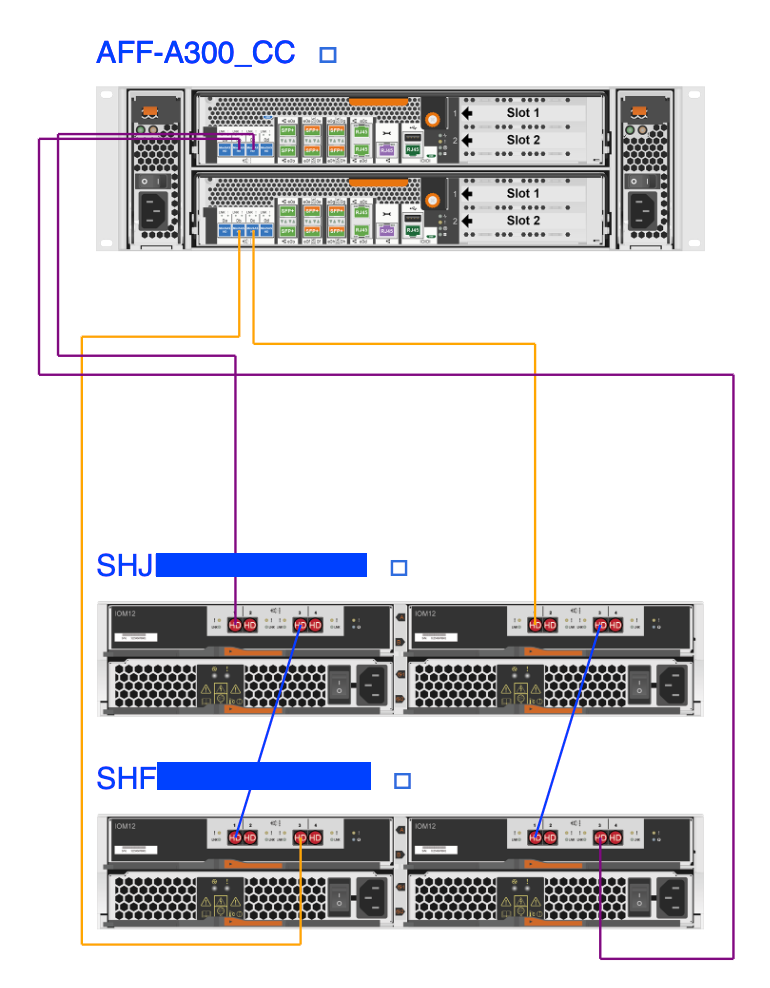

That can happen if cables are not properly connected.

If you have enough 2 m SAS cables (eight), I would cable the shelves as two separate stacks:

- Node 1, port 0a to shelf 1, IOM A, port 1
- Node 1, port 0d to shelf 1, IOM B, port 3
- Node 2, port 0a to shelf 1, IOM B, port 1
- Node 2, port 0d to shelf 1, IOM A, port 3
- Node 1, port 0c to shelf 2, IOM A, port 1
- Node 1, port 0b to shelf 2, IOM B, port 3
- Node 2, port 0c to shelf 2, IOM B, port 1
- Node 2, port 0b to shelf 2, IOM A, port 3

If not, use four long cables, with both shelves in a single stack:

- Node 1, port 0a to shelf 1, IOM A, port 1
- Node 1, port 0d to shelf 2, IOM B, port 3
- Node 2, port 0a to shelf 1, IOM B, port 1
- Node 2, port 0d to shelf 2, IOM A, port 3

Plus two daisy-chain cables:

- Shelf 1, IOM A, port 3 to shelf 2, IOM A, port 1
- Shelf 1, IOM B, port 3 to shelf 2, IOM B, port 1

If the cabling is not correct, halt the nodes, correct the cabling, and try again. If you are able to cable the shelves as two stacks instead of one, you should end up with ADP across all 48 SSDs. If not, I typically see ADP happen on only one shelf. ☹️

---
I hope you followed the steps as described in the KB. For 9b, it says to allow the first node to complete before executing it on the partner node.

Option 9a: Unpartition all disks and remove their ownership information. Execute on each node of the HA pair first.

Option 9b: Clean configuration and initialize system with partitioned disks. Allow first node to complete before executing on the partner node.
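The ordering quoted above (9a on each node first, then 9b on one node, and 9b on the partner only after the first node's 9b has completed) can be expressed as a small check over a log of steps. A hypothetical Python sketch: the step names `"9a"`, `"9b start"` and `"9b done"` and the function name are made up for illustration, and nothing here talks to the boot menu.

```python
def check_sequence(events, nodes=("node1", "node2")):
    """Check a hypothetical log of boot-menu steps against the order quoted above.

    events: list of (node, step) where step is "9a", "9b start" or "9b done".
    Returns a list of problems; an empty list means the order looks right.
    """
    problems = []
    ran_9a = set()
    started_9b = []  # nodes in the order they started 9b
    finished_9b = set()
    for node, step in events:
        if step == "9a":
            if started_9b:
                problems.append(f"{node}: 9a after 9b had already started")
            ran_9a.add(node)
        elif step == "9b start":
            # Rule 1: 9a must have run on every node of the HA pair first.
            if ran_9a != set(nodes):
                problems.append(f"{node}: 9b started before 9a ran on every node")
            # Rule 2: the partner waits until the first node's 9b has completed.
            if started_9b and started_9b[0] not in finished_9b:
                problems.append(f"{node}: 9b started before {started_9b[0]} finished 9b")
            started_9b.append(node)
        elif step == "9b done":
            finished_9b.add(node)
        else:
            problems.append(f"{node}: unknown step {step!r}")
    return problems


GOOD = [("node1", "9a"), ("node2", "9a"),
        ("node1", "9b start"), ("node1", "9b done"),
        ("node2", "9b start"), ("node2", "9b done")]
TOO_EARLY = [("node1", "9a"), ("node2", "9a"),
             ("node1", "9b start"), ("node2", "9b start"),
             ("node1", "9b done"), ("node2", "9b done")]
print(check_sequence(GOOD))       # []
print(check_sequence(TOO_EARLY))  # ['node2: 9b started before node1 finished 9b']
```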

---
It's possible that, with this cabled as one stack, ADP will only be initialized on one shelf.

---
I suggest first updating ONTAP from the boot menu (option 7), then trying 9a and 9b again.

If the disks are still not properly partitioned, you can partition the remaining disks manually after cluster setup, at diagnostic privilege:

```
set d
disk create-partition -source-disk 1.0.0 -target-disk 1.1.0
disk create-partition -source-disk 1.0.0 -target-disk 1.1.1
```
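Since the same command has to be repeated for each unpartitioned disk, a few lines of Python can print the whole list for review. A sketch only: it reuses the syntax and source disk 1.0.0 from the commands above, assumes the targets are bays 0 to 11 of shelf 1 (the spare disks 1.1.0 to 1.1.11 in the original output), and only prints text; `create_partition_commands` is a hypothetical helper name.

```python
def create_partition_commands(source_disk, target_shelf, bays):
    """Return one 'disk create-partition' line per target bay (text only).

    Reuses the syntax from the post above; target disks are named
    '<target_shelf>.<bay>', e.g. '1.1.0'. Paste at diagnostic privilege ('set d').
    """
    return [
        f"disk create-partition -source-disk {source_disk} -target-disk {target_shelf}.{bay}"
        for bay in bays
    ]


# Assumption: the unpartitioned disks are 1.1.0 to 1.1.11, as in the original output.
for command in create_partition_commands("1.0.0", "1.1", range(12)):
    print(command)
```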


Maybe you’ll have to manually assign the partitions to the controllers afterwards:

```
disk assign -disk 1.1.* -data1 true -owner <controller 1>
disk assign -disk 1.1.* -data2 true -owner <controller 2>
```

Hope this helps.

---
Respectfully, I disagree. To maximize utilization, re-cable into two stacks as I described above. Updating ONTAP first is very wise, but making two stacks will make the root partition slightly smaller and yield more capacity for data.

---
Is this behaviour documented somewhere, i.e. that ADPv2 partitions only 24 disks per stack?

As far as I knew, ADPv2 partitions up to 48 disks; I did not know about the rule regarding stacks.

I figured that in this case it might not auto-partition because of the different disk sizes (894.0GB vs. 3.49TB)...

---
Not sure if it is documented.

I know it from experience: it has happened to me, and making two stacks fixed it. I know there is some logic to how disks are assigned (by bay, stack, and shelf), and I believe it has changed.

I just make it a rule, especially with SSDs, to use as many stacks as possible, both to squeeze out all possible performance and to allow ADPv2 across up to 48 drives.

I always have my sales teams use two stacks when there are two SSD shelves.