ONTAP Discussions

FAS2620: A disk is a member of 2 aggregates


Hi everyone,

We've just initialized a dual-controller FAS2620 running ONTAP 9.4P3. After initialization, the storage shows two aggregates:

    FAS2620::> storage aggr show
    Aggregate     Size Available Used% State   #Vols  Nodes            RAID Status
    --------- -------- --------- ----- ------- ------ ---------------- ------------
    aggr0_FAS2620_01
               736.8GB   35.73GB   95% online       1 FAS2620-01       raid_dp,
                                                                           normal
    aggr0_FAS2620_02
               736.8GB   35.73GB   95% online       1 FAS2620-02       raid_dp,
                                                                           normal

    FAS2620::> aggr show -aggregate aggr0_FAS2620_01
    Aggregate: aggr0_FAS2620_01
    Storage Type: hdd
    Checksum Style: block
    Number Of Disks: 8
    Mirror: false
    Disks for First Plex: 1.0.1, 1.0.3, 1.0.5, 1.0.7,
                          1.0.9, 1.0.11, 1.1.1, 1.1.5
    Disks for Mirrored Plex: -
    Partitions for First Plex: -
    Partitions for Mirrored Plex: -
    Node: FAS2620-01
    Free Space Reallocation: off
    HA Policy: cfo
    Ignore Inconsistent: off
    Space Reserved for Snapshot Copies: 5%
    Aggregate Nearly Full Threshold Percent: 97%
    Aggregate Full Threshold Percent: 98%
    Checksum Verification: on
    RAID Lost Write: on
    Enable Thorough Scrub: off
    Hybrid Enabled: false
    Available Size: 35.73GB
    Checksum Enabled: true
    Checksum Status: active
    Cluster: FAS2620
    Home Cluster ID: 5ba70874-3a19-11ed-b553-00a098b7f9ce
    DR Home ID: -
    DR Home Name: -
    Inofile Version: 4
    Has Mroot Volume: true
    Has Partner Node Mroot Volume: false
    Home ID: 537099464
    Home Name: FAS2620-01
    Total Hybrid Cache Size: 0B
    Hybrid: false
    Inconsistent: false
    Is Aggregate Home: true
    Max RAID Size: 14
    Flash Pool SSD Tier Maximum RAID Group Size: -
    Owner ID: 537099464
    Owner Name: FAS2620-01
    Used Percentage: 95%
    Plexes: /aggr0_FAS2620_01/plex0
    RAID Groups: /aggr0_FAS2620_01/plex0/rg0 (block)
    RAID Lost Write State: on
    RAID Status: raid_dp, normal
    RAID Type: raid_dp
    SyncMirror Resync Snapshot Frequency in Minutes: 5
    Is Root: true
    Space Used by Metadata for Volume Efficiency: 0B
    Size: 736.8GB
    State: online
    Maximum Write Alloc Blocks: 0
    Used Size: 701.1GB
    Uses Shared Disks: true
    UUID String: 37bac9c4-6276-4df1-a4e6-7c0683275a65
    Number Of Volumes: 1
    Is Flash Pool Caching: -
    Is Eligible for Auto Balance Aggregate: false
    State of the aggregate being balanced: ineligible
    Total Physical Used Size: 8.16GB
    Physical Used Percentage: 1%
    State Change Counter for Auto Balancer: 0
    Is Encrypted: false
    SnapLock Type: non-snaplock
    Encryption Key ID: -
    Is in the precommit phase of Copy-Free Transition: false
    Is a 7-Mode transitioning aggregate that is not yet committed in clustered Data ONTAP and is currently out of space: false
    Threshold When Aggregate Is Considered Unbalanced (%): 70
    Threshold When Aggregate Is Considered Balanced (%): 40
    Resynchronization Priority: -
    Space Saved by Data Compaction: 0B
    Percentage Saved by Data Compaction: 0%
    Amount of compacted data: 0B
    Timestamp of Aggregate Creation: 9/22/2022 01:49:19
    Enable SIDL: off
    Composite: false
    Capacity Tier Used Size: 0B
    Space Saved by Storage Efficiency: 0B
    Percentage of Space Saved by Storage Efficiency: 0%
    Amount of Shared bytes count by Storage Efficiency: 0B
    Inactive Data Reporting Enabled: false
    azcs-read-optimization Enabled: false

    FAS2620::> aggr show -aggregate aggr0_FAS2620_02
    Aggregate: aggr0_FAS2620_02
    Storage Type: hdd
    Checksum Style: block
    Number Of Disks: 8
    Mirror: false
    Disks for First Plex: 1.0.0, 1.0.2, 1.0.4, 1.0.6,
                          1.0.8, 1.0.10, 1.1.0, 1.1.4
    Disks for Mirrored Plex: -
    Partitions for First Plex: -
    Partitions for Mirrored Plex: -
    Node: FAS2620-02
    Free Space Reallocation: off
    HA Policy: cfo
    Ignore Inconsistent: off
    Space Reserved for Snapshot Copies: 5%
    Aggregate Nearly Full Threshold Percent: 97%
    Aggregate Full Threshold Percent: 98%
    Checksum Verification: on
    RAID Lost Write: on
    Enable Thorough Scrub: off
    Hybrid Enabled: false
    Available Size: 35.73GB
    Checksum Enabled: true
    Checksum Status: active
    Cluster: FAS2620
    Home Cluster ID: 5ba70874-3a19-11ed-b553-00a098b7f9ce
    DR Home ID: -
    DR Home Name: -
    Inofile Version: 4
    Has Mroot Volume: true
    Has Partner Node Mroot Volume: false
    Home ID: 537119449
    Home Name: FAS2620-02
    Total Hybrid Cache Size: 0B
    Hybrid: false
    Inconsistent: false
    Is Aggregate Home: true
    Max RAID Size: 14
    Flash Pool SSD Tier Maximum RAID Group Size: -
    Owner ID: 537119449
    Owner Name: FAS2620-02
    Used Percentage: 95%
    Plexes: /aggr0_FAS2620_02/plex0
    RAID Groups: /aggr0_FAS2620_02/plex0/rg0 (block)
    RAID Lost Write State: on
    RAID Status: raid_dp, normal
    RAID Type: raid_dp
    SyncMirror Resync Snapshot Frequency in Minutes: 5
    Is Root: true
    Space Used by Metadata for Volume Efficiency: 0B
    Size: 736.8GB
    State: online
    Maximum Write Alloc Blocks: 0
    Used Size: 701.1GB
    Uses Shared Disks: true
    UUID String: 692130e4-183b-42fd-97cf-26b1fba877c3
    Number Of Volumes: 1
    Is Flash Pool Caching: -
    Is Eligible for Auto Balance Aggregate: false
    State of the aggregate being balanced: ineligible
    Total Physical Used Size: 7.65GB
    Physical Used Percentage: 1%
    State Change Counter for Auto Balancer: 0
    Is Encrypted: false
    SnapLock Type: non-snaplock
    Encryption Key ID: -
    Is in the precommit phase of Copy-Free Transition: false
    Is a 7-Mode transitioning aggregate that is not yet committed in clustered Data ONTAP and is currently out of space: false
    Threshold When Aggregate Is Considered Unbalanced (%): 70
    Threshold When Aggregate Is Considered Balanced (%): 40
    Resynchronization Priority: -
    Space Saved by Data Compaction: 0B
    Percentage Saved by Data Compaction: 0%
    Amount of compacted data: 0B
    Timestamp of Aggregate Creation: 9/22/2022 01:51:17
    Enable SIDL: off
    Composite: false
    Capacity Tier Used Size: 0B
    Space Saved by Storage Efficiency: 0B
    Percentage of Space Saved by Storage Efficiency: 0%
    Amount of Shared bytes count by Storage Efficiency: 0B
    Inactive Data Reporting Enabled: false
    azcs-read-optimization Enabled: false

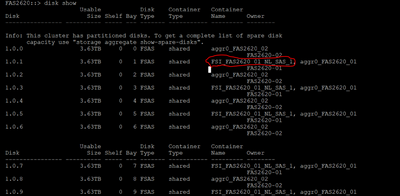

Above is the "disk show" result. Some disks appear to be members of two aggregates, and one of those aggregates is an orphan.

How can I remove the 8 disks from the orphan aggregate (FSI_FAS2620_01_NL_SAS_1)?

Thank you,
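A minimal Python sketch, assuming the "aggr show -aggregate" output has been copied as text like the excerpts in the question: it parses the "Field: value" lines, joins the wrapped disk list, and reports whether the aggregate is a root aggregate that uses shared disks. The function names and the trimmed SAMPLE text are illustrative only, not part of any NetApp tool.

```python
def parse_aggr_show(text):
    """Parse the 'Field: value' lines printed by 'aggr show -aggregate NAME'."""
    fields = {}
    last_key = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or "::>" in line:
            continue  # skip blank lines and the prompt/command line
        key, sep, value = line.partition(": ")
        if sep:
            last_key = key
            fields[key] = value.strip()
        elif last_key is not None:
            # A line without a "Field: " prefix continues the previous value,
            # e.g. the wrapped second line of "Disks for First Plex".
            fields[last_key] += " " + line
    return fields


def summarize(fields):
    """Pick out the fields relevant to the shared-disk question."""
    plex = fields.get("Disks for First Plex", "")
    disks = [d.strip() for d in plex.split(",") if d.strip() not in ("", "-")]
    return {
        "aggregate": fields.get("Aggregate"),
        "is_root": fields.get("Is Root") == "true",
        "uses_shared_disks": fields.get("Uses Shared Disks") == "true",
        "disks": disks,
    }


# Trimmed excerpt of the aggr0_FAS2620_01 output from the question.
SAMPLE = """\
FAS2620::> aggr show -aggregate aggr0_FAS2620_01
Aggregate: aggr0_FAS2620_01
Number Of Disks: 8
Disks for First Plex: 1.0.1, 1.0.3, 1.0.5, 1.0.7,
1.0.9, 1.0.11, 1.1.1, 1.1.5
Is Root: true
Uses Shared Disks: true
"""

info = summarize(parse_aggr_show(SAMPLE))
print(info["aggregate"], info["is_root"], info["uses_shared_disks"], len(info["disks"]))
```

On this system, a root aggregate reporting "Uses Shared Disks: true" is consistent with the disks being partitioned rather than dedicated whole to aggr0.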


I find that some disks are member of 2 aggregates?

That's expected: it is part of the ONTAP Advanced Drive Partitioning (ADP) feature for entry-level FAS2xxx, FAS9000, FAS8200 and FAS80xx systems. Root-data partitioning reduces the parity tax by spreading the root aggregate across disk partitions: each disk reserves one small partition for root and one large partition for data, so a single physical disk can belong to a root aggregate and a data aggregate at the same time.

This article explains it in more detail:

https://docs.netapp.com/us-en/ontap/concepts/root-data-partitioning-concept.html
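To make the "member of 2 aggregates" effect concrete, here is a toy Python model of root-data partitioning, assuming each physical disk has exactly one root partition and one data partition that can be assigned to different aggregates. The root-partition assignments for 1.0.0 and 1.0.1 follow the aggregate output in the question; the data-partition assignments are hypothetical, because the disk show output was only posted as a screenshot.

```python
from collections import defaultdict

# Hypothetical (disk, partition) -> aggregate map; None marks a spare partition.
PARTITIONS = {
    ("1.0.0", "root"): "aggr0_FAS2620_02",
    ("1.0.0", "data"): "FSI_FAS2620_01_NL_SAS_1",
    ("1.0.1", "root"): "aggr0_FAS2620_01",
    ("1.0.1", "data"): "FSI_FAS2620_01_NL_SAS_1",
    ("1.0.2", "root"): "aggr0_FAS2620_02",
    ("1.0.2", "data"): None,
}


def aggregates_per_disk(partitions):
    """Collect, for each physical disk, the aggregates its partitions belong to."""
    result = defaultdict(set)
    for (disk, _kind), aggr in partitions.items():
        if aggr is not None:
            result[disk].add(aggr)
    return dict(result)


def disks_in_multiple_aggregates(partitions):
    """Disks whose partitions are spread over more than one aggregate."""
    per_disk = aggregates_per_disk(partitions)
    return sorted(disk for disk, aggrs in per_disk.items() if len(aggrs) > 1)


print(disks_in_multiple_aggregates(PARTITIONS))
```

In this model nothing is wrong with disks 1.0.0 and 1.0.1: they simply carry two partitions owned by two different aggregates.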

One of these aggregates is an orphan aggregate?

What do you mean by "orphan" here?

How can I remove 8 disks from orphan aggregate (FSI_FAS2620_01_NL_SAS_1)?

I doubt you can do that, as these are partitioned disks. You may be able to destroy the unwanted aggregate, but I am guessing that will simply make the partitions available for adding to an existing aggregate or creating a new one.
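A toy Python sketch of that expectation, assuming the same simplified model of one root and one data partition per disk: deleting an aggregate returns its partitions to the spare pool, but the disks stay partitioned and the root partitions stay with their root aggregates. It illustrates the reasoning in this reply, not how ONTAP actually tracks spares; the partition map is hypothetical.

```python
# Hypothetical (disk, partition) -> aggregate map; None marks a spare partition.
PARTITIONS = {
    ("1.0.0", "root"): "aggr0_FAS2620_02",
    ("1.0.0", "data"): "FSI_FAS2620_01_NL_SAS_1",
    ("1.0.1", "root"): "aggr0_FAS2620_01",
    ("1.0.1", "data"): "FSI_FAS2620_01_NL_SAS_1",
}


def delete_aggregate(partitions, name):
    """Return a new map where the named aggregate's partitions become spares.

    The (disk, partition) keys are kept unchanged, so each disk remains split
    into the same partitions after the delete.
    """
    return {key: (None if aggr == name else aggr) for key, aggr in partitions.items()}


def spare_partitions(partitions):
    """List the (disk, partition) pairs not owned by any aggregate."""
    return sorted(key for key, aggr in partitions.items() if aggr is None)


after = delete_aggregate(PARTITIONS, "FSI_FAS2620_01_NL_SAS_1")
print(spare_partitions(after))
```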


Thank you for your reply.

During the reboot, I pressed Ctrl-C to display the boot menu when prompted.

The system displayed the following boot menu options:

    (1) Normal Boot.
    (2) Boot without /etc/rc.
    (3) Change password.
    (4) Clean configuration and initialize all disks.
    (5) Maintenance mode boot.
    (6) Update flash from backup config.
    (7) Install new software first.
    (8) Reboot node.
    Selection (1-8)?

I chose option 4 to clean the configuration and initialize all disks, then created a new cluster and joined the other node to it.

FSI_FAS2620_01_NL_SAS_1 is the old data aggregate and is no longer in use; it should have been cleared by the reinitialization. I just want to remove that aggregate to release its disks.

Thank you,


I think you need to get to the 'Special Boot menu'. Have a look at the two links I have shared.

Read the 2nd link, which is quite detailed (option 9a and then 9b).


Hello @fsi,

As @Ontapforrum mentioned, option 9 (specifically 9b) is used to initialize configurations that use Advanced Disk Partitioning, and option 4 is for non-partitioned systems.

If you want to use Advanced Disk Partitioning, you will have to re-initialize the system using option 9b. If you are content with the existing configuration and only want to remove the stale orphan aggregate, you can run the following command from the clustershell: "storage aggregate delete -aggregate AGGRNAME".
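A small Python sketch of a safety check before running that command, assuming the aggregate's details have been collected as "Field: value" pairs from "aggr show -aggregate NAME". The helper only builds the command string; it refuses root aggregates ("Is Root" or "Has Mroot Volume" set to true), since those hold a node's root volume. The name pattern is a conservative assumption rather than ONTAP's exact naming rule, and the field values for the stale aggregate are hypothetical.

```python
import re

# Conservative aggregate-name check (an assumption, not ONTAP's exact rule).
_NAME_RE = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def delete_aggregate_command(fields):
    """Build the clustershell command for removing a stale aggregate.

    fields: 'Field: value' pairs taken from 'aggr show -aggregate NAME'.
    Raises ValueError for odd names and for root aggregates.
    """
    name = fields.get("Aggregate", "")
    if not _NAME_RE.fullmatch(name):
        raise ValueError(f"unexpected aggregate name: {name!r}")
    if fields.get("Is Root") == "true" or fields.get("Has Mroot Volume") == "true":
        raise ValueError(f"{name} is a root aggregate; leave it in place")
    return f"storage aggregate delete -aggregate {name}"


# Hypothetical field values for the stale data aggregate in this thread.
stale = {"Aggregate": "FSI_FAS2620_01_NL_SAS_1", "Is Root": "false"}
print(delete_aggregate_command(stale))
```

Passing the fields for aggr0_FAS2620_01 or aggr0_FAS2620_02 from the question would raise an error instead of producing a command.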

Option 4 wasn't designed to handle partitioned disks, which add complexity for SCSI reservations: each partition and the physical disk itself carry their own reservations, whereas traditionally only one SCSI reservation is placed on a given disk at a time. Mixed SCSI reservations can prevent a system from initializing a disk and/or partition, and the stale data left behind then shows up as orphan aggregates.
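A conceptual Python toy of the point about mixed reservations, assuming a partitioned disk carries one reservation for the physical disk plus one per partition, and that a wipe written for whole disks clears only the disk-level one. The class, fields and functions are hypothetical and do not reflect ONTAP's internal data structures.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class ToyDisk:
    """Conceptual partitioned disk: one disk-level reservation plus one
    reservation per partition (None means not reserved)."""
    name: str
    disk_reservation: Optional[str] = None
    partition_reservations: Dict[str, Optional[str]] = field(default_factory=dict)


def clear_disk_level_only(disk):
    """Mimic a wipe that only knows about whole-disk reservations."""
    disk.disk_reservation = None


def stale_partitions(disk):
    """Partitions that still hold a reservation after the wipe."""
    return sorted(p for p, owner in disk.partition_reservations.items() if owner is not None)


d = ToyDisk("1.0.1", "FAS2620-01", {"root": "FAS2620-01", "data": "FAS2620-01"})
clear_disk_level_only(d)
print(d.disk_reservation, stale_partitions(d))
```

In the toy, the disk looks clean at the whole-disk level while both partitions still carry state, which is the kind of leftover the reply describes.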

Here is a link to the ONTAP 9.4 Command Reference.

Regards,

Team NetApp


I have successfully removed the orphan aggregate. Here is what I did:

1. Halt node 2.

2. Boot node 1 into maintenance mode, run "disk remove_ownership all", reboot, and choose option 4 (wipeconfig).

Once the wipe is finished, go with option 9.

Do the same on node 2.

Thank you both for your recommendations.