ONTAP Discussions

- Re: Node Panic message

Hi

We have a node panic that occurs every weekend at the same time. We don't have a support contract, so I can't open a case.

But at least I would like to search myself for a possible cause. I've found a tool

When a system panics or crashes, the memory contents are saved as core files on the system. NetApp can analyze these core files to diagnose the reason for the panic and suggest corrective action. In the absence of a support contract, however, try to collect the related EMS and audit logs to get some hints.

As you mentioned, this occurs every weekend at the same time, which suggests a bug is associated with it. However, mapping this behavior to a specific bug needs investigation and some leads. Could you share the following:

1) Filer model? (Is all firmware, including the DQP, up to date?)

2) Data ONTAP / ONTAP version?

3) Which protocols are served?

4) If CIFS/SMB is used, then what is the output of the following command?

:>options cifs.smb2.durable_handle.enable

Thank you for your answer.

In EMS I can see that everything started with the following error:

1/29/2023 08:00:52 CLUSTER002 ALERT vifmgr.cluscheck.droppedall: Total packet loss when pinging from cluster lif CLUSTER-02_clus1 (node NODE002) to cluster lif CLUSTER-01_clus2 (node NODE001).

The ports should be connected directly (I will verify this).

| Vserver | Logical Interface | Status Admin/Oper | Network Address/Mask | Current Node | Current Port | Is Home |
|---|---|---|---|---|---|---|
| Cluster | CLUSTER-01_clus1 | up/up | 169.254.238.124/16 | NODE1 | e0a | true |
| Cluster | CLUSTER-01_clus2 | up/up | 169.254.18.86/16 | NODE1 | e0c | true |
| Cluster | CLUSTER-02_clus1 | up/up | 169.254.50.129/16 | NODE2 | e0a | true |
| Cluster | CLUSTER-02_clus2 | up/up | 169.254.2.44/16 | NODE2 | e0c | true |

1) FAS8040

2) NetApp Release 9.5P16: Tue Jan 12 10:52:07 UTC 2021

3) CIFS, NFS, FC

4) Option "cifs.smb2.durable_handle.enable" is not supported
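To check whether an alert really recurs in the same weekly slot, EMS lines in the format quoted above can be grouped by weekday and hour. The following is only a minimal sketch: the function names are made up for illustration, and it assumes each line starts with a `month/day/year hour:minute:second` timestamp, as in the single line quoted in this post.

```python
from collections import Counter
from datetime import datetime

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]

def parse_ems_timestamp(line):
    """Return the datetime at the start of an EMS line, or None if it does not parse."""
    parts = line.split()
    if len(parts) < 2:
        return None
    try:
        # Assumed layout: "1/29/2023 08:00:52 ..." (month/day/year, 24-hour time)
        return datetime.strptime(parts[0] + " " + parts[1], "%m/%d/%Y %H:%M:%S")
    except ValueError:
        return None

def weekly_slots(lines, event_name):
    """Count occurrences of event_name per (weekday name, hour) slot."""
    slots = Counter()
    for line in lines:
        if event_name not in line:
            continue
        ts = parse_ems_timestamp(line)
        if ts is not None:
            slots[(DAYS[ts.weekday()], ts.hour)] += 1
    return slots

# The only input here is the EMS line quoted in this thread.
sample = [
    "1/29/2023 08:00:52 CLUSTER002 ALERT vifmgr.cluscheck.droppedall: "
    "Total packet loss when pinging from cluster lif CLUSTER-02_clus1 "
    "(node NODE002) to cluster lif CLUSTER-01_clus2 (node NODE001).",
]
slots = weekly_slots(sample, "vifmgr.cluscheck.droppedall")
print(slots)
```

Fed with several weeks of EMS output, a single dominant slot would support the "same time every weekend" observation, while a spread across many slots would point away from a scheduled trigger.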

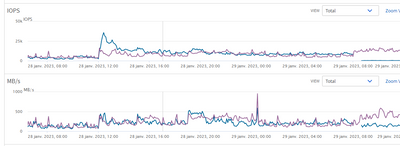

I can see that at the same time the node2 usage is 100%.

It could possibly be the reason for not responding to ping.

Or a consequence... because I don't see any extreme increase in load on node2 at this moment...

Thanks. I assumed it was a 7-Mode filer.

Since this is ONTAP (cDOT) and the panic happens on weekends, it may be worth checking the overall load on the FAS8040. How does it perform over the weekends, especially when there are more workloads such as backups and mirror transfers? If the load is constantly high enough that the system comes close to freezing, try to redistribute the workload as much as possible (lower-priority workloads can probably be moved around), or stagger the transfers that run during the weekends and reduce their frequency.

The bug in the link below is present in the version your filer is currently running, i.e. 9.5P16. However, as I said, only a core-file analysis could give us a clearer picture here. Still, there is no harm in upgrading ONTAP to a higher version that contains the bug fix. So take a look at the following links and see whether you can upgrade.

https://mysupport.netapp.com/site/bugs-online/product/ONTAP/BURT/1273914

Also, check the recommended release for the ONTAP version you have and try to bring it up to the recommended release, or to a higher release if supported. I think it can go up to 9.8 (https://hwu.netapp.com/Controller/Index?platformTypeId=2032)

https://kb.netapp.com/Support_Bulletins/Customer_Bulletins/SU2
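The staggering idea mentioned above can be sketched as a small planning helper that spreads transfer start times apart instead of launching everything at once. This is only an illustration: the job names, the start time, and the 30-minute gap are hypothetical, and the resulting times would still have to be applied by hand to the real backup and mirror schedules.

```python
from datetime import datetime, timedelta

def stagger(jobs, first_start, gap_minutes):
    """Assign each job a start time, gap_minutes apart, in the given order."""
    return [
        (name, first_start + timedelta(minutes=i * gap_minutes))
        for i, name in enumerate(jobs)
    ]

# Hypothetical job names and start time, listed from highest to lowest priority.
jobs = ["backup_vol_a", "mirror_vol_b", "mirror_vol_c"]
schedule = stagger(jobs, datetime(2023, 2, 5, 6, 0), gap_minutes=30)

for name, start in schedule:
    print(f"{start:%a %H:%M}  {name}")
```

Putting the lower-priority jobs last keeps them furthest from the window in which the panic has been observed.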

The excess load was my first guess, but as you can see from the graphs below, we don't observe anything exceptional at the time when node usage climbs to 100%.

Thank you for the links. We are definitely determined to upgrade to ONTAP 9.8; it is just on hold at the moment while we wait for the support to be renewed. We don't want to risk an upgrade in case of any unpredictable behaviour by the disk array.

Agreed. In some cases (depending on various other factors, including bugs), even mgwd unresponsiveness could trigger a node panic. So it could be anything. Please take your time to assess and then go for an upgrade.