Object Storage


StorageGrid certificate renewal -Need the final push to finish this


Simply put: our load balancer certificate is expiring today. It won't affect our FabricPool connection, because that connection has not been set up to verify a certificate. Both global certificates (2) are set up with the default (Grid CA). I want to renew them with our custom certificates and then set up the load balancer to use the S3 and Swift API certificate.

I contacted NetApp Support and got the two links below. Beneath each one, I describe what I intend to do.

-Install a wildcard certificate for the primary Admin Node and the other Admin Node

But how do I do this? I have seen examples of certificates made in this form:

DNS.1 = s3.example.com

DNS.2 = *.s3.example.com

DNS.3 = s3

DNS.4 = *.s3

Can we actually enter ?

DNS.1 = LB1.companydomain.com

DNS.2 = LB".companydomain.com

What about IP addresses?

S3 and Swift API certificate: https://docs.netapp.com/us-en/storagegrid-116/admin/configuring-custom-server-certificate-for-storage-node-or-clb.html#add-a-custom-s3-and-swift-api-c...

-Install a wildcard certificate covering all the storage nodes.

Same question: how do we include all the storage nodes in one certificate? Can we just list them all? What about IP addresses?

And finally, in the middle of this, I stumbled upon this problem. Is it something I need to worry about if certificate validation is not enabled in the FabricPool object store?

It addresses a problem that occurs when the StorageGRID self-signed certificate is renewed in SG but NOT on the ONTAP side. It should first be renewed in SG, then in ONTAP.

Here is more info:

---

I won't attempt to answer point by point, because I don't want to give a recipe; maybe someone from the SG team can do that.

1) Wildcards aren't SG-specific; any valid wildcard ought to work. So yes, lb*.something.com would work if it would work anywhere else. You can test that by issuing several snake-oil (self-signed test) certs with the same wildcard and checking whether they are recognized on a test VM (e.g. with Apache or NGINX) or in a container.

2) IPs (as SANs) are possible but not mandatory, which is why they were omitted. You wouldn't access anything by IP, because that can't provide the right security.

3) How to include SG's LBs: the example you pasted includes s3.example.com and its sub-domains, so all SNs and LBs would be covered, assuming their DNS names fall under that domain.

DNS.1 = s3.example.com

DNS.2 = *.s3.example.com

DNS.3 = s3

DNS.4 = *.s3

It's been years since I created certs for SG, so I wouldn't advise using my reply on production systems, but maybe it makes the docs easier to understand.
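The `DNS.1 = ...` lines in that example are OpenSSL configuration syntax: they sit in an `[alt_names]` section that a `subjectAltName = @alt_names` line in the extensions section refers to. Below is a minimal Python sketch that renders such a section from a list of names, so one certificate can list the S3 endpoint, a wildcard for node or VIP names and, only if you decide you want them, IP addresses. `alt_names_section` is a hypothetical helper (not part of any NetApp or OpenSSL tool), and the names are the placeholders from the example.

```python
import ipaddress


def alt_names_section(dns_names, ip_addresses=()):
    """Render an OpenSSL [alt_names] section for a CSR or extension config."""
    lines = ["[alt_names]"]
    # The numeric suffix only keeps keys unique within the section: DNS.1, DNS.2, ...
    for i, name in enumerate(dns_names, start=1):
        lines.append(f"DNS.{i} = {name}")
    for i, ip in enumerate(ip_addresses, start=1):
        # ip_address() raises ValueError on a malformed address, catching typos early
        lines.append(f"IP.{i} = {ipaddress.ip_address(ip)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(alt_names_section(["s3.example.com", "*.s3.example.com", "s3", "*.s3"]))
```

Leaving `ip_addresses` empty by default matches the advice in this thread that IP SANs are optional.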

---

Forgot to ask: how do we include the load balancers in the S3 certificate? Is it again just a matter of adding the endpoint name to that certificate? What about IP addresses? The LB uses 2 VIP addresses for each SG cluster. Should these 4 VIPs be included in the S3 certificate?

---

Regarding this article "https://kb.netapp.com/hybrid/StorageGRID/Protocols/How_to_configure_a_new_StorageGRID_self-signed_server_certificate_on_an_existing_ONTAP_FabricPool_d... , it is not relevant in your scenario for two reasons:

- You are not using self-signed certificates.

- You are not validating the certificate on ONTAP.

If you enable certificate validation on ONTAP and use a custom CA-signed certificate, then all you need to do before enabling it is make sure ONTAP has the CA root/intermediate certificate chain installed. ONTAP will then be able to validate the SG certificate, as long as it is signed by the same CA.
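Before enabling validation on ONTAP, it may help to check from any admin host that the StorageGRID endpoint's certificate validates against your CA chain and matches the host name clients will use. Below is a minimal Python sketch using the standard `ssl` module. It only approximates what ONTAP checks (a chain to a trusted CA plus a host-name match), and the host name, port, and CA bundle file name in the usage comment are placeholders.

```python
import socket
import ssl


def make_verifying_context(ca_bundle=None):
    """TLS client context that trusts only ca_bundle (or the system CAs if None)."""
    ctx = ssl.create_default_context(cafile=ca_bundle)
    # create_default_context already enables both; set explicitly to document intent
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def fetch_verified_cert(host, port, ca_bundle):
    """Connect, verify chain and host name, and return the decoded peer certificate.

    Raises ssl.SSLCertVerificationError if the chain or the name does not check out.
    """
    ctx = make_verifying_context(ca_bundle)
    with socket.create_connection((host, port), timeout=10) as sock:
        # server_hostname drives both SNI and the host-name check
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


# Usage (placeholders): fetch_verified_cert("s3.example.com", 443, "corp-ca-chain.pem")
```

If this call succeeds with only your CA chain as the trust store, the certificate should validate for any client that trusts the same chain and uses the same name.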

Regarding wildcard certificate, you can use those as you described. There should not be any concern.

Regarding the storage node IP addresses, it is not necessary to include them in the certificate, as it won't add any additional security, unless you believe the external load balancer requires it.

---

Thanks. But what about the HA VIP addresses? Since we are using them, I think the certificate should include the SG node names + the 4 VIP addresses for the load balancers + the DNS names of these load balancers. Does this seem right?

---

I answered that above: you can add IP addresses (SANs) to certificates (or create IP-only certificates, although those probably won't work except as self-signed/snake-oil certs), but for what purpose?

S3 clients shouldn't be using IP addresses for services that have TLS certificates involved.

Any TLS client should use the FQDN or hostname of the target it connects to.

As the docs explain, the load balancer's TLS certificate is the main one, because that's the one S3 clients use: "Client applications use the load balancer certificate when connecting to StorageGRID to save and retrieve object data."

Storage Nodes sit "behind" the load balancer, so clients don't connect to them directly. If TLS terminates at the load balancer, the Storage Nodes' "clients" are the load balancer nodes: the load balancer initiates the connections to the Storage Service and forwards requests on behalf of its S3 clients.

The manual isn't clear on the IP address question ("One or more IP addresses to include in the certificate"), which is confusing and annoying (https://docs.netapp.com/us-en/storagegrid-117/admin/configuring-custom-server-certificate-for-storage-node.html).

I wouldn't include any IP addresses in new TLS certs. Create proper DNS names for all IP addresses instead.

---

Thanks.

@elementx wrote:

> As the docs explain the load balancer's TLS is the main one because that's the one S3 clients use. "Client applications use the load balancer certificate when connecting to StorageGRID to save and retrieve object data."

> As Storage Nodes are "behind" the balancer, clients don't connect to it; their "clients" are the load balancer nodes if TLS terminates there, then from the load balancer to Storage Service connections are initiated - and requests forwarded - by the load balancer on behalf of its S3 clients.

-OK, but we should use our VIP FQDNs for our HA groups to connect to S3, and create the certificate with those names, right? Not the load balancer names.

> The manual isn't clear on the IP address question ("One or more IP addresses to include in the certificate") which is confusing (https://docs.netapp.com/us-en/storagegrid-117/admin/configuring-custom-server-certificate-for-storage-node.html) and annoying.

-Where did you find this? I could not find it.

---

Are you referring to SGRID load balancer endpoints and HA VIPs?

If you are using SGRID load balancer endpoints, you will need to update their certificate as well. That's because the global S3 client API certificate is mainly returned by the storage nodes and legacy CLB service on Gateway nodes. The load balancer endpoints have their own certificate.
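Because the load balancer endpoints carry their own certificate, separate from the global S3 and Swift API certificate, it may be worth confirming which certificate each address actually presents after a change. Below is a minimal Python sketch that fetches the presented certificate without validating it and formats its SHA-256 fingerprint, so you can compare an HA VIP/load balancer endpoint with a Storage Node. The host names and ports in the usage comment are placeholders; substitute the ones configured in your grid.

```python
import hashlib
import ssl


def sha256_fingerprint(pem):
    """SHA-256 fingerprint of a PEM certificate, colon-separated as browsers show it."""
    der = ssl.PEM_cert_to_DER_cert(pem)
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def presented_fingerprint(host, port):
    """Fingerprint of whatever certificate host:port presents (no validation)."""
    pem = ssl.get_server_certificate((host, port))
    return sha256_fingerprint(pem)


# Usage (placeholder names and ports):
# for target in [("vip1.s3.example.com", 10443), ("sn1.s3.example.com", 18082)]:
#     print(target, presented_fingerprint(*target))
```

Matching fingerprints mean the same certificate; a different fingerprint on the endpoint after renewal is expected if only the global certificate was replaced.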

You may include the IP addresses of the HA VIPs. Listing the IPs is not mandatory in order to use the certificates, unless the external clients require them when validating the certificate returned by SGRID.

---

> Having the IPs listed is not mandatory in order to leverage the certificates unless if the external clients require it to be included as they validate the SGRID returned certificate.

How can they "validate" a cert when they have no basis on which to do that?

If 10.10.10.10 has a cert that says it's for 10.10.10.10, what does that mean?

---

Yes, I am going to include the endpoint load balancers in the global S3 certificate, and I think I should then include the FQDNs for the 4 VIP addresses we have for the HA groups.

---

Typically, the key to validating certificates between a client and a server is that they are signed by a trusted CA. I don't think adding IPs adds any additional level of validation unless the client or server requires it.

---

Yes, that was also said in earlier comments, but it was also said it's not a good practice and there's probably no valid use case for it today.

A host at 10.10.10.10 with a valid cert for 10.10.10.10 could be a node on another class A network in the same domain.

If DNS resolution of vip1.api.s3.domain.com, provided by the load balancer api.s3.domain.com, takes the client to 10.10.10.10 *and* the server responds with a TLS certificate for vip1.api.s3.domain.com, that's reasonably secure.

If you go to 10.10.10.0 and that redirects you to 10.10.10.10, all you know is that the certs were issued by a CA you trust. You don't know if you're in the right place.
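The difference being debated shows up in how clients match identities: a client that connects by name checks that name against the certificate's DNS SANs, and a client that connects by IP checks the literal address against its IP SANs; the two never cross over. Below is a minimal Python sketch of that rule (exact matches only, wildcards deliberately left out), taking SANs in the tuple shape that Python's `ssl` `getpeercert()` returns. The names and addresses are the illustrative ones from this thread.

```python
import ipaddress


def san_matches(target, sans):
    """True if target (a host name or an IP literal) is covered by sans.

    sans uses the getpeercert() shape, e.g.
    (("DNS", "a.example"), ("IP Address", "10.0.0.1")).
    Exact matches only; wildcard handling is left out of this sketch.
    """
    try:
        target_ip = ipaddress.ip_address(target)
    except ValueError:
        target_ip = None  # not an IP literal, so treat it as a DNS name
    for kind, value in sans:
        if target_ip is not None and kind == "IP Address":
            # Compare parsed addresses so different spellings of one IPv6 address match
            if ipaddress.ip_address(value.strip()) == target_ip:
                return True
        elif target_ip is None and kind == "DNS":
            if value.lower().rstrip(".") == target.lower().rstrip("."):
                return True
    return False
```

Note what such a check cannot tell you: `san_matches("10.10.10.10", (("IP Address", "10.10.10.10"),))` is true for whichever host answers at 10.10.10.10 with a trusted certificate listing that address, which is the concern raised above about IP-only identities.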

---

That's not necessarily a major concern as the client would not be able to access anything anonymously. They must provide valid S3 credentials to access anything in StorageGRID cluster.

It is OK to configure any of the IPs desired; however, it is not scalable, because a StorageGRID cluster can grow to hundreds of nodes and span multiple sites (globally). The cluster can also grow and shrink at any time, so the certificate would have to be updated every time such maintenance adds or removes nodes.

Additionally, network configuration, including IP addresses and subnets, is subject to change.

While these are things to consider, it is acceptable configuration to add all the nodes' IPs. It is just not required for certificate validation.

---

Okay, we agree to disagree, then.

I just need to point out about this:

> That's not necessarily a major concern as the client would not be able to access anything anonymously.

> They must provide valid S3 credentials to access anything in StorageGRID cluster.

These may be true, but aren't necessarily true, so for the sake of users who read this thread and do need to care:

- It may not be a "major" concern in your or my opinion, but it may be major for some users. For example, we know that if you get redirected to a host with a valid TLS cert and the same IP on another class A network, you would access that node without rejecting its TLS certificate.

- They (S3 clients) may be accessing data in public buckets on the internal LAN (simple office forms, ISO files), in which case they would need no authentication of any kind. Now, if I know there's s3:///hr/forms/resume.docx and can redirect you to my 10.10.10.10, where a web server or S3 service serves the same object infected with malware, in the worst case that can be the end of your organization. Or you can be redirected to a host set up to exploit a browser 0-day bug and get owned without downloading anything. And this includes SG admins, if the Admin Service is set up with a virtual IP and no DNS.

So, not knowing someone's concerns, use cases and other details, we can't say something that's not very secure isn't a major concern.

Many security-focused documents warn about the practice of issuing TLS certs to IP addresses. Example from IBM DB2 documentation:

Using IP addresses as SAN values

You can specify one or more IP addresses in your GSKit command using the -san_ippaddr field.

- An IP address is not necessarily a reliable identifier for a server, due to private networks, NAT, etc.

---

The SG documentation states that IP addresses are optional, and that's fine.

But it's not a good security practice and may be a source of concern for some customers.

---

I agree. It may be a concern for some clients, and that's why I said that, depending on the client and server, including the IPs in the SAN may be needed or required.

For StorageGRID as a service this is not required, and the scalability points I made are one of the reasons why it isn't necessarily needed.

But I understand and agree that this may not be true for all.

---

Just a follow up question on the global management certificate. The documentation states:

"Because a single custom management interface certificate is used for all Admin Nodes, you must specify the certificate as a wildcard or multi-domain certificate if clients need to verify the hostname when connecting to the Grid Manager and Tenant Manager. Define the custom certificate such that it matches all Admin Nodes in the grid."

What about the Tenant Manager? How do I configure its name and IP address? If I click Tenant Manager within the StorageGRID Grid Manager, it takes me there, but I see no way to configure anything, so I cannot add this information to the management certificate. What did I miss here?

---

Your certificate should contain every DNS entry clients will use to access that system. The Tenant Manager and Grid Manager UI logins are the same; the only difference is that the tenant login page has "/?accountId=" appended to the end, and that does not need to be in the certificate. What URL will you give users to log into the system? That is what needs to be in the certificate. The same is true for your load balancer endpoint certificate: whatever you give clients as the URL for S3 requests are the DNS entries that need to be in that certificate. Wildcard entries are mainly for using virtual-hosted-style URLs rather than path-style URLs. You would need a wildcard DNS entry for that to work as well.

To summarize, whatever DNS entries you provide to clients for accessing a component of the system need to be in the certificate installed on that component.
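Only the host part of the URL you hand out is checked against the certificate; ports, paths, and query strings such as the tenant login's `/?accountId=` play no role. Below is a minimal Python sketch, using the standard `urllib.parse`, that extracts that host name and shows why virtual-hosted-style S3 URLs need a wildcard entry (in DNS and in the certificate) while path-style URLs don't. `s3_object_url`, the domain, bucket, port, and account ID are illustrative placeholders.

```python
from urllib.parse import urlsplit


def tls_hostname(url):
    """Host name a TLS client checks against the certificate (port, path, query ignored)."""
    host = urlsplit(url).hostname  # lower-cased, without the port
    if host is None:
        raise ValueError(f"no host in URL: {url!r}")
    return host


def s3_object_url(endpoint, bucket, key, virtual_hosted):
    """Build an S3 object URL in either addressing style."""
    if virtual_hosted:
        # The bucket becomes a DNS label: needs *.endpoint in DNS and in the certificate
        return f"https://{bucket}.{endpoint}/{key}"
    # Path style: the bucket is part of the path, so only the endpoint name is checked
    return f"https://{endpoint}/{bucket}/{key}"


# e.g. tls_hostname("https://admin.s3.company.corp/?accountId=12345")
```

Listing the output of `tls_hostname` for every URL you publish gives you the set of names the certificate has to cover.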

SANtricity certificates are managed in SANtricity.

---

And a "Final 2" follow-up question: SANtricity System Manager also has certificates to manage. Are these covered by the management certificate I already configured, or do I need to administer them separately? It seems to me that the latter is true, because the nodes are still using the old default certificate that expires after 3 years. But if I need to renew it, which name should I use? The node name certificate is already in use for the management certificate.

---

> The node name certificate is already in use for the management certificate.

Yeah but a node can have many names (service IP, virtual IP, management controller IP, etc.). Which is in use?

StorageGRID-related services running on SG100 (for example) would have 1 IP for its hostname (e.g. sg1ka.data.company.com), another "floating" as vip1 in HA group 1, and maybe another from HA group 2. These are all "front end" services, and there may be other networks ("grid" network, etc.) and hostnames.

At the same time there may be another hostname for SANtricity on-board management which is on the "back" and usually connected to infrastructure management LAN.

The SANtricity instance whose management UI you took a screenshot of has an IP address at which you accessed it.

That IP may or may not be mapped to the SANtricity system name (which you obscured in the screenshot). If the IP/hostname you connected to in the browser has a DNS entry, you could create a DNS-based TLS certificate for that name, or a mixed (DNS + IP) or IP-only one.

What I would not do is create and upload a cert for the name you obscured without any checking, because that name may not map to any IP or "real" DNS host entry.

In other words, if creating a DNS-based TLS, make sure you get the name right and that clients who manage SANtricity can resolve it. Sometimes they can't, e.g. corporate DNS may not be available on "management LAN" (I've seen those cases), or DNS names are different from actual host names, etc so in those cases IP-based TLS may become the only (and bad) choice.

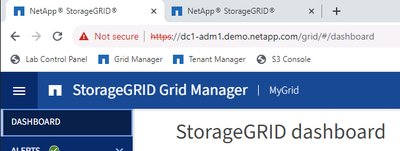

Grid vs. Tenant TLS - these are commonly on the same FQDN/IP, but only you know if that's the case for you. In this screenshot, you can see FQDN of a Grid Admin endpoint:

And FQDN for Tenants is the same.

So you could have 3 DNS entries for one and the same virtual IP for admin:

admin.s3.company.corp

tenant1admin.s3.company.corp

tenant2admin.s3.company.corp

Now, each of these 3 admins could go to their "dedicated" FQDN, but it'd be the same VIP and *.s3.company.corp would work fine. If you instead issued a cert for [admin,tenant1admin,tenant2admin].s3.company.corp, each of them would see all of these names listed in the certificate.

Although there's nothing "secret" about it (it's easy to reverse-query DNS and get all the hostnames), some prefer to use *.s3.company.corp: it obscures certain details and also frees them from having to reissue the TLS certificate to add tenant3.s3.company.corp when that tenant gets added.
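To see concretely what `*.s3.company.corp` does and doesn't cover, here is a minimal Python sketch of the common wildcard rule: the `*` must be the entire left-most label and stands for exactly one label. It is a simplification (real clients apply further checks), and partial-label wildcards such as `lb*` are treated as plain text here, in line with Python's own `ssl` module, which stopped accepting them in 3.7. The host names are the examples from this post.

```python
def wildcard_matches(pattern, hostname):
    """Simplified certificate name match: '*' only as the entire left-most label."""
    p = pattern.lower().rstrip(".").split(".")
    h = hostname.lower().rstrip(".").split(".")
    if len(p) != len(h):
        return False  # a wildcard never spans more (or fewer) labels
    if p[0] == "*":
        return h[0] != "" and p[1:] == h[1:]
    return p == h  # no wildcard (or a partial one like 'lb*'): exact comparison


for name in ["admin.s3.company.corp", "tenant1admin.s3.company.corp", "s3.company.corp"]:
    print(name, wildcard_matches("*.s3.company.corp", name))
```

`wildcard_matches("*.s3.company.corp", "s3.company.corp")` is false, which is why the SAN example earlier in the thread lists both `s3.example.com` and `*.s3.example.com`.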

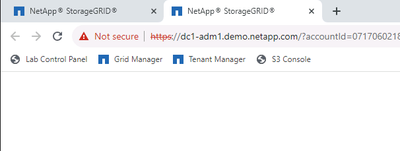

You can find a Tenants tab in here:

It mentions certificates in the context of "platform services". Those are usually external services (Elasticsearch, etc.) used by the tenant, so not something you'd replace *on* StorageGRID.

---

> At the same time there may be another hostname for SANtricity on-board management which is on the "back" and usually connected to infrastructure management LAN.

-The IP address and "DNS" in the picture were not configured for SANtricity Manager when we set up our SG system (with a NetApp employee consultant). This is probably the infrastructure VLAN you are talking about. We could assign new IP addresses belonging to an OOB VLAN, but that seems difficult, because in the process we would also need to assign an IPv6 address to each node. See screenshots below. Is there a way to avoid this route, or do we have to take it in order NOT to have a problem with the SANtricity Manager certificate expiring in April 2025? Is this certificate for this interface or for the mgmt network?

I have to ask: Is the part in the S3 certificate for the SG node for the mgmt network or the SG network? It does not say in the documentation. I need to know when creating the DNS entries for the certificate.

To complicate matters even more, all the nodes, including the two admin nodes and load balancers, were given IP addresses via DHCP, reserved for each SN's MAC address. Is this a configuration we should change?

> What I would not do is create and upload a cert for the name you obscured without any checking, because that name may not map to any IP or "real" DNS host entry.

-Yes, and I assume this name refers to the mgmt name. Thanks for your help.

---

> Is the part in the S3 certificate for the SG node for the mgmt network or the SG network?

*.*.63.24 (IP and hostname on admin network) would be used by your StorageGRID application services and that could use *.s3.company.com. That's a core part of the SG services.

The SANtricity Web UI is the management interface for the storage array, which, as you mention, is on a dedicated management (OOB) LAN and isn't directly related to StorageGRID services. It isn't part of the core SG S3 services, and I would not use *.s3.company.com for it, partly because it's not expected to be on the same network (it'd be on the OOB LAN) or managed by the same team (it would be managed by the "infra team" rather than "SG admins").

But I'm not sure whether the SG application connects to that IP. It wouldn't for S3-related services, but it could for general health-check purposes, such as using the storage arrays' management IP:8443 to query system health. You need that part to work if AutoSupport is enabled; otherwise those queries would fail, and health-check reports could be partial and cover only software, for example.

However, given that it all works now, if you looked up the OOB LAN IP's TLS certificate and recreated exactly the same certificate (even a self-signed one, which it may well be right now), everything should continue to work the same way. Since the OOB LAN IP is DHCP-assigned, it's highly unlikely there's a proper TLS certificate on the SANtricity management IP interface; I'd guess it's a generic self-signed NetApp certificate at best.

Should you continue using DHCP-assigned IP? Well, I would prefer to configure fixed IPs (you can use the same IP that you now get as dynamic reserved DHCP IP), to avoid the situation where one day somebody forgets that those SG reserved IPs must remain reserved.

Do you have DNS on OOB LAN? If you do you could create DNS entries for the IPv4 and the new IPv6 address, as well as the OOB based host name.

If you are not sure, you can simply re-create the certificate with exactly the same TLS certificate properties (which you can observe in the browser or with openssl CLI) or even leave it as-is for now and create a support ticket and work on this at a slower pace since this OOB TLS doesn't seem to be an immediate problem that you need to solve right away.
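To judge how urgent the SANtricity certificate really is (the one mentioned as expiring in April 2025), you can read its end date in the browser or with `openssl x509 -noout -enddate`, then turn it into days remaining. Below is a minimal Python sketch using the standard `ssl.cert_time_to_seconds`. `days_until_expiry` is a hypothetical helper, and its input is simply the date string the tool printed.

```python
import ssl
import time


def days_until_expiry(not_after, now=None):
    """Whole days left before a certificate's notAfter time (negative once expired).

    Accepts 'Jan  5 09:34:43 2018 GMT' or the 'notAfter=...' line printed by
    `openssl x509 -noout -enddate`.
    """
    if not_after.startswith("notAfter="):
        not_after = not_after[len("notAfter="):]
    expires = ssl.cert_time_to_seconds(not_after.strip())
    now = time.time() if now is None else now
    return int((expires - now) // 86400)


# e.g. days_until_expiry("notAfter=Apr 15 12:00:00 2025 GMT")
```

A comfortable number of days left supports handling the OOB certificate through a support case at a slower pace, as suggested above.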

Yes, it's interesting that you have DHCP addresses, but it does happen. D-Day comes, installation begins, and there's no Excel spreadsheet with OOB IP addresses, or the ones "reserved" by network team are on a completely wrong network, etc.