Active IQ Unified Manager Discussions

- Re: NetApp Harvest LUN Latency Not Matching Total Latency

I have a question about read and write LUN and volume latency. As you can see, the read and write LUN latency is high in this performance collection; top read = 75 ms, top write = 600 ms:

However, the main dashboard doesn't seem to indicate an issue or the high latency:

How is the high-level read and write latency determined, and why am I not seeing any issue on the main dashboard?

Hi,

Latency is tracked at many different layers inside Data ONTAP. Let's look at LUN and volume latency since this was a concern. LUN latency is measured at the SCSI layer and includes time external to the storage system consumed by the network or the initiator, delay added by QoS if throttled, and the processing time within the cluster and WAFL. Volume latency is measured at the WAFL layer and includes the time that the request is being processed inside WAFL. So there can be differences between LUN and volume latency because they are measuring different things.
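To make the difference concrete, here is a minimal Python sketch of the idea. The class, the field names, and the numbers are all hypothetical and chosen for illustration; they are not real ONTAP or Harvest counter names, and the split into four components is a simplification of the layers described above.

```python
from dataclasses import dataclass


@dataclass
class RequestLatency:
    """Hypothetical per-request latency components, in milliseconds."""
    network_and_host_ms: float  # time spent outside the storage system
    qos_throttle_ms: float      # delay added by a QoS limit, if throttled
    cluster_ms: float           # processing time within the cluster
    wafl_ms: float              # processing time inside WAFL

    def volume_latency_ms(self) -> float:
        # Volume latency is measured at the WAFL layer.
        return self.wafl_ms

    def lun_latency_ms(self) -> float:
        # LUN latency is measured at the SCSI layer, so it sees every component.
        return (self.network_and_host_ms + self.qos_throttle_ms
                + self.cluster_ms + self.wafl_ms)


# Illustrative values only: a request slowed down by a QoS throttle.
throttled = RequestLatency(network_and_host_ms=0.5, qos_throttle_ms=70.0,
                           cluster_ms=0.25, wafl_ms=1.25)
print("volume latency (ms):", throttled.volume_latency_ms())
print("LUN latency (ms):", throttled.lun_latency_ms())
```

With values like these the volume looks healthy while the LUN looks slow, even though both numbers describe the same request.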

So if you want to compare the cDOT node dashboard latency to the cDOT LUN dashboard LUN latency you have to keep the above in mind. You can also click edit on any panel to see what data it shows.

In my lab if I click edit on the read latency singlestat panel of the cDOT node dashboard:

So it is giving me the average read latency at the volume level for all volumes on that node.

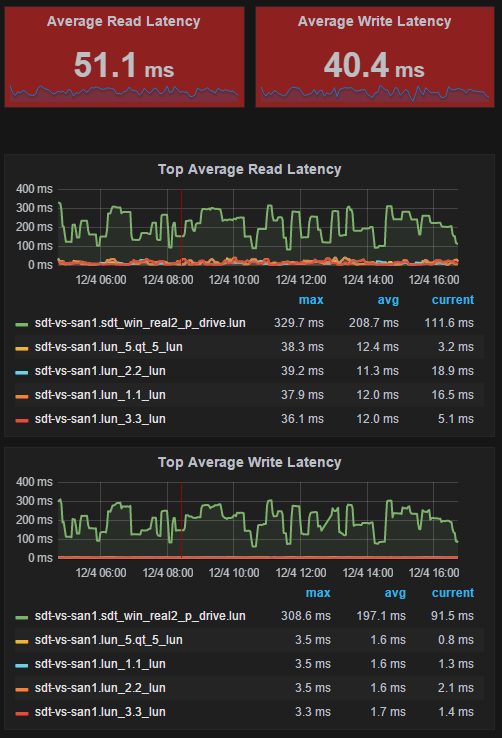

Now let's look at the cDOT LUN dashboard:

Oh, lots of red and very different numbers in the singlestat panels. If you click edit on the singlestat panel you will see that it is also the average of the top X resources, so the average read_latency of the worst LUNs. If I look at the more detailed graphs right below, though, I can see that just a single LUN is very poor and the 'next worst' LUNs are much more reasonable.

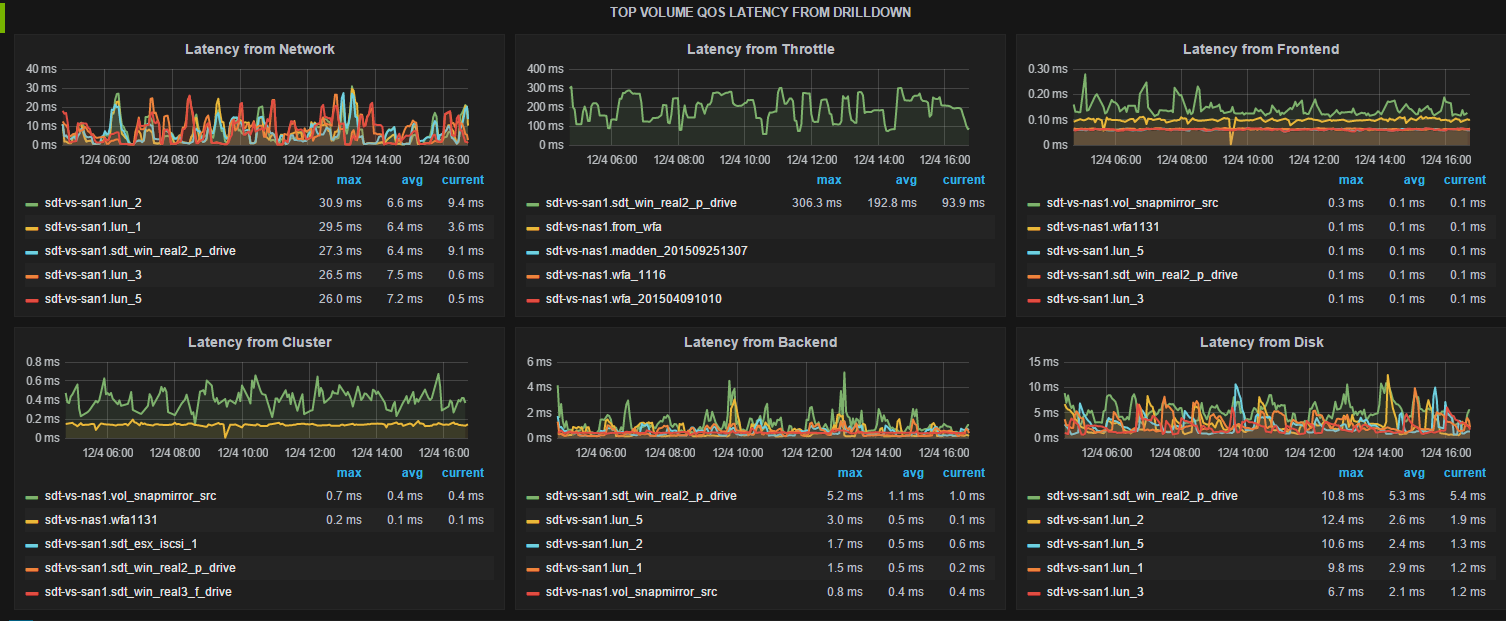
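As a rough illustration of why an average over the top X resources can look alarming when only one LUN is slow, here is a small Python sketch. The LUN names and latency values are made up for the example and do not come from the dashboards above.

```python
from statistics import mean

# Hypothetical read latencies (ms) for the five worst LUNs in a panel.
top_lun_read_latency_ms = {
    "lun_a": 75.0,
    "lun_b": 2.0,
    "lun_c": 1.5,
    "lun_d": 1.0,
    "lun_e": 0.5,
}

# What a singlestat panel averaging the top X resources would display.
panel_value = mean(top_lun_read_latency_ms.values())

# The single worst LUN, and the average of the remaining ones.
worst_lun = max(top_lun_read_latency_ms, key=top_lun_read_latency_ms.get)
without_worst = mean(
    value for name, value in top_lun_read_latency_ms.items() if name != worst_lun
)

print("panel average (ms):", panel_value)
print("worst LUN:", worst_lun)
print("average without the worst LUN (ms):", without_worst)
```

One outlier dominates the single number, which is why the per-LUN graphs under the singlestat panel are worth checking before drawing conclusions.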

If I want to see why that LUN was so high I can check QoS:

So this shows the top X resources per "Latency From" reason. So here scanning the 'avg' column in all the tables I see that the latency reported is basically coming from throttle on that p_drive volume.

I think this is a good way to report latency because from a global perspective the system is performing well, and that is shown on the node page. Looking in more detail, though, we see a single volume and LUN that is performing much worse, in this case because of a QoS throttle applied, but it could just as easily have been a slow host or a lossy iSCSI network. It's really hard to boil hundreds of spiking graphs into a single number, and I agree that sometimes it might not be obvious how to interpret it.

One last thing to keep in mind with latency is the number of IOPS; if it is very low then latency numbers can be inaccurate. So if you see high latency but only a few dozen IOPS I wouldn't be too concerned. I have a latency_io_reqd attribute in the netapp-harvest.conf file that tries to filter these out by only submitting latency if enough IOPS occurred, but today I have only implemented it for volume and QoS counters, not LUNs yet. If you think that is needed please let me know.
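The filtering idea can be sketched in a few lines of Python. This is not Harvest's actual code; the function name, the sample data, and the threshold of 10 IOPS are assumptions made only to show the logic of dropping latency samples that are backed by too few operations.

```python
from typing import Optional


def latency_to_submit(latency_ms: float, iops: float,
                      io_required: float = 10.0) -> Optional[float]:
    """Return the latency only if enough IOPS occurred, otherwise None.

    io_required plays the role of a latency_io_reqd style threshold;
    the default of 10 is a hypothetical value for this sketch.
    """
    if iops < io_required:
        return None  # too few operations for the latency to be meaningful
    return latency_ms


# Hypothetical samples: (object name, latency in ms, IOPS).
samples = [
    ("vol_busy", 1.2, 4500.0),
    ("vol_idle", 600.0, 3.0),   # high latency, but almost no I/O
    ("vol_ok", 0.8, 250.0),
]

submitted = {
    name: latency
    for name, latency, iops in samples
    if latency_to_submit(latency, iops) is not None
}
print(submitted)
```

The idle volume's 600 ms sample is dropped, so it never shows up as a spike on a dashboard.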

Hope this helps!

Cheers,

Chris Madden

Storage Architect, NetApp EMEA (and author of Harvest)

Blog: It all begins with data

P.S. Please select “Options” and then “Accept as Solution” if this response answered your question so that others will find it easily!

Thank you Chris for taking the time to author such a detailed response. NetApp Harvest was my biggest takeaway from NetApp Insight this year and I couldn't wait to get it stood up in my environment when I came home. Thank you for making this fantastic tool so accessible and useful.