Active IQ Unified Manager Discussions

Re: qtree monitoring delays - OnCommand 5.1


Hi

I am experiencing issues with qtree monitoring: our application teams rely on the alerts clearing once they free up space in a qtree. I was running DFM 4.0.2 on a physical server with qtreeMonInterval set to 30 minutes, but recently upgraded to DFM 5.1 on a VM. I logged the issue with NetApp and they suggested setting the values back to the defaults, which will not work in our environment. Other suggestions I applied were:

dfm option set snapDeltaMonitorEnabled=no

dfm option set is EnableWGChecks=No
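The interval matters because, as a simplifying assumption (not something the thread or NetApp confirms), an alert can only clear on the first qtree sample taken after the space is freed. The Python sketch below models just that sampling schedule with a hypothetical `clear_delay` helper. Under this model the worst-case delay equals the interval: about 30 minutes with the 4.0.2 setting, 1 hour with the interval shown in the 5.1 diag, and 8 hours with the default the diag lists for qtreeTimestamp, which is why the defaults are not acceptable here.

```python
# Hypothetical model of alert-clearing latency; this is not DFM code.


def clear_delay(freed_at: int, interval: int, first_sample: int = 0) -> int:
    """Minutes from `freed_at` until the first qtree sample taken strictly after it.

    All values are in minutes. Samples are assumed to run every `interval`
    minutes starting at `first_sample`, and the alert is assumed to clear on
    the first sample that sees the freed space.
    """
    samples_so_far = (freed_at - first_sample) // interval + 1
    next_sample = first_sample + samples_so_far * interval
    return next_sample - freed_at


if __name__ == "__main__":
    # Worst case over every possible minute within one sampling period.
    for label, interval in [("4.0.2 setting", 30), ("5.1 diag setting", 60), ("default", 480)]:
        worst = max(clear_delay(t, interval) for t in range(interval))
        print(f"{label}: interval {interval} min, worst-case delay {worst} min")
```

The model ignores how long each sample takes to collect and process, so real delays can only be longer than these figures.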

The server is performing fine, so I don't think there is a CPU or memory bottleneck:

Relevant details from DFM diag:

Management Station

| Setting | Value | Free space |
|---|---|---|
| Version | 5.1.0.15008 (5.1) | |
| Executable Type | 64-bit | |
| Serial Number | 1-50-000225 | |
| Edition | Standard edition of DataFabric Manager server | |
| Data ONTAP Operating Mode | 7-Mode | |
| Administrator Name | | |
| Host Name | | |
| Host IP Address | | |
| Host Full Name | | |
| Node limit | 250 (currently managing 17) | |
| Operating System | Microsoft Windows Server 2008 R2 Service Pack 1 (Build 7601) x64 based | |
| CPU Count | 4 | |
| System Memory | 16383 MB (load: 22%) | |
| Installation Directory | C:/Program Files/NetApp/DataFabric Manager/DFM | 20.6 GB free (51.4%) |
| Perf Data Directory | e:\perfdata | |
| Data Export Directory | C:/Program Files/NetApp/DataFabric Manager/DFM/dataExport | |
| Database Backup Directory | e:/data | |
| Reports Archival Directory | e:\reports | |
| Database Directory | e:/data | 256 GB free (63.9%) |
| Database Log Directory | e:/data | 256 GB free (63.9%) |
| Licensed Features | DataFabric Manager server: installed | |

Object Counts

| Object Type | Count |
|---|---|
| Administrator | 31 |
| Aggregate | 57 |
| App Policy | 2 |
| Array LUNs | 0 |
| Backup jobs started within last week | 0 |
| Clusters | 0 |
| Configuration | 6 |
| Datasets containing generic app objects | 0 |
| Datasets containing generic app objects and with storage service attached | 0 |
| Datasets with application policy attached | 0 |
| Datasets with storage service attached | 0 |
| Disks | 2112 |
| DP Managed OSSV Rels | 0 |
| DP Managed SnapMirror Rels | 0 |
| DP Managed SnapVault Rels | 0 |
| DP Policy | 37 |
| DP Schedule | 19 |
| DP Throttle | 1 |
| FCP Target | 32 |
| Host | 32 |
| Initiator Group | 58 |
| Interface | 163 |
| Lun Path | 295 |
| Mgmt Station | 1 |
| Mirror | 57 |
| Mount jobs started within last week | 0 |
| Network | 10 |
| OSSV Directory | 30 |
| OSSV Hosts | 2 |
| OSSV Rels | 0 |
| Primary Storage Systems | 2 |
| Prov Policy | 16 |
| Qtree | 5384 |
| Qtrees in DP Managed QtreeSnapMirror Rel | 0 |
| Qtrees in DP Managed SnapVault Rel | 0 |
| Qtrees in QtreeSnapMirror Rel | 10 |
| Qtrees in SnapVault Rel | 0 |
| QuotaUser | 21194 |
| report schedule | 6 |
| Resource Group | 121 |
| Resource Pool | 2 |
| Restore jobs started within last week | 0 |
| Role | 34 |
| schedule | 5 |
| Secondary Storage Systems | 2 |
| SnapMirror Rels | 59 |
| SnapVault Rels | 0 |
| Storage Service | 16 |
| Unmount jobs started within last week | 0 |
| UserQuota | 15683 |
| vFilers | 13 |
| Virtual Servers | 0 |
| Volume | 552 |
| Volumes in DP Managed VolumeSnapMirror Rel | 0 |
| Volumes in VolumeSnapMirror Rel | 104 |
| Zapi Hosts | 17 |

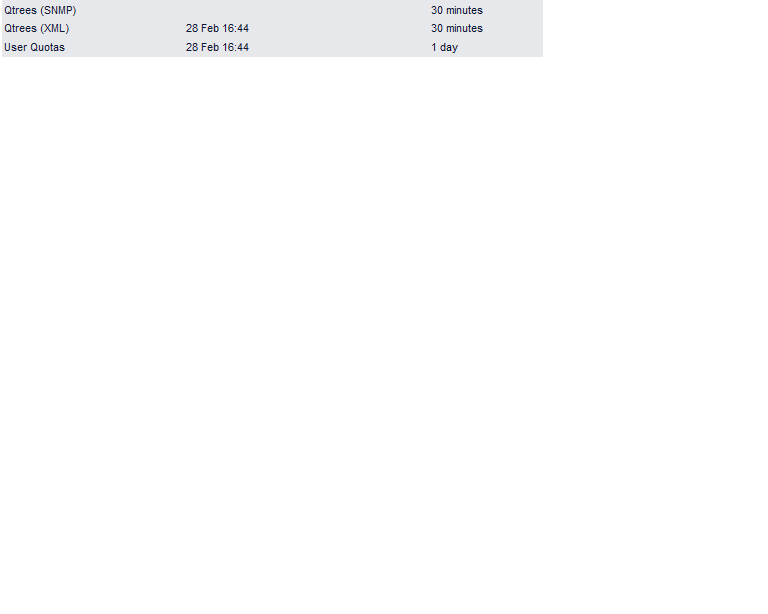

Monitoring Timestamps

| Timestamp Name | Status | Interval | Default | Last Updated | Status | Error if older than ... |
|---|---|---|---|---|---|---|
| ccTimestamp | Normal | 4 hours | 4 hours | 28 Feb 06:40 | | |
| cfTimestamp | Normal | 5 minutes | 5 minutes | 28 Feb 10:40 | Normal | 28 Feb 10:35 |
| clusterTimestamp | Error | Off | 15 minutes | Not updated (clusterMonInterval is set to Off) | | |
| cpuTimestamp | Normal | 5 minutes | 5 minutes | 28 Feb 10:40 | Normal | 28 Feb 10:35 |
| dfTimestamp | Normal | 30 minutes | 30 minutes | 28 Feb 10:39 | Normal | 28 Feb 10:10 |
| diskTimestamp | Normal | 4 hours | 4 hours | 28 Feb 10:40 | Normal | 28 Feb 06:41 |
| envTimestamp | Normal | 5 minutes | 5 minutes | 28 Feb 10:41 | Normal | 28 Feb 10:36 |
| fsTimestamp | Normal | 15 minutes | 15 minutes | 28 Feb 10:40 | Normal | 28 Feb 10:26 |
| hostPingTimestamp | Normal | 1 minute | 1 minute | 28 Feb 10:41 | Normal | 28 Feb 10:40 |
| ifTimestamp | Normal | 15 minutes | 15 minutes | 28 Feb 10:41 | Normal | 28 Feb 10:26 |
| licenseTimestamp | Normal | 4 hours | 4 hours | 28 Feb 10:38 | Normal | 28 Feb 06:41 |
| lunTimestamp | Normal | 30 minutes | 30 minutes | 28 Feb 10:38 | Normal | 28 Feb 10:11 |
| opsTimestamp | Normal | 10 minutes | 10 minutes | 28 Feb 10:41 | Normal | 28 Feb 10:31 |
| qtreeTimestamp | Error | 1 hour | 8 hours | 28 Feb 09:41 | | |
| rbacTimestamp | Normal | 1 day | 1 day | 27 Feb 18:11 | Normal | 27 Feb 10:41 |
| userQuotaTimestamp | Normal | 1 day | 1 day | 28 Feb 10:22 | Normal | 27 Feb 10:41 |
| sanhostTimestamp | Error | 6 hours | 5 minutes | 28 Feb 04:41 | | |
| snapmirrorTimestamp | Error | 2 hours | 30 minutes | 28 Feb 10:33 | Normal | 28 Feb 08:41 |
| snapshotTimestamp | Normal | 30 minutes | 30 minutes | 28 Feb 10:38 | Normal | 28 Feb 10:11 |
| statusTimestamp | Normal | 10 minutes | 10 minutes | 28 Feb 10:41 | Normal | 28 Feb 10:31 |
| sysInfoTimestamp | Normal | 1 hour | 1 hour | 28 Feb 10:38 | Normal | 28 Feb 09:41 |
| svTimestamp | Error | 6 hours | 30 minutes | 28 Feb 08:29 | Normal | 28 Feb 04:41 |
| svMonTimestamp | Normal | 8 hours | 8 hours | 28 Feb 07:14 | Normal | 28 Feb 02:41 |
| xmlQtreeTimestamp | Error | 1 hour | 8 hours | 28 Feb 10:32 | Normal | 28 Feb 09:41 |
| vFilerTimestamp | Normal | 1 hour | 1 hour | 28 Feb 10:32 | Normal | 28 Feb 09:41 |
| vserverTimestamp | Error | Off | 1 hour | Not updated (vserverMonInterval is set to Off) | | |
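In the table above, every row whose first Status column reads Error also has an Interval that differs from its Default, and every Normal row matches its Default. That suggests (the diag output does not say so) that this column flags non-default intervals rather than stale data. The Python sketch below, built on hypothetical `check` and `to_minutes` helpers, parses rows in the space-separated form they had in the original post, reports whether each interval is non-default, and computes how many minutes old the Last Updated value is relative to an assumed capture time. Rows it cannot parse are skipped.

```python
import re
from datetime import datetime

# One row of the "Monitoring Timestamps" section in space-separated form, e.g.
# "cfTimestamp Normal 5 minutes 5 minutes 28 Feb 10:40 Normal 28 Feb 10:35".
INTERVAL = r"(?:Off|\d+\s+(?:minute|hour|day)s?)"
ROW = re.compile(
    rf"^(?P<name>\w+)\s+(?P<status>Normal|Error)\s+"
    rf"(?P<interval>{INTERVAL})\s+(?P<default>{INTERVAL})"
    rf"(?:\s+(?P<updated>\d{{1,2}}\s+[A-Z][a-z]{{2}}\s+\d{{2}}:\d{{2}}))?"
)
UNIT_MINUTES = {"minute": 1, "hour": 60, "day": 1440}


def to_minutes(text):
    """'5 minutes' -> 5, '1 hour' -> 60, '1 day' -> 1440, 'Off' -> None."""
    if text == "Off":
        return None
    count, unit = text.split()
    return int(count) * UNIT_MINUTES[unit.rstrip("s")]


def check(lines, now):
    """Yield (name, status, non_default, age_min, overdue) for each parsable row."""
    for line in lines:
        m = ROW.match(line.strip())
        if not m:
            continue
        interval = to_minutes(m["interval"])
        default = to_minutes(m["default"])
        age = overdue = None
        if m["updated"] and interval is not None:
            # The diag output has no year, so borrow it from `now`.
            stamp = " ".join(m["updated"].split()) + f" {now.year}"
            updated = datetime.strptime(stamp, "%d %b %H:%M %Y")
            age = int((now - updated).total_seconds() // 60)
            overdue = age > interval
        yield m["name"], m["status"], interval != default, age, overdue


if __name__ == "__main__":
    rows = [
        "cfTimestamp Normal 5 minutes 5 minutes 28 Feb 10:40 Normal 28 Feb 10:35",
        "qtreeTimestamp Error 1 hour 8 hours 28 Feb 09:41",
        "xmlQtreeTimestamp Error 1 hour 8 hours 28 Feb 10:32 Normal 28 Feb 09:41",
        "clusterTimestamp Error Off 15 minutes Not updated ( clusterMonInterval is set to Off )",
    ]
    now = datetime(2013, 2, 28, 10, 41)  # assumed capture time; the year is a guess
    for result in check(rows, now):
        print(result)
```

With the capture time assumed to be 28 Feb 10:41, none of these rows is older than its own interval, which fits the observation that the timestamps look healthy even though alerts are slow to clear.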

Database

| Property | Value |
|---|---|
| monitordb.db | 1.71 GB |
| dbFileVersion | 10 |
| ConnCount | 46 connections |
| MaxCacheSize | 8388384 KBytes |
| CurrentCacheSize | 1623080 KBytes |
| PeakCacheSize | 1623080 KBytes |
| PageSize | 8192 Bytes |

DP Job Information

| Job State | Count |
|---|---|
| Jobs Running | 0 |
| Jobs Completed Total | 1 |
| Jobs Aborted Total | 0 |
| Jobs Aborting Total | 0 |
| Jobs Completed Today | 0 |
| Jobs Aborted Today | 0 |
| Jobs Aborting Today | 0 |

Dataset Protection Status

| Protection State | Count |
|---|---|
| Protected | 0 |
| Unprotected | 0 |

Event Counts

| Table | Count |
|---|---|
| Events | 212990 |
| Current Events | 201401 |
| Abnormal Events | 5931 |

Event Type Counts

| Event Type | Count |
|---|---|
| userquota.kbytes | 36898 |
| userquota.kbytes.soft.limit | 36090 |
| userquota.files.soft.limit | 36090 |
| userquota.files | 36090 |
| sm.delete | 9259 |
| qtree.kbytes | 7754 |
| qtree.files | 6844 |
| user.email | 5998 |
| perf:cifs:cifs_latency | 5064 |
| qtree.growthrate | 4404 |


Excerpts from the dfmmonitor.log

Feb 28 08:46:58 [dfmwatchdog: INFO]: [5108:0x500]: dbsrv11 up 1.0 days, mem = 1.13 GB, cpu = 28.9%, db = 1.71 GB, log = 11.6 MB

Feb 28 09:17:31 [dfmwatchdog: INFO]: [5108:0x500]: dbsrv11 up 1.0 days, mem = 1.15 GB, cpu = 18.4%, db = 1.71 GB, log = 13.1 MB

Feb 28 09:35:12 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.0 days, mem = 142 MB, cpu = 27.0%

Feb 28 09:40:08 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.0 days, mem = 93.7 MB, cpu = 27.1%

Feb 28 09:41:16 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.0 days, mem = 104 MB, cpu = 26.9%

Feb 28 09:45:30 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 117 MB, cpu = 5.5%

Feb 28 09:47:10 [dfmwatchdog: INFO]: [5108:0x500]: dbsrv11 up 1.1 days, mem = 1.15 GB, cpu = 25.2%, db = 1.71 GB, log = 14.6 MB

Feb 28 10:11:00 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 78.9 MB, cpu = 29.5%

Feb 28 10:11:48 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 88.8 MB, cpu = 26.7%

Feb 28 10:12:14 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 99.7 MB, cpu = 27.0%

Feb 28 10:12:30 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 113 MB, cpu = 28.8%

Feb 28 10:17:37 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 136 MB, cpu = 12.2%

Feb 28 10:17:53 [dfmwatchdog: INFO]: [5108:0x500]: dbsrv11 up 1.1 days, mem = 1.15 GB, cpu = 42.0%, db = 1.71 GB, log = 16.2 MB

Feb 28 10:18:08 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 104 MB, cpu = 17.0%

Feb 28 10:18:45 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 116 MB, cpu = 4.5%

Feb 28 10:19:54 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 129 MB, cpu = 5.8%

Feb 28 10:31:03 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 115 MB, cpu = 26.1%

Feb 28 10:33:47 [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.1 days, mem = 134 MB, cpu = 28.9%
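To back up the point that the server is not CPU- or memory-bound, the watchdog samples can be summarised per process. This Python sketch (the `summarize` helper is hypothetical) assumes the `cpu =` and `mem =` fields are per-process figures exactly as printed in the excerpt above, and converts GB to MB using a factor of 1024.

```python
import re
from collections import defaultdict
from statistics import mean

# Matches dfmwatchdog lines like the ones quoted from dfmmonitor.log, e.g.
# "... [dfmwatchdog: INFO]: [5108:0x500]: dfmmonitor up 1.0 days, mem = 142 MB, cpu = 27.0%"
WATCHDOG = re.compile(
    r"\[dfmwatchdog: INFO\]: \[[^\]]+\]: (?P<proc>\w+) up [\d.]+ days?, "
    r"mem = (?P<mem>[\d.]+) (?P<unit>MB|GB), cpu = (?P<cpu>[\d.]+)%"
)


def summarize(lines):
    """Return {process: (samples, mean_cpu_pct, max_cpu_pct, max_mem_mb)}."""
    cpu = defaultdict(list)
    mem_mb = defaultdict(list)
    for line in lines:
        m = WATCHDOG.search(line)
        if not m:
            continue  # not a watchdog sample line
        scale = 1024 if m["unit"] == "GB" else 1
        cpu[m["proc"]].append(float(m["cpu"]))
        mem_mb[m["proc"]].append(float(m["mem"]) * scale)
    return {
        proc: (len(vals), round(mean(vals), 1), max(vals), round(max(mem_mb[proc])))
        for proc, vals in cpu.items()
    }
```

Passing an open file object for dfmmonitor.log would cover the whole log rather than this excerpt. On the lines quoted above, dfmmonitor never exceeds 29.5% CPU and dbsrv11 peaks at 42.0%.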

Although the status of xmlQtreeTimestamp is showing Normal, the DFM graph is way out:


Hi

Do you monitor your appliances with SNMPv1 or SNMPv3?

I recommend using SNMPv3:

Set SNMPv3 as the preferred SNMP version, as this improves response times for SNMP communication between DFM and the storage systems.

----> https://kb.netapp.com/library/CUSTOMER/solutions/1013266_%20OC5_Oct17_2012.pdf

How to set it up:

https://communities.netapp.com/docs/DOC-9314

Regards

Thomas


I changed the monitoring to use SNMPv3 yesterday and left it overnight to see if it made a difference, but I'm still seeing large lags on the xmlQtreeTimestamp. The weird thing is that the timestamp is not consistent across the controllers, and it seems to be in sync with the userQuotaTimestamp.
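To put numbers on the lag and compare controllers, one option is to collect the times at which each controller's qtree data was actually sampled (for example by reading them off the qtree graphs; the thread does not say how to export them from DFM) and look for gaps longer than the configured interval. The Python sketch below uses hypothetical helpers `late_gaps` and `worst_gap_per_controller`, an assumed 5-minute tolerance, and made-up controller names and times purely for illustration.

```python
from datetime import datetime, timedelta


def late_gaps(samples, interval, tolerance=timedelta(minutes=5)):
    """Return (previous, current, gap) for every gap longer than interval + tolerance.

    `samples` holds the datetimes at which one controller's qtree data was
    sampled; it is sorted first, so input order does not matter.
    """
    ordered = sorted(samples)
    return [
        (prev, cur, cur - prev)
        for prev, cur in zip(ordered, ordered[1:])
        if cur - prev > interval + tolerance
    ]


def worst_gap_per_controller(per_controller, interval):
    """Map each controller to (number of late gaps, longest late gap)."""
    result = {}
    for controller, samples in per_controller.items():
        gaps = [gap for _, _, gap in late_gaps(samples, interval)]
        result[controller] = (len(gaps), max(gaps, default=timedelta(0)))
    return result


if __name__ == "__main__":
    def at(hhmm):
        hour, minute = map(int, hhmm.split(":"))
        return datetime(2013, 2, 28, hour, minute)

    # Hypothetical sample times for two hypothetical controllers.
    samples = {
        "filer-a": [at("06:41"), at("07:41"), at("08:41"), at("09:41")],
        "filer-b": [at("06:41"), at("09:55")],
    }
    report = worst_gap_per_controller(samples, timedelta(hours=1))
    for name, (count, worst) in report.items():
        print(f"{name}: {count} late gap(s), longest {worst}")
```

Comparing the per-controller results would show whether the lag is uneven across controllers, as described above, rather than a uniform delay.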

I'm sure something has changed in v5.1, as I don't recall this issue happening in 4.0.2. Application teams now contact us daily asking us to acknowledge a qtree alert: they have done housekeeping on the share but are still receiving the alert a few hours later.

Any help appreciated.


Hi

Just thought I would post an update on this issue. I've rolled back to DFM 4.0.2 on a VM and have not seen any delays in the qtree monitoring samples, so something must have changed in v5.1. Unless someone identifies the actual cause, I'm in no hurry to move to v5.1.