Hello all,

My name is Marcin and I work as a system administrator.

Recently my company bought two E-Series storage arrays: one for VMware cluster storage and another for the DR site (asynchronous replication). Hosts are connected to the storage via 16 Gb FC, and a 10 Gb/s iSCSI link is established between the arrays for asynchronous replication. We are now testing replication, and I'm a little concerned about the replication performance and the SANtricity performance charts.

For the tests I created one RAID 10 volume group from four 10k SAS disks, with one 800 GB volume on it that is assigned to the ESXi hosts (VMFS6 datastore). Jumbo frames (MTU 9000) are enabled on the iSCSI interfaces.

Please take a look at the iSCSI test results from SANtricity:

Connectivity test is normal.

Latency test is normal.

Controller A

Maximum allowed round trip time: 120 000 microseconds

Longest round trip time: 536 microseconds

Shortest round trip time: 329 microseconds

Average round trip time: 347 microseconds

Controller B

Maximum allowed round trip time: 120 000 microseconds

Longest round trip time: 472 microseconds

Shortest round trip time: 329 microseconds

Average round trip time: 344 microseconds

Bandwidth test is normal.

Controller A

Maximum bandwidth: 10 000 000 000 bits per second

Average bandwidth: 8 747 389 830 bits per second

Shortest bandwidth: 2 016 000 000 bits per second

Controller port speed: 10 Gbps

Controller B

Maximum bandwidth: 10 000 000 000 bits per second

Average bandwidth: 10 000 000 000 bits per second

Shortest bandwidth: 2 081 032 258 bits per second

Controller port speed: 10 Gbps

And my first synchronization:

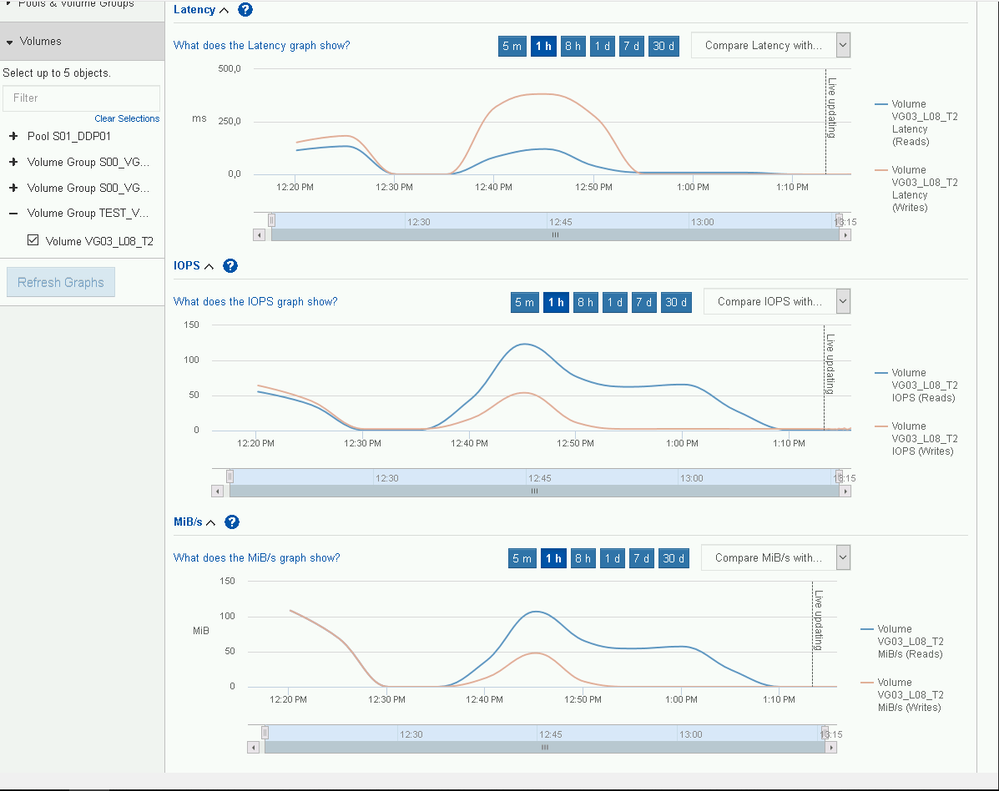

And a manual resync after some clone operations on the datastore (12:35-12:55: added a 20 GB eager-zeroed VMDK).

My thoughts:

Throughput is low (low IOPS). The test results are worse than I expected.

By a simple calculation I get about 500 IOPS from four 10k SAS drives at a 4 KB block size, which gives a throughput of about 2 MB/s.

As I read from the latency chart, the average IO size is 700 KB, so based on the IOPS chart (70) that gives about 50 MB/s of throughput, which matches the first manual resync charts.
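
To make the arithmetic above explicit, here is a minimal Python sketch. It assumes decimal units (1 MB = 1000 KB), and the helper name `throughput_mb_s` is only for illustration. The IOPS and IO size values are the rough figures quoted above, not exact measurements.

```python
def throughput_mb_s(iops: float, io_size_kb: float) -> float:
    """Throughput in MB/s from IOPS and average IO size in KB.

    Assumes decimal units: 1 MB = 1000 KB.
    """
    return iops * io_size_kb / 1000.0


# Rough estimate: about 500 IOPS from four 10k SAS drives at 4 KB blocks.
estimate = throughput_mb_s(500, 4)

# Manual resync: about 70 IOPS at about 700 KB average IO size (read from the charts).
resync = throughput_mb_s(70, 700)

print(f"4 KB estimate: {estimate:.1f} MB/s")
print(f"manual resync: {resync:.1f} MB/s")
```

With these inputs the estimate comes out at 2 MB/s and the resync at 49 MB/s, so the observed 50 MB/s is consistent with the chart values.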

But why, during the initial synchronization of the same volume, did I have:

higher latency (avg 120 ms)

more IOPS (250)

larger avg IO size (1000 KB)

better throughput (250 MB/s)

I don't understand.
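
For reference, the same kind of arithmetic for the initial synchronization, as a rough Python sketch. Two things here are assumptions and not something shown in SANtricity: the number of IOs in flight is estimated with Little's law (IOPS × latency), and the link capacity is the raw 10 Gb/s line rate with protocol overhead ignored. The helper names are hypothetical, and units are decimal (1 MB = 1000 KB).

```python
def throughput_mb_s(iops: float, io_size_kb: float) -> float:
    """Throughput in MB/s from IOPS and average IO size in KB (1 MB = 1000 KB)."""
    return iops * io_size_kb / 1000.0


def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Average number of IOs in flight, estimated with Little's law (assumption)."""
    return iops * latency_ms / 1000.0


# Initial synchronization: about 250 IOPS, about 1000 KB per IO, about 120 ms latency.
initial_sync = throughput_mb_s(250, 1000)
in_flight = outstanding_ios(250, 120)

# Raw line rate of the 10 Gb/s replication link, ignoring protocol overhead.
link_mb_s = 10_000_000_000 / 8 / 1_000_000
link_share = initial_sync / link_mb_s

print(f"initial sync: {initial_sync:.0f} MB/s")
print(f"estimated IOs in flight: {in_flight:.0f}")
print(f"share of raw link rate: {link_share:.0%}")
```

If these chart readings are right, the initial sync kept roughly 30 large IOs in flight and used about a fifth of the raw link rate. That would explain higher latency together with higher throughput, and it suggests the link itself was not the limit.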

Finally my questions:

What determines the IO size in a replication job?

Will it be the same (or nearly the same) if I add more disks to my volume group (lower latency -> more IOPS -> better throughput)?