EF & E-Series, SANtricity, and Related Plug-ins

Refreshing storage installation - NetApp e-2824 - questions about iSCSI performance

Hello everyone,

Around 5 years ago, I installed a SAN to serve as a repository for the VMs from 2 VMware servers.

It was my first time working with a SAN, so I had numerous issues (post below).

At the end of the installation, the performance was acceptable, so we decided to stick with that.

We used 1 Gb/s connections between the SAN (which had 10 Gb/s NICs), a switch, and the 2 VMware servers.

Our needs increased, we kept adding VMs to that system, and it started to get slow.

I decided to do an upgrade and added 2 x 10 Gb/s switches and 10 Gb/s NICs to the 2 VMware servers, so right now all pieces of equipment in that environment are connected at 10 Gb/s instead of 1 Gb/s.

I expected that this alone would give me not only HA (I added a separate path to each of those 2 switches) but also an increase in performance, but that hasn't happened and the performance seems about the same.

We're currently using active/passive in VMware NICs and DelayedAck is disabled.

Does anyone have any idea what else I can investigate, or whether there is any good practice that I may not be aware of?

Thanks,

Carlos

What seems to be missing from the other post (I skimmed through it) is a key detail: the E-Series storage configuration.

Presumably you have mostly random access (with VMware clients), so IOPS are important, which also means that if your disks are not SSDs, you need a lot of them to get the IOPS you need.

For example, with 10+ NL-SAS disks in RAID 6 you may get only 2-3K 4 kB IOPS.

If the SANtricity IOPS perf monitor shows 2-3K IOPS, then that's it, you're maxing out IOPS. At the same time, if you look at 10GigE bandwidth utilization you'll probably discover it's low (3000 IOPS x 4 kB = 12 MB/s).
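The IOPS-to-bandwidth arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the link rate is the raw line rate in decimal units, ignoring iSCSI/TCP overhead.

```python
def throughput_mb_s(iops: float, block_kb: float) -> float:
    """Bandwidth in MB/s produced by `iops` operations of `block_kb` kB each."""
    return iops * block_kb / 1000.0


def link_utilization(mb_s: float, link_gbit_s: float) -> float:
    """Fraction of a link's raw line rate used (no protocol overhead considered)."""
    link_mb_s = link_gbit_s * 1000.0 / 8.0  # 1 Gb/s is 125 MB/s
    return mb_s / link_mb_s


mb_s = throughput_mb_s(3000, 4)  # 3000 IOPS of 4 kB each
print(f"{mb_s:.0f} MB/s")
for gbit in (1, 10):
    print(f"{gbit} Gb/s link: {link_utilization(mb_s, gbit):.1%} of line rate")
```

At 12 MB/s even a 1 Gb/s link is under 10% busy, which is why moving to 10 Gb/s would not be expected to change much for a small-block random workload.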

It's not possible to add 2 SSDs to a disk group made of non-SSDs, so to increase IOPS you'd have the following choices:

- Add 2 SSDs as a new R1 disk group and turn them into read cache (this will help with reads as long as the write ratio is low, 10-15%; if write % is higher than that, it may not help)

- Add 2 SSDs as a new R1 disk group, create 1 or 2 volumes on it, create a new VMware DS, and move busy VMs to these disks

- Add 5 or more SSDs and create an R5 disk group to get more capacity and more performance (same as the bullet above, but you'd get more usable capacity due to the lower overhead of R5 compared to R1)

- Add more HDDs (I wouldn't recommend this if you have a lot of IOPS, it's cheaper to buy SSDs for IOPS)

You can also monitor the performance from VMware side. We also have a free way to provide detailed metrics using Grafana (https://github.com/netapp/eseries-perf-analyzer/; requires a Linux VM with Docker inside plus some Docker skills).

What else you can do: maybe check https://kb.netapp.com/Advice_and_Troubleshooting/Data_Storage_Systems/E-Series_Storage_Array/Performance_Degradation_with_Data_Assurance_enabled_Volum... - not sure if this applies to your array or not. It may help in marginal ways. If you have HDDs and are maxing out IOPS it probably won't help enough.

Hi @elementx, thank you so much for your answer.

Sorry for the late reply on this, I've been away for a long time, but I'm back now.

I believe you went straight to the point, which is the IOPS.

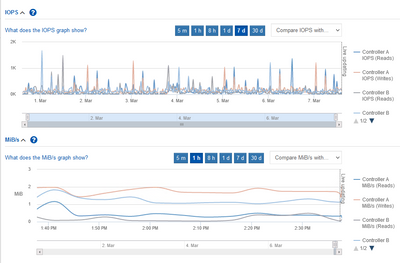

Here is what we have in terms of that:

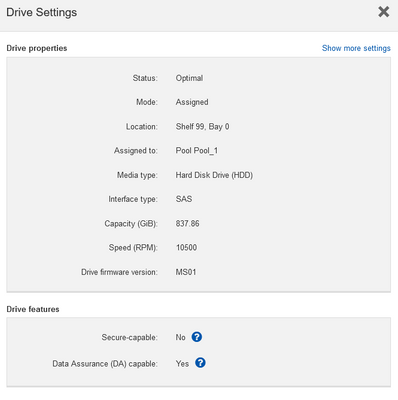

We have 24 of the drives below:

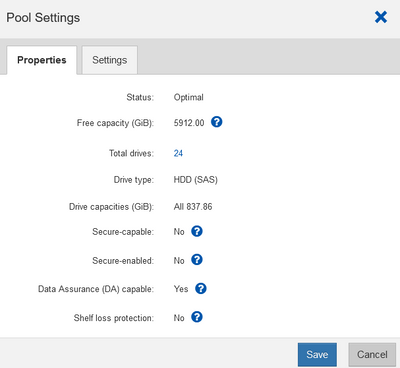

These are the pool properties:

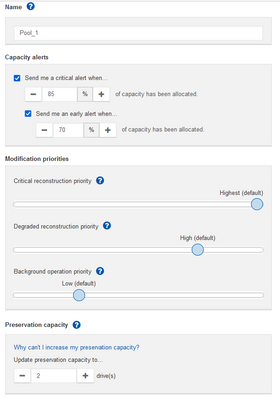

And Settings:

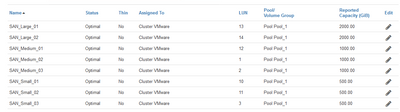

These are all volumes:

I created small, medium, and large volumes and spread the VMs across them.

Both reads and writes on my system are way lower than the values you mentioned above.

I already tried reaching out to NetApp to see how much it would cost to add SSDs, but the cost was prohibitive for us.

Do you think I can increase the IOPS just with configuration changes?

Thanks again,

Carlos

Hello Carlos,

Those are useful screenshots! The first shows the key info, which is that the workload is IOPS-dominated: low MB/s values. Similar note on IOPS vs. bandwidth:

A "back of the envelope" type of calculation might say 24 x 120 IOPS = 2880 IOPS, and yet you're seeing peaks of "only" 1000-1500. But as the link I just provided mentions, simply summing up the IOPS of all the spindles wouldn't be the right way to think about it in the context of RAID devices, and then also of storage arrays.

The bulk of it stems from the way RAID works. It seems you have DDP pools which are like RAID 6.

RAID 5 and RAID 6 have an overwrite penalty: when you want to modify a single block on a RAID stripe, you need to read the stripe first (e.g. from 10 disks, in a RAID 6 made of 8D+2P) and then write it back. Although the client modified just one small record, committing the stripe changes results in writes to 10 disks.

Usually there are more writes that can be combined, and RAID controllers implement various optimizations, but in any case these are known facts and random writes consume disproportionately more resources. That is why for databases and random IO in general you'll more often see RAID 5 recommended (lower, but still significant, write penalty).
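To make the write penalty concrete, here is a rough Python sketch using the common rule-of-thumb penalties (back-end I/Os per small random write: 2 for RAID 10, 4 for RAID 5, 6 for RAID 6). These figures, the 70/30 read/write mix, and treating DDP as RAID 6 are assumptions for illustration, not measurements from this array, and the sketch ignores controller cache and write coalescing.

```python
# Rule-of-thumb back-end I/Os per small random host write (assumed values).
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def host_iops(disks: int, iops_per_disk: float,
              read_fraction: float, penalty: int) -> float:
    """Estimate host-visible IOPS for a given read/write mix.

    Each host read costs one back-end I/O; each host write costs `penalty`.
    """
    raw = disks * iops_per_disk
    backend_per_host_io = read_fraction + (1.0 - read_fraction) * penalty
    return raw / backend_per_host_io


# 24 spindles at ~120 IOPS each, hypothetical 70% read / 30% write mix.
for level, penalty in WRITE_PENALTY.items():
    estimate = host_iops(24, 120, 0.7, penalty)
    print(f"{level}: ~{estimate:.0f} host IOPS")
```

Under these assumptions the RAID 6 estimate comes out at roughly 1150 host IOPS from 2880 raw spindle IOPS, which is in the same range as the observed peaks.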

In case you wonder "why do I have DDP if RAID 5 is better", the answer is that RAID 5 isn't better, it's just different, and there are reasons why DDP is more suitable for your use case and configuration. The main one is that with 24 disks you can't just put all of them in one RAID 5 group, and the optimal RAID 5 group size is 8+1, which means you'd end up with something worse if you used 24 disks here.

So, long story short, all this is as expected.

Additionally, notice how the first chart shows IOPS for a 7d period but MB/s for a 5 min period?

The IOPS are averaged, which means short spikes are probably higher than 1500, but you can no longer see them. If you kept the UI open with a 5 min moving window, you'd probably notice that short-term spikes are actually higher than they appear when down-sampled after several days.
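A small Python sketch of why averaging hides spikes. The sample values are invented for illustration, and the simple windowed mean is an assumption about down-sampling in general, not a description of how SANtricity stores its history.

```python
from statistics import mean


def downsample(samples: list, window: int) -> list:
    """Average consecutive, non-overlapping windows of `window` samples."""
    return [mean(samples[i:i + window]) for i in range(0, len(samples), window)]


# Hypothetical one-minute IOPS samples: steady 800 with one 5-minute burst of 2500.
samples = [800] * 60
samples[20:25] = [2500] * 5

hourly = downsample(samples, 60)
print("peak in 1-minute data:", max(samples))
print("peak after averaging to 1 hour:", round(max(hourly)))
```

The burst is obvious in the one-minute data but shrinks to a modest bump once the hour is averaged into a single point.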

What can you do now? Well, you can't get more IOPS than there are available - if we could do it we'd have done it by default.

Less destructive option: VMware (depending on version) has the ability to limit IOPS on VMs. You can identify the VMs with high IOPS (high-bandwidth VMs can probably be ignored, unless they are the same as the high-IOPS VMs) and do something to constrain their IOPS. This is low risk and done outside of E-Series, so maybe you can check with your reseller or try on your own. As far as I know, because it's a client-side thing, NetApp doesn't have its own recommendations on how to do this. Of course, the end result is that the constrained VM will be slower and less responsive (or even stall, if constrained too much), and outcomes depend not only on the VMware version but also on the guest OS and application, so I'd lower those IOPS a few percent at a time (from the maximum observed) and observe for a week. Lowering the number of CPUs or the RAM on selected guests is another approach, and similarly in the app (e.g. limit the RAM available to SQL Server, etc.). All of these impact application responsiveness and performance, as expected. Other options include general VMware best practices for the guest OS, maybe disabling antivirus scanners or excluding some directories from scanning, etc.

More destructive option: backup and restore fest. To change data protection from DDP to RAID 5 (for example), you would have to back up the VMs, maybe try to restore several to see that it works, remove the datastores from VMware, destroy the E-Series volumes, destroy the DDP, create the new RAID config and then volumes and VMware datastores, followed by a VM restore.

What would be a "faster" configuration? RAID 10 is fastest, but you'd lose a lot of capacity, so it's probably not an option. RAID 5 is the next option that can help you squeeze a bit more out of those disks (maybe 10-20%? Is it worth the trouble and risk? I don't know). As indicated earlier, you won't do better if you don't create optimal RAID 5 groups, so what you could do is 2 groups of 8+1 (18 disks). Of the rest (24-18=6), one would probably have to be a global hot spare (assuming your SANtricity is not ancient), and then you have 5 disks left, so maybe do a RAID 10 from 4 of them?
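As a sanity check on the disk count for a layout like this (two 8+1 RAID 5 groups, one global hot spare, one 4-disk RAID 10), here is a minimal Python sketch. The group names and the `Group` helper are hypothetical, and it counts disks only, not actual usable capacity.

```python
from dataclasses import dataclass


@dataclass
class Group:
    name: str
    disks: int       # total disks in the group
    data_disks: int  # disks' worth of usable capacity


def summarize(total_disks: int, groups: list, hot_spares: int) -> dict:
    """Count data disks and disks left unassigned by a proposed layout."""
    used = sum(g.disks for g in groups) + hot_spares
    return {
        "data_disks": sum(g.data_disks for g in groups),
        "unassigned": total_disks - used,
    }


layout = [
    Group("RAID 5 (8+1) A", disks=9, data_disks=8),
    Group("RAID 5 (8+1) B", disks=9, data_disks=8),
    Group("RAID 10 (2+2)", disks=4, data_disks=2),
]
summary = summarize(24, layout, hot_spares=1)
print(summary)
```

By this count the layout yields 18 disks' worth of data capacity and leaves one disk unassigned, which could, for example, serve as a second hot spare.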

So with that you'd get 3 little "RAID islands" (which is why most customers prefer one DDP island) and lose quite a bit of capacity. You'd also need to know which VMs go where: unlike the current DDP setup, where any VM on any datastore gets IOPS from all 24 disks, here a VM can't go beyond its RAID group. So you need to be very aware of what each VM does when you "micro-manage" RAID groups, otherwise you may need to do frequent Storage vMotion-ing, which can create a lot of workload of its own... This is why DDP is the easy and best overall option in cases like yours: no big downsides, just a small-to-moderate sacrifice in IOPS.

But, assuming you have detailed insights into what each VM does, you could put the busiest VM(s) on RAID 10 and the rest on volumes on the two RAID 5 groups.

It's hard to say how much this would help, but at least it would insulate busy from not busy VMs. The busy VM on a DS on a volume on the RAID 10 group would make only those disks busy, while the rest would be responsive.

Considering the complexity (need to analyze and monitor performance, maybe do Storage vMotion exercises over weekends?) and the potential risks of deleting and restoring VMs, I'd probably try the less destructive approach first. The RAID-group approach is time-consuming, and finding a better layout than DDP may cost you more time (and trouble) than SSDs, so normally people use it for larger databases (where there's just 1 thing going on) and put transaction logs on RAID 10, tables on RAID 5, etc. For VMs, where there's a bunch of them and maybe even churn, it's hard, and you may have all VMs doing lots of IOPS at different times...

Hi elementx, that was a great explanation!

Thanks for that.

When we decided to go for the SAN, that was part of a broader project which would also add VMware with HA and vMotion capability, so we wouldn't need to worry when one of our VMware servers fails.

Unfortunately, this project hasn't been finished and we got stuck with the SAN and 2 VMware servers using that.

I reached out to NetApp to ask about the cost of replacing 6 spinning disks with SSDs, so that I could break up the current pool and have 2 instead: one with 6 SSDs for the higher-intensity VMs, and the other with the remaining disks.

NetApp was always more interested in either selling a new SAN or adding an expansion, which would cost 2 to 4 times the price we paid for the current SAN.

From my perspective, adding those drives should cost less than half of the price we paid for the SAN.

Then, since replacing drives was too expensive, I decided to upgrade the iSCSI infrastructure from 1 Gb/s to 10 Gb/s to see if that would boost the performance a bit, which hasn't really happened.

Now, when we were renewing the support contract of that SAN, we were surprised with the news that they'll renew it only up to the end of the year, because our SAN is reaching the EoL.

At this point, with just 2 VMware servers using the SAN and no VMware HA or vMotion, I'm asking myself if it's worth buying another SAN.

If so, I'd try to buy one with SSDs if the price is not too high, or at least a hybrid one.

Another option would be to add a huge amount of SSD drives on the current servers and have the VMs locally.

Then, if anything happens with one of the servers, restore the backups of the VMs on the other one.

We have enough RAM on each server to run all the VMs, so that approach would work too, but recovery would take more time.

However, that would be a simpler setup, which is not totally bad.

I understand your point. As a NetApp employee I can't comment on sales-related questions, sorry about that!

Your point about 10G iSCSI reminded me of this NVA, which I like because it shows that iSCSI (25G with EF280, in this particular case) is a very decent option if your iSCSI LAN switch is good.

https://www.netapp.com/pdf.html?item=/media/21016-nva-1152-design.pdf

If you have unused capacity you could "shrink" DDP by two disks.

Then add two SSDs in RAID1, and you'll get plenty of IOPS. If your busy (high IOPS) VMs are a few hundred GB, you could move them to this RAID1 even with the small SSDs, and very likely solve your IOPS problem (assuming that 1500 IOPS isn't enough, but 10x that would be).

As an example (on E2824; your array may be older), just 2 SSDs in RAID1 can sustain 18K IOPS (4kB 70/30 r/w).

And for E2824 you can get SSDs as small as 800 GB (that I can see in the internal E-Series sizer), so 2 x 800 GB in R1 gives you close to 800 GB usable.
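To get a feel for which busy VMs would fit on such a mirrored SSD pair, a quick Python sketch follows. The VM names, sizes, and IOPS figures are entirely hypothetical, and the 800 GB figure treats the mirrored pair as holding one disk's worth of data; real usable capacity will be somewhat lower.

```python
def vms_that_fit(vms: list, capacity_gb: float) -> tuple:
    """Greedy pick: take VMs in order of IOPS (busiest first) while they fit."""
    chosen, free = [], capacity_gb
    for name, size_gb, iops in sorted(vms, key=lambda v: v[2], reverse=True):
        if size_gb <= free:
            chosen.append(name)
            free -= size_gb
    return chosen, free


# (name, size in GB, observed peak IOPS), all made-up example values.
vms = [
    ("sql01", 300, 900),
    ("file01", 600, 150),
    ("app01", 200, 400),
    ("web01", 100, 50),
]

# A RAID 1 pair of 800 GB SSDs stores one copy's worth of data.
chosen, free = vms_that_fit(vms, capacity_gb=800)
print("move to SSD:", chosen, "| free GB left:", free)
```

Busiest-first is just one simple heuristic; in practice you would also leave headroom for growth and snapshots.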

Another option - if you get 2 SSDs in R1 - is to set these SSDs to act as read cache for the entire DDP. This won't work well if read ratio isn't high (e.g. 90%), so it's probably less interesting, unless the busy VM is a monster VM that can't fit in two SSDs and IOPS peaks are >90% read (doesn't look that way from the charts).

Also check the TR for general things related to DDP. This reminded me: if you can, consider upgrading SANtricity and all f/w before your support expires. In case you're running some very old version, it's best to get it updated and stable before it expires:

https://www.netapp.com/pdf.html?item=/media/12421-tr4652.pdf

I just noticed you mentioned E2824 in the subject, so the SSD R1 performance estimate would be accurate for your array.

As the workload increases, the disks are likely to be the next component to cause slowness. We would need to check some performance data from the system to judge the source of the slowness and whether any optimizations are suitable for the system.

I suggest opening a support case to review the system. If AutoSupport is enabled, the serial number or name of the system would help.