Re: ifgrp favor equivalent for singlemode ifgrp?

Is there an equivalent of ifgrp favor in clustered ONTAP? I must be missing it somewhere, but network port ifgrp does not have a favor subcommand.

Hi Scott,

You have not missed anything. There is no equivalent command in clustered ONTAP. The workaround would be to set up a LIF failover group with the preferred port as the LIF's home port.

This isn't a workaround, since we can't fail over to a port inside the single-mode ifgrp.

For example:

Ifgrp a0a, single mode, with ports e2b/e3b on node1

Ifgrp a1a, single mode, with ports e2b/e3b on node2

Then we have two LIFs: one homed on a0a and the second homed on a1a.

The goal is to move the active port to e3b and back to e2b within the ifgrp. We can administratively down a port to force the other to pick up, but we would prefer a favor/nofavor method like in 7-Mode. Are there any future plans for this?

Thank you.
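
To make the difference between the two approaches concrete, here is a minimal Python sketch. It is a toy model under stated assumptions, not ONTAP code: the class name `SingleModeIfgrp` and its methods are hypothetical, and the election rule (a favored port wins whenever its link is up, otherwise the active port only changes when it loses link) is an assumption made for illustration.

```python
class SingleModeIfgrp:
    """Toy model of a single-mode ifgrp: one active port, optional favored port."""

    def __init__(self, ports):
        self.link_up = {port: True for port in ports}
        self.favored = None
        self.active = ports[0]

    def _elect(self):
        # Assumption: a favored port becomes active whenever its link is up.
        if self.favored is not None and self.link_up[self.favored]:
            self.active = self.favored
        elif self.active is None or not self.link_up[self.active]:
            # Otherwise the active port only changes when it has lost link.
            healthy = [p for p, up in self.link_up.items() if up]
            self.active = healthy[0] if healthy else None

    def favor(self, port):
        self.favored = port
        self._elect()

    def set_link(self, port, up):
        self.link_up[port] = up
        self._elect()


# Favor-style switch: the standby port stays up the whole time.
ifgrp = SingleModeIfgrp(["e2b", "e3b"])
ifgrp.favor("e3b")
ifgrp.favor("e2b")

# Admin-down style switch: e2b is unavailable as a standby while it is down,
# and bringing it back up does not by itself move traffic back.
forced = SingleModeIfgrp(["e2b", "e3b"])
forced.set_link("e2b", False)
forced.set_link("e2b", True)
```

In this model the favor approach never removes redundancy, whereas the admin-down approach leaves the ifgrp with a single usable port until the link is re-enabled.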

Hi Scott,

Apologies that it has taken a while to get back to you on this.

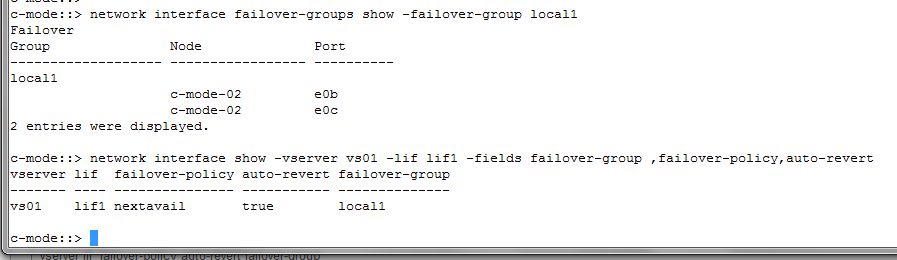

It is possible to achieve your goal with LIF failover groups and 'auto-revert'. Referring to your example, I would create two LIF failover groups, each containing the two network ports that are local to one node. Each LIF would be assigned to its node's failover group, with its failover policy set to "nextavail" and auto-revert enabled so that it automatically returns to its home port. An example....

With this configuration the LIF has a home port (the favoured port, say e0b). If e0b were to go down, the LIF would fail over to e0c. When the link to e0b was restored, the LIF would automatically revert to its home port.

Does this help?
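
The behaviour described above (home port, fail over to the next available port, then auto-revert) can be sketched in a few lines of Python. This is a simplified model for illustration only, not ONTAP code: the `Lif` class, its `update` method and the `link_up` map are hypothetical names, and the "first healthy port in list order" rule is an assumption about how a nextavail-style policy picks a target.

```python
class Lif:
    """Toy model of a LIF: a home port, an ordered failover list, auto-revert."""

    def __init__(self, home_port, failover_ports, auto_revert=True):
        self.home_port = home_port
        self.failover_ports = list(failover_ports)
        self.auto_revert = auto_revert
        self.current_port = home_port

    def update(self, link_up):
        """Re-place the LIF for a {port: is_up} map and return its current port."""
        if self.auto_revert and link_up.get(self.home_port, False):
            # Home port is healthy (again): go back to it.
            self.current_port = self.home_port
        elif not link_up.get(self.current_port, False):
            # Current port lost link: take the first healthy port in list order.
            for port in self.failover_ports:
                if link_up.get(port, False):
                    self.current_port = port
                    break
        return self.current_port


lif = Lif(home_port="e0b", failover_ports=["e0b", "e0c"])
lif.update({"e0b": False, "e0c": True})   # e0b fails, LIF moves to e0c
lif.update({"e0b": True, "e0c": True})    # e0b returns, LIF reverts home
```

With `auto_revert=False` the same sequence leaves the LIF on e0c after the link returns, which is why auto-revert is the piece that makes the home port behave like a favored port.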

That does help. The failover group would also need ports from the other node in case of node failure, but it sounds like it can be done with a group per node that lists the local ports first. It is just more maintenance and more failover groups. I would still prefer an ifgrp favor for equivalent functionality, since single-mode ifgrps are supported but wouldn't be used with this workaround.

Will pass the message along.

Thank you. Your posts are very helpful and make our job a lot easier.

I know this conversation is a little old, but I ran into this while working with a customer who wanted to use single mode and favor. The Network Management Guide (confirmed in both the 8.1.1 and 8.2 docs) states the following on pg 21:

"In a single-mode interface group, you can select the active port or designate a port as nonfavored by executing the ifgrp command from the nodeshell"

I was able to do so. We ran the favor command, i.e. "ifgrp favor e0b", and it showed up under the flags in the ifgrp status output. The issue is that the setting did not persist across a reboot (as expected), and inserting it into the local rc file didn't work either.

So favor does exist. Now the question is: how do you make it persistent across reboots?

Also, if using the workaround above, which seems like a reasonable option, what are the negatives in your opinion?

Thanks,

John

We decided against single-mode ifgrps. With cDOT we may as well keep all ports active and let LIF failover groups handle the ports. Same end result, but active/active on all ports.

I hear you but it is still documented as an option...

Good point. And no way to run the command on boot like you said.

Hi

I want to bring this topic back up.

I need to configure a single-mode ifgrp with the favor option. I can explain why I can't do it with LIF failover: the main problem is with 22x0 systems when a 10G port is used for data for iSCSI and NFS. This is a separate topic and needs more explanation of why a single-mode ifgrp is the only option, IMHO.

I think a good solution is to use single mode with 10G active/1G passive, and I need to make sure 10G is always active. There should at least be an RC file where the ifgrp favor command can be put...

Or can we please put ifgrp favor on the wishlist for the next Cmode release...

Nick

With failover groups you can keep 10Gb and 1Gb separated and still keep the LIFs from crossing card types. What is the configuration of the LIFs? We can lay out a failover policy to make it work without ifgrps.

Also, the 10Gb ports should be your cluster ports, leaving only 1Gb for data. Where are the cluster ports?

Thanks for the reply.

1. A comment on your initial post. There is still no mechanism to set priorities on ports for LIF failover. Yes, you can set a home port and auto-revert, but if you have two 10G ports and two 1G ports, the LIF will fail over to an unpredictable port when a 10G port fails. Yes, you can set a failover group to fail over to 10G ports only, but you can't set a policy to fail over to the 10G ports first and then to the two 1G ports.

Another workaround I found is to use automatic migration of the LIF depending on load. If clients need more throughput, it migrates the LIF to another port.

2. I use a FAS2240A with two 10G ports on each controller. Starting from ONTAP 8.2 it is possible to use one 10G port for the cluster interconnect and one 10G port for data in a switchless configuration. So I want to use 10G for data and protect myself from any single failure. I want to use both NFS and iSCSI.

The easy solution could be to use a single failover group that includes 10G ports only, with 1 iSCSI LIF per 10G port. The only problem is that if the 10G mezzanine NIC fails in a FAS controller (both ports down), the master (CLAM) of this two-node cluster takes over the second controller, and if the master was the controller with the failed NIC, I lose all 10G ports. (Checked in lab.)

That means I need to include at least one 1G port on each controller in the failover group. That's OK. It can still work without a single-mode ifgrp.

The problem is with iSCSI. iSCSI LIFs can't migrate using a failover group. And if I create iSCSI LIFs on both the 10G ports and the 1G ports, then Round Robin will balance traffic across the 10G and 1G optimized paths, giving me 2Gbps at most. Setting the policy to FixedPath/Weighted Paths makes the whole thing manual...

If I use a 10G active/1G passive single-mode ifgrp and create the iSCSI LIFs on this ifgrp, that resolves the problem. That's why I want to use ifgrp favor to make 10G active.
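
The 2Gbps ceiling mentioned above can be reproduced with a little arithmetic. The Python sketch below is an idealised model, not a measurement: it assumes Round Robin issues the same amount of I/O on every path, so the slowest path saturates first and caps the total. The function name and the example path lists are illustrative.

```python
def round_robin_ceiling_gbps(path_speeds_gbps):
    """Idealised aggregate ceiling when I/O is split evenly across all paths.

    With an even split, every path carries the same share, so the total
    cannot exceed (number of paths) x (speed of the slowest path).
    """
    if not path_speeds_gbps:
        return 0
    return len(path_speeds_gbps) * min(path_speeds_gbps)


# One 10G path plus one 1G path, both active/optimized (assumed example).
mixed = round_robin_ceiling_gbps([10, 1])
# A LIF on a 10G-active/1G-passive ifgrp exposes a single path to the host.
single = round_robin_ceiling_gbps([10])
print(mixed, single)
```

Under these assumptions the mixed case evaluates to 2 and the single-path case to 10, which is the reason for hiding the 1G port behind a single-mode ifgrp instead of exposing it as a second iSCSI path.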

There are several workarounds for this scenario:

a. Controllers do not reboot frequently, so I can afford to set favor manually.

b. I can use 1G links for iSCSI with Round Robin, and 10G for NFS, because I don't see throughput above 2Gbps in production and iSCSI RR can balance traffic (unlike NFS). But it sounds like "buy a Porsche and don't go faster than 90km/h".

c. The FAS6240 has enough ports. Upgrade...

Sorry for the long post.

Nick

For #1, put your 10G ports in a single-mode ifgrp and then use failover groups.

Interesting, and it does make a good use case when the goal is to mix ports of different speeds active/passive. The default failover policy is nextavail, and I saw there is a priority option… but I need to look at whether we have a way to set priority on the interfaces in the failover group.

On failover, did you notice that the second 10Gb port was not used for data anymore?

Dedicating iSCSI to 1Gb sounds like a good option. Even though it is a slower Porsche, all ports are active, and the 2240 won’t push all ports anyway.

lasswellt: That is exactly what I did. Now I want to make sure 10G is always active, i.e. ifgrp favor.

I noticed that even without the favor flag it tries to use 10G ports first. I.e. pull out the 10G cable, then plug it back in... the system will switch back to 10G.

Scott, personally I use "priority" as the default policy. I think there is currently no difference between nextavail and priority, because you can't set priority on interfaces...

Mixing 10G and 1G ports in an ifgrp is a budget solution for when we have limited 10G ports and can't use LACP: we hope 10G will never fail, but we still have the 1G backup.

I drew a diagram explaining why I need 1G ports as backup in a FAS22x0 configuration in case of 10G NIC failure.

See below:

Nick

Don't mix them. cDOT is not 7-Mode. Use two ifgrps, one with 10G and one with 1G, with a custom failover group. You should never use the default failover groups anyway, as you could end up with LIFs failing over to ports on incorrect VLANs.

Failover groups set the priority. Then auto-revert moves the LIF back home when it is available.

Good point. He could use different failover groups, all with the same ports but in a different order for nextavail. Agreed it's not ideal, though, and completely agreed that I would put 10G in one group separate from 1G.

I was talking about the default failover policy (nextavail), not the failover group. Its differences from the "priority" failover policy are unknown. As I understand it, there is no way to specify the order for failover?!

I cannot exclude the 1G ports from the failover group, because I would lose the LIFs if the 10G NIC fails (see diagram).

Anyway, NFS/CIFS is not the biggest problem. The problem is with iSCSI and Round Robin.

I think a 10G active + 1G passive single-mode ifgrp with the iSCSI and NFS VLANs on it is the best solution. It looks like it favors the 10G port by default, but I can't confirm why.

Both ports go to the same switch if possible. Then, in case of switch failure, I will still use 10G for data via the second controller and the cluster interconnect.

Cmode::> network port ifgrp create -node Cmode-04 -ifgrp a0a -distr-func sequential -mode singlemode // -distr-func has no effect in singlemode, but it must be specified

Cmode::> network port ifgrp add-port -node Cmode-04 -ifgrp a0a -port e1b // 10G port

Cmode::> network port ifgrp add-port -node Cmode-04 -ifgrp a0a -port e0a // 1G port for backup in case of 10G NIC failure

Cmode::> network port vlan create -node Cmode-04 -vlan-name a0a-200 // NFS

Cmode::> network port vlan create -node Cmode-04 -vlan-name a0a-201 // iSCSI Fabric A

Cmode::> network port vlan create -node Cmode-04 -vlan-name a0a-202 // iSCSI Fabric B

The failover group for NFS will include the a0a-200 ports (VLANs) on both controllers (nodes).

What do you think?

Nick

P.S. I wish I could contact Mike Worthen, the author of Cmode Networking best practice TR and discuss this with him.

Hi,

I have a similar setup, because I was sort of thinking in "7-Mode" when I ordered my network connections. It doesn't involve my 10G ports, but 3 ports on each controller: 2 are in an EtherChannel/port-channel config and one is a standalone connection. The home port is the EtherChannel link, and the next priority is the single link on each controller. I did this by using a hidden command to set priorities, with the home port already having priority 0. You can find more here:

https://library.netapp.com/ecmdocs/ECMP1196817/html/network/interface/failover/create.html

So there is actually a way to do it without creating per-controller failover groups. You can put everything in a single failover group and then set up a policy per LIF to fail over in a predetermined order of priority.
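
The idea of a per-LIF priority list can be sketched as follows. This Python snippet only models the selection logic and is not the ONTAP command from the link above: the function name, the rule tuples and the port names (a0a for the port channel, e0c for the standalone link) are hypothetical, and "lowest priority number wins" is an assumption based on the home port having priority 0.

```python
def pick_failover_target(rules, link_up):
    """Return the (node, port) of the healthy rule with the lowest priority number.

    rules   : iterable of (priority, node, port) tuples for one LIF
    link_up : dict mapping (node, port) to True/False
    """
    for _priority, node, port in sorted(rules):
        if link_up.get((node, port), False):
            return (node, port)
    return None  # no healthy target left


# Hypothetical rules for one LIF: port channel first, then the single link,
# then the same order on the partner node.
rules = [
    (0, "node1", "a0a"),
    (1, "node1", "e0c"),
    (2, "node2", "a0a"),
    (3, "node2", "e0c"),
]

all_up = {(n, p): True for _, n, p in rules}
channel_down = dict(all_up)
channel_down[("node1", "a0a")] = False

normal = pick_failover_target(rules, all_up)
degraded = pick_failover_target(rules, channel_down)
```

Here `normal` resolves to the home port on node1 and `degraded` to node1's standalone link, so a single list per LIF gives the "10G first, then 1G" style of ordering discussed earlier in the thread.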