SolidFire and HCI

How to fix the Jumboframe problem when I am deploying on H410C NDE for 2+2 node

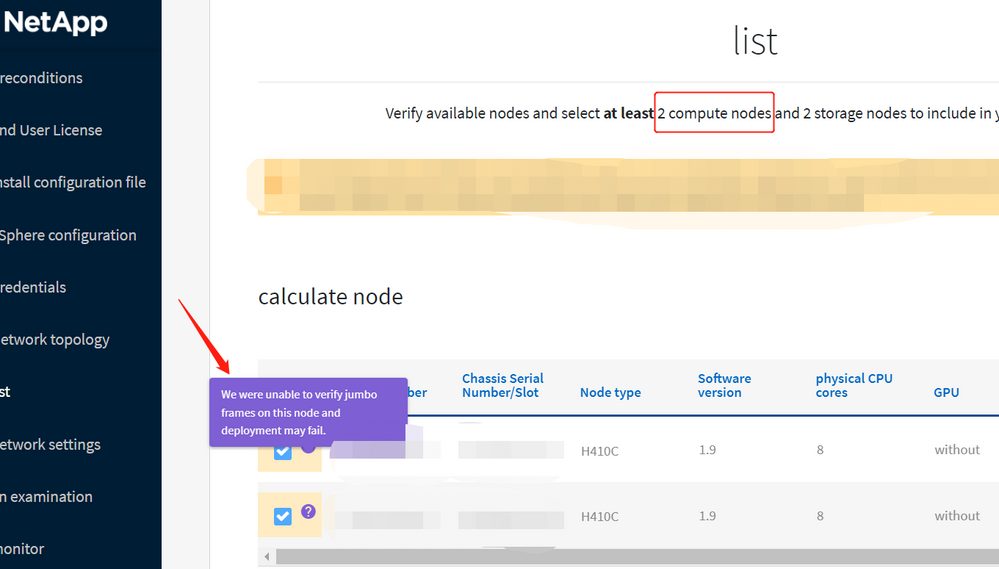

I enabled jumbo frames on Switch 1, which connects directly to the compute node, but NDE still reports a jumbo frame problem.

What is causing this?

My simple setup is shown in the attached image.

Thank you!

iSCSI client and server should be able to communicate on the same VLAN. It's not clear from your post if your compute network allows VLAN 112 and if those interfaces came up with MTU 9000.

If the NetApp Deployment Engine fails because your network does not support jumbo frames, you can perform one of the following workarounds:

Use a static IP address and manually set a maximum transmission unit (MTU) of 9000 bytes on the Bond10G network.

Configure the Dynamic Host Configuration Protocol to advertise an interface MTU of 9000 bytes on the Bond10G network.

You can monitor port traffic on the iSCSI ports of the storage nodes to see whether traffic is arriving from the compute nodes' iSCSI interfaces and whether the VLAN and MTU are correct.
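For the DHCP workaround above, the relevant setting is DHCP option 26 (Interface MTU, RFC 2132). The sketch below only illustrates how that option is laid out on the wire so you can recognize it in a packet capture; the function names are made up for this example, and how you enable the option depends on your DHCP server.

```python
import struct

DHCP_OPT_INTERFACE_MTU = 26  # option code defined in RFC 2132


def encode_interface_mtu(mtu: int) -> bytes:
    """Encode DHCP option 26 as code, length, 16-bit big-endian MTU."""
    if not 68 <= mtu <= 0xFFFF:
        raise ValueError("interface MTU must be between 68 and 65535")
    return struct.pack("!BBH", DHCP_OPT_INTERFACE_MTU, 2, mtu)


def decode_interface_mtu(option: bytes) -> int:
    """Decode a 4-byte DHCP option 26 back into the MTU value."""
    code, length, mtu = struct.unpack("!BBH", option)
    if code != DHCP_OPT_INTERFACE_MTU or length != 2:
        raise ValueError("not an interface MTU option")
    return mtu


encoded = encode_interface_mtu(9000)
print(encoded.hex())                  # 1a022328
print(decode_interface_mtu(encoded))  # 9000
```

If a capture of the DHCP offer on the Bond10G network does not contain these bytes, the server is probably not advertising the MTU.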

On switch 1, the two ports connected to the compute node are trunk ports that also carry another VLAN, but the other two ports, which connect to the storage ports, are access ports on VLAN 112 only.

Here is more information. The log shown while deploying with NDE is:

HCI-T00022-JUMBO-PING-10.1.10.11:0 | fabrixtools:1062 | WARNING | Failed to Jumbo Ping 10.1.10.1: with errors: 10.1.10.11 failed to respond to a jumbo frame ICMP request - exhausted all retries

So the ping with MTU 9000 can't get through.

Maybe the VLANs of the source and destination are different, maybe jumbo packets can't pass through, maybe one of the interfaces isn't up, etc.

NDE cannot know why it doesn't work, so you need to perform network troubleshooting and validation, then retry.
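One way to validate this by hand is a ping with the "don't fragment" bit set and a payload sized to fill a 9000-byte MTU. The sketch below just builds that command; it assumes a Linux shell with the iputils `ping` (`-M do`, `-s`, `-c`), which may not match the shell you have on the node, and the helper names are made up for this example.

```python
IP_HEADER_BYTES = 20    # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo request header


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet."""
    return mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES


def jumbo_ping_command(target: str, mtu: int = 9000, count: int = 3) -> list:
    """Build an iputils ping command that forbids fragmentation (-M do)."""
    payload = icmp_payload_for_mtu(mtu)
    return ["ping", "-M", "do", "-s", str(payload), "-c", str(count), target]


# Target IP taken from the NDE log line in this thread.
print(" ".join(jumbo_ping_command("10.1.10.11")))
# ping -M do -s 8972 -c 3 10.1.10.11
```

If this fails while the same command with a small payload (for example `-s 1472`) succeeds, some hop between the two interfaces is not passing jumbo frames.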

Correction to my previous post. The log shown while deploying with NDE is:

HCI-T00022-JUMBO-PING-10.1.10.11:0 | fabrixtools:1062 | WARNING | Failed to Jumbo Ping 10.1.10.11: with errors: 10.1.10.11 failed to respond to a jumbo frame ICMP request - exhausted all retries
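If there are many of these warnings, a small script can pull out which addresses failed the jumbo ping. This is only a sketch based on the single log line quoted above; the field names (`task`, `source`, `level`) are my own labels, not NDE's, and other log lines may be shaped differently.

```python
import re

LINE = (
    "HCI-T00022-JUMBO-PING-10.1.10.11:0 | fabrixtools:1062 | WARNING | "
    "Failed to Jumbo Ping 10.1.10.11: with errors: 10.1.10.11 failed to "
    "respond to a jumbo frame ICMP request - exhausted all retries"
)


def parse_jumbo_ping_warning(line: str) -> dict:
    """Split the pipe-separated line and extract the IP that failed."""
    task, source, level, message = [p.strip() for p in line.split("|", 3)]
    match = re.search(r"Failed to Jumbo Ping (\d+\.\d+\.\d+\.\d+)", message)
    return {
        "task": task,
        "source": source,
        "level": level,
        "target": match.group(1) if match else None,
    }


parsed = parse_jumbo_ping_warning(LINE)
print(parsed["level"], parsed["target"])
```

The extracted target can then be compared against the iSCSI and management IPs you assigned to each node.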

You didn't note what your storage node's IP address is. NDE runs on a storage node.

My first reply contains a suggested workaround, which is to set the IPs manually. The second is to log in via SSH and see how pings with jumbo frames travel (over L2 or via the Mgmt gateway); that is also recommended in the KB.

There are other workarounds in the KB, such as the one related to routing and network separation between iSCSI and Mgmt.
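To reason about whether a jumbo ping stays on L2 or gets routed through a gateway, you can check whether the target is in the same subnet as the source interface. A minimal sketch follows; the source addresses and the /24 prefix are hypothetical, since the storage node's IP and netmask were not given in this thread.

```python
import ipaddress


def is_on_link(source_interface: str, target: str) -> bool:
    """True if target is in the source interface's subnet (no gateway hop)."""
    iface = ipaddress.ip_interface(source_interface)
    return ipaddress.ip_address(target) in iface.network


# Hypothetical storage-node addresses; 10.1.10.11 is the target from the log.
print(is_on_link("10.1.10.21/24", "10.1.10.11"))  # True: same subnet, pure L2
print(is_on_link("10.1.20.21/24", "10.1.10.11"))  # False: goes via a gateway
```

When the result is False, every routed hop in between also has to pass 9000-byte frames, which is one reason separating the iSCSI and Mgmt networks matters here.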