General Discussion

Re: Unable to access CIFS Share after creation

I'm using clustered Data ONTAP 8.2.

I have just created a volume, then a CIFS share with the NTFS security style. I also created a qtree.

I can't access the share. Please help.

---

There are a lot of gaps and too much missing information to provide you with a solution. The best approach is to make sure you fulfill all the requirements during the process.

I'd start with:

Clustered Data ONTAP® 8.2

CIFS/SMB Server Configuration Express Guide

https://library.netapp.com/ecm/ecm_download_file/ECMP1287609

Also:

Clustered Data ONTAP® 8.2

File Access Management Guide for CIFS

https://library.netapp.com/ecm/ecm_download_file/ECMP1366834

---

Also, as a friendly reminder: if you are not covered by the regular support entitlements, it's OK to ask here, but upgrading to ONTAP 9 would be best, as 8.2 and 8.3 have been in self-service support since this year:

Software Version Support

---

What is the security style of the SVM root volume?

---

You configure security styles on FlexVol volumes and qtrees to determine the type of permissions Data ONTAP uses to control access and what client type can modify these permissions.

You configure the storage virtual machine (SVM) root volume security style to determine the type of permissions used for data on the root volume of the SVM.

Because your SVM root volume is the junction point for the data volumes, you should set its security style to match theirs. NTFS should be selected if you are using SMB only.
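The rule about which client type may modify permissions under each security style, together with the advice to keep the root volume consistent with the data volumes, can be summarized in a small sketch. This is a simplified Python model, not ONTAP code; it covers only the ntfs, unix and mixed styles, and the function and volume names are hypothetical.

```python
from typing import Dict, List

# Which client type may change permissions under each security style
# (simplified summary; effective access checks are not modelled here).
MODIFIABLE_BY: Dict[str, set] = {
    "ntfs": {"cifs"},
    "unix": {"nfs"},
    "mixed": {"cifs", "nfs"},
}


def can_modify(style: str, client_type: str) -> bool:
    """True if a client of this type may change permissions under this style."""
    return client_type in MODIFIABLE_BY.get(style, set())


def style_mismatches(root_style: str, data_styles: Dict[str, str]) -> List[str]:
    """Return data volumes whose security style differs from the SVM root volume."""
    return sorted(vol for vol, style in data_styles.items() if style != root_style)


# Hypothetical SVM: root volume is NTFS, one data volume was created as UNIX.
print(style_mismatches("ntfs", {"vol_a": "ntfs", "vol_b": "unix"}))
```

In an SMB-only SVM, an empty mismatch list is what you would hope to see.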

---

The security style of the SVM root volume is NTFS.

---

There are currently six other volumes and shares in the same SVM. The newest volume and its associated share are the ones where we receive the error "Windows cannot access \\sharename\volumename" when trying to access them via Windows Explorer.

As previously said, the security style of this newly created share is NTFS, the same as on the SVM root volume.

Surprisingly, when we create the same share under one of the existing volumes, we can access it without any problem.

---

Is this the same aggregate as the other volumes, or a different one? If it's a different aggregate, what's different about it? NSE disks?

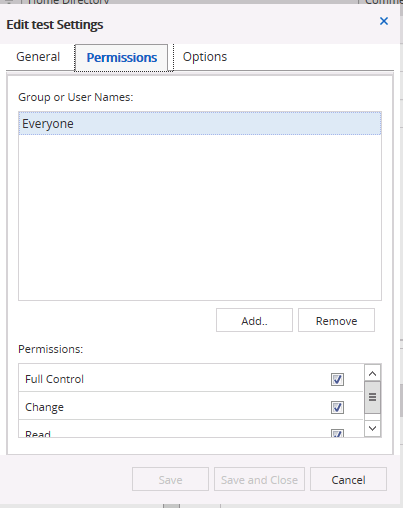

I'm also assuming you've checked "Permissions" on the share.

I'm assuming the message is really "Windows cannot access \\CIFS_Server_name\sharename". What happens if you use "\\IP address\sharename"? I assume you'll receive the same message.

---

Interesting, because you mentioned that under an existing volume/share it works, but as a separate volume/share it is not accessible.

I think that with 8.2 the root volume size was increased to 1 GB, which is more than enough for storing junction path information, but there's no harm in checking. Could you check the available space on the root volume?

Please give us the output of the following commands for both the shared volume that is erroring and the SVM root volume:

::>vol show -volume <vol_name> -fields security-style,total,used,available

::>vol show -volume <vol_name> -instance

::>cifs share show -vserver <svm_name>
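If you are collecting the `-fields` output for several volumes, a short script can flag a nearly full root volume. Below is a minimal Python sketch that assumes the output is a whitespace-separated table (header row, dashed separator, data rows, then an "N entries were displayed." footer) and that sizes carry binary unit suffixes such as MB or GB. The sample text uses made-up values, and the helper names are hypothetical, so check both against your own cluster's output.

```python
import re
from typing import Dict, List

# Made-up sample shaped like `vol show -fields security-style,total,used,available`.
SAMPLE = """\
vserver  volume   security-style total   used   available
-------- -------- -------------- ------- ------ ---------
svm_cifs rootvol  ntfs           972.8MB 2.10MB 970.7MB
svm_cifs newvol   ntfs           100GB   1GB    99GB
2 entries were displayed.
"""

# Assumption: ONTAP size suffixes are powers of 1024.
UNITS = {"B": 1, "KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4, "PB": 1024**5}


def to_bytes(size: str) -> float:
    """Convert a size such as '972.8MB' to bytes."""
    m = re.fullmatch(r"([\d.]+)([KMGTP]?B)", size)
    if m is None:
        raise ValueError(f"unrecognised size: {size!r}")
    return float(m.group(1)) * UNITS[m.group(2)]


def parse_fields(output: str) -> List[Dict[str, str]]:
    """Turn the table into one dict per volume, keyed by the header names."""
    lines = [ln for ln in output.splitlines() if ln.strip()]
    header = lines[0].split()
    rows = []
    for ln in lines[2:]:  # skip the header and the dashed separator
        cols = ln.split()
        if len(cols) != len(header):
            continue  # e.g. the "2 entries were displayed." footer
        rows.append(dict(zip(header, cols)))
    return rows


def free_percent(row: Dict[str, str]) -> float:
    """Percentage of the volume's total size that is still available."""
    return 100.0 * to_bytes(row["available"]) / to_bytes(row["total"])


for row in parse_fields(SAMPLE):
    print(row["volume"], row["security-style"], f"{free_percent(row):.1f}% free")
```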

---

Check the export policy on the volume. Back in the day, export policies were enforced on CIFS volumes. Enforcement became optional, and disabled by default, in 8.2, but any pre-existing SVMs would still have enforcement enabled.
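Here is a rough illustration of why export-policy enforcement could block only one volume. When enforcement is on, an SMB client needs a matching rule in the volume's export policy, and a policy with no rules matches nobody. This is a toy Python model, not ONTAP's evaluation logic. The class and function names are hypothetical, client matching is reduced to an exact IP or `0.0.0.0/0`, and share ACLs and NTFS permissions, which still apply on top, are ignored.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExportRule:
    clientmatch: str       # simplified: an exact client IP, or "0.0.0.0/0" for any host
    protocols: List[str]   # e.g. ["cifs"], ["nfs"], ["any"]


@dataclass
class Volume:
    name: str
    policy_rules: List[ExportRule] = field(default_factory=list)


def cifs_access_allowed(vol: Volume, client_ip: str, enforcement_enabled: bool) -> bool:
    """Export-policy layer only: does this SMB client get past the policy check?"""
    if not enforcement_enabled:
        return True  # the export policy is not consulted for SMB at all
    for rule in vol.policy_rules:
        host_ok = rule.clientmatch in ("0.0.0.0/0", client_ip)
        proto_ok = "any" in rule.protocols or "cifs" in rule.protocols
        if host_ok and proto_ok:
            return True
    return False  # no matching rule, e.g. a policy that has no rules at all


# Hypothetical: an older volume has a permissive rule, the new one a rule-less policy.
old_vol = Volume("vol1", [ExportRule("0.0.0.0/0", ["any"])])
new_vol = Volume("newvol")
print(cifs_access_allowed(old_vol, "10.0.0.5", True))
print(cifs_access_allowed(new_vol, "10.0.0.5", True))
```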

---

After many days of troubleshooting with NetApp, the ticket was escalated to a senior engineer, who finally found the cause: intra-cluster replication had stopped.

The problem was caused by SnapMirror within the same cluster (intra-cluster replication) not having run since May 2019. Because SnapMirror is not updating automatically, for the time being, every time we create a new volume in this SVM, we will have to run a SnapMirror update manually so that the root volume becomes aware of the new volume. The feature is called load-sharing mirror.

In the course of troubleshooting, we ran this command manually:

Cluster1::> set -privilege diag

Cluster1::>snapmirror update-ls-set -source-path cluster://svm_cifs/rootvol

After running this command, we were able to successfully access the share.

Note: We're still researching to understand how this feature is supposed to work. Is it automatically configured out of the box or should it be manually configured?

The engineer who helped figure out the issue wasn't versed in disaster recovery, so he suggested that we open another ticket on the issue.

---

Thank you for sharing this information. Sometimes it is impossible to pinpoint where the problem could be, even when you apply rational logic. This is really great knowledge sharing; I don't think I would have thought of this issue.

It makes sense now. The SVM root volume load-sharing mirror is not created automatically, because it does not exist in my current or previous environments. So someone must have set it up following NetApp best practices. It is still a best practice to create a load-sharing mirror for the SVM root volume.

Some info:

Every SVM has a root volume that serves as the entry point to the namespace provided by that SVM. In the unlikely event that the root volume of the SVM is unavailable, NAS clients cannot access the namespace hierarchy and therefore cannot access data in the namespace. For this reason, it is a NetApp best practice to create a load-sharing mirror for the root volume on each node of the cluster so that the namespace directory information remains available in the event of a node outage or failover.

Data ONTAP directs all read requests to the load-sharing mirror destination volumes. The set of load-sharing mirrors you create for the SVM root volume should therefore include a load-sharing mirror on the same node where the source volume resides. It looks like in your case ONTAP directed the read requests to the LS mirror on the node hosting the volumes, and because that mirror had not been updated due to the intra-cluster replication issue, the share wasn't accessible. Once the mirror was updated, it worked!
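The read redirection described above explains why the existing shares kept working while the new one failed. Below is a toy Python model of that behaviour. It assumes a single mirror copy and reduces the root volume to a table of junction paths. The class names are hypothetical, and `update_ls_set()` merely stands in for the `snapmirror update-ls-set` command used in this thread. It does not reflect how ONTAP actually stores junctions.

```python
from typing import Dict, Optional


class RootVolume:
    """Toy SVM root volume: maps junction paths to data volume names."""

    def __init__(self) -> None:
        self.junctions: Dict[str, str] = {}


class LSMirrorSet:
    """Toy load-sharing mirror: clients read a copy taken at the last update."""

    def __init__(self, source: RootVolume) -> None:
        self.source = source
        self.copy: Dict[str, str] = {}

    def update_ls_set(self) -> None:
        # Stand-in for `snapmirror update-ls-set`: refresh the copy from the source.
        self.copy = dict(self.source.junctions)

    def resolve(self, path: str) -> Optional[str]:
        # Reads are served from the mirror copy, never from the source directly.
        return self.copy.get(path)


root = RootVolume()
root.junctions["/vol1"] = "vol1"
mirror = LSMirrorSet(root)
mirror.update_ls_set()                   # last successful scheduled update
root.junctions["/newvol"] = "newvol"     # new volume mounted after updates stopped
print(mirror.resolve("/vol1"))           # vol1: existing junctions still resolve
print(mirror.resolve("/newvol"))         # None: new junction invisible to clients
mirror.update_ls_set()                   # manual update, as in the thread
print(mirror.resolve("/newvol"))         # newvol
```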

SVM Root Volume Protection Express Guide

https://library.netapp.com/ecm/ecm_download_file/ECMLP2496241