ONTAP Discussions

Re: FAS2220 Cannot add specified disks to the aggregate

ONTAP Discussions

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

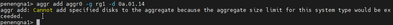

We encounter the following error when trying to add a disk to the aggregate:

aggr add aggr0 -g rg0 -d 0a.01.14

aggr add: Cannot add specified disks to the aggregate because the aggregate size limit for this system type would be exceeded.

We increased raidsize from 21 to 28, but that did not fix the error.

What is the limit here?

Does anyone have any ideas? We would like to assign all the spare disks to their respective RAID groups.

Our current setup:

aggr status -s

Spare disks for block checksum

| RAID Disk | Device | HA | SHELF | BAY | CHAN | Pool | Type | RPM | Used (MB/blks) | Phys (MB/blks) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| spare | 0a.01.14 | 0a | 1 | 14 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| spare | 0a.01.16 | 0a | 1 | 16 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| spare | 0a.01.18 | 0a | 1 | 18 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| spare | 0b.01.15 | 0b | 1 | 15 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| spare | 0b.01.17 | 0b | 1 | 17 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| spare | 0b.02.0 | 0b | 2 | 0 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.1 | 0b | 2 | 1 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.2 | 0b | 2 | 2 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.13 | 0b | 2 | 13 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.14 | 0b | 2 | 14 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.15 | 0b | 2 | 15 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.16 | 0b | 2 | 16 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.17 | 0b | 2 | 17 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.18 | 0b | 2 | 18 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.19 | 0b | 2 | 19 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.20 | 0b | 2 | 20 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.21 | 0b | 2 | 21 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.22 | 0b | 2 | 22 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| spare | 0b.02.23 | 0b | 2 | 23 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |

aggr status -v

Aggr State Status Options

aggr0 online raid_dp, aggr root, diskroot, nosnap=off, raidtype=raid_dp,

64-bit raidsize=28, ignore_inconsistent=off,

snapmirrored=off, resyncsnaptime=60,

fs_size_fixed=off, snapshot_autodelete=on,

lost_write_protect=on, ha_policy=cfo,

hybrid_enabled=off, percent_snapshot_space=0%,

free_space_realloc=off

Volumes:....

Plex /aggr0/plex0: online, normal, active

RAID group /aggr0/plex0/rg0: normal, block checksums

RAID group /aggr0/plex0/rg1: normal, block checksums

aggr status -r aggr0

Aggregate aggr0 (online, raid_dp) (block checksums)

Plex /aggr0/plex0 (online, normal, active, pool0)

RAID group /aggr0/plex0/rg0 (normal, block checksums)

| RAID Disk | Device | HA | SHELF | BAY | CHAN | Pool | Type | RPM | Used (MB/blks) | Phys (MB/blks) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| dparity | 0a.00.11 | 0a | 0 | 11 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| parity | 0a.00.2 | 0a | 0 | 2 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.4 | 0a | 0 | 4 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.9 | 0a | 0 | 9 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.7 | 0a | 0 | 7 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.8 | 0a | 0 | 8 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.6 | 0a | 0 | 6 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.10 | 0a | 0 | 10 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.01.0 | 0a | 1 | 0 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.00.0 | 0a | 0 | 0 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0b.01.1 | 0b | 1 | 1 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.01.2 | 0a | 1 | 2 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0b.01.3 | 0b | 1 | 3 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.01.4 | 0a | 1 | 4 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.01.10 | 0a | 1 | 10 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0b.01.11 | 0b | 1 | 11 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0b.01.5 | 0b | 1 | 5 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.01.6 | 0a | 1 | 6 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0b.01.7 | 0b | 1 | 7 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0a.01.8 | 0a | 1 | 8 | SA:A | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |
| data | 0b.01.9 | 0b | 1 | 9 | SA:B | 0 | BSAS | 7200 | 1695466/3472315904 | 1695759/3472914816 |

RAID group /aggr0/plex0/rg1 (normal, block checksums)

| RAID Disk | Device | HA | SHELF | BAY | CHAN | Pool | Type | RPM | Used (MB/blks) | Phys (MB/blks) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| dparity | 0b.02.3 | 0b | 2 | 3 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| parity | 0b.02.4 | 0b | 2 | 4 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.5 | 0b | 2 | 5 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.6 | 0b | 2 | 6 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.7 | 0b | 2 | 7 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.8 | 0b | 2 | 8 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.9 | 0b | 2 | 9 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.10 | 0b | 2 | 10 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.11 | 0b | 2 | 11 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |
| data | 0b.02.12 | 0b | 2 | 12 | SA:B | 0 | FSAS | 7200 | 3807816/7798408704 | 3815447/7814037168 |

It could be that the max supported raidgroup size for the particular disk type has exceeded.

Please refer to HWU for the limits.

Also, I see that you are trying to add disk to - rg0

Instead, can you try adding it to - rg1

Also, let us know:

1) Data ontap version?

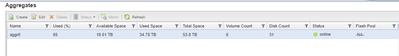

2) Current aggregate aggr0 size?

Tried rg1, still the same error:

aggr add aggr0 -g rg1 -d 0a.01.14

aggr add: Cannot add specified disks to the aggregate because the aggregate size limit for this system type would be exceeded.

1) Data ONTAP version? => NetApp Release 8.1.4P10 7-Mode

2) Current aggregate aggr0 size?

Both RAID groups, rg0 and rg1, give the same error message.

Aggr State Status Options

aggr0 online raid_dp, aggr root, diskroot, nosnap=off, raidtype=raid_dp,

64-bit raidsize=28, ignore_inconsistent=off,

snapmirrored=off, resyncsnaptime=60,

fs_size_fixed=off, snapshot_autodelete=on,

lost_write_protect=on, ha_policy=cfo,

hybrid_enabled=off, percent_snapshot_space=0%,

free_space_realloc=off

Volumes: vol0, DataLog_vol, TestProg_vol, Eng_vol, Eng_NFS_temp,

Eng_NFS_vol

Plex /aggr0/plex0: online, normal, active

RAID group /aggr0/plex0/rg0: normal, block checksums

RAID group /aggr0/plex0/rg1: normal, block checksums

Different system models have different aggregate limits. As Ontapforrum mentioned, check the system model and its aggregate limit in the NetApp Hardware Universe: https://hwu.netapp.com/Home/Index

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

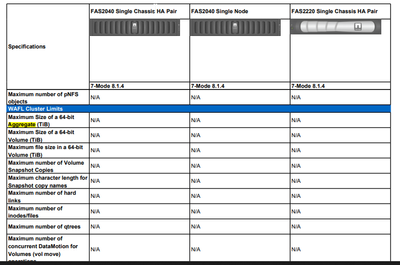

I looked at the document 8.1.4_7-Mode-FAS_EOA.pdf (netapp.com).

However, it has no information on the aggregate limit; it just says N/A.

As I recall, the maximum aggregate size depends on the model and the ONTAP version. I believe for the FAS2220 it was 120TB (maybe less) for RAID-DP. In those days, we did not create aggregates larger than 100TB. The maximum capacity for that model is 180TB, I think.

The current data disk capacity (excluding parity and dparity) is 60TB for rg0 and rg1 combined.

This is based on number of disks * size in MB / 1024 / 1024.

There is a long way to go to reach 100TB.

We are still stuck: we are not allowed to add disks even though we are below the limit.
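
The arithmetic described above can be reproduced with a short Python sketch. The disk counts and per-disk "Used" sizes are taken from the aggr status -r aggr0 output earlier in the thread. The function name `data_capacity_tb` is made up for this illustration, and dividing MB by 1024 twice is simply the poster's formula, not a confirmed description of how Data ONTAP counts aggregate size.

```python
# "Used" size per disk in MB, from the aggr status -r aggr0 output in the thread.
BSAS_MB = 1695466
FSAS_MB = 3807816


def data_capacity_tb(groups):
    """Sum data-disk capacity over (count, size_mb) pairs and apply MB / 1024 / 1024."""
    total_mb = sum(count * size_mb for count, size_mb in groups)
    return total_mb / 1024 / 1024


# rg0: 21 disks minus parity and dparity = 19 data disks (BSAS)
# rg1: 10 disks minus parity and dparity = 8 data disks (FSAS)
current = data_capacity_tb([(19, BSAS_MB), (8, FSAS_MB)])
print(f"{current:.2f}")  # prints 59.77
```

The result rounds to the 60TB quoted in the post.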

The answer is in your post. The aggregate would be over its limit with the additional drive. Check the aggregate's current size and compare it to the Hardware Universe. I suspect that will confirm it.

Unfortunately, the Hardware Universe does not provide the aggregate limit.

We could not find the aggregate limit in Storage limits (netapp.com) either.

How would you check it?

Our drive total is within the limit.

The limit for RAID-DP group size, which we already set for the aggr, is:

SATA/BSAS/FSAS/MSATA/ATA: 20

However, RAID-DP group rg1 has only 10 disks assigned.

Why can't we add more disks to bring it up to the maximum of 20?

After looking further at your configuration, I think you are hitting the RAID-DP disk count limit.

It looks like your rg0 has 21 drives, which is over the limit (SATA/BSAS/FSAS/MSATA/ATA: 20). You might want to reconfigure your aggregate to stay within the limit.
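
As a rough illustration of that comparison, the Python sketch below checks each RAID group's disk count against the limit of 20 quoted in this thread. The counts (parity and dparity included) come from the aggr status -r aggr0 output posted earlier; the helper name `headroom` is hypothetical, and the limit value is the one stated here, not one verified against the Hardware Universe.

```python
# Limit quoted in the thread for SATA/BSAS/FSAS/MSATA/ATA disks in a RAID-DP group.
MAX_RAID_DP_GROUP_SIZE = 20

# Disks per RAID group, parity and dparity included, from aggr status -r aggr0.
raid_groups = {"rg0": 21, "rg1": 10}


def headroom(disk_count, limit=MAX_RAID_DP_GROUP_SIZE):
    """Return how many more disks fit in the group; negative means over the limit."""
    return limit - disk_count


for name, disk_count in raid_groups.items():
    free = headroom(disk_count)
    status = f"over the limit by {-free}" if free < 0 else f"{free} slots free"
    print(f"{name}: {disk_count} disks, {status}")
```

By this count rg0 is one disk over, while rg1 still has room.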

Unfortunately, we can't remove one disk from the 21.

Anyway, we found the following on a website:

For the FAS 2200 series, there is a limit of 60TB maximum per aggregate.

I think that's why we can't go beyond that. Do you agree?
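
A quick Python sketch suggests the 60TB figure is at least consistent with the error. It assumes the limit is counted the same way as the earlier estimate (data disks only, MB / 1024 / 1024), which is an assumption and not something NetApp documentation in this thread confirms. The name `size_after_adding` is made up for illustration; the disk sizes and counts come from the aggr status output above.

```python
# "Used" size per disk in MB, from the aggr status output in the thread.
BSAS_MB = 1695466
FSAS_MB = 3807816

# Assumption: the reported 60TB limit is counted as MB / 1024 / 1024 over data disks.
LIMIT_TB = 60

# Current data disks: 19 BSAS in rg0 and 8 FSAS in rg1 (parity and dparity excluded).
current_mb = 19 * BSAS_MB + 8 * FSAS_MB


def size_after_adding(extra_disk_mb):
    """Return the data capacity in TB after adding one disk of the given size."""
    return (current_mb + extra_disk_mb) / 1024 / 1024


for name, size_mb in (("BSAS", BSAS_MB), ("FSAS", FSAS_MB)):
    new_size = size_after_adding(size_mb)
    print(f"{name}: {new_size:.2f}TB, exceeds limit: {new_size > LIMIT_TB}")
# prints:
# BSAS: 61.39TB, exceeds limit: True
# FSAS: 63.40TB, exceeds limit: True
```

Under that assumption, the aggregate sits just under 60TB today, and adding even one spare of either type would push it over, whichever RAID group it goes into.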

That may be true. Another thing you can do is upgrade ONTAP to 8.2.5P3 and see if that makes a difference. I believe the maximum aggregate size was adjusted in 8.2.x.

I tried to find the information for 8.2.5P3 in the release notes and elsewhere, but still could not find the aggregate size limit.

Anyway, our FAS2220 on 8.1.4P10 is no longer supported by NetApp, as it is already EOS/EOA.

As such, there is no way we can upgrade it. Also, 8.2 requires new 28-character license keys, if I am not mistaken, and there is no way for us to generate them.