Simulator Discussions

8.3.1 OVA format Simulator uses 5 GB RAM. If lowering to 2 GB Simulator does not start anymore.

The big problem with the new 8.3.1 simulator format is its high memory usage: 5 GB! The 8.2 sim used 1.6 GB of memory, and now it is 5 GB. Running a cluster in VMware Workstation therefore takes 10 GB of RAM, which is very high. With a Windows AD server running as well, there is almost no memory left to play around with other VMs.

Think back to when 7-Mode used only ~512 MB for HA!

After installation, if I change the memory back to 1.6 GB, the 8.3 sim crashes and does not start anymore. When I put it back to 5 GB, everything works again.

Does anybody have an idea how to set it up with 1.6 GB of memory so that it works? Is there a setenv for this?

I seem to recall answering a nearly identical question around 8.3.

On 8.3, I was able to run it on 2 GB per node with some tuning. On 8.3.1 it needs more like 2.5 GB per node minimum, but 3 GB is a safer starting point. There are two tunables in the loader environment that you can optimize for a low-memory vsim. The first sets how much RAM is reserved for the BSD layer; the second sets the size of the virtual NVRAM. If the sim has already been run with the default 256 MB vnvram, it may crash if you resize the vnvram. To avoid that crash, we'll discard the vnvram contents.

- Halt the sim

- Reduce the VM's RAM

- Power on the sim, stopping at the VLOADER> prompt

- From the VLOADER prompt:

setenv bootarg.init.low_mem 512

setenv bootarg.vnvram.size 64

setenv nvram_discard true

boot_ontap

ONTAP still needs more RAM, but for a short-term, lightweight laptop demo 3 GB will usually suffice. Give it 4 GB if you have it or just want to keep it alive longer, but the 1 GB default root volume will take it out pretty quickly, so remediate that right away.
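For anyone scripting this, here is a minimal sketch that just assembles the four loader lines above for a chosen sizing. The function name `loader_commands` and its parameters are made up for illustration; only the variable names and the default values come from the post. It does not talk to the simulator, so you still paste the output at the VLOADER prompt.

```python
def loader_commands(low_mem_mb=512, vnvram_mb=64, discard_nvram=True):
    """Return the VLOADER lines for a low-memory boot, in the order used above.

    Hypothetical helper: it only builds text, it does not contact the sim.
    """
    commands = [
        f"setenv bootarg.init.low_mem {low_mem_mb}",   # RAM reserved for the BSD layer
        f"setenv bootarg.vnvram.size {vnvram_mb}",     # size of the virtual NVRAM
    ]
    if discard_nvram:
        # Discard old vnvram contents so a resized vnvram does not crash the boot.
        commands.append("setenv nvram_discard true")
    commands.append("boot_ontap")
    return commands


if __name__ == "__main__":
    print("\n".join(loader_commands()))
```

Calling `loader_commands(discard_nvram=False)` leaves out the discard line, which only makes sense when the vnvram size is not being changed.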

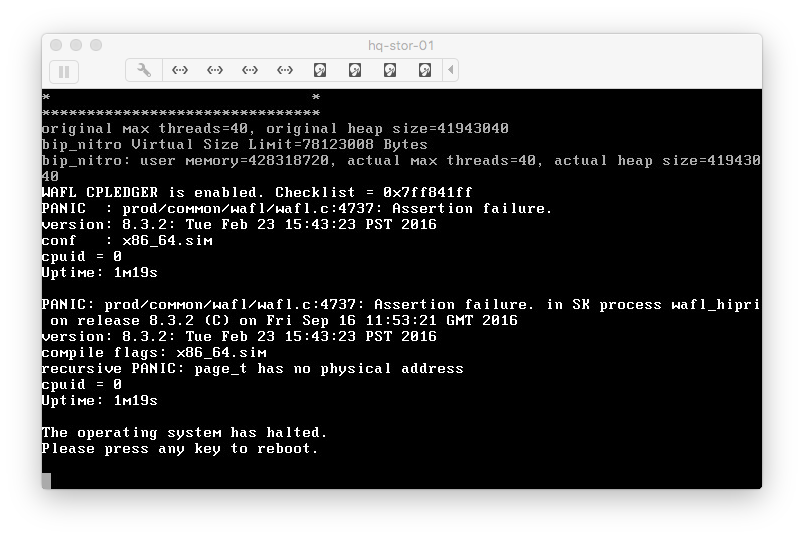

I tested according to your guidance, but the vsim still panics with a message that physical memory is insufficient. The previous 8.3 tgz version is OK, but the 8.3.1 OVA version does not seem to work.

I just spun one up with the Workstation 8.3.1 OVA build. I set RAM to 2560 MB, vnvram to 64, and low_mem to 512:

demo100::> run local sysconfig -v

NetApp Release 8.3.1: Mon Aug 31 02:06:44 PDT 2015

System ID: 4082368507 (demo100-01)

System Serial Number: 4082368-50-7 (demo100-01)

System Storage Configuration: Single Path

System ACP Connectivity: NA

All-Flash Optimized: false

slot 0: System Board 1.6 GHz (NetApp VSim)

Model Name: SIMBOX

Serial Number: 999999

Loader version: 1.0

Processors: 2

Memory Size: 2559 MB

Memory Attributes: None

Virtual NVRAM Size: 64 MB

CMOS RAM Status: OK

slot 0: 10/100/1000 Ethernet Controller V

e0a MAC Address: 00:0c:29:bd:2c:67 (auto-1000t-fd-up)

e0b MAC Address: 00:0c:29:bd:2c:71 (auto-1000t-fd-up)

e0c MAC Address: 00:0c:29:bd:2c:7b (auto-1000t-fd-up)

e0d MAC Address: 00:0c:29:bd:2c:85 (auto-1000t-fd-up)

Device Type: Rev 1

Firmware Version: 15.15

demo100::>

Hi,

Has anyone managed to get the 8.3.1 simulator working with less memory on ESX?

I have tried setting vnvram to 64, low_mem to 512, and various memory sizes up to 4 GB, but it kept panicking. It only booted with 5 GB of RAM. Below is the panic message I get with any RAM size <= 4 GB:

VLOADER> setenv bootarg.vnvram.size 64

VLOADER> setenv bootarg.init.low_mem 512

VLOADER> boot

x86_64/freebsd/image1/kernel data=0x99f0c8+0x41a0e0 syms=[0x8+0x46758+0x8+0x2fbe3]

x86_64/freebsd/image1/platform.ko text=0x1e5edd data=0x485a0+0x42bd0 syms=[0x8+0x21768+0x8+0x175cd]

bootarg.init.low_mem set. This will set the FreeBSD size to 512MB

NetApp Data ONTAP 8.3.1

Copyright (C) 1992-2015 NetApp.

All rights reserved.

*******************************

* *

* Press Ctrl-C for Boot Menu. *

* *

*******************************

^CBoot Menu will be available.

Please choose one of the following:

(1) Normal Boot.

(2) Boot without /etc/rc.

(3) Change password.

(4) Clean configuration and initialize all disks.

(5) Maintenance mode boot.

(6) Update flash from backup config.

(7) Install new software first.

(8) Reboot node.

Selection (1-8)? 5

WAFL CPLEDGER is enabled. Checklist = 0x7ff841ff

PANIC : prod/common/wafl/free_cache.c:997: Assertion failure.

version: 8.3.1: Mon Aug 31 02:06:44 PDT 2015

conf : x86_64.sim

cpuid = 0

Uptime: 1m44s

PANIC: prod/common/wafl/free_cache.c:997: Assertion failure. in SK process wafl_hipri on release 8.3.1 (C) on Tue Nov 17 12:29:03 GMT 2015

version: 8.3.1: Mon Aug 31 02:06:44 PDT 2015

compile flags: x86_64.sim

recursive PANIC: page_t has no physical address

cpuid = 0

Uptime: 1m44s

The operating system has halted.

Please press any key to reboot.

Are you setting nvram_discard true whenever you adjust the sizing?

Also, what hypervisor are you running it on?

I've been testing against Fusion 8 and ESX 6, but it's possible that on some versions you have to manually place the PCI hole when you drop under 4 GB. Try adding "pciHole.start = 3072" to the vmx file.

On 8.3.1 I've been able to boot to the setup script without modifying the loader values all the way down to 2560MB of ram.

Setting vnvram=128 gets it to boot down to 2432MB

Setting vnvram=64 boots at 2368MB

So here I'm just stealing from vnvram to give to WAFL, and it's riding the envelope of the low end. I can push it down a little further with low_mem=512, which steals from BSD as well, and get it to boot with 2112 MB. But that only gets you to the setup script. The RDBs consume RAM, so you need to add some RAM to the sizing to account for actually getting the cluster services up and running. So 3072 MB seems like a pretty good number for a laptop instance, depending on what you need it to do and how long you need it to live.

The smallest supported platform for 8.3+ has 6 GB of RAM, so it's probably going to get harder to bring up smaller instances as time goes on.
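The boot thresholds quoted in this post line up with a simple one-for-one trade: every MB taken away from the vnvram is a MB the VM can do without. A rough sketch of that arithmetic follows. The constant and function names are made up for illustration, and the linear relationship is inferred from the data points in the post, not a documented sizing rule.

```python
DEFAULT_VNVRAM_MB = 256    # default vnvram size mentioned earlier in the thread
BASELINE_FLOOR_MB = 2560   # lowest RAM that booted with untouched loader values
LOW_MEM_512_FLOOR_MB = 2112  # lowest RAM reported with vnvram=64 and low_mem=512


def boot_floor_mb(vnvram_mb):
    """Estimate the lowest bootable RAM for a given vnvram size (assumed linear)."""
    saved = DEFAULT_VNVRAM_MB - vnvram_mb
    return BASELINE_FLOOR_MB - saved


for size in (128, 64):
    print(f"vnvram={size}: boots at about {boot_floor_mb(size)} MB")

# Extra headroom reported after also setting low_mem=512:
print(boot_floor_mb(64) - LOW_MEM_512_FLOOR_MB, "MB saved by low_mem=512")
```

The two estimates match the 2432 MB and 2368 MB figures reported above, and the last line works out to 256 MB. Remember these are floors for reaching the setup script only; the 3072 MB recommendation adds room for the cluster services.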

I'm using ESXi 6 on workstation hardware (i5 @ 3.1 GHz / 32 GB RAM) as a virtual lab.

I hadn't set "nvram_discard true" each time I changed the RAM.

However, after your reply (thanks for that!) I restarted from scratch and managed to get it running using the steps below:

1. Deploy 8.3.1 esx ova (by default it gets 8 GB of ram).

2. Reduce memory allocation to 3072 MB (3 GB).

3. Add two more network cards (for a total of 6) with default settings.

4. Add a serial port set to "Use Network", Direction = "Server", Port URI = "telnet://:12345". This way after power on I am able to connect via putty (telnet to esx host IP address on port 12345) to the virtual serial console making initial setup and management much easier. This will actually start working right after step 6.b below.

5. Power up vm and initially use the vm console to get to VLOADER prompt.

6. VLOADER commands:

a. setenv comconsole_speed 115200

b. setenv console comconsole,vidconsole (at which point we can open the putty telnet session and continue from there)

c.1 setenv bootarg.vm.sim.vdevinit "36:14:0,31:14:1,30:14:2,35:14:3"

c.2 setenv bootarg.sim.vdevinit "36:14:0,31:14:1,30:14:2,35:14:3" (this changes the disk configuration to add a shelf of virtual ssd's)

d. boot

7. Ctrl + C and choose option 5 to assign 3 specific disks for the root aggregate then halt and reboot.

8. Ctrl + C and choose option 4 to initialize all disks and reset configuration.

After reboot it got to the system setup and I was able to go all the way to create the cluster.
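For readers unfamiliar with the vdevinit string used in step 6.c, here is a small sketch that splits it into its parts. It assumes each comma-separated entry means disk type : disk count : shelf number. That reading is an assumption on my part (the thread only says the string adds a shelf of virtual SSDs), and the class and function names are hypothetical.

```python
from typing import List, NamedTuple


class ShelfSpec(NamedTuple):
    disk_type: int    # simulator disk type code (meaning assumed, not from the thread)
    disk_count: int   # number of disks to create on the shelf (assumed)
    shelf: int        # shelf number (assumed)


def parse_vdevinit(value: str) -> List[ShelfSpec]:
    """Split a vdevinit string such as "36:14:0,31:14:1" into one spec per shelf."""
    specs = []
    for entry in value.split(","):
        disk_type, disk_count, shelf = (int(part) for part in entry.split(":"))
        specs.append(ShelfSpec(disk_type, disk_count, shelf))
    return specs


specs = parse_vdevinit("36:14:0,31:14:1,30:14:2,35:14:3")
for spec in specs:
    print(spec)
print("total disks:", sum(spec.disk_count for spec in specs))
```

Under that reading, the string above describes four shelves of 14 disks each, which is worth double-checking against the disk list the sim actually presents in maintenance mode.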

I'm not expecting much from the simulator performance-wise, so I'm not going to push it. However, I need it to be as stable as possible (as in not panicking) over a fairly long run, since my lab is normally up 24x7. Given the steps I've taken, can you advise on the following:

1. Which LOADER values should I now amend (if any) to achieve this?

2. Would it make a difference if I gave each node 4 GB ram instead of 3?

3. Any other tuning that may help?

Thanks,

Dragos

Looks good. I also favor the telnet console; it's just easier to work with, capture output, etc. I would just make sure root landed on either the 4 GB or the 9 GB drives. If not, add enough disks to get the root aggr to about 4 GB, expand the root vol as high as it will let you, and disable snapshots on the root volume. If you have the simulator running inside your nested ESX host, you may be better off moving it out to the physical workstation host. The telnet console still works there, but it requires manual vmx entries. These should do it:

serial0.present = "TRUE"

serial0.fileType = "network"

serial0.fileName = "telnet://0.0.0.0:2201"

serial0.network.endPoint = "server"

serial0.startConnected = "TRUE"

The RAM really depends on your usage. If you are going to use it to host datastores back to your nested ESX hosts and put VMs in them, then more RAM is probably a good idea, mostly because the VHA disk subsystem is really slow, so more RAM means better cache hits and fewer calls to the simdisks. 3 GB is probably enough to get by otherwise. 8.3.2 raises the low end a tad, but still seems to be working on 3 GB. Leaving it up 24x7 will generate logs and asup files that you may need to periodically prune from the mroot, but at ~4 GB on the root it's not usually a problem.

One other thing to check is the vnvram setting. On some builds I've seen it set to full; on others I've seen it set to panic. I would set it to full, so the contents of the nvram are persisted to the backing vmdk. That way, when Microsoft gives you a BSOD, there's a good chance your sim will recover gracefully.

setenv bootarg.vm.vnvram full

So far so good: the nodes have been stable and powered on for weeks, with no load yet though. They are VMs on ESXi 6, which is installed directly on the hardware with no additional virtualization layer. However, I'm facing a big problem: in this setup I don't have RAID or SSD underneath, just poor independent SATA spindles holding the datastores. So, as stated before, I really don't expect anything performance-wise until I'm able to move to RAID or SSD.

At this point, please advise on the following: of the various internal (background) ONTAP / WAFL tasks that produce I/O, which ones would it be safe to disable to save some ops? I've noticed the nodes taking scheduled system backups and found that these cannot be disabled. Fine, but what can we safely disable?

There are not a lot of things you can adjust on that front. Most of the IO activity is from logging and the cluster DBs on the node root aggregates. You can disable ASUP, but I think the files are still generated they just aren't sent.

Even on local SSD, don't expect a lot of performance. The simulated disk subsystem is a really good simulation, but it's not designed for performance. We have Data ONTAP Edge and CloudONTAP for that.

However, there are a few things you can do to minimize disk IO inside the sim:

- Give the sim as much RAM as you can: 8 GB or more. System RAM is the primary read cache, so the more you have, the better your cache hit rate will be and the fewer reads will have to come from the simulated disks.

- Use smaller RAID group sizes with larger disks, and use RAID4. Within the confines of the simulator, a 3x9 GB RAID4 is better than a 20x1 GB RAID-DP because at the bottom of the funnel they all share a single VMDK anyway.

- If you use dedupe, set it to on-demand. It generates a lot of IO, so you don't want it running while you are doing other testing.
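To put numbers on the RAID comparison above: RAID4 spends one disk per RAID group on parity and RAID-DP spends two, so both layouts in that example end up with the same nominal data capacity, while the RAID4 one touches far fewer simulated disks. A quick sketch, using nominal disk sizes and ignoring right-sizing and WAFL reserve (the function and table names are illustrative only):

```python
# Parity disks per RAID group: RAID4 is single parity, RAID-DP is double parity.
PARITY_DISKS = {"raid4": 1, "raid_dp": 2}


def data_capacity_gb(disk_count, disk_size_gb, raid_type):
    """Nominal data capacity of one RAID group, before any filesystem overhead."""
    return (disk_count - PARITY_DISKS[raid_type]) * disk_size_gb


for disks, size, raid in ((3, 9, "raid4"), (20, 1, "raid_dp")):
    capacity = data_capacity_gb(disks, size, raid)
    print(f"{disks} x {size} GB {raid}: {capacity} GB of data on {disks} simulated disks")
```

Both lines come out at 18 GB, which is the point of the advice: you give up nothing in space by using fewer, larger simulated disks.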

I have two nodes of ONTAP 8.3 running on VMware 6, and I also have a Windows 2012 server running. My machine only has 8 GB of RAM.

All three run. They can be slow from time to time, but they do run.

I did not make any changes to the settings.

Mark Tayler

VMware is pretty good at memory overcommitment. Hopefully you are getting most of that from transparent page sharing, or you've put VM swap on SSD.

Good afternoon vsim experts.

I've been following this thread for a while now, as I'm a constant user of multiple vsims for lab work. Most recently I've been using the "low_mem 512 / vnvram.size 64 / nvram_discard true" note from above to drop my 8.3.1, and more recently 8.3.2, vsims down to 3 GB. (Note: I always do 1-node clusters because multi-node doesn't buy me much for what I do.) I run these under both Fusion 8 and an ESX 6 server.

I do a lot of provisioning automation testing (creating SVMs, volumes, policies), but usually never more than 6-8 SVMs, a dozen volumes, policies, etc. before I tear down the SVMs/volumes and start over again. About my only problems seem to be occasional root vol corruption (recently after ESX box power failures) and the vsims sometimes getting sluggish. I usually back off and reboot, and am fine.

Recently I felt the sluggishness was coming too often, so I decided to up my VMs from 3 GB to 4 GB on my main ESX 6 server. I halted, used the vSphere web client to bump from 3072 to 4096 MB, and then powered on. I got an error very similar to the one above, which alludes to a memory issue. I didn't figure I needed to redo the LOADER setenvs if I was increasing memory, but I redid those and got the same error. In reading this thread, it sounds like 'setenv nvram_discard true' may be required whenever changing the VM memory size up or down, so I was hoping that would fix it, but it didn't.

Any ideas? I hate to rebuild these, but I guess it's good practice.

Also, is the out-of-the-box VM memory footprint for the 8.3.2 vsim 8GB or 5GB? ... I've seen both comments.

You're probably running into a situation where the PCI hole is landing in a bad spot. Try manually placing it at 1024 in your vmx file:

pciHole.start = "1024"

With 4gb of ram, and the pcihole at 1024, go with low_mem 760, vnvram.size 256. The discard setting is needed whenever you resize the vnvram, so be sure to do a clean shutdown first.

The downloadable OVAs have 5gb allocated for the workstation image, and 8gb allocated for the ESX image, which is closer to a real controller and works regardless of where the pcihole lands in the address space.
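If you keep a copy of the vmx file on a workstation, a setting like the pciHole.start line above can be applied with a few lines of script. This is only a sketch: it assumes the usual one `key = "value"` entry per line layout, the helper name `set_vmx_option` is hypothetical, and the sample text is made up. It works on text you have already read from the file and does not locate or upload anything on ESX.

```python
def set_vmx_option(vmx_text: str, key: str, value: str) -> str:
    """Return vmx text with `key` set to `value`, replacing an existing entry if present."""
    new_line = f'{key} = "{value}"'
    lines = vmx_text.splitlines()
    replaced = False
    for index, line in enumerate(lines):
        name = line.split("=", 1)[0].strip()
        # Compare case-insensitively so a differently cased entry is not duplicated
        # (an assumption made here to stay on the safe side).
        if name.lower() == key.lower():
            lines[index] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    return "\n".join(lines) + "\n"


sample = 'memsize = "4096"\npciHole.start = "3072"\n'  # made-up example content
print(set_vmx_option(sample, "pciHole.start", "1024"))
```

Make the change only after a clean shutdown of the sim, and keep a backup of the original file in case the VM refuses to power on.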

Thanks Sean. I think your reply and my followup Q's overlapped so you already answered most of those.

Here's my ESX ignorance question: How do I edit a vmx file on ESX?

I know where that file is found under Fusion but ESX6/Webclient is still new to me.

Well, here is a quick update. On a hunch I just upped the ESX 6 VM memory setting from the 4 GB I was attempting to 5 GB. I powered on, set the "low_mem 512 / vnvram 64 / nvram_discard true" values at the VLOADER prompt, and they seem to be booting up fine now. This raises some questions I'm now wondering about.

1) Is there any difference in what happens between 'autoboot' and 'boot_ontap'? I think I've used both at different times.

2) How persistent are each of these?

2a) setenv bootarg.init.low_mem 512 ? --- I read above that this tamps down BSD space ... is this just for "this bootup" or persistent on subsequent boots?

2b) setenv bootarg.vnvram.size 64 ? --- just this boot or persistent on subsequent boots?

2c) setenv nvram_discard true ? --- this one sounds like it's 'just for this boot', which makes sense

autoboot counts down then boots, boot_ontap boots now.

discard is this boot only; the rest are persistent. Look on the next boot and you'll see it's flipped back to false. low_mem manually sets the BSD memory space. Without it, it tries to auto-size, which works fine with more RAM. The smallest controller that actually supports 8.3+ was the 2240 with 6 GB, so 5 GB is a good compromise without needing to tune it or deal with extraConfig parameters in the OVA.
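As a way to picture the persistence rules just described, here is a toy model in which the loader environment is a plain dictionary and a boot resets only the discard flag. It is purely illustrative: the function name `env_after_boot` is made up, and nothing here reflects how the loader is actually implemented.

```python
def env_after_boot(env):
    """Return what the loader environment would show on the next boot (toy model)."""
    next_env = dict(env)  # every variable is carried over unchanged...
    if "nvram_discard" in next_env:
        next_env["nvram_discard"] = "false"  # ...except the one-shot discard flag
    return next_env


env = {
    "bootarg.init.low_mem": "512",
    "bootarg.vnvram.size": "64",
    "nvram_discard": "true",
}
for name, value in env_after_boot(env).items():
    print(f"{name} = {value}")
```

In practice this means a later vnvram resize needs nvram_discard set again, while low_mem and vnvram.size only need to be entered once.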

When you create a simulator with a mix of disk types at first boot using the vdevinit bootargs, it may not select the disks you intend when creating the root aggregate. By manually assigning the root aggregate disks in maintenance mode, you take control of that selection process. Then, when you run option 4, it will first try to build the root aggregate using the disks it already owns instead of arbitrarily picking from the unowned disks.

Where can I download the 8.3.1 sim?

Never mind. Found it, seems it was recently posted....