Active IQ Unified Manager Discussions

What is the CPU domain wafl_exempt?


Hey all,

Love the Grafana monitoring solution.

For the last month, since moving our final customer (about 250 Horizon desktops) from our FAS3240 to the FAS8040, we've been experiencing constant CPU utilization in the 80-90% range. The wafl_exempt domain is by far the top CPU domain, well into the 300% range. I attached a file from the past hour, between 2 and 3 PM.

Can someone explain what wafl_exempt is? We need to determine whether the node is overstressed, whether we are at a tipping point, or whether this might be a bug in ONTAP 8.3. Thanks.


Hi,

wafl_exempt threads are multiprocessor-safe, so they can run in parallel on multiple processors. These threads handle WAFL tasks such as reads, writes, and readdir. The purpose is to increase WAFL performance.

Thanks


Hi @cgeck0000

CPU domains are discussed in KB3014084. WAFL Exempt is the domain where parallelized WAFL work happens, so if it is high it simply means that WAFL is doing lots of parallel work. If you can correlate frontend work (throughput or IOPS) with CPU load, then it's probably just a sign of a busy system. If not, then it's something for support to analyze to see whether there is a bug.

I also see in the screenshot that you are at 82%-92% average CPU utilization. At this utilization level, new work will often have to queue before it can run on a CPU. My rule of thumb is that from around 70% average CPU you will start to see measurable queue time while work waits for an available CPU. Also, if you have a controller failover and both nodes are running at this load level, there will be a pretty big shortfall of CPU resources, leading to much more queuing for CPU. So if the workload is driving the CPU usage (i.e. no bug), then I would recommend getting a bigger node, reducing the workload, or accepting higher latency than would otherwise be possible during normal operation, and very high latency if a failover occurs.
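The 70% figure above is an empirical rule of thumb. To illustrate why queuing grows so quickly at high utilization, the sketch below swaps in a textbook M/M/c (Erlang C) queue. It assumes random (Poisson) arrivals, exponentially distributed service times and `cores` fully interchangeable CPUs, none of which WAFL scheduling strictly follows, so read the output as the shape of the curve rather than a prediction. The 0.5 ms service time and 8 cores in the usage lines are hypothetical values, and `failover_utilization` is a simple additive assumption.

```python
import math


def erlang_c_wait(utilization, cores, service_time):
    """Mean time a request waits for a CPU in an M/M/c queue (Erlang C).

    utilization:  average busy fraction per core, 0 <= utilization < 1
    cores:        number of interchangeable CPUs serving the queue
    service_time: mean CPU time per request (result uses the same unit)
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    offered = utilization * cores  # offered load in erlangs
    tail = offered**cores / math.factorial(cores) / (1 - utilization)
    head = sum(offered**k / math.factorial(k) for k in range(cores))
    p_wait = tail / (head + tail)  # probability an arriving request must queue
    return p_wait * service_time / (cores * (1 - utilization))


def failover_utilization(node_a, node_b):
    """CPU demand on the surviving node if it takes over its partner's work,
    assuming identical nodes and CPU cost that scales linearly with load."""
    return node_a + node_b


if __name__ == "__main__":
    for u in (0.5, 0.7, 0.8, 0.9):
        print(f"{u:.0%} busy on 8 cores: wait ~ {erlang_c_wait(u, 8, 0.5):.3f} ms")
    print(f"after failover: {failover_utilization(0.85, 0.85):.0%} of one node")
```

Work confined to fewer CPUs (for example, a serialized domain) behaves more like `cores=1`, where the wait is `utilization / (1 - utilization)` times the service time and the knee arrives earlier. Any failover demand above 100% means the surviving node cannot keep up without queuing.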

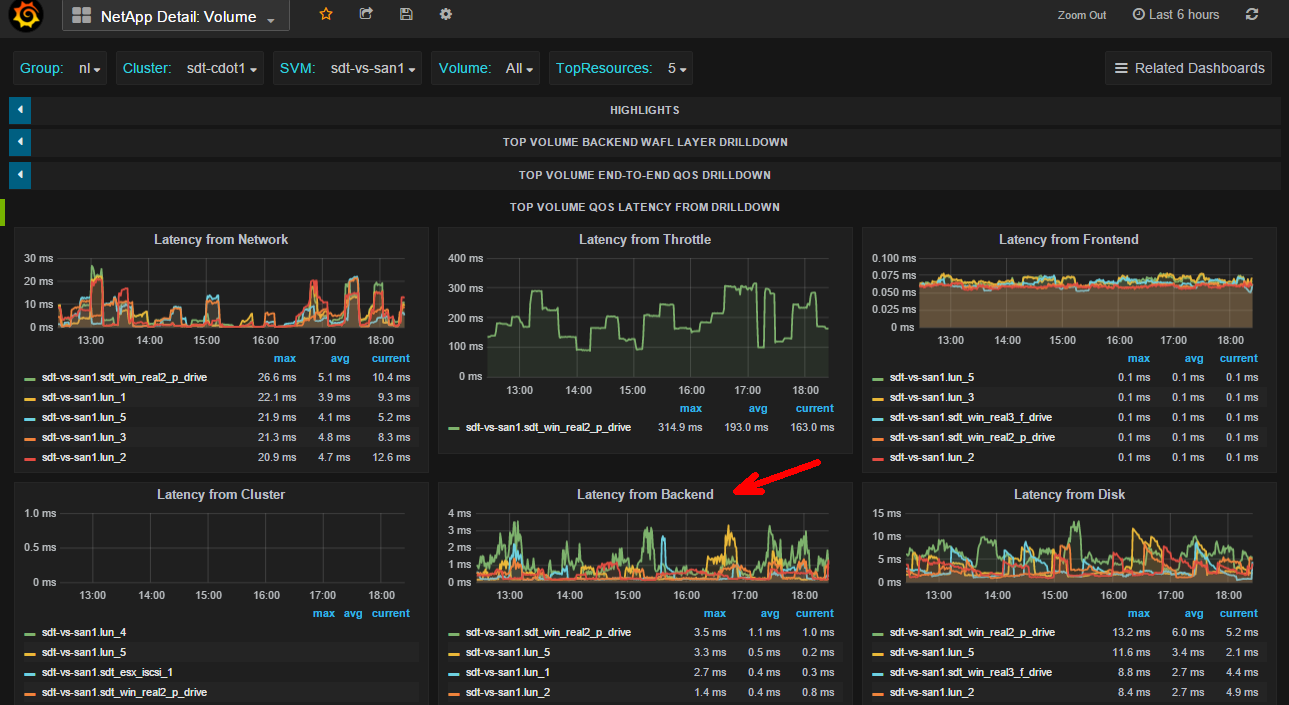

Also, a handy dashboard for checking the latency cost of each component in the cluster is on the volume page:

Each graph shows the average latency breakdown for IOs by component in the data path, where the 'from' is:

• Network: latency from the network outside NetApp, such as waiting on vscan for NAS, or SCSI XFER_RDY for SAN (which includes network and host delay, as in the case of a write)

• Throttle: latency from QoS throttle

• Frontend: latency to unpack/pack the protocol layer and translate to/from cluster messages, occurring on the node that owns the LIF

• Cluster: latency from sending data over the cluster interconnect (the 'latency cost' of indirect IO)

• Backend: latency from the WAFL layer to process the message on the node that owns the volume

• Disk: latency from HDD/SSD access
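To read the stacked graphs numerically, you can compute each component's share of the total. A minimal Python sketch, assuming the per-component averages add up to the total latency (as a stacked graph implies) and that you have copied them into a dict keyed by the component names above; the function names are hypothetical and the latency values in the usage lines are made up.

```python
def latency_shares(components_us):
    """Return each data-path component's fraction of total average latency.

    components_us maps a component name (network, throttle, frontend,
    cluster, backend, disk) to its average latency in microseconds.
    """
    total = sum(components_us.values())
    if total <= 0:
        raise ValueError("total latency must be positive")
    return {name: value / total for name, value in components_us.items()}


def largest_component(components_us):
    """Name of the component contributing the most latency."""
    return max(components_us, key=components_us.get)


if __name__ == "__main__":
    # Hypothetical breakdown for one volume, in microseconds.
    sample = {"network": 100, "throttle": 0, "frontend": 150,
              "cluster": 50, "backend": 3000, "disk": 700}
    for name, share in latency_shares(sample).items():
        print(f"{name:>8}: {share:.1%}")
    print("largest:", largest_component(sample))  # largest: backend
```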

I put a red arrow on backend because that is where you would see evidence of queuing for CPU. It actually includes queue time + service time, but service time is usually less than 500 µs, so if backend is a major contributor to your overall latency, I bet it is coming from wait time. A perfstat and subsequent analysis by NetApp can tell you how much queue time and service time you have on a per-operation basis.
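The queue-versus-service reasoning above can be turned into a quick back-of-the-envelope estimate. This Python sketch treats the ~500 µs figure as an assumed ceiling on backend service time and attributes the rest of the backend latency to waiting; because real service time is usually lower, the result is a rough lower bound on wait time, not a substitute for the per-operation analysis a perfstat provides. The function and constant names are hypothetical.

```python
ASSUMED_SERVICE_US = 500  # rough ceiling on backend service time (assumption)


def estimated_backend_wait(backend_latency_us, service_us=ASSUMED_SERVICE_US):
    """Split backend latency into assumed service time and waiting time.

    Returns (wait_us, wait_fraction). Since service_us is treated as an upper
    bound, wait_us is a rough lower bound on time spent queuing.
    """
    if backend_latency_us <= 0:
        return 0.0, 0.0
    wait_us = max(0.0, backend_latency_us - service_us)
    return wait_us, wait_us / backend_latency_us


if __name__ == "__main__":
    for backend in (400, 1200, 3000):  # hypothetical backend latencies in us
        wait, frac = estimated_backend_wait(backend)
        print(f"backend {backend} us -> at least {wait:.0f} us waiting ({frac:.0%})")
```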

Hope this helps!

Cheers,

Chris Madden

Storage Architect, NetApp EMEA (and author of Harvest)

Blog: It all begins with data

If this post resolved your issue, please help others by selecting ACCEPT AS SOLUTION or adding a KUDO


Thank you for taking the time to review our current performance and for your insight. I really do appreciate it.

Chris Geck


Chris,

I am reviewing the latency-from-backend dashboard that you mentioned, and I'm seeing latency spikes every hour for one of our SVMs, reaching 150 ms to 200 ms. Does cDOT have a default job that runs at half past the hour? We're seeing these spikes at 1:30, 2:30, 3:30, 4:30, and so on. I have submitted perfstats and opened a case, and I'm awaiting a response. Thanks.
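Before hunting for a cause, it can help to confirm that the spikes really land on the same minute every hour. A small Python sketch, assuming the SVM latency has been exported as (timestamp, milliseconds) pairs; the function name, the 100 ms threshold and the `min_hours` cut-off are hypothetical choices, and the usage data is synthetic rather than taken from this system.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def recurring_spike_minutes(samples, threshold_ms, min_hours=3):
    """Return minutes past the hour where latency exceeded threshold_ms
    in at least min_hours distinct clock hours.

    samples: iterable of (datetime, latency_ms) pairs.
    """
    hours_by_minute = defaultdict(set)
    for ts, latency_ms in samples:
        if latency_ms > threshold_ms:
            hour = ts.replace(minute=0, second=0, microsecond=0)
            hours_by_minute[ts.minute].add(hour)
    return sorted(m for m, hours in hours_by_minute.items() if len(hours) >= min_hours)


if __name__ == "__main__":
    # Synthetic data: 2 ms baseline with a 180 ms spike at :30 every hour.
    start = datetime(2024, 1, 1, 13, 0)
    samples = []
    for i in range(60):  # 5-minute samples over 5 hours
        ts = start + timedelta(minutes=5 * i)
        samples.append((ts, 180.0 if ts.minute == 30 else 2.0))
    print(recurring_spike_minutes(samples, threshold_ms=100))  # [30]
```

A single minute value coming back across many hours points toward something scheduled rather than organic load.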


Hi @cgeck0000,

I don't know of anything that runs on the half hour by default, but check your 'cron show' output to see whether you have a schedule that does, and if so, check where that schedule is used (think SnapMirror/SnapVault and dedupe). I would suggest finding a busy volume on that SVM and then selecting it on the Volume dashboard I showed earlier. On that dashboard you can see the QoS latency experienced and a breakdown of where it comes from, which might give you some hints about where to dig deeper. If the spike is captured in a perfstat (with smallish iterations, maybe 5 min each), then support should also be able to analyze short-lived spikes from that data.

Cheers,

Chris Madden
